Dependency Parsing with Dynamic Bayesian Network


Virginia Savova JHU

Leonid Peshkin Harvard

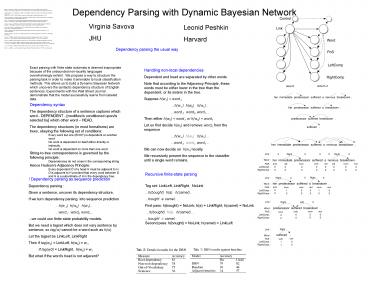

Dependency parsing the usual way

Exact parsing with finite-state automata is deemed inappropriate because of the unbounded non-locality that natural languages overwhelmingly exhibit. We propose a way to structure the parsing task so that it becomes amenable to local classification methods. This allows us to build a Dynamic Bayesian Network that uncovers the syntactic dependency structure of English sentences. Experiments with the Wall Street Journal corpus demonstrate that the model successfully learns from labeled data.

Handling non-local dependencies

In a non-local dependency, the dependent and its head are separated by other words. Note that, according to the Adjacency Principle, these intervening words must be either lower in the tree than the dependent or its sisters in the tree. Suppose that in the sequence $\ldots w_{-1}\; w_0\; w_1 \ldots$ we have $h(w_1) = w_{-1}$. Then either $h(w_0) = w_{-1}$ or $h(w_0) = w_1$. Let us first decide $h(w_0)$ and remove $w_0$ from the sequence, leaving $\ldots w_{-1}\; w_1 \ldots$. Since $w_{-1}$ and $w_1$ are now adjacent, we can decide $h(w_1)$ locally. We recursively present the sequence to the classifier until a single word remains.
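The recursive procedure can be sketched in a few lines of Python. This is a minimal sketch under our own assumptions, not the authors' implementation: the Dynamic Bayesian Network is replaced by a hypothetical callable `tag_pass` that returns one tag per remaining word, using the LinkLeft/LinkRight/NoLink tag set from the recursive finite-state parsing example. Every linked word is attached to its neighbour and removed, so the words around it become adjacent for the next pass.

```python
from typing import Callable, Dict, List, Optional

LINK_LEFT, LINK_RIGHT, NO_LINK = "LinkLeft", "LinkRight", "NoLink"


def parse_recursively(
    words: List[str],
    tag_pass: Callable[[List[int]], List[str]],
) -> Dict[int, Optional[int]]:
    """Attach words to adjacent neighbours, pass by pass, until one word remains.

    `tag_pass` (hypothetical) stands in for the classifier: it receives the
    positions still in the sequence and returns one tag per position.
    Returns {position: head position}, with None for the last remaining word.
    """
    heads: Dict[int, Optional[int]] = {}
    seq = list(range(len(words)))
    while len(seq) > 1:
        tags = tag_pass(seq)
        if len(tags) != len(seq):
            raise ValueError("expected one tag per remaining word")
        removed = set()
        for k, tag in enumerate(tags):
            if tag == LINK_LEFT and k > 0:
                heads[seq[k]] = seq[k - 1]  # head is the left neighbour
                removed.add(seq[k])
            elif tag == LINK_RIGHT and k + 1 < len(seq):
                heads[seq[k]] = seq[k + 1]  # head is the right neighbour
                removed.add(seq[k])
        if not removed:
            raise ValueError("no word was linked; the recursion would not terminate")
        if len(removed) == len(seq):
            raise ValueError("every word was linked; no word is left as ROOT")
        # Linked words leave the sequence, so their neighbours become adjacent.
        seq = [i for i in seq if i not in removed]
    if seq:
        heads[seq[0]] = None  # the single remaining word
    return heads


# Scripted tags for "bought a camel", treating the fragment as a whole sentence.
words = ["bought", "a", "camel"]
script = iter([["NoLink", "LinkRight", "NoLink"], ["NoLink", "LinkLeft"]])
heads = parse_recursively(words, lambda seq: next(script))
print({words[d]: (words[h] if h is not None else "ROOT") for d, h in heads.items()})
# {'a': 'camel', 'camel': 'bought', 'bought': 'ROOT'}
```

The two `ValueError` guards are our addition: they stop the loop when a pass links nothing (it would otherwise repeat forever) or links every word (which would leave no ROOT).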

Dependency syntax

The dependency structure of a sentence captures which word (the DEPENDENT) modifies, is conditioned upon, or is selected by which other word (the HEAD). In most formalisms, dependency structures are trees obeying the following conditions:

- Every word but one (the ROOT) is dependent on another word.
- No word is dependent on itself, either directly or indirectly.
- No word is dependent on more than one word.

String-to-tree correspondence is governed by the following principle: dependencies do not cross in the corresponding string. Hence Hudson's Adjacency Principle: every dependent D of a head H must be adjacent to H, where D is adjacent to H provided that every word between D and H is a subordinate of H in the dependency tree.
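As a concrete reading of these conditions, the hedged Python sketch below checks them for a sentence encoded as a list `heads`, where `heads[i]` is the index of word i's head and -1 marks the ROOT. The encoding and the function names are our own, chosen for illustration; the single-head condition holds by construction, since each word stores exactly one head.

```python
from typing import List


def is_dependency_tree(heads: List[int]) -> bool:
    """heads[i] is the index of word i's head; -1 marks the ROOT."""
    n = len(heads)
    # Every word but one (ROOT) depends on another word.
    if heads.count(-1) != 1:
        return False
    # Heads must be valid positions, and no word is its own head.
    if any(not -1 <= h < n or h == i for i, h in enumerate(heads)):
        return False
    # No word depends on itself indirectly: from every word, following heads
    # must reach ROOT in at most n - 1 steps, otherwise we are in a cycle.
    for i in range(n):
        j, steps = i, 0
        while heads[j] != -1:
            j = heads[j]
            steps += 1
            if steps >= n:
                return False
    return True


def dominates(heads: List[int], h: int, k: int) -> bool:
    """True if word k is h or a (transitive) subordinate of h."""
    while k != -1:
        if k == h:
            return True
        k = heads[k]
    return False


def satisfies_adjacency(heads: List[int]) -> bool:
    """Hudson's Adjacency Principle, assuming `heads` is already a tree."""
    for d, h in enumerate(heads):
        if h == -1:
            continue
        lo, hi = min(d, h), max(d, h)
        # Every word strictly between D and H must be a subordinate of H.
        if not all(dominates(heads, h, k) for k in range(lo + 1, hi)):
            return False
    return True


# "bought a camel" with "bought" as ROOT: a valid, adjacent tree.
print(is_dependency_tree([-1, 2, 0]), satisfies_adjacency([-1, 2, 0]))  # True True
# Word 1 -> word 3 crosses word 2 -> word 0: a tree, but not adjacent.
print(is_dependency_tree([-1, 3, 0, 0]), satisfies_adjacency([-1, 3, 0, 0]))  # True False
```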

Recursive finite-state parsing

Tag set: {LinkLeft, LinkRight, NoLink}. Consider the fragment "...bought a camel...".

First pass: bought/NoLink, a/LinkRight, camel/NoLink. The head of "a" is its right neighbour "camel", and "a" is removed from the sequence.

Second pass: bought/NoLink, camel/LinkLeft. The head of "camel" is now its left neighbour "bought".
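The per-pass tags in this example can be derived mechanically from a gold tree, which is one plausible way to turn labeled data into training targets for the tagger. The sketch below is our own reconstruction, not necessarily the procedure used in the experiments. It assumes (our rule) that a word is linked only once its head is its current neighbour and none of its own dependents remain, so that removing it never strands a dependent. The `heads` encoding (-1 for ROOT) and the name `oracle_passes` are hypothetical.

```python
from typing import List


def oracle_passes(heads: List[int]) -> List[List[str]]:
    """Per-pass gold tags for a tree given as heads[i] (-1 marks ROOT).

    Assumed rule (ours): a word is linked only if its head is its current
    neighbour and none of its own dependents are still in the sequence.
    """
    seq = list(range(len(heads)))
    passes: List[List[str]] = []
    while len(seq) > 1:
        remaining = set(seq)
        tags = []
        for k, i in enumerate(seq):
            pending = any(heads[j] == i for j in remaining)
            if not pending and k > 0 and heads[i] == seq[k - 1]:
                tags.append("LinkLeft")
            elif not pending and k + 1 < len(seq) and heads[i] == seq[k + 1]:
                tags.append("LinkRight")
            else:
                tags.append("NoLink")
        if all(t == "NoLink" for t in tags):
            raise ValueError("no adjacent link possible; tree violates adjacency")
        passes.append(tags)
        # Keep only the words that were not linked in this pass.
        seq = [i for i, t in zip(seq, tags) if t == "NoLink"]
    return passes


# "bought a camel", treating "bought" as ROOT.
for n, tags in enumerate(oracle_passes([-1, 2, 0]), start=1):
    print(n, tags)
# 1 ['NoLink', 'LinkRight', 'NoLink']
# 2 ['NoLink', 'LinkLeft']
```

For trees that obey the Adjacency Principle, some word should always be linkable on each pass; a crossing tree such as `[-1, 3, 0, 0]` makes the sketch raise `ValueError` instead.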

Dependency parsing as sequence prediction

Dependency parsing: given a sentence, uncover its dependency structure. If we turn dependency parsing into sequence prediction, assigning one tag to each word in $\ldots w_{-1}\; w_0\; w_1 \ldots$, we could use finite-state probability models. But we need a tagset that does not vary from sentence to sentence, so $\mathrm{tag}(w)$ cannot be a word such as $h(w)$. Let the tagset be {LinkLeft, LinkRight}. Then if $\mathrm{tag}(w_0) = \mathrm{LinkLeft}$, $h(w_0) = w_{-1}$; if $\mathrm{tag}(w_0) = \mathrm{LinkRight}$, $h(w_0) = w_1$. But what if a word's head is not adjacent?
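With only these two tags, decoding a tag sequence into heads is a purely local lookup, and the inverse mapping exists only when every head is adjacent. The hedged sketch below makes that limitation visible. It uses a hypothetical encoding of our own: `heads[i]` is a head index with -1 for ROOT, and the ROOT's tag is `None`, since {LinkLeft, LinkRight} has no tag for it. Treating "bought" as the ROOT of "bought a camel" for illustration, the phrase cannot be tagged in one pass because "camel" is two positions away from its head.

```python
from typing import List, Optional


def tags_from_heads(heads: List[int]) -> List[Optional[str]]:
    """Two-tag encoding of a tree (heads[i] = head index, -1 = ROOT).

    The ROOT gets None because {LinkLeft, LinkRight} has no tag for it.
    Raises ValueError when a head is not adjacent to its dependent.
    """
    tags: List[Optional[str]] = []
    for i, h in enumerate(heads):
        if h == -1:
            tags.append(None)
        elif h == i - 1:
            tags.append("LinkLeft")
        elif h == i + 1:
            tags.append("LinkRight")
        else:
            raise ValueError(f"head of word {i} is word {h}, which is not adjacent")
    return tags


def heads_from_tags(tags: List[Optional[str]]) -> List[int]:
    """Local decoding: LinkLeft -> i - 1, LinkRight -> i + 1, None -> ROOT."""
    heads: List[int] = []
    for i, t in enumerate(tags):
        if t is None:
            heads.append(-1)
            continue
        if t == "LinkLeft":
            h = i - 1
        elif t == "LinkRight":
            h = i + 1
        else:
            raise ValueError(f"unknown tag {t!r} for word {i}")
        if not 0 <= h < len(tags):
            raise ValueError(f"word {i} links outside the sentence")
        heads.append(h)
    return heads


print(tags_from_heads([1, -1, 1]))  # ['LinkRight', None, 'LinkLeft']
print(heads_from_tags(["LinkRight", None, "LinkLeft"]))  # [1, -1, 1]
try:
    tags_from_heads([-1, 2, 0])  # "bought a camel" with "bought" as ROOT
except ValueError as err:
    print(err)  # head of word 2 is word 0, which is not adjacent
```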