Software Engineering PowerPoint PPT Presentation

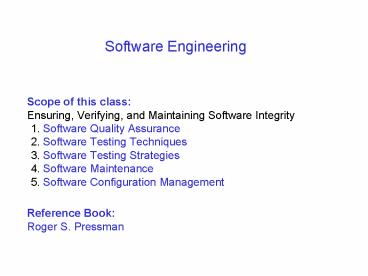

Title: Software Engineering

1

Software Engineering

- Scope of this class

- Ensuring, Verifying, and Maintaining Software Integrity

- 1. Software Quality Assurance

- 2. Software Testing Techniques

- 3. Software Testing Strategies

- 4. Software Maintenance

- 5. Software Configuration Management

- Reference Book

- Roger S. Pressman

2

Software Quality Assurance

- Goal is to produce high-quality software.

- SQA is an umbrella activity; it comprises:

- 1. Analysis, design, coding, and testing methods and tools

- 2. Formal technical reviews, applied at each step

- 3. A multitiered testing policy

- 4. Control of software documentation

- 5. A procedure to ensure compliance with software development standards

- 6. Measurement and reporting mechanisms

3

Software Quality and SQA

- Definition of software quality

- Conformance to explicitly stated functional and performance requirements, explicitly documented development standards, and implicit characteristics that are expected of all professionally developed software.

- Three important points

- 1. Software requirements are the foundation of software quality.

- 2. Specified standards have to be followed.

- 3. Implicit requirements (good maintainability, etc.) must also be met.

4

Software quality factors

- 1. Directly measured (errors/KLOC/unit time etc.)

- 2. Indirectly measured (usability, maintainability)

5

Software quality factors

- McCall's Software Quality Factors

6

Software quality factors

- Correctness: the extent to which the program conforms to its specification and mission objectives

- Reliability: the extent to which the program performs its function with the required precision

- Efficiency: the computing resources and code required

- Integrity: control of access to the software by unauthorized persons

- Usability: the effort required to learn, operate, prepare input, etc.

- Maintainability: the effort required to locate and fix an error in the program

- Flexibility: the effort required to modify an operational program

- Testability: the effort required to test a program

- Portability: the effort required to transfer the program to another hardware/software system

- Reusability: the extent to which the program or its parts can be reused

- Interoperability: the effort required to couple one system to another

7

Software quality factors

- It is difficult to get a direct measure of all the quality factors above.

- $F_q = c_1 \times m_1 + c_2 \times m_2 + \dots + c_n \times m_n$

- $c_1 \dots c_n$ are the weights.

- $m_1$ to $m_n$ are the metrics.

- Many of the metrics can only be measured subjectively.

- Auditability, accuracy, completeness, conciseness, consistency, etc.

- Each metric is graded on a scale of 0 to 10.
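The weighted sum for a quality factor can be sketched in a few lines. This is only an illustration: the function name `quality_factor` and the weights and grades below are hypothetical, not taken from the slides.

```python
def quality_factor(weights, metrics):
    """Return F_q = c1*m1 + c2*m2 + ... + cn*mn."""
    if len(weights) != len(metrics):
        raise ValueError("weights and metrics must have the same length")
    for grade in metrics:
        # Each metric is graded on a 0..10 scale.
        if not 0 <= grade <= 10:
            raise ValueError("each metric grade must lie between 0 and 10")
    return sum(c * m for c, m in zip(weights, metrics))


# Hypothetical weights and subjective grades for three metrics.
weights = [0.5, 0.3, 0.2]
grades = [8, 6, 9]
f_q = quality_factor(weights, grades)
```

With weights that sum to 1, the result stays on the same 0 to 10 scale as the individual grades; that normalization is a design choice here, not something the slides require.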

8

Quality factors and metrics

- Software Quality Metric Correctness Reliability Efficiency Integrity Maintaina

- Auditability x x

- Accuracy x

- Complexity x x

- Security x

9

Software Quality Assurance

- Definition of SQA: a planned and systematic pattern of actions that are required to ensure quality in software.

- SQA Activities

- 1. Application of technical methods: helps achieve a high-quality specification and design.

- 2. Conduct of formal technical reviews: uncover quality problems, and possibly defects too.

- 3. Software testing: design test cases to detect errors.

- 4. Enforcement of standards: assess adherence to standards.

- 5. Control of change: formalize requests for change and control the impact of change. Applied during the development and maintenance phases.

- 6. Measurement: collect software quality metrics to track software quality.

- 7. Record keeping and reporting: collect and disseminate SQA information (results of reviews, audits, change control, testing, etc.).

10

Formal Technical Reviews

- Objective

- 1. To uncover errors in the function, logic, or implementation of any software module.

- 2. To verify that the software under review meets its requirements document.

- 3. To ensure that the software has been represented using predefined standards.

- 4. To make projects more manageable.

11

Formal Technical Reviews

- The review meeting

- 1. 3-5 people should be involved.

- 2. Advance preparation of about 2 hours.

- 3. Duration should be less than 2 hours.

- Review leader, reviewers, producer

- Recorder: one reviewer becomes the recorder of the proceedings of the meeting.

- Producer: informs the project leader that the product is ready for review.

- Review leader: evaluates the product for readiness, generates copies of the product materials, and distributes them to the reviewers for advance preparation.

- Meeting: the producer walks through the product.

- Decision: 1. accept with modification, 2. reject due to errors, 3. accept provisionally

12

Formal Technical Reviews

- Review reporting and record keeping

- 1. What was reviewed? 2. Who reviewed it? 3. What were the findings and conclusions?

- Two purposes

- 1. To identify problem areas within the product.

- 2. To prepare an action item checklist for the producer.

- There has to be a follow-up mechanism.

13

Formal Technical Reviews

- Review guidelines

- 1. Review the product, not the producer.

- 2. Set an agenda and maintain it (do not drift).

- 3. Limit debate and rebuttal; discuss off-line.

- 4. Enunciate problem areas; postpone the problem-solving.

- 5. Take written notes.

- 6. Limit the number of participants and insist on advance preparation.

- 7. Develop a checklist for the products to be reviewed.

- 8. Allocate resources and a time schedule for the reviewers.

- 9. Conduct meaningful training for reviewers.

- 10. Review your early reviews.

14

Software Quality Metrics

- Software Quality Indices

- Obtain the following values from the data and architectural design.

- $S_1$ = the total number of modules defined in the architecture.

- $S_2$ = the number of modules that produce data to be used elsewhere.

- $S_3$ = the number of modules whose correct function depends on prior processing.

- $S_4$ = the number of database items (objects and attributes).

- $S_5$ = the total number of unique database items.

- $S_6$ = the number of database segments.

- $S_7$ = the number of modules with a single entry and exit.

15

Software Quality Metrics

- Compute the following intermediate values

- Program structure $D_1$: if the architectural design was developed using a distinct method (data flow oriented design or object oriented design) then $D_1 = 1$, else $D_1 = 0$.

- Module independence: $D_2 = 1 - (S_2/S_1)$

- Modules not dependent on prior processing: $D_3 = 1 - (S_3/S_1)$

- Database size: $D_4 = 1 - (S_5/S_4)$

- Database compartmentalization: $D_5 = 1 - (S_6/S_4)$

- Module entrance/exit characteristics: $D_6 = 1 - (S_7/S_1)$

16

Software Quality Metrics

- $DSQI = \sum_i w_i D_i$

- $w_i$ = the relative weights of the intermediate values.

- If the DSQI is low, further design work and review are called for.

- If major changes are planned to the design, then the effect on the DSQI can be calculated.
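A minimal sketch of the DSQI calculation follows. It assumes each of D2 to D6 has the form "1 minus a ratio of S values" and that, when no weights are supplied, all six intermediate values are weighted equally. The function name `dsqi` and the sample counts are hypothetical.

```python
def dsqi(s, d1, weights=None):
    """Compute the design structure quality index.

    s  -- dict with integer counts under the keys "S1" .. "S7"
    d1 -- 1 if a distinct design method was used, else 0
    """
    d = [
        d1,
        1 - s["S2"] / s["S1"],  # D2: module independence
        1 - s["S3"] / s["S1"],  # D3: modules not dependent on prior processing
        1 - s["S5"] / s["S4"],  # D4: database size
        1 - s["S6"] / s["S4"],  # D5: database compartmentalization
        1 - s["S7"] / s["S1"],  # D6: module entrance/exit characteristics
    ]
    if weights is None:
        weights = [1 / 6] * 6  # assumption: equal weights summing to 1
    return sum(w * di for w, di in zip(weights, d))


# Hypothetical design counts, for illustration only.
counts = {"S1": 20, "S2": 8, "S3": 5, "S4": 100, "S5": 60, "S6": 10, "S7": 18}
index = dsqi(counts, d1=1)
```

Re-running the function with the counts expected after a planned design change gives a quick estimate of the effect on the index.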

17

Software Quality Metrics

- Software Maturity Index (SMI), proposed by IEEE.

- $M_T$ = the number of modules in the current release.

- $F_c$ = the number of modules in the current release that have been changed.

- $F_a$ = the number of modules in the current release that have been added.

- $F_d$ = the number of modules deleted in the current release.

- $SMI = \dfrac{M_T - (F_a + F_c + F_d)}{M_T}$

- As SMI approaches 1.0, the product begins to stabilize.
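The index is simple enough to compute directly. The sketch below reads the formula as SMI = (MT - (Fa + Fc + Fd)) / MT; the function name `software_maturity_index` and the release figures are hypothetical.

```python
def software_maturity_index(mt, fa, fc, fd):
    """Return SMI = (MT - (Fa + Fc + Fd)) / MT."""
    if mt <= 0:
        raise ValueError("the current release must contain at least one module")
    return (mt - (fa + fc + fd)) / mt


# Hypothetical release: 100 modules, 5 added, 10 changed, 5 deleted.
smi = software_maturity_index(mt=100, fa=5, fc=10, fd=5)
```

A release with no added, changed, or deleted modules gives an SMI of exactly 1.0.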

18

Halstead's Software Science

- M. H. Halstead's software science principally attempts to estimate the programming effort.

- The measurable and countable properties (also called primitive measures) are:

- $n_1$ = the number of unique or distinct operators appearing in the implementation

- $n_2$ = the number of unique or distinct operands appearing in the implementation

- $N_1$ = the total usage of all of the operators appearing in the implementation

- $N_2$ = the total usage of all of the operands appearing in the implementation

- Operators include arithmetic symbols, but also array indexing and statement separators. The operands are the literal expressions, constants, and variables.

19

Halstead's Software Science

- Estimated length: $\hat{N} = n_1 \log_2 n_1 + n_2 \log_2 n_2$

- It has been observed experimentally that $\hat{N}$ agrees rather closely with the actual program length $N = N_1 + N_2$.

- Program volume: $V = N \log_2 (n_1 + n_2)$

- The volume of information (in bits) required to specify a program. It may vary with the programming language. Theoretically, a minimum volume must exist for a particular algorithm.

- Volume ratio: $L = (2/n_1) \times (n_2/N_2)$

- Defined as the ratio of the volume of the most compact program to the volume of the actual program. $L$ must always be less than 1.

- Language level: each language may be categorized by a language level $l$, which will vary among languages. Halstead says that the language level is constant for a given language. Others have argued or shown that it is a function of both the language and the programmer.

- Program level (a measure of program complexity): $PL = \left[ (n_1/n_2) \times (N_2/2) \right]^{-1}$

20

- Example: Interchange Sort Program (FORTRAN)

- SUBROUTINE SORT(X,N)

- DIMENSION X(N)

- IF (N.LT.2) RETURN

- DO 20 I = 2,N

- DO 10 J = 1,I

- IF (X(I).GE.X(J)) GOTO 10

- SAVE = X(I)

- X(I) = X(J)

- X(J) = SAVE

- 10 CONTINUE

- 20 CONTINUE

- RETURN

- END

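Operators of the Sort Program

| # | Operator | Count |
|---|---|---|
| 1 | End of statement | 7 |
| 2 | Array subscript | 6 |
| 3 | = | 5 |
| 4 | IF( ) | 2 |
| 5 | DO | 2 |
| 6 | , | 2 |
| 7 | End of program | 1 |
| 8 | .LT. | 1 |
| 9 | .GE. | 1 |
| 10 | GOTO 10 | 1 |

$n_1 = 10$, $N_1 = 28$

Operands of the Sort Program

| # | Operand | Count |
|---|---|---|
| 1 | X | 6 |
| 2 | I | 5 |
| 3 | J | 4 |
| 4 | N | 2 |
| 5 | 2 | 2 |
| 6 | SAVE | 2 |
| 7 | 1 | 1 |

$n_2 = 7$, $N_2 = 22$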

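Results

- Length: $\hat{N} = n_1 \log_2 n_1 + n_2 \log_2 n_2 = 10 \log_2 10 + 7 \log_2 7 = 52.87$, compared with the actual length $N = N_1 + N_2 = 50$.

- Volume: $V = N \log_2 (n_1 + n_2) = (28 + 22) \log_2 (10 + 7) = 204$ bits (the equivalent assembler volume is 328 bits).

- Program level: $PL = \left[ (n_1/n_2) \times (N_2/2) \right]^{-1} = \left[ (10/7) \times (22/2) \right]^{-1} = (110/7)^{-1} = 1/15.714$

- Testing effort: $e = V/PL = 204 \times 15.714 \approx 3206$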
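The length and volume figures for the sort program can be checked with a short script. This is a sketch only: the function name `halstead` is hypothetical, and the trailing "-1" in the program level formula is read here as an inverse, which is an assumption about the slide's notation.

```python
import math


def halstead(n1, n2, N1, N2):
    """Return (estimated length, volume, program level) from the primitive counts."""
    estimated_length = n1 * math.log2(n1) + n2 * math.log2(n2)
    volume = (N1 + N2) * math.log2(n1 + n2)
    # Assumption: PL is the inverse of (n1/n2) * (N2/2).
    program_level = 1 / ((n1 / n2) * (N2 / 2))
    return estimated_length, volume, program_level


# Counts taken from the operator and operand tallies of the sort program.
length, volume, level = halstead(n1=10, n2=7, N1=28, N2=22)
```

Under that reading the program level is 7/110, which is below 1, as the definition of the volume ratio requires.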

21

Halstead's Software Science

Advantages of Halstead

- Does not require in-depth analysis of programming structure.

- Predicts the rate of error.

- Predicts maintenance effort.

- Useful in scheduling and reporting projects.

- Measures the overall quality of programs.

- Simple to calculate.

- Can be used for any programming language.

- Numerous industry studies support the use of Halstead in predicting programming effort and the mean number of programming bugs.

22

Halstead's Software Science

Drawbacks of Halstead

- It depends on completed code.

- It has little or no use as a predictive estimating model. McCabe's model, by contrast, is better suited to application at the design level.

23

McCabe's Complexity Metric

- This metric is based on the control flow representation of a program.

- McCabe's complexity measure is based on cyclomatic complexity. V(G) is the number of regions in a planar flow graph.

- Because the number of regions increases with the number of decision paths and loops, McCabe's complexity measure gives a quantitative measure of testing difficulty and an indication of ultimate reliability.

- Experiments show that there is a distinct relationship between this measure and the number of errors in source code, and also the time required to find and correct such errors.

24

V(G) = 3

25

McCabe's Complexity Metric

Advantages of McCabe Cyclomatic Complexity

- It can be used as a maintenance metric.

- Used as a quality metric, it gives the relative complexity of various designs.

- It can be computed earlier in the life cycle than Halstead's metrics.

- It measures the minimum effort and the best areas of concentration for testing.

- It guides the testing process by limiting the program logic during development.

- It is easy to apply.

26

McCabe's Complexity Metric

Drawbacks of McCabe Cyclomatic Complexity

- The cyclomatic complexity is a measure of the program's control complexity and not the data complexity.

- The same weight is placed on nested and non-nested loops. However, deeply nested conditional structures are harder to understand than non-nested structures.

- It may give a misleading figure for programs with many simple comparisons and decision structures.

27

Measures of reliability and availability

- MTBF: Mean Time Between Failures

- MTBF = MTTF + MTTR

- MTTF: Mean Time To Failure

- MTTR: Mean Time To Repair

- Some say that MTBF is a better measure than defects/KLOC.

- The end user is concerned with failures, not the total error count.

- Software availability is the probability that a program is operating according to requirements at a given point in time.

- Availability = MTTF / (MTTF + MTTR) × 100%

- Note: MTBF is equally sensitive to MTTF and MTTR, but availability is somewhat more sensitive to MTTR.

- Availability is an indirect measure of the maintainability of the software.
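Both measures follow directly from MTTF and MTTR. The sketch below reads the formulas as MTBF = MTTF + MTTR and availability = MTTF / (MTTF + MTTR) × 100%; the function names and the hour figures are hypothetical.

```python
def mtbf(mttf, mttr):
    """Mean time between failures: time to failure plus time to repair."""
    return mttf + mttr


def availability_percent(mttf, mttr):
    """Percentage of time the program is operating according to requirements."""
    return 100.0 * mttf / (mttf + mttr)


# Hypothetical figures in hours.
between_failures = mtbf(mttf=90, mttr=10)
available = availability_percent(mttf=90, mttr=10)
```

Halving the repair time in this example (MTTR of 5 hours) raises availability from 90% to about 94.7%, while MTBF only drops from 100 to 95 hours.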

28

Software reliability models

- Principal factors for software reliability models

- 1. Fault introduction: depends upon the characteristics of the developed code and of the development process.

- 2. Fault removal: depends upon time, the operational profile, and the quality of the repair activity.

- 3. Environment: depends upon the operational profile.

- Software reliability models fall into two categories

- 1. Models that predict reliability as a function of calendar time.

- 2. Models that predict reliability as a function of elapsed processing time.

29

Software reliability models

- Criteria for software reliability models

- 1. Predictive validity: the ability to predict future failure behaviour based on data collected during the testing and operational phases.

- 2. Capability: the ability to generate data that can be readily applied to pragmatic industrial software development efforts.

- 3. MTBF is equally sensitive to MTTF and MTTR, but availability is somewhat more sensitive to MTTR.

30

Software Testing Techniques

- Software testing is a critical element of SQA and represents the ultimate review of specification, design, and coding.

- Approximately 40% of the total project effort goes into testing.

- Software testing fundamentals

- Testing objectives

- Test information flow

- Test case design

- The developer builds the software from the specification and design (a constructive activity).

- The tester designs test cases and sets out to demolish the software.

31

Software Testing Techniques

- Testing Objectives

- 1. Testing is the process of executing a program with the intent of finding an error.

- 2. A good test case is one that has a high probability of finding an error.

- 3. A successful test is one that uncovers an as-yet undiscovered error.

- Testing cannot show the absence of defects; it can only show that software defects are present.

32

Software Testing Techniques

- Test Information Flow

- Two classes of input for the test process

- 1. A software configuration (software requirements specification, design specification, and source code).

- 2. A test configuration (test plan and procedure, testing tools, test cases, and expected behaviour for the test cases).

[Figure: test information flow, linking Software configuration, Test configuration, Testing, Test results, Expected results, Evaluation, Errors, Debug, Corrections, Error rate data, Reliability model, and Predicted reliability]

33

Software Testing Techniques

- Test Case Design

- As challenging as the design of the product itself.

- A product can be tested in two ways

- 1. The designed functions are known: test to demonstrate that all are fully operational (Black Box).

- 2. The internal workings of the product are known: test that the internal operation of the product performs according to specification (White Box).

- It is difficult to do exhaustive white box testing; however, some important logical paths can be selected and exercised.

- Black box testing ensures that the interfaces are working correctly.

34

White Box Testing

- White Box Testing

- Test cases are selected on the basis of examination of the code, rather than the specifications.

35

White Box Testing

- White Box Testing

- White Box testing is a test case design method.

- Criteria

- Exercise all independent paths within the module at least once.

- Exercise all logical decisions on their true and false sides.

- Execute all loops at their boundaries and within their operational bounds.

- Exercise internal data structures to ensure their validity.

36

Basis Path Testing

- Basis Path Testing

- Basis Path Testing is a White Box testing technique.

- Steps

- 1. Derive a logical complexity measure of a procedural design.

- 2. Use this measure to derive a basis set of execution paths.

- 3. Derive the test cases from this basis set.

- 4. Executing these test cases guarantees that every statement in the program is executed at least once.

37

Basis Path Testing

- Flow Graph Notation

[Figure: flow graph notation for the sequence, if, while, until, and case constructs]

38

Basis Path Testing

- Cyclomatic Complexity

[Figure: a flow chart with nodes 1 to 11 and the corresponding flow graph, in which flow chart nodes 2,3 and 4,5 each map into a single flow graph node]

39

Basis Path Testing

- Cyclomatic Complexity

- Each circle is a flow graph node and represents one or more statements.

- A sequence of process boxes and a decision diamond can map into a single node.

- Arrows are called edges and represent the flow of control.

- Areas bounded by edges and nodes are called regions.

- Each node that contains a condition is called a predicate node and is characterized by two edges emanating from it.

40

Basis Path Testing

- Cyclomatic Complexity

- Cyclomatic complexity is a software metric that gives a quantitative measure of the logical complexity of the program.

- This value defines the number of independent paths in the basis set of a program and provides an upper bound on the number of tests.

- Independent path: any path through the program that introduces at least one new set of processing statements or a new condition.

- In flow graph terms, an independent path must traverse at least one edge that has not yet been traversed.

41

Basis Path Testing

- P1: 1-11

- P2: 1-2-3-4-5-10-1-11

- P3: 1-2-3-6-8-9-10-1-11

- P4: 1-2-3-6-7-9-10-1-11

- P5: 1-2-3-4-5-10-1-2-3-6-8-9-10-1-11 (a combination of P2 and P3; it introduces no new edge)

- The basis set may not be unique.

[Figure: flow graph with nodes 1 to 11]

42

Basis Path Testing

- Cyclomatic Complexity

- How many paths to look for?

- Cyclomatic complexity computation

- V(G) equals the number of regions of the flow graph.

- Cyclomatic complexity: $V(G) = E - N + 2$

- where $E$ = the number of flow graph edges

- and $N$ = the number of flow graph nodes.

- $V(G) = P + 1$

- where $P$ = the number of predicate nodes.

- Cyclomatic complexity is an upper bound on the number of independent paths.
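Both formulas can be checked against the example flow graph. The edge list below is an assumption: it is reconstructed from the example paths (1-11, 1-2-3-4-5-10-1-11, and so on), with flow chart nodes 2,3 and 4,5 each merged into one flow graph node. The function names are hypothetical.

```python
# Assumed edge list, reconstructed from the example paths.
EDGES = [
    ("1", "2,3"), ("1", "11"),
    ("2,3", "4,5"), ("2,3", "6"),
    ("4,5", "10"),
    ("6", "7"), ("6", "8"),
    ("7", "9"), ("8", "9"),
    ("9", "10"),
    ("10", "1"),
]


def cyclomatic_by_edges(edges):
    """V(G) = E - N + 2."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


def cyclomatic_by_predicates(edges):
    """V(G) = P + 1, where a predicate node has two or more outgoing edges."""
    out_degree = {}
    for source, _ in edges:
        out_degree[source] = out_degree.get(source, 0) + 1
    predicates = sum(1 for degree in out_degree.values() if degree >= 2)
    return predicates + 1


v_edges = cyclomatic_by_edges(EDGES)
v_predicates = cyclomatic_by_predicates(EDGES)
```

For this graph the two computations agree: 11 edges and 9 nodes give the same value as the 3 predicate nodes (1, 2,3, and 6) plus one.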

43

Basis Path Testing

- Deriving Test Cases

- Steps

- Using the design or code as a foundation, draw a corresponding flow graph.

- Determine the cyclomatic complexity of the graph.

- Determine a basis set of linearly independent paths.

- Prepare test cases that will force execution through each of the paths in the basis set.

44

Loop Testing

- Home Task: automating basis set generation using a graph matrix

- Loop Testing

- This white box technique focuses exclusively on the validity of loop constructs.

- Four different classes of loops can be defined:

- simple loops,

- nested loops,

- concatenated loops, and

- unstructured loops.

45

Loop Testing

- Simple Loops

- The following tests should be applied to simple loops, where n is the maximum number of allowable passes through the loop:

- skip the loop entirely,

- only pass once through the loop,

- m passes through the loop, where m < n,

- n - 1, n, and n + 1 passes through the loop.
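The pass counts to try for a simple loop can be generated mechanically. In this sketch the function name `simple_loop_pass_counts` is hypothetical, and the default choice of m as the midpoint n // 2 is an assumption; any m below n would satisfy the rule.

```python
def simple_loop_pass_counts(n, m=None):
    """Return the pass counts to test for a loop with at most n passes."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if m is None:
        m = n // 2  # assumption: use the midpoint as the typical value
    if not m < n:
        raise ValueError("m must be less than n")
    # Skip the loop, one pass, m passes, then n - 1, n, and n + 1 passes.
    counts = {0, 1, m, n - 1, n, n + 1}
    return sorted(counts)


passes = simple_loop_pass_counts(n=10, m=5)
```

Using a set removes duplicates when n is small, for example when n - 1 coincides with one pass. The n + 1 case is expected to be rejected by the loop under test, since it exceeds the allowable maximum.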

46

Loop Testing

- Nested Loops

- The testing of nested loops cannot simply extend the technique of simple loops, since this would result in a geometrically increasing number of test cases.

- One approach for nested loops:

- Start at the innermost loop. Set all other loops to minimum values.

- Conduct simple loop tests for the innermost loop while holding the outer loops at their minimums. Add tests for out-of-range or excluded values.

- Work outward, conducting tests for the next loop while keeping all other outer loops at minimums and other nested loops at typical values.

- Continue until all loops have been tested.

47

Loop Testing

- Concatenated Loops

- Concatenated loops can be tested as simple loops if each loop is independent of the others. If they are not independent (e.g. the loop counter for one is the loop counter for the other), then the nested approach can be used.

- Unstructured Loops

- This type of loop should be redesigned, not tested!

48

Black Box Testing

- The functionality of each module is tested with regard to its specifications (requirements) and its context (events). Only the correct input/output relationship is scrutinized.

49

Black Box Testing

- Focus: functional testing of the software.

- Input conditions should be chosen so that they exercise all functional requirements of the software.

- Black box testing is complementary to white box testing, not an alternative.

- Attempts to uncover errors in the following categories:

- 1. incorrect or missing functions

- 2. interface errors

- 3. errors in data structures or external database access

- 4. performance errors

- 5. initialization or termination errors

50

Black Box Testing

- White box testing is performed early in the testing process, while black box testing is performed during the later part.

- Tests are designed to answer the following questions:

- How is functional validity tested?

- What classes of input will make good test cases?

- Is the system particularly sensitive to certain input values?

- How are the boundaries of a data class isolated?

- What data rates and data volumes can the system tolerate?

- What effect will specific combinations of data have on system operation?

51

Black Box Testing Methods

- An equivalence class is a set of test cases such that any one member of the class is representative of any other member of the class.

- Example

- Suppose the specifications for a database product state that the product must be able to handle any number of records from 1 through 16,383.

- There are three equivalence classes:

- Equivalence class 1: fewer than one record.

- Equivalence class 2: from 1 to 16,383 records.

- Equivalence class 3: more than 16,383 records.
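The three classes of the database example can be written as a small classifier, with one representative test value picked per class. The function name `equivalence_class` and the representative values are hypothetical; any member of a class would serve equally well.

```python
MIN_RECORDS = 1
MAX_RECORDS = 16_383


def equivalence_class(record_count):
    """Return the equivalence class (1, 2, or 3) for a record count."""
    if record_count < MIN_RECORDS:
        return 1  # fewer than one record: invalid
    if record_count <= MAX_RECORDS:
        return 2  # 1 through 16,383 records: valid
    return 3      # more than 16,383 records: invalid


# One hypothetical representative per class.
representatives = {1: 0, 2: 5_000, 3: 20_000}
classified = {k: equivalence_class(v) for k, v in representatives.items()}
```

Because every member of a class is taken to behave alike, three test cases cover the whole input domain at this level of analysis.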

52

Black Box Testing Methods

- Equivalence Partitioning

- This technique divides the input domain of a program into classes of data from which test cases can be derived.

- Goal: to define a test case that uncovers a class of errors, thereby reducing the total number of test cases.

- Example: how the program reacts to all character data.

- Evaluate equivalence classes for an input condition.

- Equivalence class: a set of valid or invalid states for input conditions.

53

Black Box Testing Methods

- Guidelines for defining Equivalence Classes

- If an input condition specifies a range, one valid and two invalid equivalence classes are defined.

- If an input condition requires a specific input value, one valid and two invalid equivalence classes are defined.

- If an input condition specifies a member of a set, one valid and one invalid equivalence class are defined.

- If an input condition is Boolean, one valid and one invalid class are defined.

54

Black Box Testing Methods

- Example: a user dials up a bank with a six-digit password.

- Area code: blank or a 3-digit number

- Prefix: a 3-digit number not beginning with 0 or 1

- Suffix: a four-digit number

- Password: 6-digit alphanumeric

- Commands: check, deposit, bill pay, etc.

55

Black Box Testing Methods

- Example: a user dials up a bank with a six-digit password.

- Area code: Boolean (may or may not be present)

- Area code: range (200 to 999)

- Prefix: range (> 200)

- Suffix: value (4-digit length)

- Password: Boolean (may or may not be present)

- Password: value (six-character string)

- Commands: set (check, deposit, bill pay, etc.)

56

Black Box Testing Methods

- Boundary Value Analysis

- If a test case on or just to one side of a boundary of an equivalence class is selected, the probability of detecting a fault increases.

- Test case 1: 0 records. Member of equivalence class 1 and adjacent to boundary value.

- Test case 2: 1 record. Boundary value.

- Test case 3: 2 records. Adjacent to boundary value.

- Test case 4: 723 records. Member of equivalence class 2.

- Test case 5: 16,382 records. Adjacent to boundary value.

- Test case 6: 16,383 records. Boundary value.

- Test case 7: 16,384 records. Member of equivalence class 3 and adjacent to boundary value.
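The seven test cases for the record-count example can be derived from the two boundaries and one typical value. This is a sketch; the function name `boundary_test_cases` is hypothetical, and each case is paired with whether the product is expected to accept it.

```python
def boundary_test_cases(low, high, typical):
    """Return (value, expected_valid) pairs around the range [low, high]."""
    values = [low - 1, low, low + 1, typical, high - 1, high, high + 1]
    return [(value, low <= value <= high) for value in values]


# Range and typical value from the database example: 1 to 16,383 records.
cases = boundary_test_cases(low=1, high=16_383, typical=723)
```

Only the first and last cases fall outside the valid range, so the product should reject those two and accept the other five.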

57

Black Box Testing Methods

- Boundary Value Analysis

- This method leads to a selection of test cases that exercise boundary values. It complements equivalence partitioning since it selects test cases at the edges of a class. Rather than focusing solely on input conditions, BVA derives test cases from the output domain also.

- BVA guidelines include:

- 1. For input ranges bounded by a and b, test cases should include the values a and b and values just above and just below a and b, respectively.

- 2. If an input condition specifies a number of values, test cases should be developed to exercise the minimum and maximum numbers and values just above and below these limits.

- 3. Apply guidelines 1 and 2 to the output.

- 4. If internal data structures have prescribed boundaries, a test case should be designed to exercise the data structure at its boundary.