Math 416 PowerPoint PPT Presentation

1 / 63

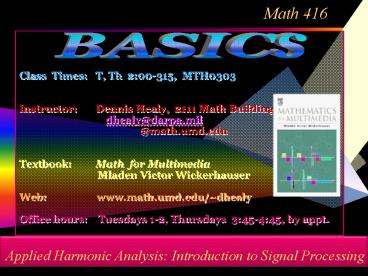

Title: Math 416

1

Math 416

Class Times T, Th 200-315,

MTH0303 Instructor Dennis Healy, 2111

Math Building dhealy_at_darpa.mil

_at_math.umd.edu Textbook Math

for Multimedia

Mladen Victor Wickerhauser Web

www.math.umd.edu/dhealy Office hours

Tuesdays 1-2, Thursdays 345-445, by appt.

BASICs

Applied Harmonic Analysis Introduction to Signal

Processing

2

Homework

- Read Chapter 1 in text

- Do the following 3 problems

- Prove the orthogonality relations for the cosines

and sines of period 1

3

Homework (continued)

- If f(t) is a fixed period 2 p function of the

form - where the coefficients Ai Bj are assumed to be

given fixed numbers, - then show that these coefficients satisfy the

formulae

f(t) A0/2 A1 Cos( t) A2 Cos(2 t) A3

Cos(3 t) B1 Sin( t) B2 Sin(2 t)

B3 Sin(3 t)

4

Homework (continued)

- Write a program in Mathematica or Matlab that

computes the best least squares fit to a table of

sample values input using - A) Polynomial basis up to some degree input

- B) Bandlimited Fourier basis (sins and cosines)

up to some degree input - Output is a table of the best fit coefficients

and a plot of the data points and fitting curve. - Make and plot some interesting examples

5

Last time

6

Computational Errors

- To err is human to forgive, divine.

but to really foul up you need a computer..

7

Example Square wave Fit, 2 bases

Polynomial Basis

Sin Basis

8

Mathematica Demo of fitting

Polys

Slider changes order of model (number of sin

terms, bandwidth of fit)

Sins

Order 14 fit

Also played with the precision of the

computations used to obtain the fit.

9

Mathematica Comparison

Sins

Polys

Order 14 fit Order 24 fit

10

Mathematica Comparison

Polys

Sins

Dependence on word length 5 digits of precision

11

Comparison of Designs

64 Samples

Z

Sin

Poly

14 basis elts

24 basis elts

Angle Matrix White 0 Black 60

ZTZ

12

Suggests it is important to think about Errors

- A lot of roundoff errors can happen with the many

operations in solving a matrix system like the

one we get with least squares. - More critical issue for some matrices, very

small differences in matrix elements can lead to

big changes in the solutions. - These are called Ill conditioned systems

- Small roundoff errors and noise can get amplified

into big errors in the solution.

13

Example Ill-conditioned System

(0,-3)

2x1 x2 3

2.1x1 x2 3

14

Suppose noise /quantization at 1 relative input

error

Output relative error 100

(0,-3)

2x1 x2 3

(.50125,-4.0125)

2.1x1 x2 3

15

Polynomial Basis is Not the best choice for

Numerical work

- Sampled Polynomial Basis elements become

essentially parallel for even moderate powers. - Like the two lines in the last example

16

The Point!

- Important to know the limits of digital signal

processing methods due to errors - Where does error come from and how can we reduce

it? - Model error Capturing the real

situation in mathematics - Truncation error Approximating the mathematics

into a computer - Numerical error How accurately the computer

algorithm works - How do we interpret computational results

- Can we get some idea of how much of our answer is

bogus? - Do we know how sensitive our answer is to

uncertainty in the model?

These issues and related determine if, when, how

we use numerical computations in DSP problems

17

Accuracy Precison in numerical procedures for

approximating desired result

- Error generally arises from the a combination of

inaccuracy and imprecision

Accuracy unbiased, no systematic error

Precision specificity,small variance

18

Quantifying error

(Absolute) Error The difference between the true

value and the numerical approximation

i.e. Approximation true value true error

Generally more useful to think about the size of

the error in a scale set by the size of number

being approximated

19

ERROR SOURCES

- Truncation error Method dependent

- Errors resulting from the use of an approximation

rather than an exact mathematical procedure - Round-off error Machine/Standard dependent

- Errors resulting from being forced to approximate

numbers.

Eg Error in representing 2 by

1.414

20

Example First Derivatives

- Use forward, backward, central divided difference

approximations to estimate the first derivative

of - at x 0.5 with h 0.5 and 0.25 (exact sol.

-0.9125)

Results

21

Centered Difference truncation scales best with

refinement

Proof Taylor Polynomial with Remainder

)

(

x

f

i

backward

forward

central

h

i

x i-2 x i-1 x i

x i1 x i2

-

)

(

)

(

x

f

x

f

i

1

i

O(h)

)

(

x

f

forward

i

h

-

)

(

)

(

x

f

x

f

-

O(h)

1

i

i

)

(

x

f

backward

i

h

-

)

2

)

(

)

(

x

f

x

f

O(h

-

)

(

1

i

1

i

x

f

central

i

h

2

22

ERROR SOURCES

- Truncation error Method dependent

- Errors resulting from the use of an approximation

rather than an exact mathematical procedure - Round-off error Machine/Standard dependent

- Errors resulting from being forced to approximate

numbers.

Eg Error in representing 2 by

1.414

23

Round-off Errors

- Computers can represent numbers to a finite

precision - Integer math can be exact, but only in a limited

in range (fixed point) - Real number math is approximate on computers.

(floating point)

So how do computers represent numbers? Binary

representation of the integers and real numbers

in computer hardware

24

Binary Signed Integer Representation

- First bit of word indicates the sign 0

negative (off), 1 positive (on) - Remaining bits store a number

- Example, 8 bit integer representation of 22

1 0 0 1 0 1 1 0

Sign Integer

( 0 x 26 0 x 25 1 x 24 0 x 23 1

x 22 1 x 21 0 x 20 ) 16 4 2 22

25

Signed Integer Representation

- 8-bit word

- /- 0000000 are the same, therefore we may use

-0 to represent -128 - Total number of 8 bit integers 28 256 -128

, 127

- 16-bit word

- Range -32,768 to 32,767

- 32-bit word

- Range -2,147,483,648 to 2,147,483,64

26

Signed Integer Operations

- Signed Integer arithmetic can be exact as long as

you don't get remainders in division - Eg 7/2 3 in integer math

- or overflow the maximum integer

- For a 8-bit computer max abs 127 (or -128)

- So 123 45 overflow

- and -74 2 underflow

27

Twos complement binary representation of

integers

Much more common than signed integer

representation

For an N-bit (unsigned) binary representation,

full range of integers is 0,, 2 N -1 Low

half of that range (msb 0) consists of

integers 0,1,, 2 N-1 -1 Obvious

thing to do is use these to represent the

non-negative integers up to 2 N-1 -1 Upper half

of the range (msb 1) consists of integers 2

N-1 , 1 2 N-1 1,, 2 N -1

Can write this set as -2 N-1 , -2

N-1 1,, -1 2 N Equivalent to the set -2

N-1 , -2 N-1 1,, -1 modulo 2 N (up to

multiples of 2 N )

Eg N 8 000000000 to 12701111111

0 0/1 0/1 0/1 0/1 0/1

0/1 0/1

Eg N 8 12810000000 to 25511111111

0 0/1 0/1 0/1 0/1 0/1

0/1 0/1

28

Residue classes and residue arithmetic

- am is a set integers equivalent to a mod m

- a represents this set, but so would a - 527 m

of course. - Zm am a in Z

- Eg Z4

- There are m elements in Zm Eg 0 m, 1

m,,m-1 m - am bm defined a bm

- Example 34 24 54 14

- -24 -14 -3 4 1

4 - Likewise multiplication is well defined

29

Twos complement binary representation of

integers

Much more common than signed integer

representation

For an N-bit (unsigned) binary representation,

full range of integers is 0, 2 N -1 Low

half of that range (msb 0) consists of

integers 0,1,, 2 N-1 -1 Obvious thing

to do is use those as our non-negative integers

up to 2 N-1 -1 Upper half of the range (msb

1) consists of integers 2 N-1 , 1 2 N-1 1,, 2

N -1 Can write this set as -2 N-1 ,

-2 N-1 1,, -1 2 N Equivalent to the

set -2 N-1 , -2 N-1 1,, -1 modulo 2 N (up

to multiples of 2 N ) Use the upper half

of the range represents negatives -2 N-1 , -2

N-1 1,, -1

-4 -3-2 -1 0 1 2 3

0 1 2 3 4 5 6 7

Equivalent mod 8

30

Twos complement binary representation of

integers

Heres what it would be for example with 8 bit

representation The positives 0 to 127

represented (usual) using the least significant 7

of the 8 bits

0 1 1 0 0

1 1 1

Eg 103

The negatives of integers 1 to 127 are

represented by their twos complement i.e. -k

is represented as 28 -k 256 - k

Eg find twos complement of - 103

1 0 0 1 1 0

0 0

Flip each bit of 103 (same as 255-103)

1 0 0 1 1

0 0 1

Add 1

153 -103 mod 256

(same as 256-103)

31

Twos complement binary representation of

integers

Much more common than signed integer

representation

For an N-bit (unsigned) binary representation,

full range of integers is 0, 2 N -1 Low

half of that range (msb 0) consists of

integers 0,1,, 2 N-1 -1 Obvious thing

to do is use those as our non-negative integers

up to 2 N-1 -1 Upper half of the range (msb

1) consists of integers 2 N-1 , 1 2 N-1 1,, 2

N -1 Can write this set as -2 N-1 ,

-2 N-1 1,, -1 2 N Equivalent to the

set -2 N-1 , -2 N-1 1,, -1 modulo 2 N (up

to multiples of 2 N ) Use the upper half

of the range represents negatives -2 N-1 , -2

N-1 1,, -1

-4 -3-2 -1 0 1 2 3

0 1 2 3 4 5 6 7

Equivalent mod 8

POINT if we use the N-bit strings but consider

them and operations done on them as being

done modulo 2 N we get many benefits.

Best is that we can add and subtract the

integers within range using same

circuitry since both are done as addition modulo

2 N .

32

Twos complement binary subtraction of integers

Heres subtraction as addition mod 28 in 8 bit

twos complement representation Eg 127 -

103

0 1 1 1 1

1 1 1

127

0 1 1 0 0

1 1 1

103

Know 127 -103 is the same as 127 twos

complement of 103 mod 256

twos complement of - 103 256 -103 153

1 0 0 1 1 0

0 0

153 -103 mod 256

0 0 0 1 1

0 0 0

127 153 Mod 256

24 as expected

33

Other representations of numbers

- We have looked at integer representations for

computer. - There are also floating point computer

representations for real numbers - Thats what was used in the Mathematica demos we

looked at.

34

Floating-Point Representation

- Integer representations are exact within some

bounded range of integers- Real numbers are

represented differently - Representation aims for approx. constant relative

error from tiny fractions to huge numbers - Store as

- sign is 1 or 0 for negative or positive

- exponent is the power (positive or negative) of

base (usually 2) - Significand or mantissa contains significant

digits

35

A Floating-Point Representation

sign of number

signed exponent mantissa

- m mantissa

- B Base of the number system

- e signed exponent

- Note the mantissa is usually normalized first

nonzero digit is at decimal point (bit at binary

point) - Otherwise representation is not unique.

36

8 digit base 10 example

- 8-place word

sign signed exponent mantissa

10951467 (base B 10) mantissa m

-(110-1 410-2 610-3 710-4 )

-0.1467 signed exponent e (9101 5100)

95

-

95

e

10

1467

0

mB

.

37

8 bit base 2 example

sign signed exponent

mantissa

11011010 base B 2 mantissa m

(12-1 02-2 12-3 02-4 ) ½ 1/8

signed exponent e (021

120) 1

1

e

5/4

2

5/8

mB

38

Attributes of Floating Point Representations

- Range from largest positive float to largest

negative. Primarily determined by the base B

and the range of exponents - Precision determined by number of digits in the

mantissa (significand) - Gap between adjacent floats is not constant, as

it would be for integer or fixed point - Gap varies to give (roughly) constant relative

error in representing and numbers in range. - Overflow number too large to be represented as a

float (outside range) - Underflow nonzero number which is too small in

absolute value to be represented with the given

representation

39

Visualizing 8 bit base 2 floats

sign signed exponent

mantissa

mantissa

M

0

M

M

Ms

(restrict to positive, positive exponents for the

moment)

40

Range and coverage (positive, positive exponents)

underflow

9/2

9/16 15/16

0

1

1/2

2

4

8 values with steps of 1/8

8 values with steps of 1/16

8 values with steps of 1/4

8 values with steps of 1/2

8 values with steps of 1/16

mantissa

15/8

41

Range and coverage

underflow

underflow

9/2

9/16 15/16

0

0

1

1/2

2

4

8 values with steps of 1/8

8 values with steps of 1/16

8 values with steps of 1/4

8 values with steps of 1/2

8 values with steps of 1/16

mantissa

15/8

42

Range and coverage

underflow

underflow

9/2

9/16 15/16

0

0

1

1/2

2

4

8 values with steps of 1/8

8 values with steps of 1/16

8 values with steps of 1/4

8 values with steps of 1/2

8 values with steps of 1/16

mantissa

15/8

43

Range and coverage

Negative numbers here

Negative exponents let you represent numbers here

underflow

overflow

9/2

15/2

-15/16 -9/16

-1

-2

-1/2

4

8 values with steps of 1/16

8 values with steps of 1/16

8 values with steps of 1/4

8 values with steps of 1/8

8 values with steps of 1/16

8 values with steps of 1/2

mantissa

0

15/8

44

Range and coverage

Negative numbers here

Negative exponents let you represent numbers here

underflow

overflow

9/2

15/2

-15/16 -9/16

-1

-2

-1/2

4

8 values with steps of 1/16

8 values with steps of 1/16

8 values with steps of 1/4

8 values with steps of 1/8

8 values with steps of 1/8

8 values with steps of 1/16

8 values with steps of 1/2

underflow

45

Range and coverage

Negative exponents let you represent numbers here

underflow

overflow

9/2

15/2

-15/16 -9/16

-1

-2

-1/2

4

8 values with steps of 1/16

8 values with steps of 1/16

8 values with steps of 1/4

8 values with steps of 1/8

8 values with steps of 1/8

8 values with steps of 1/2

In General For floating point system w/ base B,

precision p mantissa Only finitely many numbers

can be represented Given x, let fl(x) be the

closest floating point number (round) Can show

relative error of fl(x) for any x between

underflow and overflow is bounded by ½ B1-p

46

Errors in Floating Point Arithmetic

- In addition to the intrinsic errors in

representing an arbitray number in floating

point

- Arithmetic is approximate a reasonable model for

arithmetic combination of floats is - Form the true arithmetic combination

- Normalize

- Round the mantissa to the allowed precision

- There will in general be round off error

Normalize .10448 x 10 2

Round .1045 x 10 2

47

Errors in Floating Point Arithmetic

Normalize .10448 x 10 2

Round .1045 x 10 2

Error 2 x 10 -2

EG 5227 x 10 1 .5221 x 10 1 .9976 x

10 2 - .1103 x 10 3

This might be an intermediate step in a long

calculation.

Add .9976 x 10 2

48

Errors in Floating Point Arithmetic

Normalize .10448 x 10 2

Round .1045 x 10 2

Error 2 x 10 -2

This might be an intermediate step in a long

calculation. Next step

Add .9976 x 10 2

.1045 x 10 2

Normalize .11021 x 10 3

Round .1102 x 10 3

.9976 x 10 2

1 .1021 x 10 2

Error -1 x 10 -2

49

Errors in Floating Point Arithmetic

This might be an intermediate step in a long

calculation. Next

Subtract .1103 x 10 3

.1102 x 10 3

Normalize -.1000 x 10 0

Only one digit significant at this point

- .1103 x 10 3

- .0001 x 10 3

True cumulative result in exact arithmetic 5227

x 10 1 .5221 x 10 1 .9976 x 10 2 -

.1103 x 10 3

- .0920

Out of 4 digits of precision, only one is

significant after 4 arithmetic operations!

50

Errors in Floating Point Arithmetic

5227 x 10 1 .5221 x 10 1 .9976 x 10

2 - .1103 x 10 3

Try same calculation with a different order of

operations

Try right to left this time!

- .1103 x 10 3

.09976 x 10 3

Normalize -.1054 x 10 2

No need to round

- .01054 x 10 3

Next Add .5221 x 10 1

-.1054 x 10 2

Normalize -.5319 x 10 1

No need to round

- .05319 x 10 2

51

Errors in Floating Point Arithmetic

Working the same calculation with a different

order of ops

5227 x 10 1 .5221 x 10 1 .9976 x 10

2 - .1103 x 10 3

Finally Add .5227 x 10 1

- .5319 x 10

No need to round

Normalize -.9200 x 10 -1

.5227 x 10 1

- .0092 x 10 1

This time, Answer is correct to original

precision

Arithmetic depends on ordering of steps in

floating point

52

Common Floating Point Lingo Single Precision

- bit (binary digit) 0 or 1

- byte 4 bits, 24 16 possible values

- word 2 bytes 8 bits, 28 256 possible values

In single precision, a real variable (number) is

stored in four words, or 32 bits (64 bits for

Supercomputers)

23 for the digits 32 bits 8

for the signed exponent 1 for

the sign

53

Double Precision

- In double precision, a real variable is stored in

eight words, or 64 bits - 16 words, 128 bits for supercomputers

- signed exponent ? 210 ? 1024

52 for the digits 64 bits 11

for the signed exponent 1 for

the sign

54

MATLAB

- MATLAB uses double precision

- 4 bytes 64 bits

55

Some things to bear in mind

- Intrinsic errors in representing numbers in

floating point. - Arithmetic is approximate a reasonable model for

arithmetic combination of floats is - Form the true arithmetic combination

- Normalize

- Round the mantissa to the allowed precision

- There will in general be round off error

Build up of errors in simple combinations

(catastrophic loss of precision) Even in simple

things like repeated adds.

56

function sout SumPrecisionLoss(tenmillions,tick)

Demonstrates loss of precision in repeated

floating point operations Implements a "clock"

which increments "elapsed time" by adding time

increment "tick." Time is displayed every 10

million ticks. User specifies how long to run

the clock by indicating how many "tenmillions"

Error at the end of this time is indicated in

"ticks" For example, tick 100 nanosecs

(.0000001) will show a lot of error in ticks

after not so long, say 100 or 1000 secs.

57

function sout SumPrecisionLoss(tenmillions,tick)

Demonstrates loss of precision in repeated

floating point operations s0 for

m1tenmillions for n110000000 s s

tick end disp( 'iteration ')

disp(nm) sout s end disp( 'iteration

') disp(m107) sout s disp( 'error

in clock ticks') disp ((s -

tenmillions107tick)/tick )

58

Run this clock for 10 billion ticks One tick

.1 microsec, so 1000 seconds

gtgt SumPrecisionLoss(1000,.0000001) iteration

1.000000000000000e010 sout

9.999998790135827e002 error in clock ticks

-1.209864173006281e003

121 microseconds off, or .12 milliseconds

59

The Patriot Missile Failure From Doug Arnolds

website On February 25, 1991, during the

(First) Gulf War, an American Patriot Missile

battery in Dharan, Saudi Arabia, failed to track

and intercept an incoming Iraqi Scud missile.

The Scud struck an American Army barracks,

killing 28 soldiers and injuring around 100 other

people .

A report of the General Accounting office,

GAO/IMTEC-92-26, entitled Patriot Missile

Defense Software Problem Led to System Failure

at Dhahran, Saudi Arabia reported on the cause of

the failure. It turns out that the cause was an

inaccurate calculation of the time since boot due

to computer arithmetic errors in a running sum.

60

Specifically, the time in tenths of second as

measured by the system's internal clock was

multiplied by 1/10 to produce the time in

seconds. This calculation was performed using a

24 bit fixed point register. In particular, the

value 1/10, which has a non-terminating binary

expansion, was chopped at 24 bits after the radix

point. The small chopping error, when

multiplied by the large number giving the time in

tenths of a second, led to a significant error.

Indeed, the Patriot battery had been up around

100 hours, and an easy calculation shows that the

resulting time error due to the magnified

chopping error was about 0.34 seconds. (The

number 1/10 equals 1/241/251/281/291/2121/213

.... In other words, the binary expansion of

1/10 is 0.0001100110011001100110011001100.... Now

the 24 bit register in the Patriot stored instead

0.00011001100110011001100 introducing an error of

0.0000000000000000000000011001100... binary, or

about 0.000000095 decimal. Multiplying by the

number of tenths of a second in 100 hours gives

0.0000000951006060100.34.) A Scud travels

at about 1,676 meters per second, and so travels

more than half a kilometer in this (error) time.

This was far enough that the incoming Scud was

outside the "range gate" that the Patriot

tracked. Ironically, the fact that the bad time

calculation had been improved in some parts of

the code, but not all, contributed to the

problem, since it meant that the inaccuracies did

not cancel.

61

Financial meltdown and poor numerics in

Excel?INQUIRING MINDS WANT TO KNOW!

- Whats 77.1 x 850 ? Dont ask Excel 2007

- 65,535 the Number of the Beast

- By Dan Goodin

- http//www.theregister.co/uk/2007/09/26/excel_200

7_bug/

62

Perils of computer number representation Ariane 5

French rocket Ariane 501 was scheduled to launch

on the morning of June 4, 1996, from the launch

site in Kourou, French Guiana.

Failure of the launch due to the un-manned rocket

exploding after 42 seconds from the time of the

launch.

63

On June 4, 1996 an unmanned Ariane 5 rocket

launched by the European Space Agency exploded

just forty seconds after its lift-off from French

Guiana The

rocket was on its first voyage, after a decade of

development costing 7 billion. The destroyed

rocket and its cargo were valued at 500 million.

A board of inquiry investigated the causes of the

explosion and in two weeks issued a report. It

turned out that the cause of the failure was a

software error in the inertial reference system.

Specifically a 64 bit floating point number

relating to the horizontal velocity of the rocket

with respect to the platform was converted to a

16 bit signed integer. The number was larger than

32,767, the largest integer storeable in a 16 bit

signed integer, and thus the conversion failed.

(from Doug Arnolds web site)