Syntax indicates structure of program code

1 / 34

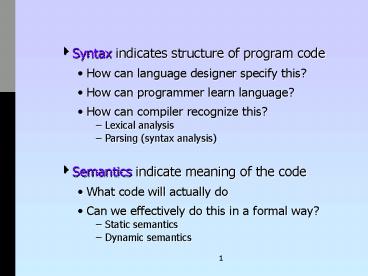

Title: Syntax indicates structure of program code

1

- Syntax indicates structure of program code

- How can language designer specify this?

- How can programmer learn language?

- How can compiler recognize this?

- Lexical analysis

- Parsing (syntax analysis)

- Semantics indicate meaning of the code

- What code will actually do

- Can we effectively do this in a formal way?

- Static semantics

- Dynamic semantics

2

- Different languages are either designed to or happen to meet different programming needs

- Scientific applications

- FORTRAN

- Business applications

- COBOL

- AI

- LISP, Scheme (and Prolog)

- Systems programming

- C

- Web programming

- Perl, PHP, JavaScript

- General purpose

- C, Ada, Java

3

- Many factors influence language design

- Architecture

- Most languages were designed for single-processor von Neumann type computers

- CPU to execute instructions

- Data and instructions stored in main memory

- General language approach

- Imperative languages

- Fit well with von Neumann computers

- Focus is on variables, assignment, selection and iteration

- Examples: FORTRAN, Pascal, C, Ada, C++, Java

4

- Imperative language evolution

- Simple straight-line code

- Top-down design and process abstraction

- Data abstraction and ADTs

- Object-oriented programming

- Some consider object-oriented languages not to be imperative, but most modern OO languages have imperative roots (e.g. C++, Java)

- Functional languages

- Focus is on function and procedure calls

- Mimics mathematical functions

- Less emphasis on variables and assignment

- In strictest form has no iteration at all; recursion is used instead

- Examples: LISP, Scheme
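
The contrast between the imperative and functional styles can be sketched with one small task, summing a list. This is only an illustration in Python (not one of the languages named on the slides); both function names are made up for the example.

```python
def total_iterative(values):
    """Imperative style: a loop repeatedly assigns to an accumulator variable."""
    total = 0
    for v in values:
        total += v
    return total


def total_recursive(values):
    """Functional style: no loop and no reassignment, only recursion."""
    if not values:
        return 0
    return values[0] + total_recursive(values[1:])
```

Both functions compute the same result; the second expresses it the way a mathematical definition would, as a base case plus a recursive case.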

5

- Logic programming languages

- Symbolic logic used to express propositions, rules and inferences

- Programs are in a sense theorems

- User enters a proposition and system uses programmer's rules and propositions in an attempt to prove it

- Typical outputs

- Yes: Proposition can be established by program

- No: Proposition cannot be established by program

- Example: Prolog (see example program)

6

- How is HLL code processed and executed on the computer?

- Compilation

- Source code is converted by the compiler into binary code that is directly executable by the computer

- Compilation process can be broken into 4 separate steps

- Lexical Analysis

- Breaks up code into lexical units, or tokens

- Examples of tokens: reserved words, identifiers, punctuation

- Feeds the tokens into the syntax analyzer
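
The lexical analysis step can be sketched as a small tokenizer. The reserved-word set, the token class names and the `tokenize` function below are assumptions chosen for a tiny Pascal-like language; a real compiler's lexer would be driven by the full language definition.

```python
import re

# Hypothetical reserved words for a tiny Pascal-like language.
RESERVED = {"begin", "end", "if", "then", "else", "while", "do"}

# One named group per token class; whitespace is matched so it can be skipped.
TOKEN_PATTERN = re.compile(r"""
    (?P<number>\d+)
  | (?P<word>[A-Za-z][A-Za-z0-9]*)
  | (?P<punct>:=|[;(),+\-*/<>=])
  | (?P<space>\s+)
""", re.VERBOSE)


def tokenize(source):
    """Split source text into (kind, lexeme) tokens, skipping whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = TOKEN_PATTERN.match(source, pos)
        if match is None:
            raise ValueError(f"unexpected character {source[pos]!r} at {pos}")
        kind, lexeme = match.lastgroup, match.group()
        pos = match.end()
        if kind == "space":
            continue
        if kind == "word":
            # A word is either a reserved word or an identifier.
            kind = "reserved" if lexeme in RESERVED else "identifier"
        tokens.append((kind, lexeme))
    return tokens
```

The resulting list of tokens is what would be handed to the syntax analyzer; for example, `tokenize("if x1 then y := 42;")` yields reserved words, identifiers, punctuation and a number, in source order.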

7

- Syntax Analysis

- Tokens are parsed and examined for correct syntactic structure, based on the rules for the language

- Programmer syntax errors are detected in this phase

- Semantic Analysis/Intermediate Code Generation

- Declaration and type errors are checked here

- Intermediate code generated is similar to assembly code

- Optimizations can be done here as well, for example

- Unnecessary statements eliminated

- Statements moved out of loops if possible

- Recursion removed if possible

8

- Code Generation

- Intermediate code is converted into executable code

- Code is also linked with libraries if necessary

- Note that steps 1) and 2) are independent of the architecture; they depend only upon the language (Front End)

- Step 3) is somewhat dependent upon the architecture, since, for example, optimizations will depend upon the machine used

- Step 4) is clearly dependent upon the architecture (Back End)

9

- Interpreting

- Program is executed in software, by an interpreter

- Source level instructions are executed by a virtual machine

- Allows for robust run-time error checking and debugging

- Penalty is speed of execution

- Examples: Some LISP implementations, Unix shell scripts and Web server scripts

10

- Hybrid

- First 3 phases of compilation are done, and intermediate code is generated

- Intermediate code is interpreted

- Faster than pure interpretation, since the intermediate codes are simpler and easier to interpret than the source codes

- Still much slower than compilation

- Examples: Java and Perl

- However, now Java uses JIT Compilation also

- Method code is compiled as it is called so if it is called again it will be faster
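
The hybrid approach can be illustrated with a toy example: source text is translated once into simple intermediate instructions, and a small interpreter then executes those instructions. Everything here is an assumption made for illustration (postfix arithmetic as the "source language", the `PUSH`/`ADD`/`MUL` instruction names, the two function names); it is not how Java or Perl are actually implemented.

```python
def compile_expr(tokens):
    """Translate postfix tokens such as ['2', '3', '+'] into stack-machine code."""
    code = []
    for tok in tokens:
        if tok == "+":
            code.append(("ADD",))
        elif tok == "*":
            code.append(("MUL",))
        else:
            code.append(("PUSH", int(tok)))
    return code


def run(code):
    """Interpret the intermediate code on a simple operand stack."""
    stack = []
    for instr in code:
        op = instr[0]
        if op == "PUSH":
            stack.append(instr[1])
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right if op == "ADD" else left * right)
    return stack.pop()
```

The interpreter loop only has to dispatch on a handful of fixed instruction forms, which is why intermediate code is easier to interpret than raw source text.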

11

- How do we formally define a language?

- Assume we have a language, L, defined over an alphabet, Σ.

- 2 related techniques

- Recognition

- An algorithm or mechanism, R, will process any given string, S, of lexemes and correctly determine if S is within L or not

- Not used for enumeration of all strings in L

- Used by parser portion of compiler

12

- Generation

- Produces valid sentences of L

- Not as useful as recognition for compilation, since the valid sentences could be arbitrary

- More useful in understanding language syntax, since it shows how the sentences are formed

- Recognizer only says if sentence is valid or not; more of a trial and error technique

13

- So recognizers are what compilers need, but generators are what programmers need to understand language

- Luckily there are systematic ways to create recognizers from generators

- Thus the programmer reads the generator to understand the language, and a recognizer is created from the generator for the compiler

14

- Grammar

- A mechanism (or set of rules) by which a language is generated

- Defined by the following

- A set of non-terminal symbols, N

- Do not actually appear in strings

- A set of terminal symbols, T

- Appear in strings

- A set of productions, P

- Rules used in string generation

- A starting symbol, S
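
A grammar is just the four components listed above, so it can be written down as plain data. The sketch below is one possible encoding, not a standard one: the dictionary keys, the `generate` helper and the tiny example grammar S → aS | b are all assumptions made for illustration.

```python
from collections import deque

# Hypothetical example grammar (not from the slides): S -> aS | b
GRAMMAR = {
    "nonterminals": {"S"},
    "terminals": {"a", "b"},
    "productions": {"S": [["a", "S"], ["b"]]},
    "start": "S",
}


def generate(grammar, max_length):
    """List the sentences of at most max_length symbols, in breadth-first order.

    Assumes no production has an empty right-hand side, so a sentential
    form never shrinks and forms longer than max_length can be discarded.
    """
    results = []
    queue = deque([(grammar["start"],)])
    seen = set(queue)
    while queue:
        form = queue.popleft()
        # Position of the leftmost nonterminal, or None if the form is a sentence.
        idx = next(
            (i for i, s in enumerate(form) if s in grammar["nonterminals"]), None
        )
        if idx is None:
            results.append("".join(form))
            continue
        for rhs in grammar["productions"][form[idx]]:
            new_form = form[:idx] + tuple(rhs) + form[idx + 1:]
            if len(new_form) <= max_length and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return results
```

Calling `generate(GRAMMAR, 3)` enumerates the short sentences of the example language, which shows the grammar acting as a generator rather than a recognizer.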

15

- Noam Chomsky described four classes of grammars (used to generate four classes of languages): the Chomsky Hierarchy

- Unrestricted

- Context-sensitive

- Context-free

- Regular

- More info on unrestricted and context-sensitive grammars in a theory course

- The last two will be useful to us

16

- Regular Grammars

- Productions must be of the form

- <non> → <ter><non> | <ter>

- where <non> is a nonterminal, <ter> is a terminal, and | represents either/or

- Can be modeled by a Finite-State Automaton (FSA)

- Also equivalent to Regular Expressions

- Provide a model for building lexical analyzers

17

- Have following properties (among others)

- Can generate strings of the form α^n, where α is a finite sequence and n is an integer

- Pattern recognition

- Can count to a finite number

- Ex. a^n, n = 85

- But we need at least 86 states to do this

- Cannot count to arbitrary number

- Note that a^n for any n (i.e. 0 or more occurrences) is easy; do not have to count

- Important to realize that the number of states is finite; cannot recognize patterns with an arbitrary number of possibilities

18

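- Example: Regular grammar to recognize Pascal identifiers (assume no caps)

- N = {Id, X}, T = {a..z, 0..9}, S = Id

- P:

- Id → aX | bX | … | zX | a | b | … | z

- X → aX | bX | … | zX | 0X | … | 9X | a | … | z | 0 | … | 9

- Consider equiv. FSA

[Figure: equivalent FSA with start state Id and transitions labeled a..z and 0..9]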
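
The FSA for identifiers can be simulated directly. This is a minimal sketch that assumes a two-state machine: the start state is named after nonterminal Id, and a second state, named X here, is treated as the only accepting state. The function name is made up for the example.

```python
import string

LETTERS = set(string.ascii_lowercase)   # a..z (no caps, as on the slide)
DIGITS = set(string.digits)             # 0..9


def is_identifier(text):
    """Simulate the FSA: 'Id' is the start state, 'X' is the accepting state."""
    state = "Id"
    for ch in text:
        if state == "Id" and ch in LETTERS:
            state = "X"                  # first character must be a letter
        elif state == "X" and (ch in LETTERS or ch in DIGITS):
            state = "X"                  # later characters: letters or digits
        else:
            return False                 # no transition defined: reject
    return state == "X"
```

Note that the machine never counts anything; it only remembers which of its two states it is in, which is all a regular language requires.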

19

- Example: Regular grammar to generate a binary string containing an odd number of 1s

- N = {A, B}, T = {0, 1}, S = A, P:

- A → 0A | 1B | 1

- B → 0B | 1A | 0

- Example: Regular grammars CANNOT generate strings of the form a^n b^n

- Grammar needs some way to count number of a's and b's to make sure they are the same

- Any regular grammar (or FSA) has a finite number, say k, of different states

- If n > k, not possible
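
A regular grammar can be turned into a recognizer almost mechanically by treating each nonterminal as a state. The sketch below does this for the odd-number-of-1s grammar; the tuple encoding of productions, the extra `ACCEPT` state and the `recognizes` function are assumptions made for this illustration.

```python
# Each alternative is (terminal, next nonterminal), or (terminal, None)
# when the production ends the derivation.
PRODUCTIONS = {
    "A": [("0", "A"), ("1", "B"), ("1", None)],
    "B": [("0", "B"), ("1", "A"), ("0", None)],
}

ACCEPT = "<accept>"  # hypothetical extra state meaning "derivation finished"


def recognizes(productions, start, text):
    """Track every state the derivation could be in after each character."""
    current = {start}
    for ch in text:
        following = set()
        for state in current:
            for terminal, target in productions.get(state, []):
                if terminal == ch:
                    following.add(ACCEPT if target is None else target)
        current = following
    # Accept only if some derivation finished exactly at the end of the input.
    return ACCEPT in current
```

The set of possible states is bounded by the number of nonterminals plus one, which is the finite-state limit that makes a^n b^n impossible for this kind of grammar.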

20

- If we could add a memory of some sort we could get this to work

- Context-free Grammars

- Can be modeled by a Push-Down Automaton (PDA)

- FSA with added push-down stack

- Productions are of the form

- <non> → α, where <non> is a nonterminal and α is any sequence of terminals and nonterminals

21

- So how to generate a^n b^n? Let a = 0, b = 1

- N = {A}, T = {0, 1}, S = A, P:

- A → 0A1 | 01

- Note that now we can have a terminal after the nonterminal as well as before

- Can also have multiple nonterminals in a single production

- Example: Grammar to generate sets of balanced parentheses

- N = {A}, T = {(, )}, S = A, P:

- A → AA | (A) | ()
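
The role of the push-down stack can be sketched with two small recognizers, one for each language above. These are hand-written illustrations of the idea (push on the opening symbol, pop on the closing one), not automata derived formally from the grammars; the function names are made up.

```python
def is_zeros_then_ones(text):
    """Accept 0^n 1^n for n >= 1: push each 0, pop one 0 for each 1."""
    stack = []
    seen_one = False
    for ch in text:
        if ch == "0" and not seen_one:
            stack.append(ch)
        elif ch == "1" and stack:
            seen_one = True
            stack.pop()
        else:
            return False          # a 0 after a 1, or more 1s than 0s
    return seen_one and not stack


def is_balanced(text):
    """Accept non-empty balanced parentheses: push on '(' and pop on ')'."""
    stack = []
    for ch in text:
        if ch == "(":
            stack.append(ch)
        elif ch == ")" and stack:
            stack.pop()
        else:
            return False          # unmatched ')' or an unexpected character
    return bool(text) and not stack
```

The stack is the "memory" that a finite-state machine lacks: its depth can grow with the input, so n does not have to be bounded in advance.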

22

- Context-free grammars are also equivalent to BNF grammars

- Developed by Backus and modified by Naur

- Given a (BNF) grammar, we can derive any string in the language from the start symbol and the productions

- A common way to derive strings is using a leftmost derivation

- Always replace leftmost nonterminal first

- Complete when no nonterminals remain

23

- Example: Leftmost derivation of nested parens (()(()))

- A ⇒ (A)

- ⇒ (AA)

- ⇒ (()A)

- ⇒ (()(A))

- ⇒ (()(()))

- We can view this derivation as a tree, called a parse tree for the string
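
The derivation above can be replayed mechanically. In this sketch each symbol is a single character, the productions of the balanced-parentheses grammar are stored as strings, and a derivation is given as a list of indexes saying which alternative to apply at each step; that encoding and the function name are assumptions for the example.

```python
PRODUCTIONS = {"A": ["AA", "(A)", "()"]}


def leftmost_derivation(start, choices, productions):
    """Apply the chosen productions, always rewriting the leftmost nonterminal.

    Raises ValueError if a choice is given after no nonterminals remain.
    """
    form = start
    steps = [form]
    for choice in choices:
        # Position of the leftmost nonterminal in the current sentential form.
        position = min(form.index(n) for n in productions if n in form)
        nonterminal = form[position]
        replacement = productions[nonterminal][choice]
        form = form[:position] + replacement + form[position + 1:]
        steps.append(form)
    return steps


# Alternatives used on the slide: (A), AA, (), (A), ()
steps = leftmost_derivation("A", [1, 0, 2, 1, 2], PRODUCTIONS)
```

Each entry of `steps` is one sentential form of the derivation, ending with a string that contains no nonterminals.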

24

- Parse tree for (()(()))

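[Figure: parse tree for (()(())). The root A expands to ( A ); that A expands to A A; the left A expands to ( ) and the right A expands to ( A ), whose inner A expands to ( ).]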

25

- If, for a given grammar, a string can be derived by two or more different parse trees, the grammar is ambiguous

- Some languages are inherently ambiguous

- All grammars that generate that language are ambiguous

- Many other languages are not themselves ambiguous, but can be generated by ambiguous grammars

- It is generally better for use with compilers if a grammar is unambiguous

- Semantics are often based on syntactic form

26

- Ambiguous grammar example: Generate strings of the form 0^n 1^m, where n, m ≥ 1

- N = {A, B, C}, T = {0, 1}, S = A, P:

- A → BC | 0A1

- B → 0B | 0

- C → 1C | 1

- Consider the string 00011

[Figure: two different parse trees for 00011, one starting with A → BC and one starting with A → 0A1]

27

- We can easily make this grammar unambiguous

- Remove production A → 0A1

- Note that nonterminal B can generate an arbitrary number of 0s and nonterminal C can generate an arbitrary number of 1s

- Now only one parse tree

[Figure: the single remaining parse tree for 00011, starting with A → BC]
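
The effect of removing A → 0A1 can be checked by counting parse trees for 00011 under both versions of the grammar. The counter below is a sketch written for this example (single-character symbols, productions stored as strings); it assumes no production has an empty right-hand side and that there are no cycles of single-symbol productions, which holds for both grammars here.

```python
from functools import lru_cache

AMBIGUOUS = {"A": ["BC", "0A1"], "B": ["0B", "0"], "C": ["1C", "1"]}
UNAMBIGUOUS = {"A": ["BC"], "B": ["0B", "0"], "C": ["1C", "1"]}


def count_parse_trees(productions, start, text):
    """Count the distinct parse trees that derive text from start."""

    @lru_cache(maxsize=None)
    def trees(symbol, i, j):
        # Number of trees rooted at symbol that cover text[i:j].
        if symbol not in productions:            # terminal symbol
            return 1 if text[i:j] == symbol else 0
        return sum(split(tuple(rhs), i, j) for rhs in productions[symbol])

    @lru_cache(maxsize=None)
    def split(rhs, i, j):
        # Number of ways the symbols in rhs can jointly cover text[i:j].
        if len(rhs) == 1:
            return trees(rhs[0], i, j)
        total = 0
        # The first symbol covers text[i:k]; every remaining symbol
        # needs at least one character of its own.
        for k in range(i + 1, j - len(rhs) + 2):
            left = trees(rhs[0], i, k)
            if left:
                total += left * split(rhs[1:], k, j)
        return total

    return trees(start, 0, len(text))


ambiguous_count = count_parse_trees(AMBIGUOUS, "A", "00011")
unambiguous_count = count_parse_trees(UNAMBIGUOUS, "A", "00011")
```

A count greater than one for any string is enough to show a grammar is ambiguous; a count of one for a single string, on the other hand, does not by itself prove the grammar unambiguous.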

28

- Context-free grammars cannot generate everything

- Ex: Strings of the form WW in {0,1}*

- Cannot guarantee that arbitrary string is the same on both sides

- Compare to WW^R (W followed by its reverse)

- These we can generate from the middle and build out in each direction

- For WW we would need separate productions for each side, and we cannot coordinate the two with a context-free grammar

- Need Context-Sensitive in this case

29

- Ok, we can generate languages, but how to recognize them?

- We need to convert our generators into recognizers, or parsers

- We know that a Context-free grammar corresponds to a Push-Down Automaton (PDA)

- However, the PDA may be non-deterministic

- As we saw in examples, to create a parse tree we sometimes have to guess at a substitution

30

- May have to guess a few times before we get the correct answer

- This does not lend itself to programming language parsing

- We'd like parser to never have to guess

- To eliminate guessing, we must restrict the PDAs to deterministic PDAs, which restricts the grammars that we can use

- Must be unambiguous

- Some other, less obvious restrictions, depending upon parsing technique used

31

- There are two general categories of parsers

- Bottom-up parsers

- Can parse any language generated by a Deterministic PDA

- Build the parse trees from the leaves up back to the root as the tokens are processed

- Correspond to LR(k) grammars

- Left to right processing of string

- Rightmost derivation of parse tree (in reverse)

- k symbols lookahead required

- LR parsers are difficult to write by hand, but can be produced systematically by programs such as YACC (Yet Another Compiler Compiler).

32

- Top-down parsers

- Build the parse trees from the root down as the tokens are processed

- Also called predictive parsers, or LL parsers

- Left-to-right processing of string

- Leftmost derivation of parse tree

- The LL(1) that we saw before means we can parse with only one token lookahead
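
A top-down parser with one token of lookahead can be sketched as a set of mutually recursive functions, one per nonterminal. The example uses the 0^n 1^n language; because A → 0A1 | 01 has two alternatives that start with the same terminal, the sketch assumes a left-factored version of the grammar (A → 0 R, R → A 1 | 1), which is a rewrite introduced here and not taken from the slides. The names `parse` and `ParseError` are also made up.

```python
class ParseError(Exception):
    """Raised when the input is not in the language."""


def parse(text):
    """Predictive parser for the left-factored grammar
           A -> 0 R
           R -> A 1 | 1
    Returns the parse tree as nested tuples.
    """
    pos = 0

    def peek():
        # The single lookahead symbol, or None at the end of the input.
        return text[pos] if pos < len(text) else None

    def expect(symbol):
        nonlocal pos
        if peek() != symbol:
            raise ParseError(f"expected {symbol!r} at position {pos}")
        pos += 1
        return symbol

    def parse_a():
        return ("A", expect("0"), parse_r())

    def parse_r():
        # One symbol of lookahead is enough to pick the alternative.
        if peek() == "0":
            return ("R", parse_a(), expect("1"))
        return ("R", expect("1"))

    tree = parse_a()
    if pos != len(text):
        raise ParseError(f"unexpected input at position {pos}")
    return tree
```

The tree is built from the root down: `parse_a` creates the A node before any of its children exist, and the parser never backtracks because each decision is fixed by the next input symbol.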

33

- Semantics indicate the meaning of a program

- What do the symbols just parsed actually say to do?

- Two different kinds of semantics

- Static Semantics

- Almost an extension of program syntax

- Deals with structure more than meaning, but at a meta level

- Handles structural details that are difficult or impossible to handle with the parser

- Ex: Has variable X been declared prior to its use?

- Ex: Do variable types match?
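
The declared-before-use check can be sketched as a single pass over an already parsed program. The representation of a program as a list of `("declare", name)` and `("use", name)` pairs is a simplification assumed for this example, as is the function name; a real compiler would walk its parse tree and maintain a symbol table with scopes.

```python
def check_declared_before_use(statements):
    """Return (position, name) for every use that precedes its declaration.

    statements is a list of ("declare", name) or ("use", name) pairs.
    """
    declared = set()
    errors = []
    for position, (action, name) in enumerate(statements):
        if action == "declare":
            declared.add(name)
        elif action == "use" and name not in declared:
            errors.append((position, name))
    return errors


# Hypothetical program: x is declared first, y is used before its declaration.
program = [("declare", "x"), ("use", "x"), ("use", "y"), ("declare", "y")]
errors = check_declared_before_use(program)
```

Every statement in `program` is syntactically fine on its own, which is why this kind of check is handled after parsing rather than by the grammar.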

34

- Dynamic Semantics (often just called semantics)

- What does the syntax mean?

- Ex: Control statements

- Ex: Parameter passing

- Programmer needs to know meaning of statements before he/she can use language effectively