Agnostically Learning Decision Trees PowerPoint PPT Presentation

1 / 48

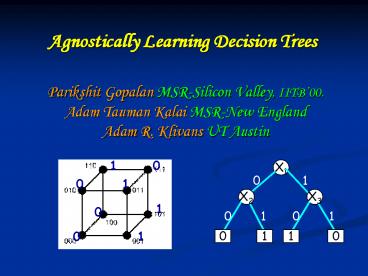

Title: Agnostically Learning Decision Trees

1

Agnostically Learning Decision Trees

Parikshit Gopalan, MSR-Silicon Valley (IITB '00)

Adam Tauman Kalai, MSR-New England

Adam R. Klivans, UT Austin

[Figure: a decision tree with root $X_1$ and leaves labeled 0 and 1]

2

Computational Learning

$f: \{0,1\}^n \to \{0,1\}$

$(x, f(x))$

Learning: predict $f$ from examples.

5

Valiant's Model

$f: \{0,1\}^n \to \{0,1\}$

Halfspaces

[Figure: labeled examples $(x, f(x))$ separated by a halfspace]

Assumption: $f$ comes from a nice concept class.

6

Valiant's Model

$f: \{0,1\}^n \to \{0,1\}$

Decision Trees

[Figure: labeled examples $(x, f(x))$ and a decision tree rooted at $X_1$]

Assumption: $f$ comes from a nice concept class.

7

The Agnostic Model [Kearns-Schapire-Sellie '94]

$f: \{0,1\}^n \to \{0,1\}$

Decision Trees

$(x, f(x))$

No assumptions about $f$. The learner should do as well as the best decision tree.
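The requirement to "do as well as the best decision tree" can be made precise in a standard way under the uniform distribution used in this talk. The symbols $\mathcal{T}$ (the class of decision trees being competed against), $h$ (the learner's hypothesis) and $\epsilon$ (the accuracy parameter) are our notation, not the slide's.

```latex
\mathrm{opt} = \min_{T \in \mathcal{T}} \Pr_x\bigl[T(x) \ne f(x)\bigr],
\qquad
\Pr_x\bigl[h(x) \ne f(x)\bigr] \le \mathrm{opt} + \epsilon .
```

Nothing is assumed about $f$. Only the benchmark $\mathrm{opt}$ depends on the concept class.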


9

Agnostic Model = Noisy Learning

$f: \{0,1\}^n \to \{0,1\}$

- Concept ↔ Message
- Truth table ↔ Encoding
- Function $f$ ↔ Received word
- Coding: recover the message.
- Learning: predict $f$.

10

Uniform Distribution Learning for Decision Trees

- Noiseless setting:
  - No queries: $n^{\log n}$ [Ehrenfeucht-Haussler '89].
  - With queries: poly(n) [Kushilevitz-Mansour '91].
- Agnostic setting: polynomial time, uses queries [G.-Kalai-Klivans '08].

Reconstruction for sparse real polynomials in the l1 norm.

11

The Fourier Transform Method

- Powerful tool for uniform distribution learning.
- Introduced by Linial-Mansour-Nisan.
- Small-depth circuits [Linial-Mansour-Nisan '89]
- DNFs [Jackson '95]
- Decision trees [Kushilevitz-Mansour '94, O'Donnell-Servedio '06, G.-Kalai-Klivans '08]
- Halfspaces, intersections [Klivans-O'Donnell-Servedio '03, Kalai-Klivans-Mansour-Servedio '05]
- Juntas [Mossel-O'Donnell-Servedio '03]
- Parities [Feldman-G.-Khot-Ponnuswami '06]

12

The Fourier Polynomial

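- Let $f: \{-1,1\}^n \to \{-1,1\}$.
- Write $f$ as a polynomial.
- AND $= \tfrac12 + \tfrac12 X_1 + \tfrac12 X_2 - \tfrac12 X_1 X_2$
- Parity $= X_1 X_2$
- Parity of $\alpha \subseteq [n]$: $\chi_\alpha(x) = \prod_{i \in \alpha} X_i$
- Write $f(x) = \sum_\alpha c(\alpha)\,\chi_\alpha(x)$.
- $\sum_\alpha c(\alpha)^2 = 1$.

[Figure: $f$ in the standard basis vs. in the basis of parities]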

$c(\alpha)^2$ = weight of $\alpha$.
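The brute-force sketch below checks the AND expansion and the identity $\sum_\alpha c(\alpha)^2 = 1$ numerically by computing every $c(\alpha) = \mathbb{E}_x[f(x)\chi_\alpha(x)]$ exactly. It assumes that $-1$ encodes True, which is the convention under which the slide's AND expansion holds. Variables are indexed from 0, so `x[0]` plays the role of $X_1$. The function names are ours.

```python
from fractions import Fraction
from itertools import product


def chi(alpha, x):
    """Parity chi_alpha(x) = product of x_i over i in alpha, for x in {-1,1}^n."""
    result = 1
    for i in alpha:
        result *= x[i]
    return result


def fourier_coefficients(f, n):
    """Brute force: c(alpha) = E_x[f(x) chi_alpha(x)] under the uniform distribution."""
    points = list(product((-1, 1), repeat=n))
    coeffs = {}
    for mask in product((0, 1), repeat=n):
        alpha = tuple(i for i in range(n) if mask[i])
        total = sum(f(x) * chi(alpha, x) for x in points)
        coeffs[alpha] = Fraction(total, len(points))
    return coeffs


def AND(x):
    # Assumed convention: -1 encodes True, so AND is -1 only when both inputs are -1.
    return -1 if x[0] == -1 and x[1] == -1 else 1


c = fourier_coefficients(AND, 2)
weight = sum(v * v for v in c.values())  # Parseval: the total weight is 1
# c[()] = c[(0,)] = c[(1,)] = 1/2 and c[(0, 1)] = -1/2
```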

14

Low-Degree Functions

- Low-degree functions: most of the weight lies on small subsets.
- Examples: halfspaces, small-depth circuits.
- Low-degree algorithm [Linial-Mansour-Nisan]: finds the low-degree Fourier coefficients.

Least Squares Regression: find a low-degree $P$ minimizing $\mathbb{E}_x[(P(x) - f(x))^2]$.
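Here is a minimal sketch of the low-degree algorithm, assuming uniform random examples. The parities are orthonormal under the uniform distribution, so the least-squares polynomial of degree at most $d$ is obtained by estimating each $c(\alpha)$ with $|\alpha| \le d$ as an empirical average of $f(x)\chi_\alpha(x)$. The sample size, the seed and the majority-of-three test function are our illustrative choices.

```python
import random
from itertools import combinations


def chi(alpha, x):
    """Parity chi_alpha(x) = product of x_i over i in alpha."""
    p = 1
    for i in alpha:
        p *= x[i]
    return p


def low_degree_algorithm(f, n, d, samples, seed=0):
    """Estimate every c(alpha) with |alpha| <= d from uniform random examples (x, f(x)).

    Because parities are orthonormal, the degree-<= d least-squares polynomial is the
    degree-<= d part of the Fourier expansion; here each coefficient is estimated.
    """
    rng = random.Random(seed)
    examples = []
    for _ in range(samples):
        x = tuple(rng.choice((-1, 1)) for _ in range(n))
        examples.append((x, f(x)))
    coeffs = {}
    for k in range(d + 1):
        for alpha in combinations(range(n), k):
            coeffs[alpha] = sum(y * chi(alpha, x) for x, y in examples) / samples
    return coeffs


def evaluate(coeffs, x):
    """P(x) = sum over alpha of c(alpha) chi_alpha(x)."""
    return sum(c * chi(alpha, x) for alpha, c in coeffs.items())


def majority3(x):
    # Majority of three variables: a halfspace, one of the low-degree examples above.
    return 1 if x[0] + x[1] + x[2] > 0 else -1


P = low_degree_algorithm(majority3, n=3, d=1, samples=20000)
```

The true degree-1 coefficients of majority on three variables are all $\tfrac12$ and the constant term is 0, so the sign of the fitted $P$ recovers the function.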

15

Sparse Functions

- Sparse functions: most of the weight lies on a few subsets.
- Example: decision trees ($t$ leaves $\Rightarrow$ $O(t)$ subsets).
- Sparse algorithm [Kushilevitz-Mansour '91].

Sparse l2 Regression: find a $t$-sparse $P$ minimizing $\mathbb{E}_x[(P(x) - f(x))^2]$.

Finding large coefficients: Hadamard decoding [Kushilevitz-Mansour '91, Goldreich-Levin '89].
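The l2 loss is basis independent. Once the Fourier coefficients are known, the best $t$-sparse approximation keeps the $t$ largest $|c(\alpha)|$, and its squared error is the weight left out. The sketch below computes the coefficients by brute force on a hypothetical 4-leaf tree. This brute force stands in for the Kushilevitz-Mansour search and is feasible only for small $n$.

```python
from itertools import product


def fourier(f, n):
    """Brute-force Fourier coefficients c(alpha) = E_x[f(x) chi_alpha(x)] on {-1,1}^n."""
    points = list(product((-1, 1), repeat=n))
    coeffs = {}
    for mask in product((0, 1), repeat=n):
        alpha = tuple(i for i in range(n) if mask[i])
        total = 0
        for x in points:
            value = f(x)
            for i in alpha:
                value *= x[i]
            total += value
        coeffs[alpha] = total / len(points)
    return coeffs


def best_t_sparse_l2(coeffs, t):
    """Keep the t largest |c(alpha)|. By Parseval, E[(P - f)^2] is the weight left out."""
    top = sorted(coeffs, key=lambda a: abs(coeffs[a]), reverse=True)[:t]
    kept = {a: coeffs[a] for a in top}
    error = sum(c * c for a, c in coeffs.items() if a not in kept)
    return kept, error


def tree(x):
    # Hypothetical decision tree with t = 4 leaves: query x0, then output x1 or x2.
    return x[1] if x[0] == 1 else x[2]


c = fourier(tree, 3)
support = [a for a, v in c.items() if v != 0]  # 4 nonzero coefficients, each +-1/2
P2, err2 = best_t_sparse_l2(c, 2)  # err2 == 0.5
P4, err4 = best_t_sparse_l2(c, 4)  # err4 == 0.0
```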

17

Agnostic Learning via l2 Regression?

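[Figure: target $f$ and the best tree]

- l2 regression:
  - Loss $(P(x) - f(x))^2$.
  - Pay 1 for indecision ($P(x) = 0$).
  - Pay 4 for a mistake ($P(x) = -f(x)$).
- l1 regression [KKMS '05]:
  - Loss $|P(x) - f(x)|$.
  - Pay 1 for indecision ($P(x) = 0$).
  - Pay 2 for a mistake ($P(x) = -f(x)$).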
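The costs above can be checked directly. In this sketch, indecision means predicting $P(x) = 0$ and a mistake means predicting $P(x) = -f(x)$. The helper names are ours.

```python
def l2_loss(p, y):
    """Square loss (P(x) - f(x))^2."""
    return (p - y) ** 2


def l1_loss(p, y):
    """Absolute loss |P(x) - f(x)|."""
    return abs(p - y)


for y in (-1, 1):
    indecision, mistake = 0, -y  # P(x) = 0 versus P(x) = -f(x)
    print(f"f(x)={y:+d}: l2 pays {l2_loss(indecision, y)} / {l2_loss(mistake, y)}, "
          f"l1 pays {l1_loss(indecision, y)} / {l1_loss(mistake, y)}")
```

Under square loss, a mistake costs four times as much as indecision. Under absolute loss, it costs twice as much.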


21

Agnostic Learning via l1 Regression

[Figure: target $f$ and the best tree]

Thm [KKMS '05]: l1 regression always gives a good predictor.

l1 regression for low-degree polynomials can be solved via linear programming.
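One standard way to write l1 regression over polynomials of degree at most $d$ as a linear program introduces a slack variable $z_j$ for each example. This assumes $m$ labeled examples $(x_j, f(x_j))$. It is a sketch of the usual least-absolute-deviation encoding, not necessarily the exact program used in [KKMS '05].

```latex
\min_{c,\,z}\ \frac{1}{m}\sum_{j=1}^{m} z_j
\quad \text{subject to} \quad
-z_j \;\le\; \sum_{|\alpha| \le d} c(\alpha)\,\chi_\alpha(x_j) - f(x_j) \;\le\; z_j,
\qquad j = 1, \dots, m.
```

At an optimum, each $z_j$ equals $|P(x_j) - f(x_j)|$, so the objective is the empirical l1 loss.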

22

Agnostically Learning Decision Trees

Sparse l1 Regression: find a $t$-sparse polynomial $P$ minimizing $\mathbb{E}_x[|P(x) - f(x)|]$.

- Why is this harder?
  - l2 is basis independent; l1 is not.
  - We don't know the support of $P$.

[G.-Kalai-Klivans]: polynomial-time algorithm for sparse l1 regression.

23

The Gradient-Projection Method

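$L_1(P,Q) = \sum_\alpha |c(\alpha) - d(\alpha)|, \qquad L_2(P,Q) = \Bigl(\sum_\alpha \bigl(c(\alpha) - d(\alpha)\bigr)^2\Bigr)^{1/2}$

$P(x) = \sum_\alpha c(\alpha)\,\chi_\alpha(x), \qquad Q(x) = \sum_\alpha d(\alpha)\,\chi_\alpha(x)$

Variables: the $c(\alpha)$'s. Constraint: $\sum_\alpha |c(\alpha)| \le t$. Minimize: $\mathbb{E}_x[|P(x) - f(x)|]$.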
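Here is a small sketch of the two distances and the constraint. It represents a polynomial as a dictionary that maps subsets $\alpha$ (tuples of variable indices) to coefficients. This representation and the function names are our assumptions. By Parseval, the L2 distance between coefficient vectors equals $\sqrt{\mathbb{E}_x[(P(x) - Q(x))^2]}$.

```python
import math


def l1_distance(c, d):
    """L1(P, Q) = sum over alpha of |c(alpha) - d(alpha)|; missing keys count as 0."""
    keys = set(c) | set(d)
    return sum(abs(c.get(a, 0.0) - d.get(a, 0.0)) for a in keys)


def l2_distance(c, d):
    """L2(P, Q) = (sum over alpha of (c(alpha) - d(alpha))^2)^(1/2)."""
    keys = set(c) | set(d)
    return math.sqrt(sum((c.get(a, 0.0) - d.get(a, 0.0)) ** 2 for a in keys))


def feasible(c, t):
    """The constraint of the program: sum over alpha of |c(alpha)| <= t."""
    return sum(abs(v) for v in c.values()) <= t


P = {(): 0.5, (0,): 0.5}
Q = {(0,): -0.5, (1,): 1.0}
```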

25

The Gradient-Projection Method

Projection

Variables: the $c(\alpha)$'s. Constraint: $\sum_\alpha |c(\alpha)| \le t$. Minimize: $\mathbb{E}_x[|P(x) - f(x)|]$.

27

The Gradient

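[Figure: $f(x)$ and the current $P(x)$]

Increase $P(x)$ if it is too low; decrease $P(x)$ if it is too high.

- $g(x) = \mathrm{sgn}[f(x) - P(x)]$.
- $P(x) \leftarrow P(x) + \eta\, g(x)$, with step size $\eta$.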
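The gradient step can be sketched on explicit truth tables. This assumes $f$ and $P$ are stored as lists of values over the points of the cube, which is our representation and is practical only for small $n$. With $g(x) = \mathrm{sgn}[f(x) - P(x)]$, the update moves every value of $P$ toward $f$.

```python
def sgn(v):
    """Sign function with sgn(0) = 0."""
    return (v > 0) - (v < 0)


def gradient_step(P, f, eta):
    """One step of P(x) <- P(x) + eta * g(x) with g(x) = sgn[f(x) - P(x)].

    Up to the 1/2^n normalization, -g is a subgradient of E_x|P(x) - f(x)| with
    respect to the values P(x), so this is a subgradient descent step.
    """
    g = [sgn(fx - px) for px, fx in zip(P, f)]
    return [px + eta * gx for px, gx in zip(P, g)], g


def l1_loss(P, f):
    """E_x|P(x) - f(x)| under the uniform distribution."""
    return sum(abs(px - fx) for px, fx in zip(P, f)) / len(f)


P = [0.0, 0.0, 0.5, -1.0]
f = [1, -1, 1, 1]
P_next, g = gradient_step(P, f, eta=0.25)
```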

30

Projection onto the L1 ball

Currently $\sum_\alpha |c(\alpha)| > t$. Want $\sum_\alpha |c(\alpha)| \le t$.

32

Projection onto the L1 ball

- Below cutoff: set to 0.
- Above cutoff: subtract the cutoff from the magnitude.
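The cutoff rule above is the Euclidean projection onto the L1 ball. The sketch below finds the cutoff with a common sort-based method; this is a standard technique, and we have not confirmed that the authors compute it this way. It sorts the magnitudes, finds the largest prefix whose entries stay above the running cutoff, then sets smaller coefficients to 0 and subtracts the cutoff from the rest.

```python
def project_l1_ball(c, t):
    """Euclidean projection of a coefficient dict onto {d : sum_alpha |d(alpha)| <= t}.

    Finds a single cutoff theta: coefficients with |c| below theta are set to 0, and
    the rest have theta subtracted from their magnitude (sign preserved).
    """
    if t <= 0:
        raise ValueError("t must be positive")
    if sum(abs(v) for v in c.values()) <= t:
        return dict(c)  # already inside the L1 ball
    mags = sorted((abs(v) for v in c.values()), reverse=True)
    cumulative, cutoff = 0.0, 0.0
    for k, m in enumerate(mags, start=1):
        cumulative += m
        theta = (cumulative - t) / k
        if m <= theta:
            break  # this and all smaller magnitudes fall below the cutoff
        cutoff = theta
    result = {}
    for alpha, v in c.items():
        shrunk = abs(v) - cutoff
        if shrunk > 0:  # above cutoff: subtract; below cutoff: set to 0 (omitted)
            result[alpha] = shrunk if v > 0 else -shrunk
    return result
```

For example, projecting `{"a": 3.0, "b": -1.0, "c": 0.5}` onto the ball of radius 2 uses cutoff 1 and keeps only `"a"` with value 2.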

34

Analysis of Gradient-Projection [Zinkevich '03]

- Progress measure: squared L2 distance from the optimum $P^*$.
- Key equation:
  $\|P_t - P^*\|^2 - \|P_{t+1} - P^*\|^2 \ge 2\eta\,\bigl(L(P_t) - L(P^*)\bigr) - \eta^2$
  - Left side: progress made in this step.
  - $L(P_t) - L(P^*)$: how suboptimal the current solution is.
- Within $\epsilon$ of optimal in $1/\epsilon^2$ iterations.
- A good L2 approximation to $P_t$ suffices.
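The key equation follows from a few standard steps. The sketch below assumes that $P_{k+1}$ is the projection of $P_k + \eta g_k$ onto the L1 ball and that $-g_k$ is a subgradient of $L(P) = \mathbb{E}_x|P(x) - f(x)|$ at $P_k$. Norms are L2 norms of coefficient vectors, which equal $\sqrt{\mathbb{E}_x[\cdot^2]}$ by Parseval. We write $P_k$ for the $k$-th iterate to avoid a clash with the sparsity bound $t$, and $D = \|P_0 - P^*\|$.

```latex
\begin{aligned}
\|P_{k+1} - P^*\|^2
  &\le \|P_k + \eta g_k - P^*\|^2
  && \text{(projection onto a convex set containing } P^*\text{)}\\
  &= \|P_k - P^*\|^2 + 2\eta\,\langle g_k,\, P_k - P^*\rangle + \eta^2 \|g_k\|^2\\
  &\le \|P_k - P^*\|^2 - 2\eta\,\bigl(L(P_k) - L(P^*)\bigr) + \eta^2
  && \bigl(\text{convexity of } L;\ \|g_k\|^2 = \mathbb{E}_x[g_k(x)^2] \le 1\bigr)\\[6pt]
\min_{k < T} L(P_k) - L(P^*)
  &\le \frac{1}{T}\sum_{k=0}^{T-1}\bigl(L(P_k) - L(P^*)\bigr)
  \le \frac{D^2}{2\eta T} + \frac{\eta}{2}
  && \text{(summing the first bound over } k = 0, \dots, T-1\text{)}
\end{aligned}
```

Taking $\eta = \epsilon$ and $T = D^2/\epsilon^2$ gives a solution within $\epsilon$ of optimal. Starting from $P_0 = 0$ gives $D \le \|P^*\|_1 \le t$.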

35

Gradient

[Figure: $f(x)$ and $P(x)$]

- $g(x) = \mathrm{sgn}[f(x) - P(x)]$.

Projection

36

The Gradient

[Figure: $f(x)$ and $P(x)$]

- $g(x) = \mathrm{sgn}[f(x) - P(x)]$.
- Compute a sparse approximation $g' = \mathrm{KM}(g)$.
- Is $g'$ a good L2 approximation to $g$?
- No. Initially $g = f$.
- $L_2(g, g')$ can be as large as 1.
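The answer "No" can be seen numerically. Assuming a random ±1 target on $n = 10$ variables (our choice), the sketch computes all Fourier coefficients with a fast Walsh-Hadamard transform. It then measures $L_2(g, g')$ for the best $t$-sparse $g'$, which is at least as good as any $t$-sparse output KM could return. For a random function the weight is spread out, so the error stays close to 1. For a parity it is 0.

```python
import math
import random


def fourier_from_truth_table(values):
    """Fast Walsh-Hadamard transform, normalized so the output is c(alpha).

    Index i encodes the point x with x_j = (-1)^(bit j of i); output index a encodes
    alpha = {j : bit j of a is 1}, so output[a] = E_x[f(x) chi_alpha(x)].
    """
    a = [float(v) for v in values]
    h = 1
    while h < len(a):
        for start in range(0, len(a), 2 * h):
            for i in range(start, start + h):
                a[i], a[i + h] = a[i] + a[i + h], a[i] - a[i + h]
        h *= 2
    return [v / len(a) for v in a]


def best_sparse_l2_error(coeffs, t):
    """L2(g, g') for the best t-sparse g': square root of the weight left out."""
    weights = sorted((c * c for c in coeffs), reverse=True)
    return math.sqrt(sum(weights[t:]))


rng = random.Random(1)
n, t = 10, 4
f = [rng.choice((-1, 1)) for _ in range(2 ** n)]  # a random +-1 target f
g = f  # with P = 0, g(x) = sgn[f(x) - 0] = f(x)
err = best_sparse_l2_error(fourier_from_truth_table(g), t)
```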

37

Sparse l1 Regression

Approximate Gradient

Variables: the $c(\alpha)$'s. Constraint: $\sum_\alpha |c(\alpha)| \le t$. Minimize: $\mathbb{E}_x[|P(x) - f(x)|]$.

38

Sparse l1 Regression

Projection Compensates

Variables: the $c(\alpha)$'s. Constraint: $\sum_\alpha |c(\alpha)| \le t$. Minimize: $\mathbb{E}_x[|P(x) - f(x)|]$.

39

KM as l2 Approximation

The KM Algorithm

Input: $g: \{-1,1\}^n \to \{-1,1\}$, and $t$.

Output: a $t$-sparse polynomial $g'$ minimizing $\mathbb{E}_x[(g(x) - g'(x))^2]$.

Run time: poly(n, t).

40

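KM as L∞ Approximation

The KM Algorithm

Input: a Boolean function $g = \sum_\alpha c(\alpha)\,\chi_\alpha(x)$, and an error bound $\epsilon$.

Output: an approximation $g' = \sum_\alpha c'(\alpha)\,\chi_\alpha(x)$ such that $|c(\alpha) - c'(\alpha)| \le \epsilon$ for all $\alpha \subseteq [n]$.

Run time: poly(n, 1/ε).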

41

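KM as L∞ Approximation

Only $1/\epsilon^2$ coefficients can be larger than $\epsilon$, since $\sum_\alpha c(\alpha)^2 = 1$.

- Identify the coefficients larger than $\epsilon$.
- Estimate them via sampling; set the rest to 0.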
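The following is a simplified sketch of the KM search in this L∞ form, assuming query access to $g$:

- Variables are fixed one at a time.
- A bucket of coefficients sharing a prefix $\alpha$ is kept only if its estimated total weight is at least $\epsilon^2/2$.
- Bucket weights are estimated with the identity $\sum_\beta c(\alpha\beta)^2 = \mathbb{E}_{x,y,z}[g(y,x)\,g(z,x)\,\chi_\alpha(y)\,\chi_\alpha(z)]$.
- The surviving coefficients are then estimated by sampling.

The sample size, the threshold and all names are illustrative choices, not parameters from the paper.

```python
import random


def chi_bits(mask, bits):
    """chi_alpha(x) with alpha = {j : mask[j] = 1} and x_j = (-1)^bits[j]."""
    return -1 if sum(m & b for m, b in zip(mask, bits)) % 2 else 1


def random_bits(rng, k):
    """A uniformly random setting of k variables, as a tuple of bits."""
    return tuple(rng.randint(0, 1) for _ in range(k))


def bucket_weight(g, n, prefix, samples, rng):
    """Estimate the sum over beta of c(prefix + beta)^2 using queries to g.

    y and z are independent settings of the first len(prefix) variables, and x is a
    shared setting of the remaining ones.
    """
    k = len(prefix)
    total = 0
    for _ in range(samples):
        x = random_bits(rng, n - k)
        y, z = random_bits(rng, k), random_bits(rng, k)
        total += g(y + x) * g(z + x) * chi_bits(prefix, y) * chi_bits(prefix, z)
    return total / samples


def km(g, n, eps, samples=4000, seed=0):
    """Hypothetical KM-style search returning estimates of the large coefficients.

    Every coefficient not in the returned dict is treated as 0. With exact weights,
    at most 2/eps^2 buckets survive per level, since all buckets at a level sum to 1.
    """
    rng = random.Random(seed)
    frontier = [()]
    for _ in range(n):
        children = [p + (b,) for p in frontier for b in (0, 1)]
        frontier = [c for c in children
                    if bucket_weight(g, n, c, samples, rng) >= eps * eps / 2]
    approx = {}
    for alpha in frontier:  # identified coefficients: estimate them via sampling
        xs = [random_bits(rng, n) for _ in range(samples)]
        approx[alpha] = sum(g(x) * chi_bits(alpha, x) for x in xs) / samples
    return approx


def and01(bits):
    # AND of the first two variables (bit 1 means x_j = -1, i.e. True); others ignored.
    return -1 if bits[0] == 1 and bits[1] == 1 else 1


approx = km(and01, n=4, eps=0.3)
```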

43

Projection Preserves L∞ Distance

L∞ distance is at most $2\epsilon$ after projection. Both cutoff lines stop within $\epsilon$ of each other.

44

Projection Preserves L∞ Distance

L∞ distance is at most $2\epsilon$ after projection. Both cutoff lines stop within $\epsilon$ of each other; otherwise, Blue dominates Red.

45

Projection Preserves L∞ Distance

L∞ distance is at most $2\epsilon$ after projection. Projecting onto the L1 ball does not increase L∞ distance by more than a factor of 2.

46

Projection Preserves L∞ Distance

L∞ distance is at most $2\epsilon$ after projection. Projecting onto the L1 ball preserves L∞ distance up to a factor of 2.
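The $2\epsilon$ claim can be spot-checked numerically. The sketch below projects a random coefficient vector (Red) and a copy perturbed by at most $\epsilon$ in every coordinate (Blue) onto the same L1 ball. It then records how far apart the two cutoffs and the two projections end up. The dimension, radius, $\epsilon$ and trial count are arbitrary choices. A random test illustrates the claim but does not prove it.

```python
import random


def project_l1_ball(c, t):
    """Euclidean projection of a coefficient list onto the L1 ball of radius t.

    Returns (projection, cutoff); the cutoff is 0 when c is already inside the ball.
    """
    if sum(abs(v) for v in c) <= t:
        return list(c), 0.0
    mags = sorted((abs(v) for v in c), reverse=True)
    cumulative, cutoff = 0.0, 0.0
    for k, m in enumerate(mags, start=1):
        cumulative += m
        theta = (cumulative - t) / k
        if m <= theta:
            break
        cutoff = theta
    projection = [(1.0 if v > 0 else -1.0) * max(abs(v) - cutoff, 0.0) for v in c]
    return projection, cutoff


def compare_projections(trials=2000, dim=8, t=3.0, eps=0.05, seed=0):
    """Project Red and a perturbed copy Blue (L-infinity distance <= eps); record gaps."""
    rng = random.Random(seed)
    worst_cutoff_gap = worst_coordinate_gap = 0.0
    for _ in range(trials):
        red = [rng.uniform(-2.0, 2.0) for _ in range(dim)]
        blue = [v + rng.uniform(-eps, eps) for v in red]
        p_red, cut_red = project_l1_ball(red, t)
        p_blue, cut_blue = project_l1_ball(blue, t)
        worst_cutoff_gap = max(worst_cutoff_gap, abs(cut_red - cut_blue))
        worst_coordinate_gap = max(worst_coordinate_gap,
                                   max(abs(a - b) for a, b in zip(p_red, p_blue)))
    return worst_cutoff_gap, worst_coordinate_gap


cutoff_gap, coordinate_gap = compare_projections()
```

The cutoffs move by at most $\epsilon$. Each projected coefficient is therefore off by at most $\epsilon$ from the perturbation plus $\epsilon$ from the cutoff shift.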

47

Sparse l1 Regression

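- $L_\infty(P, P') \le 2\epsilon$
- $L_1(P, P') \le 2t$
- $L_2(P, P')^2 \le 4\epsilon t$

[Figure: $P$ and $P'$]

Can take $\epsilon = 1/t^2$.

Variables: the $c(\alpha)$'s. Constraint: $\sum_\alpha |c(\alpha)| \le t$. Minimize: $\mathbb{E}_x[|P(x) - f(x)|]$.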
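The third bound follows from the first two. Write $c$ and $c'$ for the coefficients of $P$ and $P'$, and read the first bound as the per-coefficient (L∞) guarantee that KM and the projection step provide. Then the L2 distance is controlled by the product of the L∞ and L1 distances. The L1 bound holds because both polynomials lie in the L1 ball of radius $t$.

```latex
L_2(P, P')^2 = \sum_\alpha \bigl(c(\alpha) - c'(\alpha)\bigr)^2
  \le \Bigl(\max_\alpha \bigl|c(\alpha) - c'(\alpha)\bigr|\Bigr) \sum_\alpha \bigl|c(\alpha) - c'(\alpha)\bigr|
  \le 2\epsilon \cdot 2t = 4\epsilon t .
```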

48

Agnostically Learning Decision Trees

Sparse L1 Regression: find a sparse polynomial $P$ minimizing $\mathbb{E}_x[|P(x) - f(x)|]$.

- [G.-Kalai-Klivans '08]:
  - Can get within $\epsilon$ of the optimum in poly(t, 1/ε) iterations.
  - Algorithm for sparse l1 regression.
  - First polynomial-time algorithm for agnostically learning sparse polynomials.
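Putting the pieces together, here is an end-to-end sketch of the gradient-projection loop on a full truth table. It simplifies under stated assumptions: the exact gradient coefficients come from a fast Walsh-Hadamard transform in place of KM, so it only runs for small $n$. The step size, iteration count and test targets are our choices. The test checks the Zinkevich-style bound $\min_k L(P_k) - L(P^*) \le D^2/(2\eta T) + \eta/2$, with $D \le t = 1$.

```python
def fwht(values):
    """Unnormalized fast Walsh-Hadamard transform (applying it twice multiplies by len)."""
    a = [float(v) for v in values]
    h = 1
    while h < len(a):
        for start in range(0, len(a), 2 * h):
            for i in range(start, start + h):
                a[i], a[i + h] = a[i] + a[i + h], a[i] - a[i + h]
        h *= 2
    return a


def project_l1_ball(c, t):
    """Euclidean projection of a coefficient list onto {d : sum |d| <= t}."""
    if sum(abs(v) for v in c) <= t:
        return list(c)
    mags = sorted((abs(v) for v in c), reverse=True)
    cumulative, cutoff = 0.0, 0.0
    for k, m in enumerate(mags, start=1):
        cumulative += m
        theta = (cumulative - t) / k
        if m <= theta:
            break
        cutoff = theta
    return [(1.0 if v > 0 else -1.0) * max(abs(v) - cutoff, 0.0) for v in c]


def sgn(v):
    return (v > 0) - (v < 0)


def sparse_l1_regression(f_values, t, eta, iterations):
    """Projected subgradient descent for min E_x|P(x) - f(x)| s.t. sum |c(alpha)| <= t.

    f_values is the truth table of f (index i <-> x_j = (-1)^(bit j of i)).
    Returns the best coefficient vector seen and its l1 loss.
    """
    N = len(f_values)
    coeffs = [0.0] * N
    best_coeffs, best_loss = coeffs, float("inf")
    for _ in range(iterations):
        P = fwht(coeffs)  # P(x) = sum over alpha of c(alpha) chi_alpha(x)
        loss = sum(abs(p - y) for p, y in zip(P, f_values)) / N
        if loss < best_loss:
            best_coeffs, best_loss = coeffs, loss
        g = [sgn(y - p) for p, y in zip(P, f_values)]  # g(x) = sgn[f(x) - P(x)]
        g_hat = [v / N for v in fwht(g)]  # exact coefficients of g (stand-in for KM)
        coeffs = project_l1_ball([c + eta * d for c, d in zip(coeffs, g_hat)], t)
    return best_coeffs, best_loss


parity = [(-1) ** bin(i & 3).count("1") for i in range(16)]  # chi_{x0,x1} with n = 4
noisy = list(parity)
noisy[5] = -noisy[5]  # flip one label, so E|chi - f| = 2/16 = 0.125
_, loss_clean = sparse_l1_regression(parity, t=1.0, eta=0.05, iterations=400)
_, loss_noisy = sparse_l1_regression(noisy, t=1.0, eta=0.05, iterations=400)
```

With $\eta = 0.05$ and $T = 400$, the bound gives at most $0.05$ above the best achievable loss. That best loss is 0 for the clean parity and at most $0.125$ for the noisy one.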

49

l1 Regression from l2 Regression

Function $f: D \to \{-1,1\}$, orthonormal basis $\mathcal{B}$.

Sparse l2 Regression: find a $t$-sparse polynomial $P$ minimizing $\mathbb{E}_x[(P(x) - f(x))^2]$.

Sparse l1 Regression: find a $t$-sparse polynomial $P$ minimizing $\mathbb{E}_x[|P(x) - f(x)|]$.

[G.-Kalai-Klivans '08]: Given a solution to l2 regression, we can solve l1 regression.

50

Agnostically Learning DNFs?

- Problem: Can we agnostically learn DNFs in polynomial time (uniform distribution, with queries)?
- Noiseless setting: Jackson's Harmonic Sieve.
- Implies a weak learner for depth-3 circuits.
- Beyond current Fourier techniques.

Thank You!