Confirmatory Factor Analysis PowerPoint PPT Presentation

1 / 167

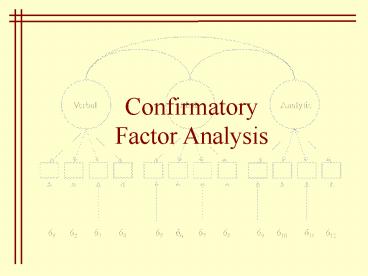

Title: Confirmatory Factor Analysis

1

Confirmatory Factor Analysis

2

In principal components analysis and factor analysis, the goals of the estimation procedure determine the solution (e.g., variance-maximizing linear combinations or simple structure). In confirmatory factor analysis, the theory or expectations of the researcher determine the solution. The researcher anticipates rather than discovers a structure to the data.

3

The quality of those expectations is then judged by how well they match the original data (e.g., the variance-covariance matrix for the observed variables). The expectations imply certain things about the variance-covariance matrix, which can be tested for goodness of fit.

4

In confirmatory factor analysis, expectations are articulated very clearly. Those expectations then dictate the elements of certain matrices that reflect the relations between latent variables (i.e., common factors) and observed variables, the variances and covariances for latent variables, and the variances and covariances for specific factors (i.e., errors).

5

Some elements of these matrices are fixed at certain values (e.g., 0) and others are free to be estimated. Once the matrices have been estimated, they can be used to reconstruct the variance-covariance matrix for the original variables. The similarity of this reconstructed matrix to the actual variance-covariance matrix indicates the quality of the solution and of the original guiding expectations.

6

Expectations can be represented in a measurement model that indicates how latent variables are related to observed variables and to other latent variables.

[Path diagram: latent variables ξ1 and ξ2; observed variables X1 to X5, each with its own error term δ1 to δ5.]

7

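A measurement model implies that the observed variables are weighted linear combinations of the latent variables.

[Diagram labels: loadings λ11, λ21, and λ31 connect ξ1 to X1, X2, and X3; loadings λ42 and λ52 connect ξ2 to X4 and X5.]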

8

These linear combinations resemble those from exploratory factor analysis. A key difference is that not every common factor contributes to every observed variable: the underlying theory has dictated that some weights are 0. If that assumption is wrong, then the ability to reconstruct the variances and covariances among the observed variables will suffer.

9

The assumptions about the weights are represented in the Λ matrix, with some values fixed to be 0 and others free to be estimated. These weights represent the relations between the observed variables and the latent variables. They contain the same kind of information as the structure and pattern matrices in exploratory factor analysis.

10

The assumptions about the weights may resemble simple structure from exploratory factor analysis, but that is not required. The particular weights that are proposed should reflect the underlying theory being tested.

11

The guiding theoretical model also makes assumptions about the number of latent variables and the relationships among them. That defines the Φ matrix, the variance-covariance matrix for the latent variables.

12

The model assumes that each person has a score on each latent variable and a score on an error latent variable for each measure.

13

Because of expectations about the weights, the linear combinations can be simplified.
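A sketch of the simplified measurement equations, assuming LISREL notation and the loadings labeled in the diagram (λ11, λ21, λ31 for ξ1; λ42, λ52 for ξ2). In general each Xi could load on both factors; fixing the other five of the ten possible loadings to 0 leaves the form below, written compactly as x = Λξ + δ:

```latex
\begin{aligned}
X_1 &= \lambda_{11}\xi_1 + \delta_1 \\
X_2 &= \lambda_{21}\xi_1 + \delta_2 \\
X_3 &= \lambda_{31}\xi_1 + \delta_3 \\
X_4 &= \lambda_{42}\xi_2 + \delta_4 \\
X_5 &= \lambda_{52}\xi_2 + \delta_5
\end{aligned}
\qquad
\mathbf{x} = \Lambda\boldsymbol{\xi} + \boldsymbol{\delta},
\quad
\Lambda =
\begin{pmatrix}
\lambda_{11} & 0 \\
\lambda_{21} & 0 \\
\lambda_{31} & 0 \\
0 & \lambda_{42} \\
0 & \lambda_{52}
\end{pmatrix}
```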

14

The model also makes assumptions about the variances and covariances of the error latent variables. These are contained in the Θδ matrix. When the errors are assumed to be uncorrelated, the off-diagonal elements of this matrix are zero. This assumption is often made but is often unrealistic. Confirmatory factor models allow correlated errors to be modeled explicitly.

15

This model implies uncorrelated errors.

16

The covariances (and variances) among the observed variables can be estimated from the latent variable parameters. If the model-implied parameters are correct, then the reproduced variances and covariances (Σ) should be close to the observed variances and covariances (S).

17

More generally,

$$\Sigma = \Lambda\Phi\Lambda' + \Theta_\delta$$

Reminder: X is a linear combination of latent variables, so its variance can be obtained from the variance-covariance matrix for the latent variables along with the weights used to create the linear combination.
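The general result, Σ = ΛΦΛ′ + Θδ, can be checked numerically. The sketch below assumes a hypothetical two-factor, five-indicator model like the one in the diagram, with made-up loadings, a latent correlation of 0.3, and uncorrelated errors. Matrices are plain Python lists of rows, so no external library is needed.

```python
# Sketch: model-implied covariance matrix Sigma = Lambda Phi Lambda' + Theta_delta
# for a hypothetical two-factor, five-indicator model (all values are made up).

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

def implied_cov(lam, phi, theta):
    """Return Lambda Phi Lambda' + Theta for list-of-rows matrices."""
    common = matmul(matmul(lam, phi), transpose(lam))
    return [[common[i][j] + theta[i][j] for j in range(len(theta))]
            for i in range(len(theta))]

# Zeros encode the theory: X1-X3 measure xi1 only, X4-X5 measure xi2 only.
lam = [[0.8, 0.0],
       [0.7, 0.0],
       [0.6, 0.0],
       [0.0, 0.9],
       [0.0, 0.5]]
# Standardized latent variables that correlate 0.3.
phi = [[1.0, 0.3],
       [0.3, 1.0]]
# Uncorrelated errors (diagonal Theta). Because each X loads on one
# standardized factor, 1 - lambda^2 gives every X a variance of 1.
uniq = [1.0 - sum(v * v for v in row) for row in lam]
theta = [[uniq[i] if i == j else 0.0 for j in range(5)] for i in range(5)]

sigma = implied_cov(lam, phi, theta)
for row in sigma:
    print(" ".join(f"{v:6.3f}" for v in row))
```

A zero in `lam` is a theoretical restriction; moving an off-diagonal entry of `theta` away from 0 is how a correlated error would enter the model.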

18

The estimation procedure most commonly used in confirmatory factor analysis is maximum likelihood estimation. This approach seeks the parameter estimates that maximize the probability of the data that were actually obtained. The approach is best understood by comparing it to the more familiar ordinary least squares approach to estimation.

19

In ordinary least squares, we seek a parameter estimate that minimizes an error function. The sample mean is a good example. No other location for central tendency (e.g., mode, median) does a better job of minimizing the following:

$$\sum_{i=1}^{N}(X_i - M)^2$$

Provided we find this rule satisfactory, the mean provides a good way of capturing the most typical score in a distribution.
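A quick numerical check of the least-squares property of the mean, using a small made-up set of scores and Python's standard `statistics` module: the sum of squared deviations is smallest around the mean.

```python
from statistics import mean, median, mode

def sse(data, center):
    """Sum of squared deviations of the scores from a proposed center."""
    return sum((x - center) ** 2 for x in data)

scores = [2, 3, 3, 4, 7, 9]  # hypothetical scores
for name, center in [("mean", mean(scores)),
                     ("median", median(scores)),
                     ("mode", mode(scores))]:
    print(f"{name:<6} center = {center:5.2f}   SSE = {sse(scores, center):6.2f}")
```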

20

Similarly, the regression coefficients in multiple regression are derived so that they minimize

$$\sum_{i=1}^{N}(Y_i - \hat{Y}_i)^2$$

Provided this rule is satisfactory, the regression weights are optimal for prediction.

21

As an alternative estimation procedure, maximum likelihood finds the parameter estimates that maximize the probability of the data. The maximum likelihood estimates for the mean and variance are the values that maximize the following:

$$L(\mu, \sigma^2) = \prod_{i=1}^{N}\frac{1}{\sqrt{2\pi\sigma^2}}\exp\left[-\frac{(X_i - \mu)^2}{2\sigma^2}\right]$$

22

Note the explicit assumption that the data are normally distributed. If that assumption is in error, then the normal probability density function will not provide an optimal solution to the problem.

23

Provided the data are normally distributed, the maximum likelihood estimates for μ and σ make the obtained data more likely than any other parameter estimates. The estimation process also produces standard errors, making hypothesis tests possible as well. But the validity of these hypothesis tests rests on the validity of the normality assumption.
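A minimal sketch of maximum likelihood for a single normal variable, using a hypothetical sample: the log of the likelihood is evaluated at the ML estimates (the sample mean, and a standard deviation computed with divisor N) and at nearby values, all of which give a lower log-likelihood.

```python
import math

def normal_loglik(data, mu, sigma):
    """Log of the product of normal densities evaluated at the data."""
    n = len(data)
    return (-n * math.log(sigma * math.sqrt(2 * math.pi))
            - sum((x - mu) ** 2 for x in data) / (2 * sigma ** 2))

data = [4.1, 5.3, 6.0, 4.8, 5.5, 6.7, 5.1]  # hypothetical sample
n = len(data)
mu_hat = sum(data) / n
# The ML variance estimate divides by N, not N - 1.
sigma_hat = math.sqrt(sum((x - mu_hat) ** 2 for x in data) / n)
best = normal_loglik(data, mu_hat, sigma_hat)
print(f"mu_hat = {mu_hat:.3f}, sigma_hat = {sigma_hat:.3f}, log L = {best:.3f}")

# Moving either estimate away from its ML value lowers the log-likelihood.
for d_mu, d_sigma in [(0.2, 0.0), (-0.2, 0.0), (0.0, 0.1), (0.0, -0.1)]:
    assert normal_loglik(data, mu_hat + d_mu, sigma_hat + d_sigma) < best
```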

24

The approach can be extended to multivariate data as well. We could seek the maximum likelihood estimates for a bivariate normal distribution.

25

Three bivariate normal distributions varying only in the value of ρ. The validity of estimates of ρ relies on the validity of the assumption of bivariate normality.

26

In the context of confirmatory factor analysis, maximum likelihood estimates represent model parameters that make the obtained data most likely, within the constraints imposed by the model.

27

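All of the model assumptions are contained in the reproduced variance-covariance matrix, Σ. Assuming multivariate normality, the probability density function is

$$f(\mathbf{x}) = (2\pi)^{-p/2}\,|\Sigma|^{-1/2}\exp\left[-\tfrac{1}{2}(\mathbf{x} - \boldsymbol{\mu})'\,\Sigma^{-1}(\mathbf{x} - \boldsymbol{\mu})\right]$$

where p is the number of observed variables and μ is the vector of means.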

28

The likelihood function that is maximized is thus

$$L = \prod_{i=1}^{N} f(\mathbf{x}_i)$$

29

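It is more convenient to work with the log of the likelihood function, and the function can be simplified. With μ replaced by the sample means and S the sample variance-covariance matrix (divisor N),

$$\ln L = -\frac{N}{2}\left[p\ln(2\pi) + \ln|\Sigma| + \operatorname{tr}(S\Sigma^{-1})\right]$$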

30

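The last part of this formula, SΣ⁻¹, tends toward an identity matrix as the reproduced variance-covariance matrix approaches the actual variance-covariance matrix, and its trace then approaches p. Comparing this log-likelihood with that of a saturated model (Σ = S) gives the maximum likelihood discrepancy function

$$F_{ML} = \ln|\Sigma| + \operatorname{tr}(S\Sigma^{-1}) - \ln|S| - p$$

which equals 0 when Σ = S and grows as the reproduced matrix departs from the obtained one. A chi-square test, χ² = (N - 1)F_ML, allows a test of the badness of fit of the reproduced and obtained covariance matrices.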

31

The quality of the original model and its ability to reproduce the actual variance-covariance matrix is more easily gauged by the goodness-of-fit index (GFI):

$$\text{GFI} = 1 - \frac{\operatorname{tr}\left[(\Sigma^{-1}S - I)^2\right]}{\operatorname{tr}\left[(\Sigma^{-1}S)^2\right]}$$

The numerator of the ratio tends toward zero as the reproduced variance-covariance matrix approaches the actual variance-covariance matrix. This index is similar to R² in multiple regression.
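The ML discrepancy function and the GFI can both be computed directly from S and Σ. This sketch assumes a hypothetical 3 × 3 observed correlation matrix, a one-factor reproduction of it with made-up loadings (0.8, 0.6, 0.5), and a hypothetical N = 200. The chi-square is taken as (N - 1)F_ML, which is consistent with the LISREL output for the mental abilities model (55.50 / 499 ≈ 0.11, its minimum fit function value). Matrix inversion uses a small Gauss-Jordan routine rather than a numerical library.

```python
import math

def inverse_and_det(m):
    """Gauss-Jordan elimination with partial pivoting; returns (inverse, determinant)."""
    n = len(m)
    a = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(m)]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        p = a[col][col]
        det *= p
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [row[n:] for row in a], det

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def trace(m):
    return sum(m[i][i] for i in range(len(m)))

def f_ml(s, sigma):
    """ML discrepancy ln|Sigma| + tr(S Sigma^-1) - ln|S| - p; 0 when Sigma equals S."""
    sigma_inv, det_sigma = inverse_and_det(sigma)
    _, det_s = inverse_and_det(s)
    return (math.log(det_sigma) + trace(matmul(s, sigma_inv))
            - math.log(det_s) - len(s))

def gfi(s, sigma):
    """ML goodness-of-fit index: 1 - tr[(Sigma^-1 S - I)^2] / tr[(Sigma^-1 S)^2]."""
    sigma_inv, _ = inverse_and_det(sigma)
    a = matmul(sigma_inv, s)
    d = [[a[i][j] - (1.0 if i == j else 0.0) for j in range(len(a))]
         for i in range(len(a))]
    return 1.0 - trace(matmul(d, d)) / trace(matmul(a, a))

# Hypothetical observed correlations and a one-factor reproduction of them
S = [[1.00, 0.50, 0.40],
     [0.50, 1.00, 0.35],
     [0.40, 0.35, 1.00]]
Sigma = [[1.00, 0.48, 0.40],   # loadings 0.8, 0.6, 0.5 with unit variances
         [0.48, 1.00, 0.30],
         [0.40, 0.30, 1.00]]
N = 200  # hypothetical sample size
F = f_ml(S, Sigma)
print(f"F_ML = {F:.4f}, chi-square = {(N - 1) * F:.2f}, GFI = {gfi(S, Sigma):.3f}")
```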

32

The estimation procedure can capitalize on chance, so the adjusted goodness-of-fit index (AGFI) was created to account for that. It is similar to the adjusted R² in multiple regression:

$$\text{AGFI} = 1 - \frac{p(p+1)}{2\,df}\,(1 - \text{GFI})$$
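The AGFI adjustment can be checked against the mental abilities output reported later, where p = 12 subtests give 78 distinct variances and covariances. Because the printed GFI values are rounded to two decimals, the recomputed AGFI values agree only to about two decimals. The PGFI helper assumes LISREL's parsimony weighting, df divided by p(p + 1)/2.

```python
def agfi(gfi, p, df):
    """Adjusted GFI: 1 - [p(p + 1) / (2 df)] * (1 - GFI) for p observed variables."""
    return 1.0 - (p * (p + 1) / (2.0 * df)) * (1.0 - gfi)

def pgfi(gfi, p, df):
    """Parsimony GFI: GFI weighted by df / [p(p + 1) / 2]."""
    return gfi * df / (p * (p + 1) / 2.0)

# GFI values and df printed in this deck for the mental abilities models (p = 12)
for g, df in [(0.98, 51), (0.93, 54), (0.84, 53)]:
    print(f"GFI = {g:.2f}, df = {df}: AGFI = {agfi(g, 12, df):.2f}")
print(f"PGFI for the three-factor model = {pgfi(0.98, 12, 51):.2f}")
```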

33

The mental abilities data set can be used to compare the exploratory and confirmatory approaches to factor analysis.

Hypothetical data (N = 500) were created for individuals completing a 12-section test of mental abilities. All variables are in standard form.

34

The scree test clearly shows the presence of three factors.

35

On average, the three factors extracted account for about half of the variance in the individual subtests.

36

Factor analysis accounts for less variance than principal components, and rotation shifts the variance accounted for by the factors.

37

The initial extraction . . .

38

Oblique rotation . . .

39

The correlations among the factors . . .

40

The confirmatory approach begins with an explicit model that implies the elements of the key matrices.

41

(No Transcript)

42

(No Transcript)

43

Parameter estimates.

44

Tests of significance for parameter estimates: t values.

45

- CHI-SQUARE WITH 51 DEGREES OF FREEDOM = 55.50 (P = 0.31)
- ESTIMATED NON-CENTRALITY PARAMETER (NCP) = 4.50
- 90 PERCENT CONFIDENCE INTERVAL FOR NCP = (0.0; 26.77)
- MINIMUM FIT FUNCTION VALUE = 0.11
- POPULATION DISCREPANCY FUNCTION VALUE (F0) = 0.0090
- 90 PERCENT CONFIDENCE INTERVAL FOR F0 = (0.0; 0.054)
- ROOT MEAN SQUARE ERROR OF APPROXIMATION (RMSEA) = 0.013
- 90 PERCENT CONFIDENCE INTERVAL FOR RMSEA = (0.0; 0.032)
- P-VALUE FOR TEST OF CLOSE FIT (RMSEA < 0.05) = 1.00
- EXPECTED CROSS-VALIDATION INDEX (ECVI) = 0.22
- 90 PERCENT CONFIDENCE INTERVAL FOR ECVI = (0.21; 0.26)
- ECVI FOR SATURATED MODEL = 0.31
- ECVI FOR INDEPENDENCE MODEL = 3.98
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12
- INDEPENDENCE AIC = 1986.12
- MODEL AIC = 109.50
- SATURATED AIC = 156.00
- INDEPENDENCE CAIC = 2048.69
- MODEL CAIC = 250.29
- SATURATED CAIC = 562.74
- ROOT MEAN SQUARE RESIDUAL (RMR) = 0.028
- STANDARDIZED RMR = 0.028
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97
- PARSIMONY GOODNESS OF FIT INDEX (PGFI) = 0.64
- NORMED FIT INDEX (NFI) = 0.97
- NON-NORMED FIT INDEX (NNFI) = 1.00
- PARSIMONY NORMED FIT INDEX (PNFI) = 0.75
- COMPARATIVE FIT INDEX (CFI) = 1.00
- INCREMENTAL FIT INDEX (IFI) = 1.00
- RELATIVE FIT INDEX (RFI) = 0.96
- CRITICAL N (CN) = 696.82
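Most of the indices in this list are simple functions of the two chi-square values, their df, N, and p. The sketch below recomputes several of them using standard textbook definitions (assumed here to match LISREL's; the confidence intervals, CN, and the remaining indices are left out), with N = 500 and p = 12 from the mental abilities example.

```python
import math

def fit_indices(chi2, df, chi2_null, df_null, n, p):
    """Recompute several fit indices from the model and independence-model chi-squares."""
    moments = p * (p + 1) // 2
    q = moments - df            # free parameters in the model
    q_null = moments - df_null  # independence model: the p variances
    ncp = max(chi2 - df, 0.0)
    return {
        "NCP": ncp,
        "F0": ncp / (n - 1),
        "RMSEA": math.sqrt(ncp / (df * (n - 1))),
        "AIC": chi2 + 2 * q,
        "CAIC": chi2 + (1 + math.log(n)) * q,
        "ECVI": (chi2 + 2 * q) / (n - 1),
        "independence AIC": chi2_null + 2 * q_null,
        "NFI": (chi2_null - chi2) / chi2_null,
        "NNFI": (chi2_null / df_null - chi2 / df) / (chi2_null / df_null - 1),
        "CFI": 1 - ncp / max(chi2_null - df_null, ncp),
    }

# Values printed above for the three-factor mental abilities model
results = fit_indices(55.50, 51, 1962.12, 66, 500, 12)
for name, value in results.items():
    print(f"{name:17s} {value:9.4f}")
```

With these inputs, the rounded results agree with the printed NCP, F0, RMSEA, model AIC, CAIC, ECVI, independence AIC, NFI, and CFI.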

46

- CHI-SQUARE WITH 51 DEGREES OF FREEDOM = 55.50 (P = 0.31). This test models the variances and covariances as implied by the parameter expectations; df = 78 - 12 - 12 - 3 (78 distinct variances and covariances, minus 12 loadings, 12 error variances, and 3 factor correlations).
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12. This test models only the variances of the variables and assumes all covariances are 0; df = 78 - 12.
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97
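The degrees-of-freedom arithmetic can be sketched as a small helper. It assumes the parameter count for the three-factor model is 12 loadings, 12 error variances, and 3 factor correlations (factor variances fixed at 1), and it also enforces the order condition: free parameters may not exceed the p(p + 1)/2 distinct variances and covariances.

```python
def cfa_df(p, free_params):
    """Degrees of freedom = distinct variances/covariances minus free parameters."""
    distinct = p * (p + 1) // 2
    if free_params > distinct:
        raise ValueError("order condition violated: more free parameters than moments")
    return distinct - free_params

p = 12  # observed subtests
print(cfa_df(p, 12 + 12 + 3))  # three-factor model
print(cfa_df(p, 12))           # independence model: only the 12 variances
```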

47

CORRELATION MATRIX TO BE ANALYZED

      V1    V2    V3    V4    M1    M2    M3    M4    R1    R2    R3    R4
V1  1.00
V2  0.52  1.00
V3  0.52  0.48  1.00
V4  0.54  0.54  0.49  1.00
M1  0.16  0.22  0.19  0.23  1.00
M2  0.22  0.28  0.23  0.23  0.48  1.00
M3  0.19  0.21  0.13  0.17  0.47  0.46  1.00
M4  0.22  0.23  0.23  0.17  0.48  0.49  0.50  1.00
R1  0.23  0.25  0.29  0.23  0.14  0.22  0.15  0.28  1.00
R2  0.22  0.17  0.21  0.17  0.17  0.23  0.11  0.19  0.47  1.00
R3  0.28  0.22  0.26  0.22  0.18  0.23  0.17  0.25  0.50  0.51  1.00
R4  0.27  0.25  0.24  0.26  0.21  0.23  0.15  0.23  0.51  0.52  0.52  1.00

48

FITTED COVARIANCE MATRIX

      V1    V2    V3    V4    M1    M2    M3    M4    R1    R2    R3    R4
V1  1.00
V2  0.53  1.00
V3  0.51  0.49  1.00
V4  0.54  0.52  0.50  1.00
M1  0.21  0.20  0.20  0.21  1.00
M2  0.21  0.21  0.20  0.21  0.48  1.00
M3  0.21  0.20  0.19  0.20  0.46  0.47  1.00
M4  0.22  0.21  0.21  0.22  0.49  0.50  0.48  1.00
R1  0.24  0.23  0.22  0.23  0.19  0.20  0.19  0.20  1.00
R2  0.23  0.23  0.22  0.23  0.19  0.19  0.19  0.20  0.48  1.00
R3  0.25  0.24  0.23  0.24  0.20  0.20  0.20  0.21  0.50  0.50  1.00
R4  0.25  0.24  0.23  0.25  0.20  0.21  0.20  0.21  0.51  0.51  0.53  1.00

49

FITTED RESIDUALS

      V1    V2    V3    V4    M1    M2    M3    M4    R1    R2    R3    R4
V1  0.00
V2 -0.01  0.00
V3  0.01 -0.01  0.00
V4  0.00  0.02 -0.01  0.00
M1 -0.05  0.01 -0.01  0.02  0.00
M2  0.01  0.07  0.03  0.02  0.00  0.00
M3 -0.02  0.01 -0.06 -0.04  0.01 -0.01  0.00
M4  0.00  0.01  0.02 -0.04 -0.01 -0.01  0.01  0.00
R1 -0.01  0.02  0.07 -0.01 -0.05  0.02 -0.04  0.08  0.00
R2 -0.01 -0.05 -0.01 -0.06 -0.03  0.04 -0.08 -0.01 -0.01  0.00
R3  0.03 -0.02  0.03 -0.03 -0.02  0.03 -0.02  0.04  0.00  0.01  0.00
R4  0.02  0.00  0.01  0.02  0.01  0.03 -0.05  0.01  0.00  0.01 -0.01  0.00

50

STANDARDIZED RESIDUALS

      V1    V2    V3    V4    M1    M2    M3    M4    R1    R2    R3    R4
V1  0.00
V2 -0.87  0.00
V3  0.77 -0.64  0.00
V4  0.17  1.20 -0.66  0.00
M1 -1.43  0.45 -0.21  0.63  0.00
M2  0.16  2.27  0.79  0.66  0.19  0.00
M3 -0.51  0.37 -1.84 -1.12  0.81 -0.40  0.00
M4  0.05  0.38  0.63 -1.40 -0.49 -1.08  1.00  0.00
R1 -0.30  0.59  2.23 -0.24 -1.44  0.68 -1.10  2.45  0.00
R2 -0.35 -1.68 -0.27 -1.83 -0.76  1.11 -2.38 -0.34 -0.32  0.00
R3  1.02 -0.62  0.92 -0.92 -0.59  0.81 -0.70  1.15 -0.03  0.74  0.00
R4  0.60  0.12  0.21  0.57  0.36  0.83 -1.44  0.45 -0.21  0.74 -0.91  0.00

51

Confirmatory Factor Analysis Model, Mental Abilities

QPLOT OF STANDARDIZED RESIDUALS

[Figure: Q-plot of the standardized residuals (horizontal axis) against normal quantiles (vertical axis), both axes running from -3.5 to 3.5.]

52

The chosen model fits the data quite well. How would other models do? A complete confirmatory analysis would not only test the preferred model but also examine alternative models to assess how easily they could account for the data. To the extent that reasonable alternatives exist, the preferred model must be considered with more caution.

53

Other models can easily be tested.

[Path diagram: latent variables Verbal, Math, and Analytic; error terms δ1 to δ12.]

54

(No Transcript)

55

(No Transcript)

56

(No Transcript)

57

- CHI-SQUARE WITH 54 DEGREES OF FREEDOM = 214.02 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12
- GOODNESS OF FIT INDEX (GFI) = 0.93
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.90

58

[Path diagram: a single latent variable F1; error terms δ1 to δ12.]

59

(No Transcript)

60

(No Transcript)

61

(No Transcript)

62

- CHI-SQUARE WITH 54 DEGREES OF FREEDOM = 770.57 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12
- GOODNESS OF FIT INDEX (GFI) = 0.73
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.61

63

[Path diagram: latent variables F1 and F2; error terms δ1 to δ12.]

64

(No Transcript)

65

(No Transcript)

66

(No Transcript)

67

- CHI-SQUARE WITH 53 DEGREES OF FREEDOM = 488.89 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12
- GOODNESS OF FIT INDEX (GFI) = 0.84
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.76

68

[Path diagram: latent variables Verbal, Math, and Analytic; error terms δ1 to δ12.]

69

(No Transcript)

70

Oops!

71

(No Transcript)

72

- CHI-SQUARE WITH 51 DEGREES OF FREEDOM = 742.32 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12
- GOODNESS OF FIT INDEX (GFI) = 0.75
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.61

73

[Path diagram: latent variables Verbal, Math, and Analytic; error terms δ1 to δ12.]

74

(No Transcript)

75

(No Transcript)

76

(No Transcript)

77

- CHI-SQUARE WITH 54 DEGREES OF FREEDOM = 1296.47 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 1962.12
- GOODNESS OF FIT INDEX (GFI) = 0.70
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.56

78

A good fit for one model does not mean that other models can't fit the data well too. It is important to test alternative models to provide some sense of how well the preferred model performs against competitors.

79

One distinct advantage of confirmatory factor analysis over exploratory approaches is that goodness-of-fit indices can determine more precisely how well the solution fits the data. The principal components analysis of the Ofir and Simonson (2001) Need for Cognition data (N = 201) suggested a single-component solution.

80

1. I would prefer complex to simple problems.
2. I like to have the responsibility of handling a situation that involves a lot of thinking.
3. Thinking is not my idea of fun.
4. I would rather do something that requires little thought than something that is sure to challenge my thinking abilities.
5. I try to anticipate and avoid situations where there is likely a chance I will have to think in depth about something.
6. I find joy in deliberating hard and for long hours.
7. I only think as hard as I have to.
8. I prefer to think about small, daily projects to long-term ones.
9. I like tasks that require little thought once I've learned them.
10. The idea of relying on thought to make my way to the top appeals to me.
11. I really enjoy a task that involves coming up with new solutions to problems.
12. Learning new ways to think doesn't excite me very much.
13. I prefer my life to be filled with puzzles that I must solve.
14. The notion of thinking abstractly appeals to me.
15. I would prefer a task that is intellectual, difficult, and important to one that is somewhat important but does not require much thought.
16. I feel relief rather than satisfaction after completing a task that required a lot of attention.
17. It's enough for me that something gets the job done; I don't care how or why it works.
18. I usually end up deliberating about issues even though they do not affect me personally.

81

Each item is rated using the following scale:
1 = very characteristic of me
2 = somewhat characteristic of me
3 = neutral
4 = somewhat uncharacteristic of me
5 = very uncharacteristic of me

82

(No Transcript)

83

One component is the best solution here, but is it a good solution?

Only 32% of the variance is accounted for by the component.

84

One-factor confirmatory factor analysis

Parameter estimates.

85

(No Transcript)

86

One-factor confirmatory factor analysis: t values for parameter estimates. All parameter estimates are significantly different from 0.

87

(No Transcript)

88

The fit statistics suggest that the model does not provide an especially good description of the variances and covariances among the Need for Cognition items:

- CHI-SQUARE WITH 135 DEGREES OF FREEDOM = 422.61 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 153 DEGREES OF FREEDOM = 1535.33
- GOODNESS OF FIT INDEX (GFI) = 0.81
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.76

89

Does a two-factor model fit better, with separate latent variables for items scored in positive versus negative directions?

90

Better, but still not great:

- CHI-SQUARE WITH 134 DEGREES OF FREEDOM = 361.07 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 153 DEGREES OF FREEDOM = 1535.33
- GOODNESS OF FIT INDEX (GFI) = 0.85
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.81

91

The next step? Probably scale refinement. The confirmatory factor analysis may only tell us that the presumed model is not adequate to explain the pattern of variances and covariances in the data. It won't necessarily identify a specific problem that needs to be addressed.

92

Additional important issues in confirmatory factor analysis:

- Nested models
- Model identification
- Correlation versus covariance matrices
- Other goodness-of-fit indices
- Model modification (exploratory confirmatory factor analysis)

93

Careful use of confirmatory factor analysis should test competing models and examine nested models that place more restrictions on the original formulation, in an attempt to determine the most parsimonious account of the data.

94

A multitrait-multimethod matrix problem.

95

The standard multitrait-multimethod matrix is used to assess the reliability, convergent validity, and discriminant validity of measures of multiple traits collected using different methods.

96

(No Transcript)

97

Parameter estimates for the hypothesized model.

98

An excellent fit:

- CHI-SQUARE WITH 39 DEGREES OF FREEDOM = 46.69 (P = 0.19)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97

99

An alternative model might argue that there is no method variance and so the method latent variables can safely be dropped.

100

(No Transcript)

101

Is there method variance? Restricting the model to just traits tests whether eliminating method variance matters.

102

Clearly there is method variance, because eliminating this part of the original model reduces the goodness of fit:

- CHI-SQUARE WITH 51 DEGREES OF FREEDOM = 695.28 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.71
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.56

103

Another alternative model could argue that all of the covariance among items is due to common methods of measurement: a pure method artifact model.

[Path diagram: method latent variables Paper and IRT.]

104

(No Transcript)

105

Is trait variance important? Restricting the model to just methods determines whether eliminating traits reduces the goodness of fit.

106

A poor fit. Clearly trait variance is necessary.

- CHI-SQUARE WITH 54 DEGREES OF FREEDOM = 1184.25 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.65
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.49

107

Perhaps there are elements of the methods that are common: both might use the same question content, require the same response type (e.g., True vs. False), etc. This can be modeled by allowing the method latent variables to be correlated.

[Path diagram: correlated method latent variables Paper and IRT.]

108

(No Transcript)

109

Can the model be improved by allowing the methods factors to be correlated?

110

No, that does not improve matters enough. Still a poor fit:

- CHI-SQUARE WITH 53 DEGREES OF FREEDOM = 1094.18 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.66
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.50

111

Are the separate traits really just a single latent variable? If so, then there is no evidence for discriminant validity.

[Path diagram: a single Trait latent variable plus method latent variables Paper and IRT.]

112

(No Transcript)

113

Can the basic methods-only model be improved by adding a single trait latent variable?

114

Including trait information, but assuming that only a single trait latent variable exists, does not fit the data adequately:

- CHI-SQUARE WITH 42 DEGREES OF FREEDOM = 601.40 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.80
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.62

115

Previously, adding a correlation between methods latent variables improved fit. Does it make the fit adequate now?

[Path diagram: a single Trait latent variable plus correlated method latent variables Paper and IRT.]

116

(No Transcript)

117

Does it help to let the methods latent variables correlate?

118

Still not an acceptable fit:

- CHI-SQUARE WITH 41 DEGREES OF FREEDOM = 513.62 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.82
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.65

119

A pure single-trait model could also be proposed.

[Path diagram: a single Trait latent variable.]

120

(No Transcript)

121

Does a one-factor trait model provide an adequate fit?

122

Definitely not:

- CHI-SQUARE WITH 54 DEGREES OF FREEDOM = 1334.96 (P = 0.0)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.65
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.50

123

Of the several models tested, the original multitrait-multimethod model provided an excellent fit, apparently much better than the alternatives. Is a simpler version of that model possible?

124

For example, can all weights for a latent variable be set equal?

125

(No Transcript)

126

Can all weights for a particular latent variable be set equal?

127

Yes, that is nearly as good as the original model and provides a more parsimonious account of the data:

- CHI-SQUARE WITH 58 DEGREES OF FREEDOM = 65.11 (P = 0.24)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97

128

Can the model be further constrained so that ALL trait loadings are the same and ALL methods loadings are the same?

129

(No Transcript)

130

Can all trait loadings be set equal and all methods loadings be set equal?

131

Still an excellent fit, and more parsimony:

- CHI-SQUARE WITH 61 DEGREES OF FREEDOM = 66.72 (P = 0.29)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97

132

Can the latent trait correlations be set equal?

133

(No Transcript)

134

Can the latent trait correlations be set equal?

135

Still not much reduction in fit:

- CHI-SQUARE WITH 63 DEGREES OF FREEDOM = 67.01 (P = 0.34)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97

136

Can the error variances (not shown) be set equal as well?

137

(No Transcript)

138

Can the error variances be set equal?

139

A very simple model, with excellent fit:

- CHI-SQUARE WITH 74 DEGREES OF FREEDOM = 77.09 (P = 0.38)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97

140

When a parameter that is free in one model is fixed or constrained in a second model, the models are said to be nested. The difference between their respective chi-square values is itself a chi-square, with degrees of freedom equal to the difference in the two models' degrees of freedom. The new chi-square tests whether the two models have significantly different fit to the data.
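A chi-square difference test needs only the two chi-squares, their df, and the upper-tail chi-square probability. The sketch below computes that probability for integer df with the standard regularized incomplete-gamma recurrence (so only Python's `math` module is needed) and applies it to the increasingly constrained multitrait-multimethod models reported above, treating each as nested in the one before it, as the slides describe. The helper names are illustrative, not from any library.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(chi2_df > x) for integer df, computed as the
    regularized upper incomplete gamma Q(df/2, x/2) using the recurrence
    Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1)."""
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)             # Q(1, y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))  # Q(1/2, y)
    while a < df / 2.0:
        q += y ** a * math.exp(-y) / math.gamma(a + 1)
        a += 1.0
    return q

def chi2_difference(chi2_restricted, df_restricted, chi2_free, df_free):
    """Nested-model test: difference in chi-square on the difference in df."""
    d_chi2 = chi2_restricted - chi2_free
    d_df = df_restricted - df_free
    return d_chi2, d_df, chi2_sf(d_chi2, d_df)

# Increasingly constrained MTMM models reported above: (df, chi-square)
models = [(39, 46.69), (58, 65.11), (61, 66.72), (63, 67.01), (74, 77.09)]
for (df_free, c_free), (df_res, c_res) in zip(models, models[1:]):
    d, ddf, p = chi2_difference(c_res, df_res, c_free, df_free)
    print(f"delta chi2 = {d:6.2f} on {ddf:2d} df, p = {p:.3f}")
```

All four comparisons give p > .05, consistent with the slides' conclusion that each added constraint costs little fit. The same function applies to the single-parameter test for φ21 described later (52.63 on 40 df versus 46.69 on 39 df).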

141

The Identification Problem

A model must be identified before valid and unique parameter estimates can be obtained. Identification means that there is sufficient information in the data and model to provide unique estimation of the free parameters. Identification is obtained by restricting the model in some way. Example: there are an infinite number of solutions to the simple model X + Y = 15. A unique solution can only be found by restricting the model in some way, for example, by requiring that X = 5. Then the unique solution for Y is 10.

142

Similarly, and with greater complexity, confirmatory factor models must have a sufficient number of restrictions before the parameters can be estimated uniquely. Models are restricted by either forcing some parameters to values of 0 or constraining some parameters to be equal to other parameters. One important condition for identification is called the order condition: the number of parameters estimated in the model must be less than or equal to the number of distinct values in the variance-covariance matrix (S), which is p(p + 1)/2 for p observed variables. The order condition is necessary but not sufficient. Another important requirement is that latent variables have a specified scale.

143

Scaling: For a model to be identified, the latent variables must be given a scale. This can be done either by standardizing the latent variables (setting the diagonal elements of the Φ matrix to 1.00) or by setting one λ for each latent variable to 1.00, which gives that latent variable the same scale as the observed variable. Unfortunately, the two choices can produce different results for the tests of individual parameters.
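Why the choice of scaling leaves fit unchanged: both scalings describe the same implied covariance matrix. In this hypothetical one-factor sketch, dividing the loadings by the first loading and multiplying the latent variance by its square leaves every element of the common part, φλλ′, unchanged. The t values differ because different parameters are being estimated and tested; for example, the fixed marker loading has no standard error at all.

```python
def implied_common(lam, phi):
    """Common-factor part of the implied covariance for one factor: phi * lam_i * lam_j."""
    return [[phi * li * lj for lj in lam] for li in lam]

# Hypothetical one-factor solution scaled by standardizing the latent variable.
lam_std, phi_std = [0.8, 0.6, 0.7], 1.0

# Equivalent solution scaled by fixing the first loading to 1.00.
marker = lam_std[0]
lam_fix = [l / marker for l in lam_std]   # about 1.0, 0.75, 0.875
phi_fix = phi_std * marker ** 2           # latent variance becomes about 0.64

a = implied_common(lam_std, phi_std)
b = implied_common(lam_fix, phi_fix)
largest_gap = max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
print(f"largest difference between the implied matrices: {largest_gap:.1e}")
```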

144

(No Transcript)

145

Parameter estimates when latent variable scales are set by standardizing the Φ matrix.

146

The t values for testing the parameter values for significance.

147

(No Transcript)

148

Parameter estimates when latent variable scales are set by fixing elements of the Λ matrix to 1.00.

149

The t values for testing the parameter values for significance.

150

Depending on the method of scaling, the t values for parameter estimates can vary.

Standardized Φ matrix

Scaling by λ

151

The method of scaling has no effect on the goodness of fit. Both approaches produce the same overall goodness of fit:

- CHI-SQUARE WITH 39 DEGREES OF FREEDOM = 46.69 (P = 0.19)
- CHI-SQUARE FOR INDEPENDENCE MODEL WITH 66 DEGREES OF FREEDOM = 2346.60
- GOODNESS OF FIT INDEX (GFI) = 0.98
- ADJUSTED GOODNESS OF FIT INDEX (AGFI) = 0.97

152

Because the method of scaling has no effect on the overall goodness of fit, a test of any given parameter can be obtained by taking the difference between the goodness-of-fit chi-square with that parameter free and the goodness-of-fit chi-square with that parameter fixed to 0. The difference is a test (χ², 1 df) of whether the two models are the same, which is the same as asking whether the parameter is 0.

153

Fixing φ21 to 0 in the λ-scaled model produces an overall goodness-of-fit χ² of 52.63 (df = 40). The same goodness of fit is obtained in the model that uses a standardized Φ matrix with φ21 set to 0. The model that has φ21 free produces an overall goodness-of-fit χ² of 46.69 (df = 39). The difference is a χ² of 5.94 (df = 1), significant at p < .05.

154

Analyzing Correlation versus Covariance Matrices

Confirmatory factor analysis models are based on the decomposition of covariance matrices, not correlation matrices. Strictly speaking, the solutions hold for the analysis of covariance matrices. To the extent that the solution depends on the scale of the variables, analyses based on covariance matrices and correlation matrices can differ.

155

Other Goodness-of-Fit Indices

In addition to the chi-square goodness-of-fit test and the fit indices (GFI, AGFI), another common fit statistic is based on the difference between the original covariance matrix and the covariance matrix implied by the model:

Root-Mean-Square Residual (RMR)

$$\text{RMR} = \sqrt{\frac{\sum_{i \le j}(s_{ij} - \sigma_{ij})^2}{p(p+1)/2}}$$

where s_ij and σ_ij are elements of S and Σ. Small values are desirable, indicating a good reproduction of the original covariance matrix.
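The RMR can be recomputed from the fitted residuals printed for the mental abilities model. The sketch assumes the RMR averages all p(p + 1)/2 = 78 lower-triangle elements, diagonal included. Using the two-decimal residuals from that table, it comes out at about 0.028, in line with the printed RMR.

```python
import math

# Lower triangle of the FITTED RESIDUALS table for the mental abilities model
# (rows V1..V4, M1..M4, R1..R4; values rounded to two decimals as printed).
residuals = [
    [0.00],
    [-0.01, 0.00],
    [0.01, -0.01, 0.00],
    [0.00, 0.02, -0.01, 0.00],
    [-0.05, 0.01, -0.01, 0.02, 0.00],
    [0.01, 0.07, 0.03, 0.02, 0.00, 0.00],
    [-0.02, 0.01, -0.06, -0.04, 0.01, -0.01, 0.00],
    [0.00, 0.01, 0.02, -0.04, -0.01, -0.01, 0.01, 0.00],
    [-0.01, 0.02, 0.07, -0.01, -0.05, 0.02, -0.04, 0.08, 0.00],
    [-0.01, -0.05, -0.01, -0.06, -0.03, 0.04, -0.08, -0.01, -0.01, 0.00],
    [0.03, -0.02, 0.03, -0.03, -0.02, 0.03, -0.02, 0.04, 0.00, 0.01, 0.00],
    [0.02, 0.00, 0.01, 0.02, 0.01, 0.03, -0.05, 0.01, 0.00, 0.01, -0.01, 0.00],
]

def rmr(lower):
    """Root mean square of the p(p + 1)/2 residuals, diagonal included."""
    values = [v for row in lower for v in row]
    return math.sqrt(sum(v * v for v in values) / len(values))

print(f"RMR = {rmr(residuals):.3f}")
```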

156

One other commonly reported fit index is the Root-Mean-Square Error of Approximation (RMSEA), a chi-square goodness-of-fit statistic adjusted for degrees of freedom and sample size:

$$\text{RMSEA} = \sqrt{\frac{\max(\chi^2 - df,\ 0)}{df\,(N - 1)}}$$

157

Some Common Rules of Thumb for Model Fit

158

Model Modification (exploratory confirmatory factor analysis)

When a presumed model does not fit, changes to the model can be tested for goodness of fit, but the exercise is no longer confirmatory and may capitalize on chance and other biases. Cross-validation is essential in such cases. Some software provides guidance in the modification process by identifying the fixed parameters that could be set free to improve the model fit.

159

In LISREL, the modification indices are the estimated decreases in the goodness-of-fit χ² that would result from setting each fixed parameter free.

160


161

The estimates each parameter would take if it were set free are also provided. The approach assumes that only a single parameter is set free at a time.

162

Reliability and Attenuation

Confirmatory factor analysis has a close relation to classical measurement theory. When the latent variables are standardized, the λ are the correlations between each variable and the true score. The squares of these λ are the individual item reliabilities. If these are averaged, they can be used in the Spearman-Brown formula to provide an estimate of standardized coefficient alpha:

$$\alpha = \frac{k\bar{r}}{1 + (k - 1)\bar{r}}$$

where k is the number of items and r̄ is the average item reliability.

163

An alternative estimate of reliability is
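Both reliability estimates can be sketched from standardized loadings. The alternative shown here is composite reliability, ρc = (Σλ)² / [(Σλ)² + Σθ], with θ = 1 - λ² for standardized items; treating it as the alternative intended on this slide is an assumption. The loadings are hypothetical. With equal loadings the two estimates coincide.

```python
def alpha_spearman_brown(loadings):
    """Spearman-Brown step-up of the average item reliability (mean squared loading)."""
    k = len(loadings)
    r_bar = sum(l * l for l in loadings) / k
    return k * r_bar / (1 + (k - 1) * r_bar)

def composite_reliability(loadings):
    """(sum lambda)^2 / [(sum lambda)^2 + sum theta], theta = 1 - lambda^2 (standardized items)."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)

lam = [0.70, 0.60, 0.50, 0.65, 0.55, 0.60]  # hypothetical standardized loadings
print(f"alpha via Spearman-Brown = {alpha_spearman_brown(lam):.3f}")
print(f"composite reliability    = {composite_reliability(lam):.3f}")
```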

164

For the Need for Cognition data, the one-factor confirmatory factor analysis parameter estimates yield reliability estimates of α = .90 and ρc = .91. A good reminder that reliability is a faulty indicator of dimensionality.

165

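[Path diagram: latent variables Verbal, Math, and Analytic; X1 loads on Verbal (λ1V), X5 loads on Math (λ5M), and Verbal and Math are correlated (φVM).]

Inherent in the measurement model is the classical measurement theory notion of attenuation. The correlation between X1 and X5 is estimated by λ1V φVM λ5M. Because the loadings are less than 1 in absolute value, this observed correlation is smaller in magnitude than the latent correlation φVM.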
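A numeric sketch of attenuation with hypothetical standardized estimates for the path diagram on this slide: the implied correlation between X1 and X5 is the product of the two loadings and the latent correlation, and the classical correction for attenuation (dividing by the square root of the product of the two reliabilities, here taken as λ²) recovers φVM.

```python
import math

# Hypothetical standardized estimates
lam_1v, phi_vm, lam_5m = 0.80, 0.50, 0.70

# Model-implied correlation between X1 and X5
r_15 = lam_1v * phi_vm * lam_5m

# Classical correction for attenuation, treating lambda**2 as each measure's reliability
rel_1, rel_5 = lam_1v ** 2, lam_5m ** 2
corrected = r_15 / math.sqrt(rel_1 * rel_5)

print(f"implied r(X1, X5) = {r_15:.3f}; corrected = {corrected:.3f}; phi_VM = {phi_vm:.3f}")
```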

166

(No Transcript)

167

When used wisely, confirmatory factor analysis is a powerful tool that can test well-specified models and compare competing models. Like any statistical procedure, however, it can be biased when it is used in a more exploratory manner (as in model modification). In those cases, careful cross-validation is necessary to ensure the validity of the best-fitting model.