
1

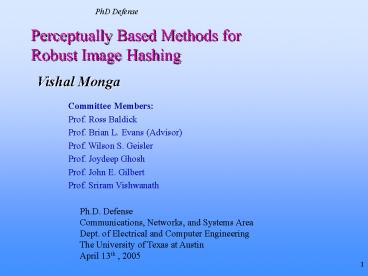

PhD Defense

Perceptually Based Methods for Robust Image Hashing

Vishal Monga

Committee Members:
- Prof. Ross Baldick
- Prof. Brian L. Evans (Advisor)
- Prof. Wilson S. Geisler
- Prof. Joydeep Ghosh
- Prof. John E. Gilbert
- Prof. Sriram Vishwanath

Ph.D. Defense, Communications, Networks, and Systems Area, Dept. of Electrical and Computer Engineering, The University of Texas at Austin, April 13, 2005

2

Introduction

The Dichotomy of Image Hashing

- Signal processing methods
  - Capture perceptual attributes well, yielding robust and visually meaningful representations
  - Little can be said about how secure these representations are
- Cryptographic methods
  - Provably secure
  - However, do not respect the underlying structure of signals/images

Towards a joint signal processing-cryptographic approach

3

Hash Example

Introduction

- Hash function: projects a value from a set with a large (possibly infinite) number of members to a set with a fixed number of (fewer) members
  - Irreversible
- Provides a short, simple representation of a large digital message
  - Example: the sum of the ASCII codes of the characters in a name, modulo N (= 7), a prime number

Database name search example
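The toy hash on this slide fits in a few lines of Python. The names and the bucketed table are hypothetical illustrations of the database search use. They are not part of the slide.

```python
def name_hash(name: str, n: int = 7) -> int:
    """Toy hash from the slide: sum of the ASCII codes of the characters, modulo n."""
    return sum(ord(ch) for ch in name) % n


# Hypothetical database of names, bucketed into n = 7 slots by their hash value.
names = ["Alice", "Bob", "Carol", "Dave"]
table: dict = {}
for name in names:
    table.setdefault(name_hash(name), []).append(name)


def lookup(name: str) -> bool:
    # Only the bucket selected by the hash is scanned, not the whole list of names.
    return name in table.get(name_hash(name), [])


print(name_hash("ABC"))  # 65 + 66 + 67 = 198, and 198 mod 7 = 2
```

A prime modulus is commonly chosen because it tends to spread sums more evenly over the buckets. Different names can still collide in the same bucket, which is why `lookup` compares full names.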

4

Introduction

Image Hashing Motivation

- Hash functions
  - Produce a fixed-length binary string from a large digital message
  - Used in compilers, database searching, and cryptography
  - Cryptographic hashes serve security applications, e.g. message authentication and ensuring data integrity
- Traditional cryptographic hashes
  - Not suited for multimedia: very sensitive to the input, i.e. a change in one input bit changes the output dramatically
- Need for robust perceptual image hashing
  - Perceptual: based on the response of the human visual system
  - Robust: hash values for perceptually identical images must be the same (with high probability)
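The sensitivity of traditional cryptographic hashes is easy to observe with SHA-256 from Python's standard `hashlib`. The eight "pixel" bytes below are a hypothetical stand-in for image data. Flipping a single, visually irrelevant bit typically changes about half of the 256 output bits.

```python
import hashlib


def bit_diff(a: bytes, b: bytes) -> int:
    """Number of bit positions in which two equal-length byte strings differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


# Hypothetical stand-in for image data: a handful of 8-bit pixel values.
pixels = bytes([120, 121, 119, 118, 200, 201, 199, 198])
tweaked = bytearray(pixels)
tweaked[0] ^= 0x01  # flip the least significant bit of one pixel: visually irrelevant
tweaked = bytes(tweaked)

h1 = hashlib.sha256(pixels).digest()
h2 = hashlib.sha256(tweaked).digest()
print(bit_diff(pixels, tweaked), "input bit changed;",
      bit_diff(h1, h2), "of", 8 * len(h1), "output bits changed")
```

This avalanche behaviour is exactly what a perceptual hash must avoid for perceptually identical inputs.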

5

Introduction

Image Hashing Applications

- Applications
  - Image database search and indexing
  - Content-dependent key generation for watermarking
  - Robust image authentication: the hash must tolerate incidental modifications yet be sensitive to content changes

Figure: the original image and its JPEG-compressed version share the same hash value h1; a tampered version yields a different hash value h2.

6

Outline

- Perceptual image hashing
  - Motivation and applications
- Contribution 1: A unified framework
  - Formal definition of desired hash properties/goals
  - Novel two-stage hashing algorithm
- Review of existing feature extraction techniques
- Contribution 2: Robust feature extraction
- Contribution 3: Clustering algorithms for feature vector compression
  - Randomized clustering for secure hashing
- Summary

7

Perceptual Hash: Desirable Properties

Contribution 1: A Unified Framework

- The hash function takes two inputs
  - Image (from a class of images, e.g. natural images)
  - Secret key (from a key space)
- Perceptual robustness
- Fragility to visually distinct inputs
- Unpredictability

8

Hashing Framework

Contribution 1: A Unified Framework

- Two-stage hash algorithm (Monga & Evans, 2004)
  - Feature vectors extracted from perceptually identical images should be close in some distance metric

Figure: input image I (e.g. 1 MB) → extract a visually robust feature vector → intermediate hash → compression by clustering of similar feature vectors → final hash (e.g. 128 bits).
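The two-stage structure can be sketched as the composition of two functions. Both stages below are hypothetical placeholders, chosen only to make the pipeline runnable. Stage 1 sets one bit per block by comparing the block mean with the image mean. Stage 2 returns the index of the nearest codeword in Hamming distance. Neither is the feature extractor or clustering algorithm of this work.

```python
from typing import Callable, List, Sequence

Image = Sequence[Sequence[float]]
Bits = List[int]


def two_stage_hash(image: Image, extract: Callable[[Image], Bits],
                   compress: Callable[[Bits], int]) -> int:
    """Stage 1: image -> intermediate hash (feature bits).
    Stage 2: intermediate hash -> final hash (a cluster/codeword index)."""
    return compress(extract(image))


def block_mean_bits(image: Image, block: int = 2) -> Bits:
    """Hypothetical stage 1: one bit per block, set if the block mean exceeds the image mean."""
    h, w = len(image), len(image[0])
    overall = sum(map(sum, image)) / (h * w)
    bits = []
    for r in range(0, h, block):
        for c in range(0, w, block):
            vals = [image[y][x] for y in range(r, min(r + block, h))
                    for x in range(c, min(c + block, w))]
            bits.append(int(sum(vals) / len(vals) > overall))
    return bits


def nearest_codeword(codebook: List[Bits]) -> Callable[[Bits], int]:
    """Hypothetical stage 2: the final hash is the index of the closest codeword."""
    def compress(bits: Bits) -> int:
        return min(range(len(codebook)),
                   key=lambda k: sum(a != b for a, b in zip(bits, codebook[k])))
    return compress
```

For example, a 4 × 4 image with two dark and two bright 2 × 2 blocks and a slightly perturbed copy of it both map to the intermediate hash [0, 1, 1, 0]. With the codebook [[0, 1, 1, 0], [1, 0, 0, 1]], both therefore receive the final hash 0.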

9

Outline

- Perceptual image hashing
  - Motivation and applications
- Contribution 1: A unified framework
  - Formal definition of desired hash properties/goals
  - Novel two-stage hashing algorithm
- Review of existing feature extraction techniques
- Contribution 2: Robust feature extraction
- Contribution 3: Clustering algorithms for feature vector compression
  - Randomized clustering for secure hashing
- Summary

10

Invariant Feature Extraction

Existing Techniques

- Image statistics based approaches
  - Intensity statistics: intensity histograms of image blocks (Schneider et al., 1996); mean, variance and kurtosis of intensity values extracted from image blocks (Kailasanathan et al., 2001)
  - Statistics of wavelet coefficients (Venkatesan et al., 2000)
- Relation based approaches (Lin & Chang, 2001)
  - Invariant relationship between corresponding discrete cosine transform (DCT) coefficients in two 8 × 8 blocks
- Preserve coarse representations
  - Threshold low-frequency DCT coefficients (Fridrich et al., 2001)
  - Low-resolution wavelet sub-bands (Mihcak & Venkatesan, 2000, 2001)
  - Singular values and vectors of sub-images (Kozat et al., 2004)

11

Open Issues

Related Work

- A robust feature point scheme for hashing
  - Inherent sensitivity to content-changing manipulations (useful in authentication)
  - Representation of image content robust to global and local geometric distortions
  - Exploit properties of the human visual system
- Randomized algorithms for secure image hashing
  - Quantifying the impact of randomization in enhancing hash security
  - Trade-offs with the robustness/perceptual significance of the hash

Necessitates a joint signal processing-cryptographic approach

12

Outline

- Perceptual image hashing
  - Motivation and applications
  - Review of existing techniques
- Contribution 1: A unified framework
  - Formal definition of desired hash properties/goals
  - Novel two-stage hashing algorithm
- Contribution 2: Robust feature extraction
- Contribution 3: Clustering algorithms for feature vector compression
  - Randomized clustering for secure hashing
- Summary

13

Hypercomplex or End-Stopped Cells

- Cells in the visual cortex that help in object recognition
  - Respond strongly to line end-points, corners and points of high curvature (Hubel et al., 1965; Dobbins, 1989)
- End-stopped wavelet basis (Vandergheynst et al., 2000)
  - Apply a First Derivative of Gaussian (FDoG) operator to detect end-points of structures identified by the Morlet wavelet

Figure: a synthetic L-shaped image, its Morlet wavelet response, and its end-stopped wavelet response.

14

Contribution 2: Robust Feature Extraction

Computing Wavelet Transform

- Generalize the end-stopped wavelet
  - Employ a wavelet family
  - Scale parameter = 2; i denotes the scale of the wavelet
  - Discretize the orientation range [0, π] into M intervals, i.e. θk = kπ/M, k = 0, 1, ..., M − 1
- End-stopped wavelet transform

15

Contribution 2: Robust Feature Extraction

Proposed Feature Detection Method (Monga & Evans, 2004)

- Compute the wavelet transform of image I at a suitably chosen scale i for several different orientations
- Significant feature selection: locations (x, y) in the image identified as candidate feature points must satisfy a selection criterion on the wavelet response
- Avoid trivial (and fragile) features: qualify a candidate location as a final feature point only if it meets a further qualification criterion
- Randomization: partition the image into N (overlapping) random regions using a secret key K, and extract features from each random region
- Perceptual quantization: quantize the feature vector based on the distribution (histogram) of image feature points to enhance robustness
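The selection and randomization steps can be illustrated on a precomputed response map. This sketch assumes a criterion the slide does not spell out. A point is kept if its response magnitude is the maximum in a small neighbourhood and also exceeds a threshold, which removes weak and fragile points. The keyed regions come from a PRNG seeded with the key K. Both `feature_points` and `random_regions` are hypothetical helpers, not the method's exact definitions.

```python
import random
from typing import List, Tuple

Grid = List[List[float]]


def feature_points(resp: Grid, threshold: float, radius: int = 1) -> List[Tuple[int, int]]:
    """ASSUMED criterion: keep (x, y) if |resp| there is the maximum over its
    (2*radius + 1)^2 neighbourhood AND exceeds `threshold` (drops weak, fragile points)."""
    h, w = len(resp), len(resp[0])
    points = []
    for y in range(h):
        for x in range(w):
            v = abs(resp[y][x])
            neigh = max(abs(resp[j][i])
                        for j in range(max(0, y - radius), min(h, y + radius + 1))
                        for i in range(max(0, x - radius), min(w, x + radius + 1)))
            if v == neigh and v > threshold:
                points.append((x, y))
    return points


def random_regions(h: int, w: int, n: int, size: int,
                   key: int) -> List[Tuple[int, int, int, int]]:
    """Hypothetical keyed partition: n possibly overlapping size x size windows
    (x, y, width, height) whose positions come from a PRNG seeded with the key K.
    Python's `random` is NOT cryptographically secure; it only illustrates the idea."""
    rng = random.Random(key)
    return [(rng.randrange(w - size + 1), rng.randrange(h - size + 1), size, size)
            for _ in range(n)]
```

Whoever holds the same key regenerates the same regions, while without the key the region layout is hard to predict. A real system would derive the positions from a cryptographic generator instead.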

16

Contribution 2: Robust Feature Extraction

Iterative Feature Extraction Algorithm (Monga & Evans, 2004)

1. Extract a feature vector f of length P from image I, and quantize f perceptually to obtain a binary string bf1 (increase count).
2. Remove weak image geometry: compute the 2-D order statistics (OS) filtering of I to produce Ios = OS(I; p, q, r).
3. Preserve strong image geometry: perform low-pass linear shift invariant (LSI) filtering on Ios to obtain Ilp.
4. Repeat step 1 with Ilp to obtain bf2.
5. IF (count = MaxIter) go to step 6.
   ELSE IF D(bf1, bf2) < ρ go to step 6.
   ELSE set I = Ilp and go to step 1.
6. Set fv(I) = bf2.

MaxIter, ρ, and P are algorithm parameters; count is an iteration counter that starts at 0. fv(I) denotes the quantized feature vector, and D(., .) is the normalized Hamming distance between its arguments.
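The loop structure of the iterative algorithm can be sketched in plain Python under stated assumptions. The OS filter is a p × q rank filter with edge replication (p = q = 3, r = 4 gives a median filter). The LSI low-pass filter is a 3 × 3 moving average. The feature extractor `block_bits` is a hypothetical stand-in for "extract and perceptually quantize", not the wavelet-based extractor of this work.

```python
from typing import Callable, List

Image = List[List[float]]
Bits = List[int]


def _clamp(v: int, lo: int, hi: int) -> int:
    return max(lo, min(hi, v))


def os_filter(img: Image, p: int, q: int, r: int) -> Image:
    """2-D order-statistics filter: r-th smallest value (0-based) in a p x q window,
    with edge replication at the borders. p = q = 3, r = 4 is a 3 x 3 median filter."""
    h, w = len(img), len(img[0])
    return [[sorted(img[_clamp(y + dy, 0, h - 1)][_clamp(x + dx, 0, w - 1)]
                    for dy in range(-(p // 2), p - p // 2)
                    for dx in range(-(q // 2), q - q // 2))[r]
             for x in range(w)] for y in range(h)]


def box_lowpass(img: Image) -> Image:
    """Simple LSI low-pass filter: 3 x 3 moving average with edge replication."""
    h, w = len(img), len(img[0])
    return [[sum(img[_clamp(y + dy, 0, h - 1)][_clamp(x + dx, 0, w - 1)]
                 for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9.0
             for x in range(w)] for y in range(h)]


def norm_hamming(a: Bits, b: Bits) -> float:
    return sum(x != y for x, y in zip(a, b)) / len(a)


def block_bits(img: Image, block: int = 2) -> Bits:
    """Hypothetical feature extractor: one bit per block (block mean > image mean).
    Assumes both image dimensions are multiples of `block`."""
    h, w = len(img), len(img[0])
    overall = sum(map(sum, img)) / (h * w)
    return [int(sum(img[y][x] for y in range(r, r + block) for x in range(c, c + block))
                / block ** 2 > overall)
            for r in range(0, h, block) for c in range(0, w, block)]


def iterative_features(img: Image, extract: Callable[[Image], Bits] = block_bits,
                       max_iter: int = 5, rho: float = 0.05,
                       p: int = 3, q: int = 3, r: int = 4) -> Bits:
    count = 0
    while True:
        bf1 = extract(img)                              # step 1
        count += 1
        i_lp = box_lowpass(os_filter(img, p, q, r))     # steps 2 and 3
        bf2 = extract(i_lp)                             # step 4
        if count >= max_iter or norm_hamming(bf1, bf2) < rho:  # step 5
            return bf2                                  # step 6: fv(I) = bf2
        img = i_lp                                      # otherwise iterate on Ilp
```

The median stage removes isolated spikes (weak geometry) before smoothing, so only structures that survive both filters influence the converged feature vector.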

17

Contribution 2: Robust Feature Extraction

Image Features at Algorithm Convergence

Figure: features detected at convergence in the original image, after JPEG compression with quality factor 10, after additive white Gaussian noise with zero mean and σ = 10, and after the Stirmark local geometric attack.

18

Contribution 2: Robust Feature Extraction

Quantitative Results: Feature Extraction

- Quantized feature vector comparison
  - D(fv(I), fv(Iident)) < 0.2
  - D(fv(I), fv(Idiff)) > 0.3

Table 1. Comparison of quantized feature vectors: normalized Hamming distance between the quantized feature vectors of original and attacked images. Attacked images were generated by the Stirmark benchmark software.
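The normalized Hamming distance D(., .) and the two reported thresholds can be turned into a simple comparison routine. Using 0.2 and 0.3 as a three-way decision rule, with an "ambiguous" band in between, is an illustrative assumption. The slide reports them as observed bounds.

```python
from typing import Sequence


def norm_hamming(a: Sequence[int], b: Sequence[int]) -> float:
    """D(., .): fraction of positions in which two equal-length bit strings differ."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have equal length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def compare(fv1: Sequence[int], fv2: Sequence[int],
            same_below: float = 0.2, diff_above: float = 0.3) -> str:
    """Hypothetical decision rule built from the reported thresholds."""
    d = norm_hamming(fv1, fv2)
    if d < same_below:
        return "perceptually identical"
    if d > diff_above:
        return "perceptually distinct"
    return "ambiguous"
```

With 20-bit vectors, 1 differing bit (D = 0.05) is classed as identical, 5 (D = 0.25) as ambiguous, and 8 (D = 0.4) as distinct.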

19

Contribution 2: Robust Feature Extraction

Comparison with Other Approaches

YES: survives the attack, i.e. the features were close. Content-changing manipulations should be detected.

20

Outline

- Perceptual image hashing
  - Motivation and applications
- Contribution 1: A unified framework
  - Formal definition of desired hash properties/goals
  - Novel two-stage hashing algorithm
- Review of existing feature extraction techniques
- Contribution 2: Robust feature extraction
- Contribution 3: Clustering algorithms for feature vector compression
  - Randomized clustering for secure hashing
- Summary

21

Clustering: Problem Statement

Feature Vector Compression

- Goals in compressing to a final hash value
  - Significant dimensionality reduction while retaining robustness, fragility to distinct inputs, and randomization
- Question: what is the minimum length of the final hash value (binary string) needed to meet the above goals?

For feature vectors li and lj:
  D(li, lj) < ε ⇒ C(li) = C(lj) with high probability
  D(li, lj) > δ ⇒ C(li) ≠ C(lj) with high probability
where 0 < ε < δ, and C(li), C(lj) denote the clusters to which these vectors are mapped.

22

Clustering: Possible Compression Methods

Possible Solutions

- Error correction decoding (Venkatesan et al., 2000)
  - Applicable to binary feature vectors
  - Break the vector down into segments close to the length of the codewords in a suitably chosen error correcting code
- More generally, vector quantization/clustering
  - Minimize an average distance to achieve compression close to the rate-distortion limit: minimize Σ_k Σ_{l ∈ Sk} P(l) D(l, ck), with l ∈ L (the metric space of feature vectors)
  - P(l): probability of occurrence of vector l
  - D(., .): distance metric defined on feature vectors
  - ck: codewords/cluster centers; Sk: the kth cluster
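Error correction decoding as a compressor can be sketched with the Hamming(7,4) code. The slide only asks for "a suitably chosen error correcting code", so Hamming(7,4) is an assumption. The feature vector is cut into 7-bit segments and each segment is decoded to the 4 data bits of its nearest codeword. Every segment within one bit flip of the same codeword therefore compresses to the same output.

```python
from typing import List


def hamming74_decode(seg: List[int]) -> List[int]:
    """Map a 7-bit segment to the 4 data bits of its nearest Hamming(7,4) codeword.
    Positions are 1-indexed; parity bits sit at positions 1, 2 and 4."""
    bits = list(seg)
    syndrome = 0
    for pos in range(1, 8):
        if bits[pos - 1]:
            syndrome ^= pos          # XOR of the positions of all 1-bits
    if syndrome:                     # non-zero syndrome = position of the bit to flip
        bits[syndrome - 1] ^= 1
    return [bits[2], bits[4], bits[5], bits[6]]  # data positions 3, 5, 6, 7


def ecc_compress(feature_bits: List[int]) -> List[int]:
    """Hypothetical compressor: split into 7-bit segments and decode each one."""
    if len(feature_bits) % 7:
        raise ValueError("pad the feature vector to a multiple of 7 bits")
    out: List[int] = []
    for k in range(0, len(feature_bits), 7):
        out.extend(hamming74_decode(feature_bits[k:k + 7]))
    return out
```

For instance, the codeword [0, 1, 1, 0, 0, 1, 1] and a copy with its fifth bit flipped both decode to [1, 0, 1, 1]. The compression rate is fixed at 4/7. Nothing here controls which vectors are declared distinct, which is one motivation for the clustering approach.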

23

Contribution 3: Clustering Algorithms

Is Average Distance the Appropriate Cost for the Hashing Application?

- Problems with average distance VQ
  - No guarantee that perceptually distinct feature vectors indeed map to different clusters, and no straightforward way to trade off between the two goals
  - The number of codebook vectors must be decided in advance
  - Some errors must be penalized harshly, e.g. when vectors that are really close are not clustered together, or when vectors very far apart are compressed to the same final hash value
- Define an alternate cost function for hashing
  - Develop a clustering algorithm that tries to minimize that cost
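A tiny example shows the weakness of the average-distance objective. The three vectors, their probabilities and the two centers are hypothetical. The rare vector c is assigned to its nearest center a, which gives a small average distance (0.04). Yet D(a, c) = 0.4 exceeds δ = 0.3, so two perceptually distinct vectors share a final hash value.

```python
from typing import Dict, List, Tuple

Vec = Tuple[int, ...]


def hamming(a: Vec, b: Vec) -> float:
    """Normalized Hamming distance D(., .)."""
    return sum(x != y for x, y in zip(a, b)) / len(a)


def avg_distance_cost(prob: Dict[Vec, float],
                      centers: List[Vec]) -> Tuple[float, Dict[Vec, int]]:
    """VQ objective sum_k sum_{l in S_k} P(l) D(l, c_k), each l assigned to its nearest center."""
    assign = {l: min(range(len(centers)), key=lambda k: hamming(l, centers[k])) for l in prob}
    cost = sum(p * hamming(l, centers[assign[l]]) for l, p in prob.items())
    return cost, assign


def distinct_collisions(prob: Dict[Vec, float], assign: Dict[Vec, int],
                        delta: float) -> List[Tuple[Vec, Vec]]:
    """Pairs with D > delta (perceptually distinct) that nevertheless share a cluster."""
    ls = list(prob)
    return [(a, b) for i, a in enumerate(ls) for b in ls[i + 1:]
            if assign[a] == assign[b] and hamming(a, b) > delta]


a, b, c = (0,) * 10, (1,) * 10, (1, 1, 1, 1) + (0,) * 6   # hypothetical feature vectors
prob = {a: 0.45, b: 0.45, c: 0.1}
cost, assign = avg_distance_cost(prob, centers=[a, b])
```

The average hides this error because c carries little probability mass, so nothing in the objective penalizes the collision.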

24

Contribution 3: Clustering Algorithms

Cost Function for Feature Vector Compression

- Define joint cost matrices C1 and C2 (n × n)
  - n: total number of vectors to be clustered; C(li), C(lj) denote the clusters that these vectors are mapped to
- Exponential cost
  - Ensures that a severe penalty is incurred if feature vectors that are far apart, and hence perceptually distinct, are clustered together

α > 0 and Γ > 1 are algorithm parameters.

25

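Contribution 3: Clustering Algorithms

Cost Function for Feature Vector Compression

- Further define the normalizing quantity S1; S2 is defined similarly
- Normalize to get the normalized cost matrices C̃1 and C̃2
- Then, minimize the expected cost E[C̃1] = Σ_i Σ_j C̃1(i, j) p(i) p(j), and likewise E[C̃2]
  - p(i) = p(li), p(j) = p(lj)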
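The expected-cost computation E[C̃] = Σ_i Σ_j C̃(i, j) p(i) p(j) can be evaluated for any candidate clustering. The entry forms of C1 and C2 and the normalizers S1 and S2 are not given here, so the sketch assumes them. C1 charges Γ^(αD) when a pair with D > δ shares a cluster. C2 charges Γ^(α(1 − D)) when a pair with D < ε is split. Each S is the sum of the corresponding weights over all pairs that could incur that error. Treat these forms as illustrative only.

```python
from itertools import product
from typing import Sequence, Tuple


def expected_costs(vecs: Sequence[Sequence[int]], p: Sequence[float], cluster: Sequence[int],
                   eps: float, delta: float, alpha: float = 1.0,
                   gamma: float = 2.0) -> Tuple[float, float]:
    """Return (E[C1~], E[C2~]) = sum_ij C~(i, j) p(i) p(j) for the clustering `cluster`.

    ASSUMED entry forms (illustrative):
      C1(i, j) = gamma**(alpha * D)       if D > delta and same cluster (distinct pair merged)
      C2(i, j) = gamma**(alpha * (1 - D)) if D < eps and different clusters (close pair split)
    S1, S2 = the same weights summed over every pair that could incur each error."""
    n = len(vecs)
    D = [[sum(a != b for a, b in zip(vecs[i], vecs[j])) / len(vecs[i]) for j in range(n)]
         for i in range(n)]
    pairs = [(i, j) for i, j in product(range(n), repeat=2) if i != j]
    w1 = {ij: gamma ** (alpha * D[ij[0]][ij[1]]) for ij in pairs if D[ij[0]][ij[1]] > delta}
    w2 = {ij: gamma ** (alpha * (1 - D[ij[0]][ij[1]])) for ij in pairs if D[ij[0]][ij[1]] < eps}
    s1 = sum(w1.values()) or 1.0   # guard against an empty set of candidate pairs
    s2 = sum(w2.values()) or 1.0
    e1 = sum(w / s1 * p[i] * p[j] for (i, j), w in w1.items() if cluster[i] == cluster[j])
    e2 = sum(w / s2 * p[i] * p[j] for (i, j), w in w2.items() if cluster[i] != cluster[j])
    return e1, e2
```

With three vectors at distances 0.25, 1.0 and 0.75, grouping only the close pair gives zero cost on both terms. Merging everything raises only E[C̃1]. Splitting the close pair while merging a distant one raises both.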

26

Contribution 3: Clustering Algorithms

Clustering: Hardness Claims and a Good Heuristic

- Decision version of the clustering problem
  - For a fixed number of clusters k, is there a clustering with cost less than a given constant?
  - The k-way weighted graph cut problem, known to be NP-complete, reduces to our clustering problem in log-space (Monga et al., 2004)
- A good heuristic?
  - Motivated by the stable roommate/spouse problem
  - Give preference to the bully, i.e. the strongest candidates, in ordered fashion; intuitively, this minimizes the grief
- Our clustering problem
  - The notion of strength is captured by the probability mass of the data point/feature vector

27

Contribution 3: Clustering Algorithms

Basic Clustering Algorithm (Monga et al., 2004)

Figure labels: Type I error, Type II error.

- Heuristic: select the data point with the highest probability mass as the cluster center
- For any (li, lj) in cluster Sk, D(li, lj) < ε (members lie within ε/2 of the center ck, so the triangle inequality applies)
- No errors up to this stage of the algorithm
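The greedy first stage can be sketched as follows. It assumes that the most probable unclustered point becomes a center and absorbs every unclustered point within ε/2 of it. By the triangle inequality, any two members of a cluster are then closer than ε. It also assumes a hypothetical rule that a new center must lie more than δ from all existing centers. Points rejected by that rule stay unclustered for a later stage. The exact admission and stopping rules of the published algorithm may differ.

```python
from typing import Callable, Dict, List, Tuple

Vec = Tuple[int, ...]


def norm_hamming(a: Vec, b: Vec) -> float:
    return sum(x != y for x, y in zip(a, b)) / len(a)


def greedy_stage1(prob: Dict[Vec, float], eps: float, delta: float,
                  dist: Callable[[Vec, Vec], float] = norm_hamming
                  ) -> Tuple[List[Vec], List[List[Vec]], List[Vec]]:
    """Return (centers, clusters, unclustered); strongest (most probable) points go first."""
    unclustered = sorted(prob, key=prob.get, reverse=True)
    centers: List[Vec] = []
    clusters: List[List[Vec]] = []
    for cand in list(unclustered):
        if cand not in unclustered:
            continue  # already absorbed by an earlier, more probable center
        if any(dist(cand, c) <= delta for c in centers):
            continue  # ASSUMED rule: a new center must be more than delta from every center
        # Members lie within eps/2 of the center, so any two members are closer than eps.
        members = [l for l in unclustered if dist(l, cand) < eps / 2]
        for l in members:
            unclustered.remove(l)
        centers.append(cand)
        clusters.append(members)
    return centers, clusters, unclustered
```

The points returned as `unclustered` are the ones that still need the joint-cost assignment rule.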

28

Contribution 3: Clustering Algorithms

Handling the Unclustered Data Points

Figure: Approach 1 and Approach 2.

All clusters are candidates: assign each unclustered point to the cluster that minimizes a joint cost.

29

Contribution 3: Clustering Algorithms

Clustering Algorithms Revisited

- Approach 2
  - Smoothly trades off the minimization of E[C̃1] vs. E[C̃2] via the parameter β
  - β = ½: joint minimization
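The β trade-off can be sketched as an assignment rule for the leftover points. Each leftover point, most probable first, joins the cluster that minimizes β × (added merge cost) + (1 − β) × (added split cost). The per-pair weights reuse assumed exponential forms: Γ^(αD) for a merged pair with D > δ, and Γ^(α(1 − D)) for a split pair with D < ε. The normalizers S1 and S2 are omitted, so this is an illustrative, hypothetical version of Approach 2.

```python
from typing import Dict, List, Tuple

Vec = Tuple[int, ...]


def norm_hamming(a: Vec, b: Vec) -> float:
    return sum(x != y for x, y in zip(a, b)) / len(a)


def assign_leftovers(clusters: List[List[Vec]], leftovers: List[Vec], prob: Dict[Vec, float],
                     eps: float, delta: float, beta: float = 0.5,
                     alpha: float = 1.0, gamma: float = 2.0) -> List[List[Vec]]:
    """Greedily place each leftover point in the cluster with the smallest
    beta * (added C1-type cost) + (1 - beta) * (added C2-type cost). Cost forms ASSUMED."""
    clusters = [list(members) for members in clusters]
    for x in sorted(leftovers, key=prob.get, reverse=True):
        def joint(k: int) -> float:
            c1 = c2 = 0.0
            for j, members in enumerate(clusters):
                for m in members:
                    d = norm_hamming(x, m)
                    w = prob[x] * prob[m]
                    if j == k and d > delta:       # distinct pair would be merged
                        c1 += w * gamma ** (alpha * d)
                    elif j != k and d < eps:       # close pair would be split
                        c2 += w * gamma ** (alpha * (1 - d))
            return beta * c1 + (1 - beta) * c2
        best = min(range(len(clusters)), key=joint)
        clusters[best].append(x)
    return clusters
```

Setting β close to 1 mostly guards against merging distinct vectors. Setting β close to 0 mostly guards against splitting near-identical ones. β = ½ weighs both equally.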

30

Contribution 3: Clustering Algorithms

Clustering Results

- Compress a binary feature vector of L = 240 bits
  - ε = 0.2, δ = 0.3 (normalized Hamming distance)
- At approximately the same rate, the cost is orders of magnitude lower for the proposed clustering

31

Contribution 3: Clustering Algorithms

Validating the Perceptual Significance

- Applied the two-stage hash algorithm to a natural image database of 100 images
  - For each image, 20 perceptually identical images were generated using the Stirmark benchmark software
  - Attacks included JPEG compression with varying quality factors, AWGN addition, geometric attacks (viz. small rotation and cropping), linear/non-linear filtering, etc.
- Results
  - Robustness: the final hash values for the original and distorted images were the same in over 95% of cases
  - Fragility: 1 collision in all pairings (4950) of the 100 images
  - In comparison, 40 collisions for traditional VQ and 25 for error correction decoding

More analysis
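The fragility figure counts collisions over all unordered pairs of distinct images, and 100 images give C(100, 2) = 4950 pairings. A short sketch of that count follows, on a hypothetical list of final hash values. The bucket-based version gives the same number without the quadratic loop.

```python
from collections import Counter
from itertools import combinations
from math import comb
from typing import Hashable, Sequence


def count_collisions(hashes: Sequence[Hashable]) -> int:
    """Unordered pairs of distinct images whose final hash values coincide."""
    return sum(1 for a, b in combinations(hashes, 2) if a == b)


def count_collisions_fast(hashes: Sequence[Hashable]) -> int:
    # A bucket holding m equal hash values contributes C(m, 2) colliding pairs.
    return sum(comb(m, 2) for m in Counter(hashes).values())


print(comb(100, 2))  # number of pairings among 100 images: 4950
```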

32

Outline

- Perceptual image hashing
  - Motivation and applications
- Contribution 1: A unified framework
  - Formal definition of desired hash properties/goals
  - Novel two-stage hashing algorithm
- Review of existing feature extraction techniques
- Contribution 2: Robust feature extraction
- Contribution 3: Clustering algorithms for feature vector compression
  - Randomized clustering for secure hashing
- Summary

33

Contribution 3: Clustering Algorithms

Randomized Clustering

- Heuristic for the deterministic map
  - Select the highest probability data point as the cluster center
- Randomization scheme
  - Select cluster centers probabilistically, controlled by a randomization parameter s; the selection probabilities are normalized over i, which runs over the unclustered data points
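One way to select centers probabilistically is to draw unclustered point j with probability p_j^s / Σ_i p_i^s. This particular form is an assumption for illustration. It has the two limits one would want: a large s approaches the deterministic highest-probability rule, and s = 0 picks uniformly at random. In a keyed hash the generator would be seeded from the secret key. That wiring is hypothetical here.

```python
import random
from typing import List, Sequence


def center_probabilities(p: Sequence[float], s: float) -> List[float]:
    """ASSUMED scheme: probability of picking unclustered point j is p_j**s / sum_i p_i**s."""
    w = [pj ** s for pj in p]
    total = sum(w)
    return [wj / total for wj in w]


def pick_center(p: Sequence[float], s: float, rng: random.Random) -> int:
    # rng would be seeded from the secret key K (hypothetical), making the choice keyed.
    return rng.choices(range(len(p)), weights=center_probabilities(p, s), k=1)[0]
```

For the masses [0.5, 0.3, 0.2], s = 1 selects each point with its own probability, while s = 50 picks the first point with probability above 0.999.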

34

Security via Randomization

Contribution 3: Clustering Algorithms

- Conjecture
  - Randomization makes the generation of malicious inputs harder
- Adversary model
  - U: set of all possible feature vector pairs in L; E ⊂ U: the error set for deterministic clustering
  - The adversary has complete knowledge of feature extraction and deterministic clustering, and will contrive to generate input pairs over E

Figure: clustering cost computed over the error set E.

- As randomization increases, the adversary achieves little success

35

Contribution 3: Clustering Algorithms

The Rest of the Story

- An appropriate choice of s preserves perceptual robustness while significantly enhancing security: the result of a joint crypto-signal processing approach

36

Contribution 3: Clustering Algorithms

Uniformity of the Hash Distribution

Kullback-Leibler (KL) distance of the hash distribution, measured against the uniform distribution

- The hash distribution is close to uniform for s < 1000
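The KL distance to the uniform distribution is D(P || U) = Σ_h P(h) log2(P(h) · N), which equals log2 N − H(P) for N possible hash values. A sketch estimating it from observed hash values follows. It treats the empirical frequencies as P, and `num_bins` (for example 2^b for a b-bit hash) is a hypothetical parameter. A meaningful estimate needs many samples per possible hash value.

```python
from collections import Counter
from math import log2
from typing import Hashable, Iterable


def kl_to_uniform(hashes: Iterable[Hashable], num_bins: int) -> float:
    """D(P || U) in bits, with P the empirical distribution of the observed hash values
    and U uniform over num_bins possible values. Zero means perfectly uniform."""
    counts = Counter(hashes)
    n = sum(counts.values())
    return sum((c / n) * log2((c / n) * num_bins) for c in counts.values())
```

A perfectly balanced sample gives 0. A sample concentrated on a single value gives the maximum, log2(num_bins).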

37

Summary of Contributions

- Two-stage hashing framework
  - Media-dependent feature extraction followed by (almost) media-independent clustering
- Robust feature extraction from natural images
  - Iterative feature extractor that preserves significant image geometry; features are invariant under several attacks
- Algorithms for feature vector compression
  - Novel cost function for the hashing application
  - Greedy heuristic based clustering algorithms
  - Randomized clustering for secure hashing
- Image authentication under geometric attacks (not presented)

38

Questions and Comments!