
Title: Segmentation

1

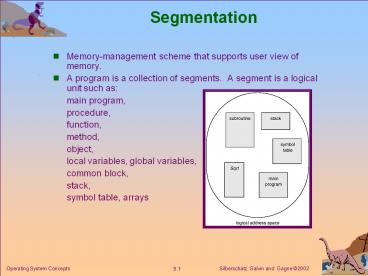

Segmentation

- Memory-management scheme that supports the user's view of memory.
- A program is a collection of segments. A segment is a logical unit such as:
  - main program,
  - procedure,
  - function,
  - method,
  - object,
  - local variables, global variables,
  - common block,
  - stack,
  - symbol table, arrays

2

Logical View of Segmentation

[Figure: segments 1 to 4 in user space mapped into physical memory space]

3

Segment Tables

- Load segments separately into memory.
- Each process has a segment table in memory that maps segment numbers (table index) to physical addresses.
- Each entry has:
  - base: the starting physical address where the segment resides in memory;
  - limit: the length of the segment.

4

Segment Table Example

5

Segmentation Addressing

- A logical address consists of a segment number s and an offset d within the segment.

6

Segmentation Registers

- Each process has a segment-table base register (STBR) and a segment-table length register (STLR) that are loaded into the MMU.
- STBR points to the segment table's location in memory.
- STLR indicates the number of segments used by a program.
- Segment number s is legal if s < STLR.
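The checks on these slides can be sketched as a small Python function. This is a minimal model, not real MMU behaviour: a list of hypothetical (base, limit) pairs plays the role of the table that STBR points to, `stlr` is the number of segments in use, and `SegmentationTrap` is an invented exception standing in for the hardware trap to the OS.

```python
class SegmentationTrap(Exception):
    """Stands in for the hardware trap to the operating system."""


def translate(segment_table, stlr, s, d):
    """Map logical address (s, d) to a physical address.

    segment_table: list of (base, limit) pairs; the list plays the role of
    the table that STBR points to. stlr: number of segments in use.
    """
    if s >= stlr:                     # segment number is legal only if s < STLR
        raise SegmentationTrap(f"segment {s} not in use (STLR={stlr})")
    base, limit = segment_table[s]
    if d >= limit:                    # offset must fall inside the segment
        raise SegmentationTrap(f"offset {d} exceeds limit {limit}")
    return base + d


# Hypothetical segment table: (base, limit) for segments 0, 1, 2.
table = [(1000, 400), (5000, 200), (3000, 800)]
print(translate(table, len(table), 1, 50))   # 5050
```

Both checks happen before the base is added, so an illegal address never reaches memory.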

7

Considerations

- Since segments vary in length, memory allocation is a dynamic storage-allocation problem.
- Allocation:
  - first fit/best fit
  - external fragmentation
  - (back to the old problems!)
- Sharing:
  - shared segments
  - same segment number
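First fit and best fit differ only in which free hole they pick. A minimal Python sketch, assuming free memory is kept as a hypothetical list of (start, length) holes; real allocators also coalesce adjacent holes, which is omitted here.

```python
def first_fit(holes, size):
    """Index of the first hole that is large enough, or None."""
    for i, (_start, length) in enumerate(holes):
        if length >= size:
            return i
    return None


def best_fit(holes, size):
    """Index of the smallest hole that is large enough, or None."""
    best = None
    for i, (_start, length) in enumerate(holes):
        if length >= size and (best is None or length < holes[best][1]):
            best = i
    return best


def allocate(holes, size, strategy):
    """Carve `size` units out of the chosen hole and return the segment's base.

    holes is a list of (start, length) pairs and is updated in place.
    Returns None when no single hole is big enough.
    """
    i = strategy(holes, size)
    if i is None:
        return None
    start, length = holes[i]
    if length == size:
        del holes[i]                               # hole used up exactly
    else:
        holes[i] = (start + size, length - size)   # shrink the hole
    return start


holes = [(0, 300), (500, 100), (800, 200)]
print(first_fit(holes, 150), best_fit(holes, 150))   # 0 2
print(allocate(holes, 400, first_fit))                # None
```

The last call fails even though 600 units are free in total: the free space is split into holes too small for the request, which is exactly external fragmentation.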

8

Memory Protection

- Protection: with each entry in the segment table, associate
  - a validation bit (validation bit = 0 ⇒ illegal segment)
  - read/write/execute privileges
- Protection bits are associated with segments; code sharing occurs at the segment level.
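A segment-table entry carrying a validation bit and rwx privileges can be modelled as below. This is a sketch only: `SegmentEntry`, `access_allowed` and the string encoding of permissions are hypothetical choices, not a real hardware format.

```python
from dataclasses import dataclass


@dataclass
class SegmentEntry:
    base: int
    limit: int
    valid: bool   # validation bit: False (0) means an illegal segment
    perms: str    # hypothetical encoding: any subset of "rwx"


def access_allowed(entry, offset, mode):
    """True if an access of kind mode ('r', 'w' or 'x') may proceed."""
    return entry.valid and 0 <= offset < entry.limit and mode in entry.perms


shared_code = SegmentEntry(base=2000, limit=500, valid=True, perms="rx")
print(access_allowed(shared_code, 10, "x"))   # True
print(access_allowed(shared_code, 10, "w"))   # False: code segment is not writable
```

Because the permissions live in the segment entry, a read-only code segment can be shared safely by giving every process an "rx" entry for it.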

9

Dynamic Linking

- Linking is postponed until execution time.
- A small piece of code, the stub, is used to locate the appropriate memory-resident library routine.
- The stub replaces itself with the address of the routine and executes the routine.
- Dynamic linking is particularly useful for libraries, which may be loaded in advance or on demand.
  - Also known as shared libraries
- Permits update of system libraries without relinking.
- I NEED TO LEARN MORE ABOUT THIS
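The stub mechanism can be mimicked in a few lines of Python. This only illustrates the idea: `RESIDENT_LIBRARY` is a hypothetical table of memory-resident routines (with `math.sqrt` standing in for a library routine), and `call_table` is a hypothetical table through which the program makes its calls. It is loosely analogous to lazy binding through a procedure linkage table, not how any particular loader works.

```python
import math

# Hypothetical "memory-resident library": routine name -> routine.
# math.sqrt stands in for a shared-library routine.
RESIDENT_LIBRARY = {"sqrt": math.sqrt}

resolutions = []   # records each time a stub does a lookup


def make_stub(call_table, name):
    """Build a stub that locates `name`, patches the call table, then runs it."""
    def stub(*args):
        routine = RESIDENT_LIBRARY[name]   # locate the memory-resident routine
        resolutions.append(name)
        call_table[name] = routine         # the stub replaces itself
        return routine(*args)              # ...and executes the routine
    return stub


# The program calls library routines only through this table.
call_table = {}
call_table["sqrt"] = make_stub(call_table, "sqrt")

print(call_table["sqrt"](16.0))   # 4.0 (first call goes through the stub)
print(call_table["sqrt"](9.0))    # 3.0 (direct call, no lookup)
print(resolutions)                # ['sqrt']
```

Only the first call pays for the lookup; swapping the entry in `RESIDENT_LIBRARY` before that call would pick up a new library version without touching the program, which is the "update without relinking" point.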

10

Dynamic Loading

- A routine is not loaded until it is called.
- When it is loaded, address tables in the resident portion are updated.
- Better memory-space utilization: an unused routine is never loaded.
- Useful when large amounts of code are needed to handle infrequently occurring cases.

11

Paging

- Divide physical memory into fixed-sized blocks called frames (size is a power of 2, between 512 bytes and 8192 bytes).
- Divide logical memory into blocks of the same size called pages.
- Keep track of all free frames.
- To run a program of size n pages, find n free frames and load the program (for now, assume all pages are loaded).
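Loading an n-page program is then just a matter of taking n frames from the free-frame list and recording them in a new page table. A minimal sketch, assuming a hypothetical list of free frame numbers and a first-come order of use:

```python
def load_program(free_frames, n_pages):
    """Give each of n_pages pages its own free frame; return the page table.

    free_frames is a list of free frame numbers and is updated in place.
    Returns None (and allocates nothing) if too few frames are free.
    """
    if len(free_frames) < n_pages:
        return None
    page_table = [free_frames.pop(0) for _ in range(n_pages)]  # page i -> frame
    return page_table


free = [14, 13, 18, 20, 15]          # hypothetical free-frame list
print(load_program(free, 4))         # [14, 13, 18, 20]
print(free)                          # [15]
```

The frames need not be contiguous or in order; any free frame will do, which is why paging avoids external fragmentation.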

12

Logical View of Paging

13

Page Tables

- Each process has a page table in memory that maps page numbers (table index) to physical addresses.

14

Paging Addressing

- Logical addresses are a page number and an offset within the page.
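Because the page size is a power of 2, the page number and offset are just the high and low bits of the logical address. A small sketch, assuming a 4 KB page size (one of the sizes in the range given on the Paging slide):

```python
PAGE_SIZE = 4096                           # assumed page size (a power of 2)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 bits of offset for 4 KB pages


def split(logical):
    """Split a logical address into (page number, offset)."""
    return logical >> OFFSET_BITS, logical & (PAGE_SIZE - 1)


def join(frame, offset):
    """Build a physical address from a frame number and an offset."""
    return (frame << OFFSET_BITS) | offset


page, offset = split(0x12345)
print(hex(page), hex(offset))   # 0x12 0x345
```

No division or addition is needed: the hardware only has to replace the page-number bits with the frame-number bits.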

15

Page Table Example

16

Paging Registers

- Each process has a page-table base register (PTBR) and a page-table length register (PTLR) that are loaded into the MMU.
- PTBR points to the page table in memory.
- PTLR indicates the number of page-table entries.
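Putting the page table and the PTLR check together gives the full paged translation. A minimal sketch, assuming 4 KB pages; the list `page_table` stands in for the table PTBR points to, and `AddressingError` is an invented exception standing in for the trap.

```python
PAGE_SIZE = 4096   # assumed page size


class AddressingError(Exception):
    """Stands in for the trap raised when a page number is out of range."""


def translate(page_table, ptlr, logical):
    """Translate a logical address using a page table and a PTLR value.

    page_table plays the role of the table that PTBR points to: entry p
    holds the frame number for page p.
    """
    page, offset = divmod(logical, PAGE_SIZE)
    if page >= ptlr:                       # beyond the valid part of the table
        raise AddressingError(f"page {page} >= PTLR {ptlr}")
    return page_table[page] * PAGE_SIZE + offset


pt = [5, 9, 2]                              # hypothetical page table
print(translate(pt, len(pt), 4096 + 10))    # 36874 (frame 9, offset 10)
```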

17

Free Frames

[Figure: free-frame list before allocation and after allocation]

18

Real Paging Examples

- Example from Geoff's notes (x 2)
- Multiply offsets by page table width, address width
- WORK OUT DETAILS

19

Memory Protection

- Can access only inside frames anyway.
- PTLR protects against accessing non-existent, or not-in-use, parts of a page table.
- An alternative is to attach a valid-invalid bit to each entry in the page table:
  - valid indicates that the associated page is in the process's logical address space, and is thus a legal page;
  - invalid indicates that the page is not in the process's logical address space.
- Finer-grained protection is achieved using rwx bits for each page.
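A page-table entry with a valid-invalid bit and per-page rwx bits can be sketched as follows. `PageTableEntry`, `access_ok` and the string encoding of permissions are hypothetical; the length check mirrors what PTLR would catch.

```python
from dataclasses import dataclass


@dataclass
class PageTableEntry:
    frame: int
    valid: bool   # valid-invalid bit
    perms: str    # hypothetical rwx encoding, any subset of "rwx"


def access_ok(page_table, page, mode):
    """True if page is in range, marked valid, and allows mode ('r'/'w'/'x')."""
    if page >= len(page_table):            # what a PTLR check would catch
        return False
    entry = page_table[page]
    return entry.valid and mode in entry.perms


pt = [PageTableEntry(2, True, "rx"),      # code page
      PageTableEntry(3, True, "rw"),      # data page
      PageTableEntry(0, False, "")]       # not in the logical address space
print(access_ok(pt, 0, "x"), access_ok(pt, 0, "w"), access_ok(pt, 2, "r"))
# True False False
```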

20

Valid (v) or Invalid (i) Bit In A Page Table

21

Relocation and Swapping

- Relocation requires update of page table

- Swapping is an extension of this

22

TLBs

- In simple paging, every data/instruction access requires two memory accesses: one for the page table and one for the data/instruction.
- The two-memory-access problem can be solved by the use of a special fast-lookup hardware cache called associative memory or translation look-aside buffers (TLBs).
- Associative memory: parallel search over (Page, Frame) pairs.
- Address translation (A′, A″):
  - if A′ (the page number) is in an associative register, get the frame number A″ out;
  - otherwise, get the frame number from the page table in memory.
- A fast cache is an alternative.
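The hit/miss logic of a TLB can be modelled in software. This is only a sketch: an `OrderedDict` stands in for the parallel-search hardware, and LRU replacement is an assumption (real TLBs use various replacement policies).

```python
from collections import OrderedDict


class TLB:
    """A small TLB model: page -> frame, with LRU replacement (an assumption)."""

    def __init__(self, size):
        self.size = size
        self.entries = OrderedDict()   # stands in for the parallel-search hardware
        self.hits = 0
        self.misses = 0

    def lookup(self, page, page_table):
        if page in self.entries:                # hit: frame comes straight out
            self.hits += 1
            self.entries.move_to_end(page)
            return self.entries[page]
        self.misses += 1
        frame = page_table[page]                # miss: consult the page table in memory
        self.entries[page] = frame
        if len(self.entries) > self.size:
            self.entries.popitem(last=False)    # evict the least recently used entry
        return frame


page_table = [7, 3, 9, 1]            # hypothetical
tlb = TLB(size=2)
frames = [tlb.lookup(p, page_table) for p in [0, 1, 0, 2, 1]]
print(frames, tlb.hits, tlb.misses)  # [7, 3, 7, 9, 3] 1 4
```

Only misses cost the extra page-table access, so the hit ratio determines how close paging gets to one memory access per reference.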

23

Paging Hardware With TLB

24

TLB Performance

- Effective access time
  - Assume the memory cycle time is X time units.
  - Associative lookup = ε time units.
  - Hit ratio = α: the fraction of times that a page number is found in the associative registers.
  - Effective Access Time (EAT):
    $\text{EAT} = (X + \varepsilon)\alpha + (2X + \varepsilon)(1 - \alpha) = 2X + \varepsilon - \alpha X$
  - OK if ε is small and α is large, e.g., ε = 0.2 and α = 95%.
- TLB Reach
  - The amount of memory accessible from the TLB.
  - TLB Reach = (TLB Size) × (Page Size)
  - Ideally, the working set of each process is stored in the TLB.
- Examples
  - 68030: 22-entry TLB
  - 80486: 32-register TLB, claims a 98% hit rate
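Both formulas are easy to evaluate directly. A small sketch: a hit costs X + ε and a miss costs 2X + ε, weighted by the hit ratio α; the 4 KB page size used for the TLB-reach example is an assumption.

```python
def effective_access_time(x, eps, alpha):
    """EAT: a hit costs x + eps, a miss costs 2x + eps (page table + data)."""
    return (x + eps) * alpha + (2 * x + eps) * (1 - alpha)


def tlb_reach(tlb_entries, page_size):
    """Bytes of memory mapped by a full TLB."""
    return tlb_entries * page_size


print(round(effective_access_time(1.0, 0.2, 0.95), 4))   # 1.25
print(tlb_reach(32, 4096))                                # 131072 bytes = 128 KB
```

With X = 1, ε = 0.2 and α = 0.95, the EAT is 2 + 0.2 − 0.95 = 1.25, i.e. about 25% slower than a single memory access rather than twice as slow.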

25

Considerations

- Fragmentation vs. page-table size and TLB hits
- Page size selection
  - internal fragmentation ⇒ smaller pages
  - table size ⇒ larger pages (32-bit address = 4 GB; 4 KB frames ⇒ 2^20 entries @ 4 bytes = 4 MB table!)
  - i386: 4K pages
  - 68030: up to 32K pages
  - Newer hardware is tending to larger page sizes, up to 16 MB.
- Multiple page sizes: this allows applications that require larger page sizes the opportunity to use them without an increase in fragmentation.
  - UltraSparc supports 8K, 64K, 512K, and 4M.
  - Requires OS support.
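The 4 MB figure follows from simple arithmetic: the number of entries is the address-space size divided by the page size, times the bytes per entry. A sketch, assuming a single-level table and 4-byte entries by default:

```python
def page_table_size(address_bits, page_size, entry_bytes=4):
    """Return (number of entries, table size in bytes) for a single-level table."""
    entries = 2 ** address_bits // page_size
    return entries, entries * entry_bytes


print(page_table_size(32, 4 * 1024))          # (1048576, 4194304): 2^20 entries, 4 MB
print(page_table_size(32, 4 * 1024 * 1024))   # (1024, 4096): 4 MB pages, 4 KB table
```

Going from 4 KB to 4 MB pages shrinks the table by a factor of 1024, at the price of far more internal fragmentation per partly used page.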

26

Two-Level Paging Example

- A logical address (on a 32-bit machine (4 GB address space) with a 4K page size (12-bit offsets)) is divided into:
  - a page number consisting of 20 bits;
  - a page offset consisting of 12 bits.
- 2^20 pages at 4 bytes per entry ⇒ a 4 MB page table.
- Since the page table is paged, the page number is further divided into:
  - a 10-bit page number;
  - a 10-bit page offset.
- Thus, a logical address is laid out as p1 (10 bits) | p2 (10 bits) | d (12 bits), where p1 and p2 together form the page number and d is the page offset. Here p1 is an index into the outer page table, and p2 is the displacement within the page of the outer page table.
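The 10/10/12 split is a pair of shifts and masks. A minimal sketch for a 32-bit address; the function name is hypothetical.

```python
def split_two_level(addr):
    """Split a 32-bit logical address into (p1, p2, d) using 10/10/12 bits."""
    d = addr & 0xFFF              # low 12 bits: page offset
    p2 = (addr >> 12) & 0x3FF     # middle 10 bits
    p1 = (addr >> 22) & 0x3FF     # top 10 bits: index into the outer page table
    return p1, p2, d


print(split_two_level(0x00C05007))   # (3, 5, 7)
```

Each 10-bit index selects one of 1024 four-byte entries, so every piece of the paged page table fits in exactly one 4 KB page.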

27

Address-Translation Scheme

- Address-translation scheme for a two-level paging architecture
  - p1 and p2 may be found in a TLB
  - Example from Geoff's notes
- Examples
  - SPARC: 3-level hierarchy (32-bit)
  - 68030: 4-level hierarchy (32-bit)
- Multiple memory accesses, up to 7 for 64-bit addressing
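A two-level walk can be sketched with nested dictionaries standing in for the outer table and the inner page-table pages. This is an illustration under stated assumptions: the 10/10/12 split from the previous slide, a hypothetical sparsely populated table, and an invented `TranslationError` for the trap.

```python
class TranslationError(Exception):
    """Stands in for the trap when an outer or inner entry is missing."""


def translate_two_level(outer_table, addr):
    """Walk a two-level page table for a 32-bit address (10/10/12 split).

    outer_table: dict p1 -> inner table; inner table: dict p2 -> frame.
    Dicts are an illustrative stand-in for page-sized arrays in memory.
    """
    p1, p2, d = (addr >> 22) & 0x3FF, (addr >> 12) & 0x3FF, addr & 0xFFF
    inner = outer_table.get(p1)        # memory access 1: outer page table
    if inner is None:
        raise TranslationError(f"no inner table for p1={p1}")
    frame = inner.get(p2)              # memory access 2: inner page table
    if frame is None:
        raise TranslationError(f"page not mapped: p1={p1}, p2={p2}")
    return (frame << 12) | d           # access 3 would then fetch the data


outer = {0: {0: 5, 1: 6}, 3: {5: 42}}   # hypothetical, sparsely populated
print(translate_two_level(outer, 0x00C05007))   # 172039 (frame 42, offset 7)
```

Without a TLB hit, every reference pays two table accesses plus the data access; each extra level adds one more, which is where the "up to 7" figure for deep 64-bit hierarchies comes from.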

28

Hashed Page Tables

- Common in address spaces > 32 bits.
- The page number is hashed into a page table. This page table contains a chain of elements hashing to the same location.
- Page numbers are compared along this chain.
- More efficient than multi-level tables for small processes.
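A hashed page table with chaining can be sketched in a few lines. The hash function (page number modulo the bucket count) and the class name are assumptions chosen to make collisions easy to see.

```python
class HashedPageTable:
    """Hashed page table with chaining; the hash (page % buckets) is an assumption."""

    def __init__(self, n_buckets):
        self.buckets = [[] for _ in range(n_buckets)]

    def _chain(self, page):
        return self.buckets[page % len(self.buckets)]

    def insert(self, page, frame):
        self._chain(page).append((page, frame))

    def lookup(self, page):
        for p, frame in self._chain(page):   # compare page numbers along the chain
            if p == page:
                return frame
        return None                          # not mapped


hpt = HashedPageTable(n_buckets=4)
hpt.insert(1, 10)
hpt.insert(5, 50)      # 5 % 4 == 1: same chain as page 1
print(hpt.lookup(5), hpt.lookup(9))   # 50 None
```

The table size depends on the number of mapped pages, not on the size of the address space, which is why the scheme suits address spaces larger than 32 bits.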

29

Inverted Page Table

- For very large virtual address spaces (see VM).
- One entry for each frame of memory.
- Each entry consists of the virtual address of the page stored in that real memory location, with information about the process that owns that page, e.g., its PID.
- The same virtual address may appear for several processes, but the PID tells them apart, so each (PID, virtual address) pair is unique.
- Decreases memory needed to store each page table, but increases time needed to search the table when a page reference occurs.
- Use a hash table to limit the search to one or at most a few page-table entries.
- Used by IBM RT and PowerPC.
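An inverted page table is indexed by frame, so translation means searching for the (PID, page) pair; the index where it is found is the frame number. A sketch with hypothetical names, showing both the linear search and a dictionary standing in for the hash table that limits the search:

```python
class InvertedPageTable:
    """One entry per physical frame holding (pid, page), or None if free."""

    def __init__(self, n_frames):
        self.entries = [None] * n_frames
        self.hash_index = {}   # (pid, page) -> frame, to avoid a full search

    def set_entry(self, frame, pid, page):
        self.entries[frame] = (pid, page)
        self.hash_index[(pid, page)] = frame

    def lookup_linear(self, pid, page):
        """Search every entry; the matching index is the frame number."""
        for frame, entry in enumerate(self.entries):
            if entry == (pid, page):
                return frame
        return None

    def lookup_hashed(self, pid, page):
        return self.hash_index.get((pid, page))


ipt = InvertedPageTable(n_frames=8)
ipt.set_entry(3, pid=1, page=0)
ipt.set_entry(5, pid=2, page=0)    # same page number, different process
print(ipt.lookup_linear(2, 0), ipt.lookup_hashed(1, 0))   # 5 3
```

The table has one entry per frame regardless of how many processes exist, but a linear lookup is proportional to physical memory size; the hash index trades a little extra memory for near-constant lookup time.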

30

Inverted Page Table Architecture

31

Shared Pages

- Shared code
  - One copy of read-only (reentrant) code is shared among processes (e.g., text editors, compilers, window systems).
  - Shared code must appear in the same location in the logical address space of all processes.
- Private code and data
  - Each process keeps a separate copy of the code and data.
  - The pages for the private code and data can appear anywhere in the logical address space.
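Sharing shows up directly in the page tables: the shared pages map to the same frames in every process. A sketch with a hypothetical layout in which pages 0 to 2 hold a shared, read-only editor and page 3 holds each process's private data:

```python
# Hypothetical layout: pages 0-2 hold a shared, read-only editor; page 3 holds
# each process's private data. Entry p is the frame number for page p.
page_table_p1 = [3, 4, 6, 1]
page_table_p2 = [3, 4, 6, 7]


def frames_used(*page_tables):
    """Distinct physical frames needed by all the given page tables."""
    return set().union(*page_tables)


print(len(frames_used(page_table_p1, page_table_p2)))   # 5 instead of 8
```

The shared code occupies the same page numbers in both tables, matching the rule that shared code must appear at the same logical location; the private data page can map anywhere.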

32

Shared Pages Example

33

Segmentation with Paging MULTICS

- The MULTICS system solved problems of external fragmentation and lengthy search times by paging the segments.
- The solution differs from pure segmentation in that the segment-table entry contains not the base address of the segment, but rather the base address of a page table for this segment.
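Segmentation with paging combines the two earlier translations: the segment entry leads to a page table, and the segment offset is then split into a page number and an offset. A minimal sketch, assuming a 1024-byte page size purely for illustration and an invented `SegmentTrap` exception; this is not a model of the actual MULTICS hardware formats.

```python
PAGE_SIZE = 1024   # assumed page size, for illustration only


class SegmentTrap(Exception):
    """Stands in for the trap on an out-of-range offset."""


def translate(segment_table, s, d):
    """Segmentation with paging: segment_table[s] = (length, page_table).

    Instead of a base address, the segment entry leads to that segment's
    own page table; the offset d is then split into (page, offset).
    """
    length, page_table = segment_table[s]
    if d >= length:
        raise SegmentTrap(f"offset {d} beyond segment length {length}")
    page, offset = divmod(d, PAGE_SIZE)
    return page_table[page] * PAGE_SIZE + offset


segments = [(3000, [8, 2, 5])]          # hypothetical: one 3000-byte segment in 3 pages
print(translate(segments, 0, 2100))     # 5172 (page 2 -> frame 5, offset 52)
```

Since each segment is stored in whole frames, placing it no longer requires a contiguous hole, which removes the external fragmentation of pure segmentation.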

34

MULTICS Address Translation Scheme

35

Intel 80386 Address Translation