Functional Programming PowerPoint PPT Presentation


Title: Functional Programming

1

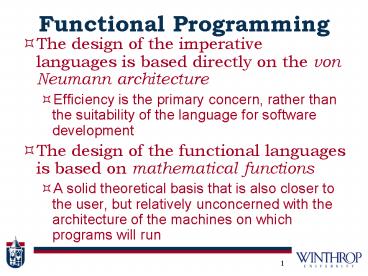

Functional Programming

- The design of the imperative languages is based directly on the von Neumann architecture

- Efficiency is the primary concern, rather than the suitability of the language for software development

- The design of the functional languages is based on mathematical functions

- A solid theoretical basis that is also closer to the user, but relatively unconcerned with the architecture of the machines on which programs will run

2

Mathematical Functions

- A mathematical function is a mapping of members of one set, called the domain set, to another set, called the range set

- A lambda expression specifies the parameter(s) and the mapping of a function in the following form:

- λ(x) x * x * x

- for the function cube(x) = x * x * x

3

Lambda Expressions

- Lambda expressions describe nameless functions

- Lambda expressions are applied to parameter(s) by placing the parameter(s) after the expression

- e.g., (λ(x) x * x * x)(2)

- which evaluates to 8

4

Functional Forms

- A higher-order function, or functional form, is one that either takes functions as parameters or yields a function as its result, or both

5

Function Composition

- A functional form that takes two functions as parameters and yields a function whose value is the first actual parameter function applied to the application of the second

- Form: h ≡ f ∘ g

- which means h(x) ≡ f(g(x))

- For f(x) ≡ x + 2 and g(x) ≡ 3 * x,

- h ≡ f ∘ g yields (3 * x) + 2
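Composition can be sketched in C, with the caveat that C has no closures. In this sketch the composed function is represented as a pair of function pointers, and the names `IntFn`, `Composed`, `compose`, and `applyComposed` are illustrative assumptions, as are the definitions f(x) = x + 2 and g(x) = 3 * x.

```c
/* Example functions, assumed here to be f(x) = x + 2 and g(x) = 3 * x. */
static int f(int x) { return x + 2; }
static int g(int x) { return 3 * x; }

typedef int (*IntFn)(int);

/* C has no closures, so the composed function is stored as data:
   the pair (outer, inner) stands for outer composed with inner. */
typedef struct {
    IntFn outer;
    IntFn inner;
} Composed;

/* Build h = outer o inner. */
Composed compose(IntFn outer, IntFn inner) {
    Composed h = { outer, inner };
    return h;
}

/* Apply h to x, i.e. compute outer(inner(x)). */
int applyComposed(Composed h, int x) {
    return h.outer(h.inner(x));
}
```

With `Composed h = compose(f, g);`, the call `applyComposed(h, 4)` computes f(g(4)) = (3 * 4) + 2.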

6

Apply-to-all

- A functional form that takes a single function as a parameter and yields a list of values obtained by applying the given function to each element of a list of parameters

- Form: α

- For h(x) ≡ x * x

- α(h, (2, 3, 4)) yields (4, 9, 16)
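A minimal C sketch of apply-to-all follows. It passes the function as a function pointer and writes the results into a caller-supplied array; the names `applyToAll` and `square` are assumptions made for illustration.

```c
#include <stddef.h>

/* h(x) = x * x, the function from the example. */
static int square(int x) { return x * x; }

/* Apply h to each of the n elements of in, storing the results in out. */
void applyToAll(int (*h)(int), const int *in, int *out, size_t n) {
    for (size_t i = 0; i < n; i++) {
        out[i] = h(in[i]);
    }
}
```

Calling `applyToAll(square, in, out, 3)` with `in` holding 2, 3, 4 fills `out` with the squares of those values.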

7

Fundamentals of Functional Programming Languages

- The objective of the design of an FPL is to mimic mathematical functions to the greatest extent possible

8

Fundamentals of Functional Programming Languages

- The basic process of computation is fundamentally different in an FPL than in an imperative language

- In an imperative language, operations are done and the results are stored in variables for later use

- Management of variables is a constant concern and source of complexity for imperative programming

- In an FPL, variables are not necessary, as is the case in mathematics

9

Referential Transparency

- In an FPL, the evaluation of a function always produces the same result given the same parameters

10

Lexical and Syntax Analysis

- Chapter 4

11

Lexical analysis

- The lexical analyzer strips off all the comments

- Tokens: the smallest units of a programming language

- Tokens can be recognized by regular expressions

12

Lexical analysis

- Regular expression for an integer constant:

- [0-9]+ (+ means one or more occurrences)

- [0-9] stands for any one of 0, 1, 2, 3, 4, 5, 6, 7, 8, 9

- A regular expression matches a string of characters (or not)

13

Regular expressions

- (all these are tokens)

- a (single letter)

- matches a and nothing else

- [abc] (set)

- matches any single char inside the brackets

- [a-zA-Z] (ranges)

- matches all upper and lower case letters

14

Regular expressions

- If r and s are regular expressions, then so are the following:

- (r) same as r

- rs (concatenation)

- matches something matching r, immediately followed by something matching s

- r|s (or)

- matches anything matching either r or s

- r+ matches one or more matches of r in a row

- r* matches zero or more matches of r in a row

- r? matches zero or one matches of r

15

Precedence in regular expressions

- Highest: ( )

- *, +, ?

- rs (juxtaposition)

- Lowest: r|s

16

Regular expressions

- Variable names

- [A-Za-z_][A-Za-z_0-9]*

- Integer constants

- [0-9]+

- Real constants

- [0-9]+\.[0-9]+([eE][+-]?[0-9]+)? (Pascal)

- Example: 3.14e0 (integer part 3, the point, fraction 14, then e and exponent 0)

- In C, these are also allowed:

- .314e1

- 314.e2

- 314.e-2
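These token patterns can be tried out with the POSIX `regcomp`/`regexec` interface. The sketch below assumes a POSIX system; the helper `matchesWhole` and the macro names are illustrative, and the real-constant pattern is the Pascal-style one, anchored with `^` and `$` so that the whole string must match.

```c
#include <regex.h>
#include <stddef.h>

/* Token patterns, written as POSIX extended regular expressions. */
#define IDENT_RE "^[A-Za-z_][A-Za-z_0-9]*$"
#define INT_RE   "^[0-9]+$"
#define REAL_RE  "^[0-9]+\\.[0-9]+([eE][+-]?[0-9]+)?$"

/* Returns 1 if text matches pattern, 0 if it does not,
   and -1 if the pattern itself fails to compile. */
int matchesWhole(const char *pattern, const char *text) {
    regex_t re;
    if (regcomp(&re, pattern, REG_EXTENDED | REG_NOSUB) != 0) {
        return -1;
    }
    int rc = regexec(&re, text, 0, NULL, 0);
    regfree(&re);
    return rc == 0;
}
```

Under the Pascal-style pattern, `3.14e0` matches while the C-only forms `.314e1` and `314.e2` do not, because the pattern requires digits on both sides of the point.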

17

Lexical analysis

- Language implementation systems must analyze source code, regardless of the specific implementation approach

- Nearly all syntax analysis is based on a formal description of the syntax of the source language (BNF)

18

Syntax Analysis

- The syntax analysis portion of a language processor nearly always consists of two parts:

- A low-level part called a lexical analyzer (mathematically, a finite automaton based on a regular grammar)

- A high-level part called a syntax analyzer, or parser (mathematically, a push-down automaton based on a context-free grammar, or BNF)

19

Using BNF to Describe Syntax

- Provides a clear and concise syntax description

- The parser can be based directly on the BNF

- Parsers based on BNF are easy to maintain

20

Reasons to Separate Lexical and Syntax Analysis

- Simplicity - less complex approaches can be used for lexical analysis; separating them simplifies the parser

- Efficiency - separation allows optimization of the lexical analyzer

- Portability - parts of the lexical analyzer may not be portable, but the parser always is portable

21

Lexical Analysis

- A lexical analyzer is a pattern matcher for character strings

- A lexical analyzer is a front-end for the parser

- Identifies substrings of the source program that belong together - lexemes

- Lexemes match a character pattern, which is associated with a lexical category called a token

- sum is a lexeme; its token may be IDENT

22

Lexical Analysis

- The lexical analyzer is usually a function that is called by the parser when it needs the next token

- Three approaches to building a lexical analyzer:

- Write a formal description of the tokens and use a software tool that constructs table-driven lexical analyzers given such a description

- Design a state diagram that describes the tokens and write a program that implements the state diagram

- Design a state diagram that describes the tokens and hand-construct a table-driven implementation of the state diagram

23

State Diagram Design

- A naïve state diagram would have a transition from every state on every character in the source language - such a diagram would be very large!

24

Lexical Analysis (cont.)

- In many cases, transitions can be combined to simplify the state diagram

- When recognizing an identifier, all uppercase and lowercase letters are equivalent

- Use a character class that includes all letters

- When recognizing an integer literal, all digits are equivalent - use a digit class

25

Lexical Analysis

- Reserved words and identifiers can be recognized together (rather than having a part of the diagram for each reserved word)

- Use a table lookup to determine whether a possible identifier is in fact a reserved word

26

Lexical Analysis

- Convenient utility subprograms:

- getChar - gets the next character of input, puts it in nextChar, determines its class and puts the class in charClass

- addChar - puts the character from nextChar into the place the lexeme is being accumulated, lexeme

- lookup - determines whether the string in lexeme is a reserved word (returns a code)

27

State Diagram

28

Lexical Analysis

- Implementation (assume initialization):

    int lex() {
      getChar();
      switch (charClass) {
        case LETTER:
          addChar();
          getChar();
          while (charClass == LETTER || charClass == DIGIT) {
            addChar();
            getChar();
          }
          return lookup(lexeme);
          break;

29

Lexical Analysis

        case DIGIT:
          addChar();
          getChar();
          while (charClass == DIGIT) {
            addChar();
            getChar();
          }
          return INT_LIT;
          break;
      } /* End of switch */
    } /* End of function lex */
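The fragment above leaves its helpers and initialization out. The following is a self-contained sketch of one way the pieces could fit together, not the deck's actual implementation. It reads from a string rather than a file, keeps one character of lookahead in nextChar between calls (so, unlike the slide version, lex does not call getChar on entry), and skips blanks. The token codes, `initLexer`, `UNKNOWN_TOK`, `EOF_TOK`, and the single reserved word `for` are assumptions made for illustration.

```c
#include <ctype.h>
#include <string.h>

/* Token codes (values are arbitrary) and character classes. */
enum { INT_LIT = 10, IDENT = 11, FOR_CODE = 30, UNKNOWN_TOK = 99, EOF_TOK = -1 };
enum { LETTER, DIGIT, OTHER, END };

static const char *input;   /* remaining source text */
static char nextChar;       /* one character of lookahead */
static int charClass;
static char lexeme[100];
static int lexLen;

/* Get the next character of input and determine its class. */
static void getChar(void) {
    nextChar = *input;
    if (nextChar == '\0') { charClass = END; return; }
    input++;
    if (isalpha((unsigned char)nextChar)) charClass = LETTER;
    else if (isdigit((unsigned char)nextChar)) charClass = DIGIT;
    else charClass = OTHER;
}

/* Append nextChar to the lexeme being accumulated. */
static void addChar(void) {
    if (lexLen < (int)sizeof lexeme - 1) {
        lexeme[lexLen++] = nextChar;
        lexeme[lexLen] = '\0';
    }
}

/* Table lookup, reduced here to a single reserved word. */
static int lookup(const char *s) {
    return strcmp(s, "for") == 0 ? FOR_CODE : IDENT;
}

/* Prime the one-character lookahead. */
void initLexer(const char *src) { input = src; getChar(); }

int lex(void) {
    lexLen = 0;
    lexeme[0] = '\0';
    while (charClass != END && isspace((unsigned char)nextChar)) getChar();
    switch (charClass) {
    case LETTER:
        addChar(); getChar();
        while (charClass == LETTER || charClass == DIGIT) { addChar(); getChar(); }
        return lookup(lexeme);
    case DIGIT:
        addChar(); getChar();
        while (charClass == DIGIT) { addChar(); getChar(); }
        return INT_LIT;
    case END:
        return EOF_TOK;
    default:
        addChar(); getChar();
        return UNKNOWN_TOK;
    }
}
```

After `initLexer("for sum1 42")`, successive calls to `lex()` return the reserved-word code, an identifier code (with `lexeme` holding `sum1`), an integer-literal code, and finally the end-of-input code.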

30

The Parsing Problem

- Goals of the parser, given an input program:

- Find all syntax errors; for each, produce an appropriate diagnostic message, and recover quickly

- Produce the parse tree, or at least a trace of the parse tree, for the program

31

The Parsing Problem

- Two categories of parsers:

- Top down - produce the parse tree, beginning at the root

- Order is that of a leftmost derivation

- Traces or builds the parse tree in preorder

- Bottom up - produce the parse tree, beginning at the leaves

- Order is that of the reverse of a rightmost derivation

- Parsers look only one token ahead in the input

32

Top-down parsing

- Builds the parse tree from the root down

- Easier to write a top-down parser by hand

- Root node → leaves

- Abstract → concrete

- Uses grammar left → right

- Works by "guessing"

33

Bottom-up parsing

- Parse tree is built in bottom-up order (post-order)

- yacc, bison: bottom-up parser creators

- Read from bottom

- Leaves → root node

- Concrete → abstract

- Uses grammar right → left

- Works by "pattern matching"

34

Parsing

- A top-down parser traces or builds the parse tree in preorder.

- A preorder traversal of a parse tree begins with the root.

- Each node is visited before its branches are followed. Branches are followed in left-to-right order.

- Leftmost derivation.

- Right recursive

- A bottom-up parser constructs a parse tree by beginning at the leaves and progressing toward the root.

- Shift-reduce

- Rightmost derivation.

- Left recursive

35

Parsing

- Gt: S → ε | aS | bS

- Gb: S → ε | Sa | Sb

[Figure: two parse trees over the terminals a and b, one under Gt (right-recursive) and one under Gb (left-recursive), each ending in an ε leaf]

36

The Parsing Problem

- Top-down parsers

- Given a sentential form, xAα, the parser must choose the correct A-rule to get the next sentential form in the leftmost derivation, using only the first token produced by A

- The most common top-down parsing algorithms:

- Recursive descent - a coded implementation

- LL parsers - table-driven implementation

37

The Parsing Problem

- Bottom-up parsers

- Given a right sentential form, α, determine what substring of α is the right-hand side of the rule in the grammar that must be reduced to produce the previous sentential form in the rightmost derivation

- The most common bottom-up parsing algorithms are in the LR family

38

The Parsing Problem

- The complexity of parsing

- Parsers that work for any unambiguous grammar are complex and inefficient (O(n^3), where n is the length of the input)

- Compilers use parsers that only work for a subset of all unambiguous grammars, but do it in linear time (O(n), where n is the length of the input)

39

Recursive-Descent Parsing

- There is a subprogram for each nonterminal in the grammar, which can parse sentences that can be generated by that nonterminal

- EBNF is ideally suited for being the basis for a recursive-descent parser, because EBNF minimizes the number of nonterminals

40

Recursive-Descent Parsing

- A grammar for simple expressions:

- <expr> → <term> {(+ | -) <term>}

- <term> → <factor> {(* | /) <factor>}

- <factor> → id | ( <expr> )

41

Recursive-Descent Parsing

- Assume we have a lexical analyzer named lex, which puts the next token code in nextToken

- The coding process when there is only one RHS:

- For each terminal symbol in the RHS, compare it with the next input token; if they match, continue, else there is an error

- For each nonterminal symbol in the RHS, call its associated parsing subprogram

42

Recursive-Descent Parsing

    /* Function expr
       Parses strings in the language
       generated by the rule:
       <expr> → <term> {(+ | -) <term>}
     */
    void expr() {
      /* Parse the first term */
      term();

43

Recursive-Descent Parsing

      /* As long as the next token is + or -, call
         lex to get the next token, and parse the
         next term */
      while (nextToken == PLUS_CODE ||
             nextToken == MINUS_CODE) {
        lex();
        term();
      }
    }

- This particular routine does not detect errors

- Convention: Every parsing routine leaves the next token in nextToken

44

Recursive-Descent Parsing

- A nonterminal that has more than one RHS requires an initial process to determine which RHS it is to parse

- The correct RHS is chosen on the basis of the next token of input (the lookahead)

- The next token is compared with the first token that can be generated by each RHS until a match is found

- If no match is found, it is a syntax error

45

Recursive-Descent Parsing

    /* Function factor
       Parses strings in the language
       generated by the rule:
       <factor> -> id | (<expr>) */
    void factor() {
      /* Determine which RHS */
      if (nextToken == ID_CODE)
        /* For the RHS id, just call lex */
        lex();

46

Recursive-Descent Parsing

      /* If the RHS is (<expr>), call lex to pass
         over the left parenthesis, call expr, and
         check for the right parenthesis */
      else if (nextToken == LEFT_PAREN_CODE) {
        lex();
        expr();
        if (nextToken == RIGHT_PAREN_CODE)
          lex();
        else
          error();
      } /* End of else if (nextToken == ... */
      else error(); /* Neither RHS matches */
    }
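The routines for expr and factor can be assembled into a small complete recognizer. The sketch below is one possible assembly, under the assumption that the grammar is <expr> → <term> {(+ | -) <term>}, <term> → <factor> {(* | /) <factor>}, <factor> → id | ( <expr> ). The token codes, the string-based lex, the error counter, and the driver `parses` are illustrative assumptions.

```c
#include <ctype.h>

enum { ID_CODE, PLUS_CODE, MINUS_CODE, MULT_CODE, DIV_CODE,
       LEFT_PAREN_CODE, RIGHT_PAREN_CODE, END_CODE, BAD_CODE };

static const char *src;   /* remaining input */
static int nextToken;     /* every routine leaves the next token here */
static int errors;

/* A tiny lexer: identifiers, operators, parentheses, blanks. */
static void lex(void) {
    while (*src == ' ') src++;
    char c = *src;
    if (c == '\0') { nextToken = END_CODE; return; }
    if (isalpha((unsigned char)c)) {
        while (isalnum((unsigned char)*src)) src++;
        nextToken = ID_CODE;
        return;
    }
    src++;
    switch (c) {
    case '+': nextToken = PLUS_CODE; break;
    case '-': nextToken = MINUS_CODE; break;
    case '*': nextToken = MULT_CODE; break;
    case '/': nextToken = DIV_CODE; break;
    case '(': nextToken = LEFT_PAREN_CODE; break;
    case ')': nextToken = RIGHT_PAREN_CODE; break;
    default:  nextToken = BAD_CODE; break;
    }
}

static void error(void) { errors++; }

static void expr(void);

/* <factor> -> id | ( <expr> ) */
static void factor(void) {
    if (nextToken == ID_CODE) {
        lex();
    } else if (nextToken == LEFT_PAREN_CODE) {
        lex();
        expr();
        if (nextToken == RIGHT_PAREN_CODE) lex();
        else error();
    } else {
        error();   /* neither RHS matches */
    }
}

/* <term> -> <factor> {(* | /) <factor>} */
static void term(void) {
    factor();
    while (nextToken == MULT_CODE || nextToken == DIV_CODE) { lex(); factor(); }
}

/* <expr> -> <term> {(+ | -) <term>} */
static void expr(void) {
    term();
    while (nextToken == PLUS_CODE || nextToken == MINUS_CODE) { lex(); term(); }
}

/* Returns 1 if text is a complete, error-free expression. */
int parses(const char *text) {
    src = text;
    errors = 0;
    lex();
    expr();
    return errors == 0 && nextToken == END_CODE;
}
```

For instance, `parses("a + b * (c - d)")` succeeds, while `parses("a + * b")` and `parses("(a")` report failure.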

47

Recursive-Descent Parsing

- The LL grammar class

- The left recursion problem

- If a grammar has left recursion, either direct or indirect, it cannot be the basis for a top-down parser

- A grammar can be modified to remove left recursion

48

Top Down Parsing

- <expr> → <expr> + <term> | <term>

- <term> → <term> * <factor> | <factor>

- <factor> → '(' <expr> ')' | num | ident

- Note: Knowing something about lexical analysis, we can define num and ident as terminal symbols

- Exact definitions of num and ident are details left to lexical analysis

49

Start

<expr>

Input: 1 + 2 * 3

50

First Guess

[Figure: <expr> expanded once with the left-recursive rule, giving a child <expr> and a <term>; input: 1 + 2 * 3]

51

Second Guess

[Figure: the leftmost <expr> expanded again with the same rule; no input consumed]

52

Third Guess

[Figure: the leftmost <expr> expanded a third time; no input consumed]

53

Fourth Guess

[Figure: the leftmost <expr> expanded a fourth time; no input consumed]

54

Maybe we just guessed poorly?

55

Should have picked <term>

- <expr> → <expr> + <term> | <term>

- <term> → <term> * <factor> | <factor>

- <factor> → '(' <expr> ')' | num | ident

[Figure: parse tree <expr> → <term> → <factor> → <ident>; input: 1 + 2 * 3]

When we reach a bad choice we just back up and try again...

56

Should have picked <term>

- <expr> → <expr> + <term> | <term>

- <term> → <term> * <factor> | <factor>

- <factor> → '(' <expr> ')' | num | ident

[Figure: parse tree <expr> → <term> → <factor> → <num>; input: 1 + 2 * 3]

57

Problem

- The grammar as written is not of a type that can be used successfully with top-down parsing

- The grammar contains left-recursive productions:

- <expr> → <expr> + <term> | <term>

- <term> → <term> * <factor> | <factor>

- <factor> → '(' <expr> ')' | num | ident

58

Recursion

- Recall recursion:

- Check for terminating condition

- Recurse

- Not:

- Recurse

- Check for terminating condition

- Fix is to make the grammar right-recursive.

59

Making it right-recursive

- <expr> → <expr> + <term> | <term>

[Figure: derivation trees built with the left-recursive rule, yielding <term>, <term> + <term>, and <term> + <term> + <term>]

60

Parsing Problem

- G3 = <{expr, term, factor}, {0, 1, .., 9, +, -, *, /, (, )}, expr, P> where P is as follows:

- <expr> → <expr> + <term> | <expr> - <term> | <term>

- <term> → <term> * <factor> | <term> / <factor> | <factor>

- <factor> → id | num | ( <expr> )

61

Parsing Problem

- Top-down grammar for arithmetic expressions

- G4 = <{expr, e_tail, term, t_tail, F}, {0, 1, .., 9, +, -, *, /, (, )}, expr, P> where P is as follows:

- <expr> → <term><e_tail>

- <e_tail> → ε | + <term><e_tail> | - <term><e_tail> (e_tail means possibly more terms)

- <term> → <factor><t_tail>

- <t_tail> → ε | * <factor><t_tail> | / <factor><t_tail> (t_tail means possibly more factors)

- <factor> → id | num | ( <expr> )
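A grammar with tail nonterminals maps onto one C function per nonterminal. The sketch below assumes the productions <expr> → <term><e_tail>, <e_tail> → ε | + <term><e_tail> | - <term><e_tail>, <term> → <factor><t_tail>, <t_tail> → ε | * <factor><t_tail> | / <factor><t_tail>, <factor> → num | ( <expr> ). Passing the value computed so far into each tail routine is a design choice of this sketch, not something the slides prescribe; it keeps - and / left-associative even though the grammar is right-recursive. The function names and the driver `evaluate` are assumptions.

```c
#include <ctype.h>

static const char *p;   /* current position in the input */
static int ok;          /* cleared on the first syntax error */

static void skip(void) { while (*p == ' ') p++; }

static long expr(void);

/* <factor> -> num | ( <expr> ) */
static long factor(void) {
    skip();
    if (isdigit((unsigned char)*p)) {
        long v = 0;
        while (isdigit((unsigned char)*p)) v = v * 10 + (*p++ - '0');
        return v;
    }
    if (*p == '(') {
        p++;
        long v = expr();
        skip();
        if (*p == ')') p++; else ok = 0;
        return v;
    }
    ok = 0;
    return 0;
}

/* <t_tail> -> e | * <factor><t_tail> | / <factor><t_tail> */
static long t_tail(long left) {
    skip();
    if (*p == '*') { p++; return t_tail(left * factor()); }
    if (*p == '/') {
        p++;
        long r = factor();
        if (r == 0) { ok = 0; return 0; }   /* treat division by zero as an error */
        return t_tail(left / r);
    }
    return left;   /* the empty alternative: no more factors */
}

/* <term> -> <factor><t_tail> */
static long term(void) { return t_tail(factor()); }

/* <e_tail> -> e | + <term><e_tail> | - <term><e_tail> */
static long e_tail(long left) {
    skip();
    if (*p == '+') { p++; return e_tail(left + term()); }
    if (*p == '-') { p++; return e_tail(left - term()); }
    return left;   /* the empty alternative: no more terms */
}

/* <expr> -> <term><e_tail> */
static long expr(void) { return e_tail(term()); }

/* Returns 1 and stores the value if text is a valid expression. */
int evaluate(const char *text, long *result) {
    p = text;
    ok = 1;
    *result = expr();
    skip();
    return ok && *p == '\0';
}
```

No routine calls itself before consuming input, so the left-recursion problem does not arise.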

62

Parsing Problem

- 2 3 4 5 eof

- 7 eof

[Figure: parse tree for the input under G4, with interior nodes E, T, and F, leaves 2, 3, 4, 5, and ε leaves where a tail derives nothing]

63

Parsing Problem

- 2 / (3 - 5) eof

[Figure: parse tree for the input under G4, with interior nodes E, T, and F, leaves 2, /, (, 3, -, 5, ), and ε leaves where a tail derives nothing]

64

Recursive-Descent Parsing

- The other characteristic of grammars that disallows top-down parsing is the lack of pairwise disjointness

- The inability to determine the correct RHS on the basis of one token of lookahead

- Def: FIRST(α) = {a | α =>* aβ}

- (If α =>* ε, ε is in FIRST(α))

65

Recursive-Descent Parsing

- Pairwise disjointness test:

- For each nonterminal, A, in the grammar that has more than one RHS, for each pair of rules, A → αi and A → αj, it must be true that

- FIRST(αi) ∩ FIRST(αj) = ∅

- Examples:

- A → a | bB | cAb (passes)

- A → a | aB (fails: both RHSs begin with a)
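For rules like the two examples, where every RHS begins with a terminal, FIRST of an RHS is just its first symbol, so the test reduces to comparing first characters. The sketch below covers only that special case; a general implementation would need to compute FIRST sets through nonterminals. The function name `pairwiseDisjoint` and the encoding of each RHS as a string are assumptions.

```c
#include <stddef.h>

/* Pairwise disjointness test for one nonterminal, restricted to the
   case where every RHS starts with a terminal, so that FIRST(rhs)
   is simply the first character of rhs.
   Returns 1 if all FIRST sets are pairwise disjoint, 0 otherwise. */
int pairwiseDisjoint(const char *rhs[], size_t n) {
    for (size_t i = 0; i < n; i++) {
        for (size_t j = i + 1; j < n; j++) {
            if (rhs[i][0] == rhs[j][0]) {
                return 0;   /* two RHSs share a first terminal */
            }
        }
    }
    return 1;
}
```

The RHS list a, bB, cAb passes the test, while a, aB fails it.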

66

Recursive-Descent Parsing

- Left factoring can resolve the problem

- Replace

- <variable> → identifier | identifier [<expression>]

- with

- <variable> → identifier <new>

- <new> → ε | [<expression>]

- or

- <variable> → identifier [[<expression>]]

- (the outer brackets are metasymbols of EBNF)

67

Parser Classification

- Parsers are broadly broken down into

- LL - Top down parsers

- L - Scan Left to Right

- L - Traces leftmost derivation of input string

- LR - Bottom up parsers

- L - Scan left to right

- R - Traces rightmost derivation of input string (in reverse)

- Typical notation

- LL(1), LL(0), LR(1), LR(k)

- Number (k) refers to maximum look ahead

- Lower is better!

68

Parser Classification

- Thus k is maximum height of stack

- k = 0: no stack; k = 1: single variable; k > 1: stack

- Writing a grammar with small k is not easy!

69

Tradeoff

- Grammar ? Parser

- LL Parsers are a subset of LR Parsers

- Anything parsable with LL is parsable with LR.

Reverse is not true.

[Figure: LL drawn as a subset inside LR]

70

[Figure sequence (slides 70-94): step-by-step top-down parse of the input 1 + 2 * 3 using the routines expr, term, factor, t_tail, and e_tail]

- expr calls term, which calls factor; factor finds num (1) and succeeds

- t_tail finds nothing and succeeds, so term succeeds

- e_tail finds +, then calls term; factor finds num (2) and succeeds

- t_tail finds *, then calls factor, which finds num (3); the nested t_tail finds nothing and succeeds

- t_tail, term, and e_tail succeed in turn, so expr succeeds

95

What happened?

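[Figure: the resulting parse tree for 1 + 2 * 3. <expr> derives <term> <e_tail>; the first <term> derives <factor> <t_tail> with num (1) and an empty tail; <e_tail> derives + <term> <e_tail>; the second <term> derives <factor> <t_tail> with num (2), and its <t_tail> derives * <factor> <t_tail> with num (3); the remaining tails derive ε]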

96

Bottom-up Parsing

- The parsing problem is finding the correct RHS in a right-sentential form to reduce to get the previous right-sentential form in the derivation

97

Bottom-up Parsing

- Intuition about handles:

- Def: β is the handle of the right sentential form γ = αβw if and only if S =>*rm αAw =>rm αβw

- Def: β is a phrase of the right sentential form γ if and only if S =>* γ = α1Aα2 =>+ α1βα2

- Def: β is a simple phrase of the right sentential form γ if and only if S =>* γ = α1Aα2 => α1βα2

98

Bottom-up Parsing

- Intuition about handles:

- The handle of a right sentential form is its leftmost simple phrase

- Given a parse tree, it is now easy to find the handle

- Parsing can be thought of as handle pruning

99

Bottom-up Parsing

- Shift-reduce algorithms

- Reduce is the action of replacing the handle on the top of the parse stack with its corresponding LHS

- Shift is the action of moving the next token to the top of the parse stack

100

Bottom-up Parsing

- A parser table can be generated from a given grammar with a tool, e.g., yacc

101

Introduction

- Shift-reduce parsing

- A general style of bottom-up parsing

- Attempts to construct a parse tree for an input string

- Beginning at the leaves (the bottom)

- Working up towards the root (the top)

- A process of reducing the input string to the start symbol

102

Introduction

- At each reduction step, a substring matching the RHS of a production is replaced by the symbol on the LHS

- If the substring is chosen correctly at each step, a rightmost derivation is traced in reverse

103

Example

- Consider the following grammar:

- S → aABe, A → Abc | b, B → d

- The sentence abbcde can be reduced to S by the following steps:

- abbcde ⇒ aAbcde ⇒ aAde ⇒ aABe ⇒ S

- rightmost derivation (in reverse)

104

Introduction

- Two problems to be solved

- How to locate the substring to be reduced?

- What production to choose if there are many

productions with that substring on the RHS?

105

Stack Implementation (of Shift-Reduce Parsing)

- A stack is used to hold grammar symbols and an input buffer to hold the input string

- $ is used to mark the bottom of the stack and the end of the input

- Initially the stack is empty and we have a string, say w, as input ($ is end of string)

- STACK: $    INPUT: w$

106

Stack Implementation

- The parser shifts zero or more input symbols onto the stack until a substring β (called a handle) is on top of the stack

- Then β is reduced to the LHS of a production

- Repeat until (an error is seen or) the stack has the start symbol and input is empty

- STACK: $S    INPUT: $

At this point, parser halts with success
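The shift and reduce steps can be illustrated with a deliberately naive C sketch for the grammar S → aABe, A → Abc | b, B → d. The reduce checks are hand-coded for this one grammar (for example, b is reduced to A only when it directly follows the initial a), so this is a toy illustration rather than a general LR algorithm. The names `endsWith`, `reduce`, `tryReduce`, and `accepts` are assumptions, and the $ markers are left implicit.

```c
#include <string.h>

/* Returns 1 if the stack (kept as a string) ends with suffix. */
static int endsWith(const char *stack, const char *suffix) {
    size_t n = strlen(stack), m = strlen(suffix);
    return n >= m && strcmp(stack + n - m, suffix) == 0;
}

/* Replace the top len symbols of the stack with the nonterminal lhs. */
static void reduce(char *stack, size_t len, char lhs) {
    size_t n = strlen(stack);
    stack[n - len] = lhs;
    stack[n - len + 1] = '\0';
}

/* Try each production; the conditions are specific to this grammar. */
static int tryReduce(char *stack) {
    if (endsWith(stack, "aABe"))  { reduce(stack, 4, 'S'); return 1; }
    if (endsWith(stack, "Abc"))   { reduce(stack, 3, 'A'); return 1; }
    if (strcmp(stack, "ab") == 0) { reduce(stack, 1, 'A'); return 1; }
    if (endsWith(stack, "d"))     { reduce(stack, 1, 'B'); return 1; }
    return 0;
}

/* Returns 1 if input reduces to the start symbol S. */
int accepts(const char *input) {
    char stack[64] = "";
    size_t top = 0;
    for (;;) {
        if (tryReduce(stack)) { top = strlen(stack); continue; }
        if (*input == '\0' || top + 1 >= sizeof stack) break;
        stack[top++] = *input++;   /* shift the next input symbol */
        stack[top] = '\0';
    }
    return strcmp(stack, "S") == 0;
}
```

On the input abbcde the stack passes through a, ab, aA, aAb, aAbc, aA, aAd, aAB, aABe, and finally S.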

107

Example

- Suppose we have the (ambiguous) CFG

- E → E + E | E * E | (E) | id

- Consider the input string id1 + id2 * id3 that can be derived (rightmost) as

- E ⇒ E + E ⇒ E + E * E ⇒ E + E * id3 ⇒ E + id2 * id3 ⇒ id1 + id2 * id3

- Show the actions of a shift-reduce parser

108


109

Actions

- Shift: shift next symbol onto top of stack

- Reduce: locate the left end of a handle within the stack (right end is the top) and decide the non-terminal to replace handle

- Accept: announce successful completion

- Error: discover syntax error and call error recovery routine

110

Conflicts during Parsing

- Shift-reduce conflict

- Cannot decide whether to shift or reduce

- Reduce-reduce conflict

- Cannot decide which of the several reductions to make (multiple productions to choose from)

111

Conflict Resolution

- Conflict resolution by adapting the parsing algorithm (e.g., in parser generators)

- Shift-reduce conflict

- Resolve in favor of shift

- Reduce-reduce conflict

- Use the production that appears earlier

112

Bottom-up Parsing

- Advantages of LR parsers:

- They will work for nearly all grammars that describe programming languages.

- They work on a larger class of grammars than other bottom-up algorithms, but are as efficient as any other bottom-up parser.

- They can detect syntax errors as soon as it is possible.

- The LR class of grammars is a superset of the class parsable by LL parsers.

113

Bottom-up Parsing

- LR parsers must be constructed with a tool

- Knuth's insight: A bottom-up parser could use the entire history of the parse, up to the current point, to make parsing decisions

- There are only a finite and relatively small number of different parse situations that could have occurred, so the history can be stored in a parser state, on the parse stack

114

Bottom-up Parsing

- An LR configuration stores the state of an LR parser

- (S0 X1 S1 X2 S2 … Xm Sm, ai ai+1 … an $)

115

Bottom-up Parsing

- LR parsers are table driven, where the table has two components, an ACTION table and a GOTO table

- The ACTION table specifies the action of the parser, given the parser state and the next token

- Rows are state names; columns are terminals

- The GOTO table specifies which state to put on top of the parse stack after a reduction action is done

- Rows are state names; columns are nonterminals

116

Structure of An LR Parser

117

Bottom-up Parsing

- Initial configuration: (S0, a1 … an $)

- Parser actions:

- If ACTION[Sm, ai] = Shift S, the next configuration is

- (S0 X1 S1 X2 S2 … Xm Sm ai S, ai+1 … an $)

- If ACTION[Sm, ai] = Reduce A → β and S = GOTO[Sm-r, A], where r = the length of β, the next configuration is

- (S0 X1 S1 X2 S2 … Xm-r Sm-r A S, ai ai+1 … an $)

118

Bottom-up Parsing

- Parser actions (continued):

- If ACTION[Sm, ai] = Accept, the parse is complete and no errors were found.

- If ACTION[Sm, ai] = Error, the parser calls an error-handling routine.
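The four actions fit into a short table-driven loop. The sketch below is for an illustrative grammar that is not from the slides, S → ( S ) | x, with a hand-built SLR table of six states. As a simplification, the stack holds only states rather than interleaved symbols and states, which is enough for a recognizer. The table encoding (positive = shift to that state, negative = reduce by that rule number) and all names are assumptions.

```c
/* Terminal columns: ( ) x and end of input. */
enum { T_LP, T_RP, T_X, T_END, NUM_TERMINALS };
enum { ERR = 0, ACC = 100 };

/* ACTION table for S -> ( S ) [rule 1] | x [rule 2].
   Positive n: shift and go to state n. Negative -k: reduce by rule k. */
static const int ACTION[6][NUM_TERMINALS] = {
    /* state 0 */ { 2,   ERR, 3,   ERR },
    /* state 1 */ { ERR, ERR, ERR, ACC },
    /* state 2 */ { 2,   ERR, 3,   ERR },
    /* state 3 */ { ERR, -2,  ERR, -2  },
    /* state 4 */ { ERR, 5,   ERR, ERR },
    /* state 5 */ { ERR, -1,  ERR, -1  },
};

/* GOTO table; S is the only nonterminal, so one column suffices. */
static const int GOTO_S[6] = { 1, 0, 4, 0, 0, 0 };

/* Length of the RHS of each rule (index 0 unused). */
static const int RHS_LEN[3] = { 0, 3, 1 };

static int column(char c) {
    switch (c) {
    case '(':  return T_LP;
    case ')':  return T_RP;
    case 'x':  return T_X;
    case '\0': return T_END;
    default:   return -1;
    }
}

/* Returns 1 if input is accepted, 0 on a syntax error. */
int lrParse(const char *input) {
    int stack[128];
    int top = 0;
    stack[0] = 0;                       /* initial state S0 */
    for (;;) {
        int col = column(*input);
        if (col < 0) return 0;
        int act = ACTION[stack[top]][col];
        if (act == ACC) return 1;
        if (act == ERR) return 0;
        if (act > 0) {                  /* shift */
            if (top + 1 >= 128) return 0;
            stack[++top] = act;
            input++;
        } else {                        /* reduce by rule -act */
            top -= RHS_LEN[-act];       /* pop r states */
            int next = GOTO_S[stack[top]];
            stack[++top] = next;        /* push GOTO[Sm-r, A] */
        }
    }
}
```

For example, `lrParse("((x))")` accepts, while `lrParse("(x")` reaches an Error entry once the input runs out.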

119

LR Parsing Table

120

LR Parsing Introduction

- An efficient bottom-up parsing technique

- Can parse a large set of CFGs

- Technique is called LR(k) parsing

- L for left-to-right scanning of input

- R for rightmost derivation (in reverse)

- k for number of tokens of look-ahead

- (when k is omitted, it implies k = 1)

121

LL(k) vs. LR(k)

- LL(k): must predict which production to use having seen only the first k tokens of the RHS

- Works only with some grammars

- But simple algorithm (can construct by hand)

- LR(k): more powerful

- Can postpone the decision until it has seen the tokens of the entire RHS of a production and k more beyond

122

LR(k)

- Can recognize virtually all programming language constructs (if a CFG can be given)

- Most general non-backtracking shift-reduce method known, but can be implemented efficiently

- The class of grammars that can be parsed is a superset of the grammars parsed by LL(k)

- Can detect syntax errors as soon as possible

123

LR(k)

- Main drawback: too tedious to do by hand for typical programming language grammars

- We need an LR parser generator

- Many available:

- Yacc (yet another compiler compiler) or bison for C/C++ environment

- CUP (Construction of Useful Parsers) for Java environment; JavaCC is another example

- We write the grammar and the generator produces the parser for that grammar