Tony Doyle, University of Glasgow

1

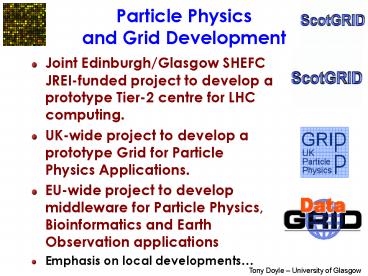

Particle Physics and Grid Development

- Joint Edinburgh/Glasgow SHEFC JREI-funded project to develop a prototype Tier-2 centre for LHC computing.
- UK-wide project to develop a prototype Grid for Particle Physics Applications.
- EU-wide project to develop middleware for Particle Physics, Bioinformatics and Earth Observation applications.
- Emphasis on local developments.

2

Outline

- Introduction

- ScotGrid

- Motivation and Overview

- Data Structures in Particle Physics

- How Does the Grid Work?

- Middleware Development

- Grid Data Management

- Testbed Status

- Is the Middleware Robust?

- Tier-1 and -2 Centre Resources

- GridPP Testbed to Production

- Summary

3

ScotGRID

- ScotGRID Processing nodes at Glasgow
  - 59 IBM X Series 330, dual 1 GHz Pentium III, 2 GB memory
  - 2 IBM X Series 340, dual 1 GHz Pentium III, 2 GB memory and dual ethernet
  - 3 IBM X Series 340, dual 1 GHz Pentium III, 2 GB memory and 100 + 1000 Mbit/s ethernet
  - 1 TB disk
  - LTO/Ultrium Tape Library
  - Cisco ethernet switches
- ScotGRID Storage at Edinburgh
  - IBM X Series 370 PIII Xeon with 512 MB memory, 32 x 512 MB RAM
  - 70 x 73.4 GB IBM FC Hot-Swap HDD

Applications

Middleware

Hardware
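The hardware list above can be tallied to get the headline capacity of the Glasgow farm and the raw disk at Edinburgh. This is a minimal sketch using only the counts on the slide; it assumes every Glasgow node is dual-CPU with 2 GB (as listed) and treats the Edinburgh figure as raw capacity before any RAID or filesystem overhead.

```python
# (model, number of nodes, CPUs per node, GB memory per node), as listed on the slide
glasgow_nodes = [
    ("IBM X Series 330", 59, 2, 2),
    ("IBM X Series 340", 2, 2, 2),
    ("IBM X Series 340", 3, 2, 2),
]

total_cpus = sum(n * cpus for _, n, cpus, _ in glasgow_nodes)
total_memory_gb = sum(n * mem for _, n, _, mem in glasgow_nodes)

# Raw Edinburgh disk: 70 drives of 73.4 GB (no RAID or formatting overhead assumed)
edinburgh_raw_disk_gb = 70 * 73.4

print(total_cpus, total_memory_gb, f"{edinburgh_raw_disk_gb:.1f}")  # 128 128 5138.0
```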

4

Starting Point (Dec 2000)

[Timeline figure: 2001 to 2003]

5

Rare Phenomena, Huge Background

[Figure: all interactions compared with the Higgs, 9 orders of magnitude apart]

6

Data Hierarchy

RAW, ESD, AOD, TAG

RAW

- Recorded by DAQ
- Triggered events
- Detector digitisation
- 2 MB/event

7

LHC Computing Challenge

- One bunch crossing per 25 ns
- 100 triggers per second
- Each event is 1 MByte

[Figure: tiered data flow]

- Online System: PBytes/sec in, 100 MBytes/sec out to the Offline Farm (20 TIPS)
- Offline Farm → Tier 0: 100 MBytes/sec
- Tier 0: CERN Computer Centre (>20 TIPS), HPSS
- Tier 0 → Tier 1: Gbits/sec or Air Freight
- Tier 1: RAL, US, French and Italian Regional Centres, each with HPSS
- Tier 2: Tier2 Centres, 1 TIPS each
- Tier 2 → Tier 3: Gbits/sec
- Tier 3: Institutes (0.25 TIPS), each with a physics data cache. Physicists work on analysis channels. Each institute has 10 physicists working on one or more channels. Data for these channels should be cached by the institute server.
- Tier 3 → Tier 4: 100 - 1000 Mbits/sec
- Tier 4: Workstations
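The trigger figures on this slide fix the rates that the tiers must absorb. The arithmetic is sketched below. The slide gives no running time, so the yearly volume uses an assumed 10^7 seconds of data-taking per year (a common rule of thumb, not a number from this talk).

```python
bunch_spacing_s = 25e-9   # one bunch crossing per 25 ns
trigger_rate_hz = 100     # 100 triggers per second
event_size_mb = 1.0       # each event is 1 MByte

crossing_rate_hz = round(1 / bunch_spacing_s)         # 40 MHz of bunch crossings
rejection = crossing_rate_hz / trigger_rate_hz        # crossings per recorded event
recording_rate_mb_s = trigger_rate_hz * event_size_mb # rate into Tier 0

ASSUMED_LIVE_SECONDS_PER_YEAR = 1e7  # assumption, not stated on the slide
raw_per_year_pb = recording_rate_mb_s * ASSUMED_LIVE_SECONDS_PER_YEAR / 1e9  # 1 PB = 1e9 MB

print(crossing_rate_hz, rejection, recording_rate_mb_s, raw_per_year_pb)
# 40000000 400000.0 100.0 1.0
```

The 100 MBytes/sec result matches the Online System and Tier 0 link rates quoted in the figure.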

8

Events... to Files... to Events

[Figure: Events 1, 2 and 3 traced through the data files held at each tier]

- RAW data files: Tier-0 (International)
- ESD data files: Tier-1 (National)
- AOD data files: Tier-2 (Regional)
- TAG data: Tier-3 (Local)

Interesting Events List
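The path from events to files and back to an "Interesting Events List" can be illustrated with a toy selection. It scans compact TAG records at the local tier, then maps the chosen events back to the AOD files that hold them. This is a sketch under assumptions: the `TagRecord` fields (`n_muons`, `aod_file`) and the file names are hypothetical and do not describe any experiment's real TAG format.

```python
from dataclasses import dataclass

# Tier at which each data format is held, as drawn on the slide
TIER_OF_FORMAT = {"RAW": "Tier-0", "ESD": "Tier-1", "AOD": "Tier-2", "TAG": "Tier-3"}

@dataclass(frozen=True)
class TagRecord:
    event_id: int
    n_muons: int    # hypothetical summary quantity stored in the TAG
    aod_file: str   # hypothetical pointer back to the AOD file holding the event

def interesting_events(tags, predicate):
    """Scan the small TAG records and return the IDs of selected events."""
    return [t.event_id for t in tags if predicate(t)]

def files_to_fetch(tags, event_ids):
    """Map selected events back to the de-duplicated, sorted AOD files containing them."""
    wanted = set(event_ids)
    return sorted({t.aod_file for t in tags if t.event_id in wanted})

tags = [
    TagRecord(1, 0, "aod_001.root"),
    TagRecord(2, 2, "aod_001.root"),
    TagRecord(3, 4, "aod_002.root"),
]
selected = interesting_events(tags, lambda t: t.n_muons >= 2)
print(selected)                        # [2, 3]
print(files_to_fetch(tags, selected))  # ['aod_001.root', 'aod_002.root']
print(TIER_OF_FORMAT["AOD"])           # Tier-2
```

The design point is that the selection runs on the smallest format. Only the files that actually contain interesting events are then requested from the tier that stores them.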

9

Application Interfaces under development

10

How Does the Grid Work?

0. Web User Interface
1. Authentication: grid-proxy-init
2. Job submission: dg-job-submit
3. Monitoring and control: dg-job-status, dg-job-cancel, dg-job-get-output
4. Data publication and replication: globus-url-copy, GDMP
5. Resource scheduling: use of Mass Storage Systems; JDL, sandboxes, storage elements
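Job submission in step 2 takes a JDL file that names the executable and its input and output sandboxes. The helper below writes a minimal one. It is a sketch: `make_jdl` is hypothetical. The attribute names (`Executable`, `Arguments`, `StdOutput`, `StdError`, `InputSandbox`, `OutputSandbox`) follow the EDG JDL conventions as I understand them and should be checked against the EDG job-description documentation. The command forms in the trailing comments are likewise assumed.

```python
def quoted_list(items):
    """Render a sequence as a JDL list literal, e.g. {"a", "b"}."""
    return "{" + ", ".join(f'"{item}"' for item in items) + "}"

def make_jdl(executable, arguments="", input_sandbox=(), stdout="std.out", stderr="std.err"):
    """Return the text of a minimal JDL job description (hypothetical helper)."""
    lines = [f'Executable = "{executable}";']
    if arguments:
        lines.append(f'Arguments = "{arguments}";')
    lines.append(f'StdOutput = "{stdout}";')
    lines.append(f'StdError = "{stderr}";')
    if input_sandbox:
        # Files shipped from the user interface to the worker node with the job
        lines.append(f"InputSandbox = {quoted_list(input_sandbox)};")
    # Files brought back to the user when the output is retrieved
    lines.append(f"OutputSandbox = {quoted_list([stdout, stderr])};")
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    print(make_jdl("/bin/hostname"), end="")
    # Save the text as hostname.jdl, then from the user interface (forms assumed):
    #   grid-proxy-init
    #   dg-job-submit hostname.jdl
    #   dg-job-status <job id>
    #   dg-job-get-output <job id>
```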

11

Middleware Development

12

Grid Data Management

- Secure access to metadata
  - metadata: where are the files on the grid?
  - database client interface
  - grid service using standard web services
  - develop with UK e-science programme
  - Input to OGSA-DAI
- Optimised file replication
  - simulations required
  - economic models using CPU, disk, network inputs
  - OptorSim
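The metadata question "where are the files on the grid?" amounts to mapping a logical file name to its physical replicas and then choosing one. A toy in-memory version is sketched below. It is not the EDG replica catalogue or the OGSA-DAI interface. The `site:/path` naming, the site names and the bandwidth figures are hypothetical. Picking the replica with the shortest estimated transfer time is a deliberately simple stand-in for the economic models (CPU, disk, network inputs) studied with OptorSim.

```python
from collections import defaultdict

class ReplicaCatalogue:
    """Toy catalogue mapping a logical file name (LFN) to its physical replicas (PFNs)."""

    def __init__(self):
        self._replicas = defaultdict(set)

    def register(self, lfn, pfn):
        self._replicas[lfn].add(pfn)

    def lookup(self, lfn):
        # .get() avoids creating empty entries for unknown LFNs
        return sorted(self._replicas.get(lfn, set()))

def site_of(pfn):
    """Hypothetical PFN convention: 'site:/path/to/file'."""
    return pfn.split(":", 1)[0]

def best_replica(catalogue, lfn, size_mb, bandwidth_mbit_s):
    """Return the replica with the shortest estimated transfer time (size / bandwidth)."""
    candidates = [p for p in catalogue.lookup(lfn) if site_of(p) in bandwidth_mbit_s]
    if not candidates:
        raise LookupError(f"no reachable replica of {lfn}")
    # MB * 8 = Mbit; Mbit / (Mbit/s) = seconds
    return min(candidates, key=lambda p: size_mb * 8 / bandwidth_mbit_s[site_of(p)])

catalogue = ReplicaCatalogue()
catalogue.register("lfn:aod_0001", "cern:/store/aod_0001")
catalogue.register("lfn:aod_0001", "ral:/store/aod_0001")
print(best_replica(catalogue, "lfn:aod_0001", 500, {"cern": 155, "ral": 622}))
# ral:/store/aod_0001
```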

13

Is the Middleware Robust?

- Code Base (1/3 Mloc)
- Software Evaluation Process
- Testbed Infrastructure: Unit Test → Build → Integration → Certification → Production
- Code Development Platforms
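The evaluation chain from unit test to production acts as a series of gates. A release only moves on when the current stage passes. A minimal sketch of that promotion logic follows. The stage names come from the slide, but the check functions and release labels are hypothetical placeholders for real test suites.

```python
# Stages named on the slide; the pass/fail checks used below are hypothetical.
GATES = ["Unit Test", "Build", "Integration", "Certification"]

def furthest_stage(release, checks):
    """Run each gate in order; report the first failure, or 'Production' if all pass."""
    for gate in GATES:
        if not checks[gate](release):
            return f"stopped at {gate}"
    return "Production"

all_pass = {gate: (lambda r: True) for gate in GATES}
print(furthest_stage("candidate-1", all_pass))  # Production

flaky = dict(all_pass, Integration=lambda r: False)
print(furthest_stage("candidate-2", flaky))     # stopped at Integration
```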

14

Testbed Status, 16th May 2003

UK-wide development using EU-DataGrid tools (v1.47). Not yet robust, but sufficient for prototype development. See http://www.gridpp.ac.uk/map/

15

Tier-2 Web-Based Monitoring

Prototype

[Figure panels: Accumulated CPU Use; Total Disk Use; Instantaneous CPU Use; Documentation]

ScotGrid reached its 300,000th processing hour on Sunday 30th March 2003.

16

use in 2003 by week
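The monitoring views (instantaneous CPU use, accumulated CPU use, use by week) can all be derived from periodic samples of busy CPUs. The sketch below shows one way to do this. It is not a description of how the ScotGrid pages are implemented, and the sample data (100 busy CPUs, one sample per day from Monday 6 January 2003) is hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta

def accumulated_cpu_hours(busy_cpu_samples, interval_hours):
    """Running total of CPU-hours from busy-CPU counts sampled every interval_hours."""
    totals, running = [], 0.0
    for busy in busy_cpu_samples:
        running += busy * interval_hours
        totals.append(running)
    return totals

def cpu_hours_by_week(timed_samples, interval_hours):
    """Sum CPU-hours per ISO (year, week) from (datetime, busy_cpus) samples."""
    weekly = Counter()
    for when, busy in timed_samples:
        year, week, _ = when.isocalendar()
        weekly[(year, week)] += busy * interval_hours
    return dict(weekly)

# Hypothetical data: 100 busy CPUs, one sample per day for ten days
start = datetime(2003, 1, 6)
samples = [(start + timedelta(days=d), 100) for d in range(10)]
print(accumulated_cpu_hours([busy for _, busy in samples], 24)[-1])  # 24000.0
print(cpu_hours_by_week(samples, 24))  # {(2003, 2): 16800, (2003, 3): 7200}
```

Grouping by ISO week keeps weeks that straddle a month boundary intact, which suits a "by week" plot.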

17

Tier-1 and -2 Centres

- Estimated resources at start of GridPP2 (Sept. 2004)
  - Tier-1: 1000 CPUs, 500 TB
  - Tier-2: 6000 CPUs, 500 TB
- Shared distributed resources required to meet experiment requirements
- Connected by network and grid
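Adding the two estimates shows the scale of the shared resource and how much of the CPU sits in the distributed Tier-2 centres. This sketch uses only the figures on the slide.

```python
# Estimated resources at the start of GridPP2, as given on the slide
resources = {
    "Tier-1": {"cpus": 1000, "disk_tb": 500},
    "Tier-2": {"cpus": 6000, "disk_tb": 500},
}

total_cpus = sum(r["cpus"] for r in resources.values())
total_disk_tb = sum(r["disk_tb"] for r in resources.values())
tier2_cpu_share = resources["Tier-2"]["cpus"] / total_cpus

print(total_cpus, total_disk_tb, f"{tier2_cpu_share:.0%}")  # 7000 1000 86%
```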

18

Testbed to Production

- Dynamic Grid Optimisation, e.g. OptorSim

- Automatic data replication to improve data access
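Automatic replication means a site decides for itself whether a remotely read file is worth copying locally. A minimal sketch of one such rule follows. It replicates into free space immediately. When the site is full, it replaces the least-accessed local file only if the new file has now been read more often. This least-frequently-used rule is a simplified stand-in chosen for illustration; it does not reproduce OptorSim's economic model. The class name and file names are hypothetical.

```python
from collections import Counter

class ReplicatingSite:
    """Toy storage element holding up to `capacity` files, with a least-frequently-used rule."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.local = set()
        self.accesses = Counter()

    def access(self, lfn):
        """Record one read of `lfn`; return True if it was served from a local replica."""
        self.accesses[lfn] += 1
        if lfn in self.local:
            return True
        if len(self.local) < self.capacity:
            self.local.add(lfn)  # free space: replicate on first remote read
        else:
            # Least valuable local file = fewest recorded accesses (ties broken by name)
            victim = min(self.local, key=lambda f: (self.accesses[f], f))
            if self.accesses[lfn] > self.accesses[victim]:
                self.local.remove(victim)
                self.local.add(lfn)
        return False

site = ReplicatingSite(capacity=1)
print([site.access(f) for f in ["a", "a", "b", "b", "b", "b"]])
# [False, True, False, False, False, True]
print(site.local)  # {'b'}
```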

19

- 50/50 Edin/GU funding model, funded by SHEFC
- Compute-intensive jobs performed at GU
- Data-intensive jobs performed at Edin
- Leading R&D in Grid Data Management in UK
- Open policy on usage and target shares
- Open monitoring system
- Meeting real requirements of applications, currently HEP (Experiment and Theory), Bioinformatics, Computing Science
- Open source research (all code)
- Open source systems (IBM Linux-based system)
- Part of a worldwide grid infrastructure through GridPP
- GridPP Project (17m over three years → Sep 04)
- Dedicated people actively developing a Grid, all with personal certificates
- Using the largest UK grid testbed (16 sites and more than 100 servers)
- Deployed within EU-wide programme
- Linked to Worldwide Grid testbeds
- LHC Grid Deployment Programme defined
- First international testbed in July
- Active Tier-1/A Production Centre already meeting international requirements
- Latent Tier-2 resources being monitored
- ScotGRID recognised as leading developments
- Significant middleware development programme
- Importance of Grid Data Management
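The division of work in the 50/50 model sends compute-intensive jobs to Glasgow and data-intensive jobs to Edinburgh. It can be expressed as a one-line routing rule. The sketch below classifies a job by how much input data it reads per CPU hour. Both the metric and the threshold of 1 GB per CPU hour are hypothetical illustrations, not ScotGRID policy values.

```python
# Hypothetical threshold: jobs reading more than this many GB of input per CPU hour
# are treated as data-intensive. Not a ScotGRID policy value.
DATA_INTENSIVE_GB_PER_CPU_HOUR = 1.0

def route(input_gb, cpu_hours):
    """Send data-intensive jobs to Edinburgh and compute-intensive jobs to Glasgow."""
    ratio = input_gb / cpu_hours if cpu_hours > 0 else float("inf")
    return "Edinburgh" if ratio > DATA_INTENSIVE_GB_PER_CPU_HOUR else "Glasgow"

print(route(input_gb=2, cpu_hours=48))    # Glasgow (simulation-style job)
print(route(input_gb=200, cpu_hours=10))  # Edinburgh (analysis over a large dataset)
```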

20

Summary

Hardware

Middleware

Applications

- Universities' strategic investment
- Software prototyping (Grid Data Management) and stress-testing (Applications)
- Long-term commitment (LHC era)
- Partnership of Bioinformatics, Computing Science, Edinburgh, Glasgow, IBM, Particle Physics
- Working locally as part of National, European and International Grid development
- Middleware testbed linked to real applications via ScotGrid
- Development/deployment

ScotGRID