PowerPoint Presentation


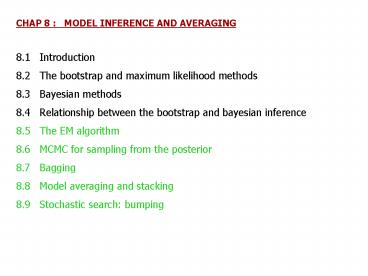

CHAP 8 MODEL INFERENCE AND AVERAGING

8.1 Introduction
8.2 The bootstrap and maximum likelihood methods
8.3 Bayesian methods
8.4 Relationship between the bootstrap and Bayesian inference
8.5 The EM algorithm
8.6 MCMC for sampling from the posterior
8.7 Bagging
8.8 Model averaging and stacking
8.9 Stochastic search: bumping

8.5 The EM algorithm

The EM algorithm [1] is a popular tool for simplifying difficult maximum-likelihood problems.

Y is an observed random variable, modeled by one of the densities $g(y\mid\theta)$, $\theta\in\Omega$. We want to estimate θ by maximum likelihood, i.e. find $\hat\theta = \arg\max_{\theta\in\Omega}\log g(y\mid\theta)$. This can be difficult:
- the likelihood equation $\partial\log g(y\mid\theta)/\partial\theta = 0$ is often transcendental (⇒ approximations or numerical solutions);
- the constraint $\theta\in\Omega$ may complicate the derivative condition at the boundaries of Ω.

[1] A. Dempster, N. Laird, and D. Rubin. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society, Series B, 39(1), pp. 1-38, 1977.

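8.5 The EM algorithm: maximum likelihood from incomplete data

Imagine 2 sample spaces, $\mathcal X$ and $\mathcal Y$, and a mapping $h:\mathcal X\to\mathcal Y$.

[Figure: the mapping h sends the complete data $x\in\mathcal X$, with density $f(x\mid\theta)$, $\theta\in\Omega$, to the incomplete data $y\in\mathcal Y$, with density $g(y\mid\theta)$, $\theta\in\Omega$; the observed y corresponds to the subset $\mathcal X(y)\subset\mathcal X$.]

The occurrence of $x\in\mathcal X$ implies the occurrence of $y = h(x)\in\mathcal Y$, but only y is actually observed: we only know that $x\in\mathcal X(y) = \{x : h(x) = y\}$. The sampling densities g and f are related by

$$g(y\mid\theta) = \int_{\mathcal X(y)} f(x\mid\theta)\,dx.$$

In a given problem $\mathcal Y$ is fixed, but $\mathcal X$ can be chosen.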

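8.5 The EM algorithm

Idea: since $f(x\mid\theta)$ cannot be evaluated (x is not observed), define the expected complete-data log-likelihood given the incomplete data y and the current parameter estimate θ′:

$$Q(\theta\mid\theta') = E[\log f(x\mid\theta)\mid y,\theta'].$$

- The EM algorithm
  - Choose $\mathcal X$ to simplify the EM steps.
  - Let $\theta^{(0)}\in\Omega$ be any first estimate of θ. Repeat the next 2 steps until convergence:
    - Expectation step: compute $Q(\theta\mid\theta^{(p)}) = E[\log f(x\mid\theta)\mid y,\theta^{(p)}]$.
    - Maximization step: $\theta^{(p+1)} = \arg\max_{\theta\in\Omega} Q(\theta\mid\theta^{(p)})$.
- Advantages of the EM algorithm
  - The constraint $\theta\in\Omega$ can be incorporated into the M-step.
  - The likelihood $g(y\mid\theta^{(p)})$ of the successive estimates is nondecreasing in p.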

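8.5 The EM algorithm: why does it work?

The conditional density of X given Y = y is $k(x\mid y,\theta) = f(x\mid\theta)/g(y\mid\theta)$. So, on $\mathcal X(y)$:

$$g(y\mid\theta) = \frac{f(x\mid\theta)}{k(x\mid y,\theta)} \;\Rightarrow\; \log g(y\mid\theta) = \log f(x\mid\theta) - \log k(x\mid y,\theta)$$
$$\Rightarrow\; E[\log g(y\mid\theta)\mid y,\theta^{(p)}] = E[\log f(x\mid\theta)\mid y,\theta^{(p)}] - E[\log k(x\mid y,\theta)\mid y,\theta^{(p)}]$$
$$\Rightarrow\; \log g(y\mid\theta) = Q(\theta\mid\theta^{(p)}) - E[\log k(x\mid y,\theta)\mid y,\theta^{(p)}],$$

since $\log g(y\mid\theta)$ does not depend on x. The increase in likelihood between iterations is

$$\begin{aligned}
\log g(y\mid\theta^{(p+1)}) - \log g(y\mid\theta^{(p)})
&= Q(\theta^{(p+1)}\mid\theta^{(p)}) - Q(\theta^{(p)}\mid\theta^{(p)}) + E[\log k(x\mid y,\theta^{(p)}) - \log k(x\mid y,\theta^{(p+1)})\mid y,\theta^{(p)}]\\
&= Q(\theta^{(p+1)}\mid\theta^{(p)}) - Q(\theta^{(p)}\mid\theta^{(p)}) - E\Big[\log\frac{k(x\mid y,\theta^{(p+1)})}{k(x\mid y,\theta^{(p)})}\,\Big|\,y,\theta^{(p)}\Big].
\end{aligned}$$

Both terms are nonnegative:
- by Jensen's inequality (log is concave),
$$-E\Big[\log\frac{k(x\mid y,\theta^{(p+1)})}{k(x\mid y,\theta^{(p)})}\,\Big|\,y,\theta^{(p)}\Big] \ge -\log E\Big[\frac{k(x\mid y,\theta^{(p+1)})}{k(x\mid y,\theta^{(p)})}\,\Big|\,y,\theta^{(p)}\Big] = -\log\int_{\mathcal X(y)} k(x\mid y,\theta^{(p+1)})\,dx = -\log 1 = 0;$$
- $Q(\theta^{(p+1)}\mid\theta^{(p)}) - Q(\theta^{(p)}\mid\theta^{(p)}) \ge 0$ because $\theta^{(p+1)}$ maximizes $Q(\cdot\mid\theta^{(p)})$.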

8.5 The EM algorithm: a two-component Gaussian mixture

[Figure: presentation of the data]

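8.5 The EM algorithm: a two-component Gaussian mixture

Model: a mixture of 2 normal distributions,
$$Y_1\sim N(\mu_1,\sigma_1^2),\qquad Y_2\sim N(\mu_2,\sigma_2^2),\qquad Y = (1-\Delta)\,Y_1 + \Delta\,Y_2,$$
where $\Delta\in\{0,1\}$ with $\Pr(\Delta=1)=\pi$.

Density: $g_Y(y) = (1-\pi)\,\phi_{\theta_1}(y) + \pi\,\phi_{\theta_2}(y)$, where $\theta = (\mu_1,\sigma_1^2,\mu_2,\sigma_2^2,\pi)$ and $\phi_{\theta_i}(x)$ is the pdf of a normal distribution with parameters $\theta_i = (\mu_i,\sigma_i^2)$.

For data $Z = \{y_1,\dots,y_N\}$:
- Likelihood function: $L(\theta; Z) = \prod_{i=1}^{N}\big[(1-\pi)\,\phi_{\theta_1}(y_i) + \pi\,\phi_{\theta_2}(y_i)\big]$
- Log-likelihood function: $\ell(\theta; Z) = \sum_{i=1}^{N}\log\big[(1-\pi)\,\phi_{\theta_1}(y_i) + \pi\,\phi_{\theta_2}(y_i)\big]$

⇒ Direct maximization is difficult, because of the sum inside the logarithm.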

8.5 The EM algorithm: a two-component Gaussian mixture

The EM algorithm
- Choose $\mathcal X$ to simplify the EM steps: consider latent variables $\Delta_i$ taking the value 0 or 1 according to the normal distribution from which $y_i$ is generated ($\Delta_i = 1$ if $y_i$ comes from $Y_2$).
- Let $\theta^{(0)}$ be any first estimate of θ: for $\mu_1$ and $\mu_2$ choose 2 of the $y_i$ at random; for $\sigma_1^2$ and $\sigma_2^2$ choose the overall sample variance; and for π choose 1/2.

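8.5 The EM algorithm: a two-component Gaussian mixture

The EM algorithm: repeat the next 2 steps until convergence, where $\hat\theta$ denotes the current estimate $\theta^{(p)}$.
- Expectation step: estimate the responsibility of the 2nd normal distribution for the ith observation, $\hat\gamma_i(\theta^{(p)}) = E(\Delta_i\mid\theta^{(p)},Z) = \Pr(\Delta_i=1\mid\theta^{(p)},Z)$, by
$$\hat\gamma_i = \frac{\hat\pi\,\phi_{\hat\theta_2}(y_i)}{(1-\hat\pi)\,\phi_{\hat\theta_1}(y_i) + \hat\pi\,\phi_{\hat\theta_2}(y_i)},\qquad i=1,\dots,N.$$
- Maximization step: compute the weighted means and variances, as well as the mixing probability:
$$\hat\mu_1 = \frac{\sum_i (1-\hat\gamma_i)\,y_i}{\sum_i (1-\hat\gamma_i)},\qquad \hat\sigma_1^2 = \frac{\sum_i (1-\hat\gamma_i)(y_i-\hat\mu_1)^2}{\sum_i (1-\hat\gamma_i)},$$
$$\hat\mu_2 = \frac{\sum_i \hat\gamma_i\,y_i}{\sum_i \hat\gamma_i},\qquad \hat\sigma_2^2 = \frac{\sum_i \hat\gamma_i(y_i-\hat\mu_2)^2}{\sum_i \hat\gamma_i},\qquad \hat\pi = \frac1N\sum_{i=1}^N\hat\gamma_i.$$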
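The two steps above can be sketched in plain Python as follows. This is a minimal illustration, assuming one-dimensional data, the initialization described on the previous slide, and a fixed number of iterations in place of a convergence test; the function names are hypothetical. It also records the observed-data log-likelihood after every iteration, which, by the "why does it work" argument, should never decrease.

```python
import math
import random


def normal_pdf(y, mu, var):
    """Density of N(mu, var) at y."""
    return math.exp(-((y - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def log_likelihood(ys, mu1, var1, mu2, var2, pi):
    """Observed-data log-likelihood of the two-component mixture."""
    return sum(
        math.log((1 - pi) * normal_pdf(y, mu1, var1) + pi * normal_pdf(y, mu2, var2))
        for y in ys
    )


def em_two_gaussians(ys, n_iter=100, seed=0):
    rng = random.Random(seed)
    n = len(ys)
    # Initialization: two observations at random for the means,
    # the overall sample variance for both variances, and pi = 1/2.
    mu1, mu2 = rng.sample(ys, 2)
    mean = sum(ys) / n
    var1 = var2 = sum((y - mean) ** 2 for y in ys) / n
    pi = 0.5
    history = [log_likelihood(ys, mu1, var1, mu2, var2, pi)]
    for _ in range(n_iter):
        # E-step: responsibility of the 2nd component for each observation.
        gamma = []
        for y in ys:
            p1 = (1 - pi) * normal_pdf(y, mu1, var1)
            p2 = pi * normal_pdf(y, mu2, var2)
            gamma.append(p2 / (p1 + p2))
        # M-step: weighted means, weighted variances and mixing probability.
        w2 = sum(gamma)
        w1 = n - w2
        mu1 = sum((1 - g) * y for g, y in zip(gamma, ys)) / w1
        mu2 = sum(g * y for g, y in zip(gamma, ys)) / w2
        var1 = sum((1 - g) * (y - mu1) ** 2 for g, y in zip(gamma, ys)) / w1
        var2 = sum(g * (y - mu2) ** 2 for g, y in zip(gamma, ys)) / w2
        pi = w2 / n
        history.append(log_likelihood(ys, mu1, var1, mu2, var2, pi))
    return (mu1, var1, mu2, var2, pi), history
```

Because the labels of the two components are arbitrary, the estimated means can come out in either order, depending on which observations were drawn for the initialization.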

8.5 The EM algorithm: a two-component Gaussian mixture

[Figure: results of the EM algorithm]

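8.5 The EM algorithm: a multinomial example (Rao, 1973)

There are n = 197 animals classified into one of 4 categories: $(y_1,y_2,y_3,y_4) = (125,18,20,34)$. In fact, $y_1$ is divided into 2 categories $x_1$ and $x_2$ that are not observed. The complete data are $x = (x_1,x_2,y_2,y_3,y_4)$ with $x_1+x_2=y_1$. x is assumed to follow a multinomial distribution whose parameters are the probabilities associated with each category, $(1/2,\ \theta/4,\ (1-\theta)/4,\ (1-\theta)/4,\ \theta/4)$. We want to estimate θ by maximum likelihood. The likelihood function is

$$f(x\mid\theta) = \frac{n!}{x_1!\,x_2!\,y_2!\,y_3!\,y_4!}\Big(\frac12\Big)^{x_1}\Big(\frac\theta4\Big)^{x_2}\Big(\frac{1-\theta}4\Big)^{y_2+y_3}\Big(\frac\theta4\Big)^{y_4}$$

and the log-likelihood function is

$$\log f(x\mid\theta) = (x_2+y_4)\log\theta + (y_2+y_3)\log(1-\theta) + \text{const}.$$

This function cannot be maximized directly because $x_1$ and $x_2$ are unknown ⇒ EM algorithm!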

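8.5 The EM algorithm: a multinomial example (Rao, 1973)

The EM algorithm: let $\theta^{(0)} = 0.5$ be a first estimate of θ. Repeat the next 2 steps until convergence:
- Expectation step: estimate $E(x_1\mid y_1,\theta^{(p)})$ and $E(x_2\mid y_1,\theta^{(p)})$ by
$$\hat x_1(\theta^{(p)}) = y_1\,\frac{1/2}{1/2+\theta^{(p)}/4},\qquad \hat x_2(\theta^{(p)}) = y_1\,\frac{\theta^{(p)}/4}{1/2+\theta^{(p)}/4}.$$
- Maximization step: setting the derivative of the expected log-likelihood to zero gives
$$\theta^{(p+1)} = \frac{\hat x_2 + y_4}{\hat x_2 + y_2 + y_3 + y_4}.$$

⇒ The EM algorithm converges in 4 iterations to $\hat\theta \approx 0.6268$, and we estimate $E(x_1\mid y_1)$ and $E(x_2\mid y_1)$ by 95.2 and 29.8.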
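The E- and M-steps above are short enough to run by hand; a minimal sketch in plain Python, where the function names are hypothetical and the default arguments are the counts and starting value from the slide. With the defaults it performs the 4 iterations mentioned above and then reports the expected hidden counts at the final estimate.

```python
def rao_e_step(theta, y1=125):
    """Split y1 between the hidden cells x1 (prob 1/2) and x2 (prob theta/4)."""
    total = 0.5 + theta / 4
    return y1 * 0.5 / total, y1 * (theta / 4) / total


def rao_em(y=(125, 18, 20, 34), theta=0.5, n_iter=4):
    y1, y2, y3, y4 = y
    for _ in range(n_iter):
        # E-step: expected hidden counts given y1 and the current theta.
        _, x2 = rao_e_step(theta, y1)
        # M-step: maximize (x2 + y4) log(theta) + (y2 + y3) log(1 - theta).
        theta = (x2 + y4) / (x2 + y2 + y3 + y4)
    x1, x2 = rao_e_step(theta, y1)
    return theta, x1, x2


theta_hat, x1_hat, x2_hat = rao_em()
```

As a sanity check, the fixed point of the iteration solves the observed-data likelihood equation $125/(2+\theta) - 38/(1-\theta) + 34/\theta = 0$, i.e. $197\theta^2 - 15\theta - 68 = 0$.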

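8.5 The EM algorithm as a maximization-maximization procedure

Consider the function $F(\theta',\tilde P) = E_{\tilde P}[\log f(x\mid\theta')] - E_{\tilde P}[\log\tilde P(x)]$, where $\tilde P$ is any distribution over the latent data. The E-step is equivalent to maximizing F over $\tilde P$, the distribution of the latent data: for fixed θ′ the maximum is attained at $\tilde P(x) = k(x\mid y,\theta')$, where $F = \log g(y\mid\theta')$. The M-step maximizes F over the parameters θ′ of the likelihood.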

8.6 MCMC for sampling from the posterior

MCMC: Markov chain Monte Carlo.

How can we draw samples from a posterior density? More generally, given K random variables $U_1,\dots,U_K$, how can we draw samples from their joint distribution?

⇒ Gibbs sampling

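Gibbs sampling

1. Take some initial values $U_k^{(0)}$, $k = 1,\dots,K$.
2. Repeat for t = 1, 2, …:
   - for k = 1, 2, …, K, generate $U_k^{(t)}$ from $\Pr(U_k^{(t)}\mid U_1^{(t)},\dots,U_{k-1}^{(t)},U_{k+1}^{(t-1)},\dots,U_K^{(t-1)})$.
3. Continue step 2 until the joint distribution of $(U_1^{(t)},\dots,U_K^{(t)})$ no longer changes.

We obtain a Markov chain whose stationary distribution is the true joint distribution.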
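A minimal sketch of these steps for K = 2, assuming a target that is not on the slides: a standard bivariate normal with correlation ρ, whose full conditionals are $U_1\mid U_2=u_2\sim N(\rho u_2, 1-\rho^2)$ and symmetrically for $U_2$. The function name is hypothetical, and a fixed number of sweeps with a discarded burn-in stands in for the "until the distribution no longer changes" rule.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples=20000, burn_in=500, seed=0):
    """Gibbs sampler for (U1, U2) standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    sd = math.sqrt(1 - rho ** 2)  # standard deviation of each full conditional
    u1, u2 = 0.0, 0.0             # step 1: initial values
    samples = []
    for t in range(burn_in + n_samples):
        # Step 2: draw each coordinate from its full conditional,
        # using the most recent value of the other coordinate.
        u1 = rng.gauss(rho * u2, sd)
        u2 = rng.gauss(rho * u1, sd)
        if t >= burn_in:  # keep draws only after the burn-in period
            samples.append((u1, u2))
    return samples
```

Successive draws are correlated, so the kept samples are less informative than the same number of independent draws would be.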

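Applying Gibbs sampling to a mixture

The variances $\sigma_1^2,\sigma_2^2$ and the mixing proportion π are held fixed, so only the means are unknown.

1. Take some initial values $\theta^{(0)} = (\mu_1^{(0)},\mu_2^{(0)})$.
2. Repeat for t = 1, 2, …:
   - (a) For i = 1, 2, …, N, generate $\Delta_i^{(t)}\in\{0,1\}$ with $\Pr(\Delta_i^{(t)}=1) = \hat\gamma_i(\theta^{(t-1)})$, the responsibility computed in the E-step.
   - (b) Set $\hat\mu_1 = \frac{\sum_i(1-\Delta_i^{(t)})\,y_i}{\sum_i(1-\Delta_i^{(t)})}$ and $\hat\mu_2 = \frac{\sum_i\Delta_i^{(t)}\,y_i}{\sum_i\Delta_i^{(t)}}$, and generate $\mu_1^{(t)}\sim N(\hat\mu_1,\hat\sigma_1^2)$ and $\mu_2^{(t)}\sim N(\hat\mu_2,\hat\sigma_2^2)$.
3. Continue step 2 until the joint distribution of $(\Delta^{(t)},\mu_1^{(t)},\mu_2^{(t)})$ no longer changes.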
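A minimal sketch of steps (a) and (b) in plain Python, assuming one-dimensional data and known, fixed values for the variances and π passed in by the caller; the function name and the rule of keeping the previous mean when a class happens to be empty are illustrative choices, not part of the slide. It returns the whole trace of $(\mu_1^{(t)},\mu_2^{(t)})$ rather than testing for convergence.

```python
import math
import random


def normal_pdf(y, mu, var):
    """Density of N(mu, var) at y."""
    return math.exp(-((y - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def gibbs_mixture_means(ys, var1, var2, pi, n_iter=500, seed=0):
    """Gibbs sampling for the two means; var1, var2 and pi are held fixed."""
    rng = random.Random(seed)
    mu1, mu2 = rng.sample(ys, 2)  # step 1: initial values
    trace = []
    for _ in range(n_iter):
        # (a) draw each latent Delta_i with Pr(Delta_i = 1) = responsibility.
        deltas = []
        for y in ys:
            p1 = (1 - pi) * normal_pdf(y, mu1, var1)
            p2 = pi * normal_pdf(y, mu2, var2)
            deltas.append(1 if rng.random() < p2 / (p1 + p2) else 0)
        n2 = sum(deltas)
        n1 = len(ys) - n2
        # (b) class means given the Deltas (keep the old value if a class is empty),
        # then draw the new means from N(mu_hat, sigma^2) as in step (b).
        mu1_hat = sum(y for y, d in zip(ys, deltas) if d == 0) / n1 if n1 else mu1
        mu2_hat = sum(y for y, d in zip(ys, deltas) if d == 1) / n2 if n2 else mu2
        mu1 = rng.gauss(mu1_hat, math.sqrt(var1))
        mu2 = rng.gauss(mu2_hat, math.sqrt(var2))
        trace.append((mu1, mu2))
    return trace
```

Averaging the trace after discarding the first iterations gives a Monte Carlo estimate of the posterior means of $\mu_1$ and $\mu_2$.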

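Link between Gibbs sampling and the EM algorithm

Both methods rely on data augmentation: the latent variables are handled alongside the parameters.
- Latent variables: the EM algorithm computes the maximum-likelihood responsibilities; Gibbs sampling simulates the latent variables from their distribution given the current parameters.
- Parameters: the EM algorithm maximizes, which gives the maximum of the posterior; Gibbs sampling samples, i.e. it simulates from the conditional distribution.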

Mixture example

8.7 Bagging

Bagging exploits the connection between the bootstrap and the Bayesian approach to improve the estimate or prediction itself: the bootstrap mean is approximately a posterior average. Bagging averages the prediction over a collection of bootstrap samples, thereby reducing its variance.

BAGGING IN A REGRESSION PROBLEM
Data $Z = \{(x_1,y_1),(x_2,y_2),\dots,(x_N,y_N)\}$. For b = 1, 2, …, B:
- select a bootstrap sample $Z^{*b}$;
- fit the regression model and obtain the prediction $\hat f^{*b}(x)$ at input x.

The bagging estimate is
$$\hat f_{\text{bag}}(x) = \frac1B\sum_{b=1}^{B}\hat f^{*b}(x).$$
It is a Monte Carlo estimate of the true bagging estimate $E_{\hat{\mathcal P}}\hat f^{*}(x)$, where the bootstrap is done with $\hat{\mathcal P}$, the empirical distribution putting mass 1/N on each $(x_i,y_i)$. $\hat f_{\text{bag}}(x)\ne\hat f(x)$ only when $\hat f(x)$ is a nonlinear or adaptive function of the data.
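A minimal sketch of the bagging loop above, assuming a one-dimensional input. A least-squares regression stump (a single split) plays the role of a hypothetical nonlinear, adaptive base model, while `fit_mean`, which always predicts the sample mean, plays the role of a linear estimator for which bagging should change nothing beyond Monte Carlo error. All names are illustrative.

```python
import random


def fit_stump(xs, ys):
    """Least-squares regression stump: one split on x, a constant on each side."""
    pairs = sorted(zip(xs, ys))
    best = None
    for k in range(1, len(pairs)):
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # no threshold separates tied x values
        left = [y for _, y in pairs[:k]]
        right = [y for _, y in pairs[k:]]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[k - 1][0] + pairs[k][0]) / 2, ml, mr)
    if best is None:  # all x values equal: fall back to a constant fit
        m = sum(ys) / len(ys)
        return lambda x: m
    _, t, ml, mr = best
    return lambda x: ml if x <= t else mr


def fit_mean(xs, ys):
    """A linear estimator: always predicts the sample mean of y."""
    m = sum(ys) / len(ys)
    return lambda x: m


def bagged_prediction(xs, ys, x0, fit, B=200, seed=0):
    """Monte Carlo bagging estimate: average of B bootstrap fits evaluated at x0."""
    rng = random.Random(seed)
    n = len(xs)
    total = 0.0
    for _ in range(B):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample Z*b
        model = fit([xs[i] for i in idx], [ys[i] for i in idx])
        total += model(x0)
    return total / B
```

On step-shaped data, the single stump gives a hard 0/1 prediction next to the jump, while the bagged stumps give an intermediate value there: the bagged fit is a smoothed staircase rather than a single split.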

8.7 Bagging

BAGGING A REGRESSION TREE
Data $Z = \{(x_1,y_1),(x_2,y_2),\dots,(x_N,y_N)\}$. For b = 1, 2, …, B:
- select a bootstrap sample $Z^{*b}$;
- fit the regression tree and obtain the prediction $\hat f^{*b}(x)$ at input x.

The bagging estimate is $\hat f_{\text{bag}}(x) = \frac1B\sum_{b=1}^B\hat f^{*b}(x)$ (no longer a tree!).

BAGGING A CLASSIFICATION TREE
Data $Z = \{(x_1,y_1),(x_2,y_2),\dots,(x_N,y_N)\}$, where $y_i$ is one of the K classes of Y.
- 1st strategy: consider an underlying indicator-vector function $\hat f(x)$, with a single 1 and K−1 zeros, such that $\hat G(x) = \arg\max_k \hat f(x)$. The bagging estimate is $\hat G_{\text{bag}}(x) = \arg\max_k \hat f_{\text{bag}}(x)$, where $\hat f_{\text{bag}}(x)$ is a K-vector $(p_1,p_2,\dots,p_K)$ and $p_k$ is the proportion of trees predicting class k at x.
- 2nd strategy (lower variance): consider instead an underlying vector function $\hat f(x)$ that estimates the class probabilities at x. The bagging estimate is the average of those bootstrap probabilities.
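The two strategies can disagree at the same x. A minimal sketch, assuming each bootstrap tree has already produced a vector of class-probability estimates at x (the three vectors below are hypothetical values, not tree outputs from the slides):

```python
def argmax(v):
    """Index of the largest entry (the first one in case of ties)."""
    return max(range(len(v)), key=v.__getitem__)


def vote_proportions(prob_vectors):
    """1st strategy: each tree votes for its top class; return (p_1, ..., p_K)."""
    K = len(prob_vectors[0])
    counts = [0] * K
    for p in prob_vectors:
        counts[argmax(p)] += 1
    return [c / len(prob_vectors) for c in counts]


def averaged_probabilities(prob_vectors):
    """2nd strategy: average the class-probability estimates of the trees."""
    B = len(prob_vectors)
    return [sum(col) / B for col in zip(*prob_vectors)]


trees = [[0.6, 0.4], [0.6, 0.4], [0.0, 1.0]]  # hypothetical outputs of B = 3 trees at x
print(argmax(vote_proportions(trees)), argmax(averaged_probabilities(trees)))  # prints: 0 1
```

Voting discards how confident each tree is, so two lukewarm votes for class 0 outweigh one confident vote for class 1; averaging the probabilities keeps that information.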

8.7 Bagging: example of trees with simulated data

Simulation: a sample of size N = 30 was generated, with 2 classes (Y = 0 or 1) and p = 5 features, each having a standard Gaussian distribution, with pairwise correlation 0.95. Y was generated according to $\Pr(Y=1\mid x_1\le 0.5) = 0.2$ and $\Pr(Y=1\mid x_1>0.5) = 0.8$. A test sample of size 2000 was also generated from the same population. A classification tree was fit to the training sample and to each of 200 bootstrap samples, using both strategies.

Results: the trees have high variance due to the correlation between the predictors. Bagging succeeds in smoothing out this variance: it reduces variance and leaves bias unchanged.
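The data-generating process above can be sketched as follows. The slide does not say how the correlated features were produced; this sketch assumes a single shared Gaussian factor, $x_j = \sqrt{\rho}\,z_0 + \sqrt{1-\rho}\,z_j$, which gives unit variances and pairwise correlation ρ. The function name and seeds are hypothetical, and the trees themselves are not included.

```python
import math
import random


def simulate(n, p=5, rho=0.95, seed=0):
    """Draw (X, Y) as in the simulation described above.

    Each feature is standard Gaussian; a shared factor z0 gives every pair of
    features correlation rho. Y depends only on the first feature x1.
    """
    rng = random.Random(seed)
    a, b = math.sqrt(rho), math.sqrt(1 - rho)
    X, Y = [], []
    for _ in range(n):
        z0 = rng.gauss(0.0, 1.0)
        x = [a * z0 + b * rng.gauss(0.0, 1.0) for _ in range(p)]
        p1 = 0.2 if x[0] <= 0.5 else 0.8  # Pr(Y = 1 | x1)
        X.append(x)
        Y.append(1 if rng.random() < p1 else 0)
    return X, Y


X_train, Y_train = simulate(30, seed=1)    # training sample, N = 30
X_test, Y_test = simulate(2000, seed=2)    # test sample of size 2000
```

Because all five features are nearly copies of one another, a tree can split on any of them almost equally well, which is one source of the high variance mentioned above.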

8.7 Bagging: example of trees with simulated data

[Figure: example of trees with simulated data]

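8.7 Bagging: example of trees with simulated data

Why does it work?
- Extra error comes from the variance of $\hat f^*(x)$ around its mean $f_{\text{ag}}(x) = E_{\mathcal P}\hat f^*(x)$, where x is fixed and $\hat f^*(x)$ is fit to training samples drawn from the population $\mathcal P$:
$$E_{\mathcal P}[Y-\hat f^*(x)]^2 = E_{\mathcal P}[Y-f_{\text{ag}}(x)]^2 + E_{\mathcal P}[\hat f^*(x)-f_{\text{ag}}(x)]^2 \ge E_{\mathcal P}[Y-f_{\text{ag}}(x)]^2.$$
- True population aggregation never increases mean squared error. This suggests that bagging (drawing samples from the training data) will often decrease mean squared error.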

8.7 Bagging: example of trees with simulated data

Why does it not work under 0-1 loss?
- Because bias and variance are not additive under 0-1 loss: bagging a good classifier can make it better, but bagging a bad classifier can make it worse!
- Simulation:
  - Suppose Y = 1 for all x.
  - We have a classifier $\hat G(x)$ that predicts Y = 1 with probability 0.4 and Y = 0 with probability 0.6, for all x.
  - The misclassification error is 0.6 for $\hat G(x)$ and 1 for the bagged classifier, whose majority vote predicts Y = 0 for every x.
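The toy example above can be checked exactly, under a simplifying assumption that is not on the slide: the bagged classifier is modeled as a majority vote over B independent copies of $\hat G(x)$ (real bootstrap trees are not independent). The error is then a binomial tail probability; the function name is hypothetical.

```python
from math import comb


def majority_vote_error(p_correct, B):
    """Error of a majority vote over B independent votes, each correct with prob p_correct.

    Here Y = 1 everywhere, so a vote is correct when it predicts 1.
    B is assumed odd so that the vote cannot be tied.
    """
    # The majority vote is right when more than B/2 of the B votes predict 1.
    p_right = sum(
        comb(B, k) * p_correct ** k * (1 - p_correct) ** (B - k)
        for k in range(B // 2 + 1, B + 1)
    )
    return 1 - p_right


for B in (1, 25, 201):
    print(B, round(majority_vote_error(0.4, B), 3))
```

With `p_correct = 0.4` the error climbs from 0.6 toward 1 as B grows, while with `p_correct = 0.6` it falls toward 0: the same averaging makes a bad classifier worse and a good one better.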

8.7 Bagging: example of trees with simulated data

- Bagging does not help in examples where a greater enlargement of the model class is needed. Solution: boosting (Chapter 10)!

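8.8 Model averaging and stacking

Let ζ be a quantity of interest (for example, a prediction f(x) at some fixed value of x), and let $\mathcal M_m$, m = 1, …, M, be a set of candidate models for the training set Z.

Posterior distribution:
$$\Pr(\zeta\mid Z) = \sum_{m=1}^M \Pr(\zeta\mid\mathcal M_m, Z)\,\Pr(\mathcal M_m\mid Z).$$

Bayesian prediction, a weighted average of the individual predictions:
$$E(\zeta\mid Z) = \sum_{m=1}^M E(\zeta\mid\mathcal M_m, Z)\,\Pr(\mathcal M_m\mid Z).$$

Different strategies, for example:
1. Committee methods: an unweighted average of the predictions of each model.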

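2. Use the BIC criterion: if all the models come from the same parametric model with different parameter values, BIC gives each model a weight that depends on the quality of its fit and on its number of parameters.

3. Use the full Bayesian approach, with priors: if each model $\mathcal M_m$ has parameters $\theta_m$,
$$\Pr(\mathcal M_m\mid Z)\propto\Pr(\mathcal M_m)\,\Pr(Z\mid\mathcal M_m)\propto\Pr(\mathcal M_m)\int\Pr(Z\mid\theta_m,\mathcal M_m)\,\Pr(\theta_m\mid\mathcal M_m)\,d\theta_m.$$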
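Strategy 2 can be sketched in a few lines, assuming the usual definition $\text{BIC}_m = -2\,\text{loglik}_m + (\log N)\,d_m$ (with $d_m$ the number of parameters) and the standard approximation $\Pr(\mathcal M_m\mid Z)\approx e^{-\text{BIC}_m/2}/\sum_l e^{-\text{BIC}_l/2}$. The function names and the numbers in the example are hypothetical.

```python
import math


def bic(loglik, d, n):
    """BIC = -2 * log-likelihood + (log N) * (number of parameters)."""
    return -2.0 * loglik + math.log(n) * d


def bic_weights(logliks, dims, n):
    """Approximate posterior model probabilities exp(-BIC_m / 2) / sum_l exp(-BIC_l / 2)."""
    bics = [bic(ll, d, n) for ll, d in zip(logliks, dims)]
    best = min(bics)
    # Shift by the smallest BIC before exponentiating to avoid underflow.
    w = [math.exp(-(b - best) / 2.0) for b in bics]
    total = sum(w)
    return [x / total for x in w]


def averaged_prediction(predictions, weights):
    """Weighted average of the individual model predictions."""
    return sum(w * f for w, f in zip(weights, predictions))


# Two hypothetical models with the same fit: the one with fewer parameters gets more weight.
weights = bic_weights([-100.0, -100.0], [2, 5], n=50)
```

Shifting by the smallest BIC does not change the normalized weights but keeps the exponentials in a safe range when log-likelihoods are large in magnitude.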

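Given predictions $\hat f_1(x),\dots,\hat f_M(x)$, look for weights w such that
$$\hat w = \arg\min_w E_{\mathcal P}\Big[Y - \sum_{m=1}^M w_m\hat f_m(x)\Big]^2.$$

Solution:
$$\hat w = E_{\mathcal P}\big[\hat F(x)\hat F(x)^T\big]^{-1}E_{\mathcal P}\big[\hat F(x)\,Y\big],$$
where $\hat F(x) = [\hat f_1(x),\dots,\hat f_M(x)]^T$.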

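The full regression has smaller error than each single model:
$$E_{\mathcal P}\Big[Y-\sum_{m=1}^M\hat w_m\hat f_m(x)\Big]^2 \le E_{\mathcal P}\big[Y-\hat f_m(x)\big]^2\quad\text{for all } m.$$

How can we account for model complexity?

Stacked generalization, or stacking:
$$\hat w^{\text{st}} = \arg\min_w\sum_{i=1}^N\Big[y_i-\sum_{m=1}^M w_m\hat f_m^{-i}(x_i)\Big]^2,$$
where $\hat f_m^{-i}(x)$ is the prediction at x of model m fit without observation i.

Final prediction: $\sum_m\hat w_m^{\text{st}}\hat f_m(x)$.

Link with cross-validation: if w is restricted to vectors with one weight equal to 1 and the others equal to 0, stacking selects the model with the smallest leave-one-out cross-validation error.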
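A minimal stacking sketch with exactly two hypothetical models, a constant (the sample mean) and a least-squares line, on one-dimensional data. The leave-one-out predictions $\hat f_m^{-i}(x_i)$ are obtained by refitting without observation i, and the unconstrained least-squares weights come from the 2×2 normal equations solved by Cramer's rule; with more models one would use a general least-squares solver instead. All names are illustrative.

```python
def loo_mean(xs, ys, i):
    """Model 1 (hypothetical): sample mean of y, fit without observation i."""
    return (sum(ys) - ys[i]) / (len(ys) - 1)


def loo_line(xs, ys, i):
    """Model 2 (hypothetical): least-squares line fit without observation i, evaluated at x_i."""
    x = xs[:i] + xs[i + 1:]
    y = ys[:i] + ys[i + 1:]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return my + (sxy / sxx) * (xs[i] - mx)


def stacking_weights(xs, ys, loo_models):
    """Least-squares weights on leave-one-out predictions (exactly two models)."""
    F = [[model(xs, ys, i) for model in loo_models] for i in range(len(xs))]
    # Normal equations [[a, b], [b, d]] w = [c0, c1], solved by Cramer's rule.
    a = sum(f[0] * f[0] for f in F)
    b = sum(f[0] * f[1] for f in F)
    d = sum(f[1] * f[1] for f in F)
    c0 = sum(f[0] * y for f, y in zip(F, ys))
    c1 = sum(f[1] * y for f, y in zip(F, ys))
    det = a * d - b * b
    return [(d * c0 - b * c1) / det, (a * c1 - b * c0) / det]
```

On data lying exactly on a line, the leave-one-out line reproduces every $y_i$, so stacking should put weight 1 on the line and 0 on the constant model. The weights here are not constrained to be nonnegative or to sum to 1.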

- 8.9 Stochastic search: bumping

- Bumping is a technique for finding a better single model, using bootstrap sampling to move randomly through model space.
- Principle:
  - Data $Z = \{(x_1,y_1),(x_2,y_2),\dots,(x_N,y_N)\}$.
  - For b = 1, 2, …, B:
    - select a bootstrap sample $Z^{*b}$;
    - fit the model and obtain the prediction $\hat f^{*b}(x)$ at input x;
    - compute the prediction error averaged over the original training set.
  - Select the model obtained from bootstrap sample $\hat b$, where $\hat b = \arg\min_b\sum_{i=1}^N\big[y_i-\hat f^{*b}(x_i)\big]^2$ (the one that produces the smallest prediction error).
- Bumping is useful for problems where it is difficult to optimize the fitting criterion.

- 8.9 Stochastic search: bumping

- Exclusive or (XOR) problem: 2 classes, 2 interacting features.
- The CART algorithm finds the best split on either feature and then splits the resulting strata ⇒ the first vertical split is useless because of the balanced nature of the data!
- Bumping: bootstrap sampling from the data breaks the balance in the classes, and with 20 bootstrap samples it will, by chance, find the near-optimal split.
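The bumping principle and the XOR example can be combined in one sketch. Several simplifying assumptions are made here and are not taken from the slides: the XOR data are uniform on [-1, 1]², the base model is a depth-2 greedy tree that counts misclassifications as its split criterion (CART usually uses Gini or deviance), the prediction error is the training misclassification rate (0-1 loss instead of squared error), and the original sample is included among the candidates so that bumping can keep the original tree. All function names are hypothetical.

```python
import random


def xor_data(n, seed=0):
    """XOR data: two features uniform on [-1, 1], class 1 when exactly one is positive."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        data.append((x, int((x[0] > 0) != (x[1] > 0))))
    return data


def majority(labels):
    """Majority class in a node (ties and empty nodes give class 0)."""
    return int(2 * sum(labels) > len(labels))


def best_split(data):
    """Greedy search over both features and observed thresholds, minimizing misclassifications."""
    best = None
    for j in (0, 1):
        for (u, _) in data:
            t = u[j]
            left = [y for (v, y) in data if v[j] <= t]
            right = [y for (v, y) in data if v[j] > t]
            ml, mr = majority(left), majority(right)
            err = sum(y != ml for y in left) + sum(y != mr for y in right)
            if best is None or err < best[0]:
                best = (err, j, t)
    return best[1], best[2]


def fit_tree(data):
    """Depth-2 greedy tree: a root split, then one split in each child."""
    j, t = best_split(data)
    leaves = []
    for part in ([d for d in data if d[0][j] <= t], [d for d in data if d[0][j] > t]):
        if len(part) < 2:  # too small to split: a single constant leaf
            c = majority([y for _, y in part])
            leaves.append((0, float("inf"), c, c))
            continue
        cj, ct = best_split(part)
        lo = majority([y for (v, y) in part if v[cj] <= ct])
        hi = majority([y for (v, y) in part if v[cj] > ct])
        leaves.append((cj, ct, lo, hi))

    def predict(x):
        cj, ct, lo, hi = leaves[0] if x[j] <= t else leaves[1]
        return lo if x[cj] <= ct else hi

    return predict


def error_rate(predict, data):
    """Misclassification rate of a fitted tree on a data set."""
    return sum(predict(x) != y for x, y in data) / len(data)


def bump(data, B=20, seed=0):
    """Fit trees to B bootstrap samples and keep the one with the lowest training error."""
    rng = random.Random(seed)
    # The original sample is included so that bumping can keep the original tree.
    samples = [data] + [[rng.choice(data) for _ in data] for _ in range(B)]
    trees = [fit_tree(s) for s in samples]
    errors = [error_rate(f, data) for f in trees]  # error on the original training set
    b_hat = min(range(len(trees)), key=errors.__getitem__)
    return trees[b_hat], errors[b_hat], errors[0]
```

Since the original tree is one of the candidates, the selected tree can never have a higher training error than the original one; whether a bootstrap tree actually finds a split near one of the axes depends on the draws.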