Overview of the SCEC Community Modeling Environment: Cyberinfrastructure for Earthquake Science

1

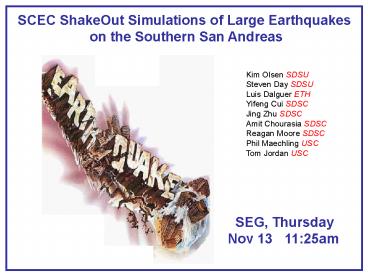

SCEC ShakeOut Simulations of Large Earthquakes on the Southern San Andreas

Kim Olsen (SDSU), Steven Day (SDSU), Luis Dalguer (ETH), Yifeng Cui (SDSC), Jing Zhu (SDSC), Amit Chourasia (SDSC), Reagan Moore (SDSC), Phil Maechling (USC), Tom Jordan (USC)

SEG, Thursday, Nov 13, 11:25 am

2

SCEC CME Collaborations

- Intended to advance physics-based seismic hazard analysis using HPC

[Diagram: the SCEC CME Project and its collaborators: NSF (OCI, EAR), USGS, ISI, SDSC/PSC, IRIS, SCEC institutions, information science. Source: SCEC]

3

Seismic Hazard Analysis

4

The FEMA 366 Report

HAZUS99 Estimates of Annual Earthquake Losses for the United States, 2000

- U.S. annualized earthquake loss (AEL) is about $4.4 billion/yr

- 74% of the total is concentrated in California

- 25% is in Los Angeles County alone

5

1994 Northridge

- When: 17 Jan 1994

- Where: San Fernando Valley

- Damage: $20 billion

- Deaths: 57

- Injured: >9000

6

Slip deficit on the southern SAF since the last event (1690): 315 years × 16 mm/year = 5.04 m → Mw 7.7

Major Earthquakes on the San Andreas Fault, 1690-present

146 +91/-60 yrs; 220 ± 13 yrs

1906 M 7.8

1690 M 7.7

1857 M 7.9
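The slip-deficit arithmetic on this slide can be checked in a few lines. This is only an illustrative sketch: the slide does not state the rupture dimensions or rigidity behind the quoted magnitude, so `length_km`, `width_km`, and `rigidity_pa` below are hypothetical values chosen for illustration, and the result is sensitive to them. The magnitude uses the standard moment-magnitude relation Mw = (2/3)(log10 M0 - 9.1), with M0 in N·m.

```python
import math

def slip_deficit_m(years, rate_mm_per_yr):
    """Accumulated slip deficit in metres for a constant slip rate."""
    return years * rate_mm_per_yr / 1000.0

def moment_magnitude(slip_m, length_km, width_km, rigidity_pa=3.0e10):
    """Mw from seismic moment M0 = rigidity * rupture area * slip (M0 in N*m)."""
    area_m2 = (length_km * 1e3) * (width_km * 1e3)
    m0 = rigidity_pa * area_m2 * slip_m
    return (2.0 / 3.0) * (math.log10(m0) - 9.1)

# Figures from the slide: 315 years at 16 mm/year.
slip = slip_deficit_m(315, 16)

# Hypothetical rupture length, width, and rigidity (NOT from the slide).
mw = moment_magnitude(slip, length_km=200.0, width_km=15.0)
print(f"slip deficit = {slip:.2f} m, Mw = {mw:.1f}")
```

Changing the assumed rupture area by a factor of two shifts Mw by about 0.2 units, which is why the dimensions matter as much as the slip itself.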

7

Southern California in 1857: the most recent "big one" in southern California

8

Southern California in 2008

- Over 23 million people

- Fastest growing areas are close to the San Andreas

9

HPC Aspects

10

CME Key Numerical Modeling Code

- Structured 3D 4th-order staggered-grid velocity-stress FD developed by Olsen, enhanced and optimized at SDSC, extensively validated for a wide range of problems

- Multi-axial perfectly matched layers absorbing boundary conditions on the sides and bottom of the grid, zero-stress flat free surface condition at the top

- Fortran 90, MPI using domain decomposition, I/O using MPI-IO, point-to-point and collective communication

- Staggered-grid split-node dynamic rupture boundary condition
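To make the "4th-order staggered-grid velocity-stress" idea concrete, here is a minimal 1D analogue in plain Python. It is a sketch under stated assumptions, not the CME code: the production solver is 3D Fortran 90 with MPI, whereas this uses one velocity and one stress component, rigid (zero) edges instead of absorbing layers, and hypothetical material values. Velocity lives on nodes `i`, stress on half-nodes `i + 1/2`, and spatial derivatives use the usual 4th-order staggered coefficients 9/8 and -1/24.

```python
import math

C1, C2 = 9.0 / 8.0, -1.0 / 24.0  # 4th-order staggered-grid coefficients

def step(v, s, rho, mu, dt, h):
    """Advance velocity v[i] (node i) and stress s[i] (node i + 1/2) by one time step."""
    n = len(v)
    # Velocity update: rho * dv/dt = ds/dx, derivative centred on node i.
    for i in range(2, n - 2):
        dsdx = (C1 * (s[i] - s[i - 1]) + C2 * (s[i + 1] - s[i - 2])) / h
        v[i] += dt / rho * dsdx
    # Stress update: ds/dt = mu * dv/dx, derivative centred on node i + 1/2.
    for i in range(1, n - 2):
        dvdx = (C1 * (v[i + 1] - v[i]) + C2 * (v[i + 2] - v[i - 1])) / h
        s[i] += dt * mu * dvdx

def run_demo(n=400, nsteps=100):
    """Propagate a Gaussian velocity pulse; return (index, value) of the right-going peak."""
    # Hypothetical values: grid spacing (m), wave speed (m/s), density (kg/m^3).
    h, c, rho = 100.0, 3000.0, 2700.0
    mu = rho * c * c
    dt = 0.5 * h / c  # Courant number 0.5, inside the stability limit of this scheme
    v = [math.exp(-((i - n // 2) / 5.0) ** 2) for i in range(n)]
    s = [0.0] * n
    for _ in range(nsteps):
        step(v, s, rho, mu, dt, h)
    right = v[n // 2 + 1:]
    peak = max(right)
    return n // 2 + 1 + right.index(peak), peak

peak_index, peak_value = run_demo()
print(peak_index, round(peak_value, 2))
```

The initial pulse splits into two half-amplitude pulses travelling in opposite directions. With a Courant number of 0.5, 100 steps move each pulse roughly 50 grid cells from the centre.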

11

Parallelization Strategy

- Each processor is responsible for performing stress and velocity calculations for its portion of the grid, as well as boundary conditions for each volume

- Ghost cells: a two-point-thick padding layer holding the most recently updated wavefield parameters, exchanged from the edge of the neighboring sub-grid

- Parallel I/O
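The ghost-cell scheme described above can be illustrated without MPI. The sketch below is a serial stand-in, assuming a 1D field split into equal sub-grids. In the real code the copies in `exchange_halos` would be MPI point-to-point messages between neighbouring ranks, and all function names here are hypothetical. A two-point-thick halo is exactly what a 4th-order stencil needs, since each derivative reaches two points to either side.

```python
HALO = 2  # two-point-thick padding layer, as on the slide

def split_with_halo(field, nparts):
    """Split a 1D field into equal sub-grids, each padded with HALO ghost cells per side."""
    size = len(field) // nparts
    return [[0.0] * HALO + field[p * size:(p + 1) * size] + [0.0] * HALO
            for p in range(nparts)]

def exchange_halos(subs):
    """Copy each neighbour's edge interior values into the local ghost cells."""
    for p, sub in enumerate(subs):
        if p > 0:
            # Left ghost cells <- last HALO interior points of the left neighbour.
            sub[:HALO] = subs[p - 1][-2 * HALO:-HALO]
        if p < len(subs) - 1:
            # Right ghost cells <- first HALO interior points of the right neighbour.
            sub[-HALO:] = subs[p + 1][HALO:2 * HALO]

def derivative(a):
    """4th-order centred difference at every interior point of a padded array (unit spacing)."""
    return [(8.0 * (a[i + 1] - a[i - 1]) - (a[i + 2] - a[i - 2])) / 12.0
            for i in range(HALO, len(a) - HALO)]

field = [float(i * i % 7) for i in range(16)]
subs = split_with_halo(field, nparts=4)
exchange_halos(subs)

# Each "processor" differentiates only its own sub-grid.
local = [x for sub in subs for x in derivative(sub)]
# Reference: the same stencil applied to the undivided, zero-padded field.
reference = derivative([0.0] * HALO + field + [0.0] * HALO)
print(local == reference)
```

After the exchange, every sub-grid can be updated independently and the concatenated result matches the single-domain computation. That equivalence is what the halo layer is for.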

12

Strong Scaling Performance (BGW)

13

Weak Scaling (BGW)

Δh: 0.4 km, 0.2 km, 0.13 km, 0.1 km

Δh: 0.2 km, 0.1 km, 0.07 km, 0.05 km

14

NSF Track2 Ranger

- 504 Tflops Sun Constellation Linux Cluster

- 3,936 16-way compute nodes (blades)

- 123 TB of total memory and 1.73 PB of global disk space

- Largest computing system for open science research

- The first of the new NSF Track2 HPC acquisitions

15

Geoscience Results: ShakeOut

16

ShakeOut M7.8

- Golden Guardian

- ShakeOut M7.8

- First earthquake drill based on simulation

- 5 million people expected to join (today, 10 am PST)

- Motivated changes to So Cal disaster plan

17

3D Velocity Model

600 km x 300 km x 80 km

San Bernardino Coachella Valley

18

Rupture Histories for Ensemble of Dynamic Sources (ShakeOut-D)

Rupture Histories for ShakeOut-D Sources

19

Wave Propagation for Selected ShakeOut-D Source (g3d7), 0-1 Hz, 14.4 Billion Grid Points (Δh = 100 m)

Wave Propagation
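The 14.4 billion figure is consistent with the 600 km x 300 km x 80 km model volume from the "3D Velocity Model" slide, discretized at a uniform 100 m spacing. A quick check follows, assuming the full volume is gridded uniformly; the slides imply this but do not state it.

```python
def grid_points(lx_km, ly_km, lz_km, dh_m):
    """Number of grid cells in a box of the given size at uniform spacing dh_m."""
    nx = round(lx_km * 1000 / dh_m)
    ny = round(ly_km * 1000 / dh_m)
    nz = round(lz_km * 1000 / dh_m)
    return nx * ny * nz

n = grid_points(600, 300, 80, 100)
print(f"{n:,} grid points")  # 14,400,000,000 grid points
```

Halving the spacing multiplies the count by eight, so the 32 billion mesh nodes mentioned in the HPC summary would correspond to a somewhat finer grid or a larger volume than this one.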

20

3s-SA for ShakeOut-D (Ensemble of Source Models)

21

3-sec SA for ShakeOut-D

Mean ShakeOut-D (7 realizations)

ShakeOut-D 1-σ
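The two maps on this slide summarize the ensemble: a mean over the 7 realizations and a spread map, read here as one standard deviation. A minimal sketch of that per-site reduction follows. The slide does not say whether the statistics were taken on SA directly or on its logarithm, so the arithmetic form below is an assumption, and the input numbers are made-up placeholders, not simulation results.

```python
import statistics

def ensemble_stats(sa_by_realization):
    """Per-site mean and sample standard deviation across realizations.

    sa_by_realization: list of realizations, each a list of SA values (one per site).
    """
    per_site = list(zip(*sa_by_realization))
    means = [statistics.mean(site) for site in per_site]
    sigmas = [statistics.stdev(site) for site in per_site]
    return means, sigmas

# Made-up placeholder SA values (units of g) for 7 realizations at 2 sites.
sa = [[0.10, 0.30], [0.12, 0.28], [0.08, 0.35], [0.11, 0.31],
      [0.09, 0.29], [0.13, 0.33], [0.07, 0.24]]
means, sigmas = ensemble_stats(sa)
for m, s in zip(means, sigmas):
    print(f"mean = {m:.3f} g, sigma = {s:.3f} g")
```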

22

Socio-economic Effects of ShakeOut-D (using HAZUS)

23

Verification and Validation

24

Verification of ShakeOut Simulations

25

Verification of Numerical Codes

26

Comparison of 3s SAs for ShakeOut-D and ARs (Rock Sites)

27

Simulation Storage and Dissemination

- Browser-based SCEC digital library (168 TB)

28

Science Summary

- NW-directed rupture on the southern San Andreas Fault is highly efficient in exciting the L.A. Basin

- Good agreement between ShakeOut simulations using various methods

- Ground motion predictions for ShakeOut-D agree with NGA GMPEs

- Ensemble of scenarios decreases uncertainty in seismic hazards

- The Nov 13 earthquake drill was based on the ShakeOut simulations, a first

- ShakeOut prompted changes to the disaster plan for southern California

29

HPC Summary

- Code enhanced to deal with 32 billion mesh nodes

- Excellent speed-up to 40k cores

- Checkpoint/restart/checksum capabilities

- Input/output data transfer between SDSC disk/HPSS and Ranger disk at 450 MB/s using Globus GridFTP

- 150 TB generated on Ranger, organized as a separate sub-collection

- Sub-collections published through the SCEC digital library (168 TB)

- Ported to p650, BG/L, IA-64, XT3/4, Sun Linux, and other platforms
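To put the transfer rate and data volume in proportion, the sketch below estimates how long a sustained transfer would take. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and, purely for illustration, that the full 150 TB moves at the quoted 450 MB/s. The slides do not say how much data was actually moved at that rate.

```python
def transfer_days(volume_tb, rate_mb_per_s):
    """Days needed to move volume_tb terabytes at a sustained rate (decimal units)."""
    seconds = volume_tb * 1e12 / (rate_mb_per_s * 1e6)
    return seconds / 86400.0

days = transfer_days(150, 450)
print(f"{days:.1f} days")  # 3.9 days
```

At this scale data movement takes days rather than hours, which is one reason checksums and restart capability matter for the workflow.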

31

Comparison of 3s SAs for ShakeOut-D and ARs (selected soil sites)

32

3-sec SA for ShakeOut-D

mean ShakeOut-D (7 realizations)

ShakeOut-D 1-σ