Soft Computing - PowerPoint PPT Presentation

1 / 229

Title:

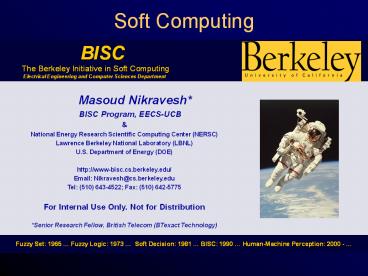

Soft Computing

Description:

National Energy Research Scientific Computing Center (NERSC) ... 1979: CLANCEY'S GUIDON. 1980: SEARLE'S CHINESE ROOM ARTICLE. 1980: MCDERMOTT'S XCON ... – PowerPoint PPT presentation

Number of Views:8166

Avg rating:3.0/5.0

Title: Soft Computing

1

Soft Computing

BISC: The Berkeley Initiative in Soft Computing, Electrical Engineering and Computer Sciences Department

Masoud Nikravesh, BISC Program, EECS-UCB; National Energy Research Scientific Computing Center (NERSC), Lawrence Berkeley National Laboratory (LBNL), U.S. Department of Energy (DOE). http://www-bisc.cs.berkeley.edu/ Email: Nikravesh@cs.berkeley.edu Tel: (510) 643-4522 Fax: (510) 642-5775. For Internal Use Only. Not for Distribution. Senior Research Fellow, British Telecom (BTexact Technology)

Fuzzy Set 1965; Fuzzy Logic 1973; Soft Decision 1981; BISC 1990; Human-Machine Perception 2000-

2

Outline

- Intelligent Systems/Historical Perspective

- Foundation of Soft Computing

- Evolution of Soft Computing

- Neural Network / Neuro Computing

- Fuzzy Logic / Fuzzy Computing

- Genetic Algorithm / Evolutionary Computing

- Hybrid Systems

- BISC Decision Support System

- Demo

- Conclusions

3

Computation?

- Traditional Sense Manipulation of Numbers

- Human Uses Word for Computation and Reasoning

- Conclusions < Word < Natural Language

4

Intelligent System?

The role model for an intelligent system is the Human Mind.

- Dreyfus

- Minds do not use a theory about the everyday world

- Know-how vs. know-that

- Winograd

- Intelligent systems act, don't think

5

Artificial Intelligence

- Knowledge Representation

- Predicates

- Production rules

- Semantic networks

- Frames

- Inference Engine

- Learning

- Common Sense Heuristics

- Uncertainty

6

Artificial Intelligence

- Applications

- Expert tasks

- the algorithm does not exist

- A medical encyclopedia is not equivalent to a physician

- Heuristics

- There is an algorithm but it is useless

- Uncertainty

- The algorithm is not possible

- Complex problems

- The algorithm is too complicated

- Technologies

- Expert systems

- Natural language processing

- Symbolic processing

- Knowledge engineering

7

COMMON SENSE

- Deduction is a method of exact inference (classical logic)

- All Greeks are humans and Socrates is a Greek, therefore Socrates is a human

- Induction infers generalizations from a set of events (science)

- Water boils at 100 degrees

- Abduction infers plausible causes of an effect (medicine)

- You have the symptoms of a flu

8

COMMON SENSE

- Uncertainty

- Maybe I will go shopping

- Probability

- Probability measures "how often" an event occurs

- Principle of incompatibility (Pierre Duhem)

- The certainty that a proposition is true decreases with any increase of its precision

- The power of a vague assertion rests in its being vague (I am not tall)

- A very precise assertion is almost never certain (I am 1.71 m tall)

9

COMMON SENSE

- The Frame Problem

- Classical logic deduces all that is possible from all that is available

- In the real world the amount of information that is available is infinite

- It is not possible to represent what does not change in the universe as a result of an action

- Infinite things change, because one can go into greater and greater detail of description

- The number of preconditions to the execution of any action is also infinite, as the number of things that can go wrong is infinite

10

COMMON SENSE

- Classical Logic is inadequate for ordinary life

- Intuitionism

- Non- Monotonic Logic

- Second thoughts

- Plausible reasoning

- Quick, efficient response to problems when an exact solution is not necessary

- Heuristics: rules of thumb (George Polya, "Heuretics")

- The World of Objects; The Measure Space; Qualitative Reasoning

11

COMMON SENSE

- Fuzzy Logic

- Not just zero and one, true and false

- Things can belong to more than one category, they can even belong to opposite categories, and they can belong to a category only partially

- The degree of membership can assume any value between zero and one
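The idea of partial membership can be sketched in a few lines. This is only an illustration: the `tall` membership function and its 160 cm and 190 cm breakpoints are assumptions made for the example and do not come from the slides; the complement and minimum operators are the usual textbook choices for fuzzy NOT and AND.

```python
def tall(height_cm):
    """Degree of membership in a hypothetical fuzzy set 'tall'.

    Assumed shape: 0 below 160 cm, 1 above 190 cm, linear in between.
    """
    return min(1.0, max(0.0, (height_cm - 160.0) / 30.0))


def fuzzy_not(mu):
    """Standard fuzzy complement."""
    return 1.0 - mu


def fuzzy_and(mu_a, mu_b):
    """Standard fuzzy intersection (minimum operator)."""
    return min(mu_a, mu_b)


height = 175.0
mu_tall = tall(height)              # partial membership in 'tall'
mu_not_tall = fuzzy_not(mu_tall)    # membership in the opposite category
mu_both = fuzzy_and(mu_tall, mu_not_tall)

print(mu_tall, mu_not_tall, mu_both)
```

With these assumed breakpoints, a person of 175 cm belongs to "tall" and to "not tall" to the same degree, which crisp sets cannot express.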

12

Cost

"As complexity rises, precise statements lose meaning, and meaningful statements lose precision." (L.A. Zadeh)

Principle of incompatibility (Pierre Duhem): The certainty that a proposition is true decreases with any increase of its precision. The power of a vague assertion rests in its being vague (I am not tall). A very precise assertion is almost never certain (I am 1.71 m tall).

Uncertainty

Precision

13

TURING'S TEST

- Turing: A computer can be said to be intelligent if its answers are indistinguishable from the answers of a human being

?

?

Computer

14

A new age?

- 1859 THEORY OF EVOLUTION

- 1854 RIEMANN'S GEOMETRY

- 1862 AUTOMOBILE

- 1865 HEREDITY

- 1870 THERMODYNAMICS

- 1876 TELEPHONE

- 1877 GRAMOPHONE

- 1888 ELECTROMAGNETISM

- 1894 CINEMA

- 1900 PLANCK'S QUANTUM

- 1903 AIRPLANE

- 1905 EINSTEIN'S SPECIAL RELATIVITY

- 1907 RADIO

- 1916 EINSTEIN'S GENERAL RELATIVITY

- 1920 POPULATION GENETICS

- 1923 WAVE-PARTICLE DUALISM

- 1926 QUANTUM MECHANICS

- 1929 HUBBLE'S EXPANDING UNIVERSE

- 1930 TELEVISION

- 1940 MODERN SYNTHESIS

- 1940 SEMIOTICS

- 1944 DNA

- 1945 ATOMIC BOMB

- 1946 COMPUTER

- 1948 TRANSISTOR

- 1949 HEBB'S LAW

- 1953 DOUBLE-HELIX

- 1955 ARTIFICIAL INTELLIGENCE

- 1958 LINGUISTICS

- 1961 SPACE TRAVEL

- 1967 QUARKS

- 1974 SUPERSTRING

- 1978 NEURAL DARWINISM

- 1982 PERSONAL COMPUTER

- 1988 SELF-ORGANIZING SYSTEMS

15

Different Historical Paths

- PHILOSOPHY (SINCE BEGINNING)

- PSYCHOLOGY (SINCE BEGINNING)

- MATH (SINCE FREGE)

- BIOLOGY (SINCE DISCOVERY OF NEURONS)

- COMPUTER SCIENCE (SINCE A.I., 1955)

- LINGUISTICS (SINCE CHOMSKY, 1960s)

- PHYSICS (RECENTLY, 1980s)

Thinking About Thought: The Nature of Mind, Piero Scaruffi (Oct 8 - Dec 10, 2002)

16

The Nature of Mind: The Contribution of Information Science

- The mind as a symbol processor

- Formal study of human knowledge

- Knowledge processing

- Common-sense knowledge

- Neural Networks

17

The Nature of Mind: The Contribution of Linguistics

- Competence over performance

- Pragmatics

- Metaphor

18

The Nature of Mind: The Contribution of Psychology

- The mind as a processor of concepts

- Reconstructive memory

- Memory is learning and is reasoning.

- Fundamental unity of cognition

19

The Nature of Mind: The Contribution of Neurophysiology

- The brain is an evolutionary system

- Mind shaped mainly by genes and experience

- Neural-level competition

- Connectionism

20

The Nature of Mind: The Contribution of Physics

- Living beings create order from disorder

- Non-equilibrium thermodynamics

- Self-organizing systems

- The mind as a self-organizing system

- Theories of consciousness based on quantum and relativity physics

21

MACHINE INTELLIGENCE History

- Make it idiot proof and someone will make a better idiot

22

- DAVID HILBERT (1928)

- MATHEMATICS: BLIND MANIPULATION OF SYMBOLS

- FORMAL SYSTEM: A SET OF AXIOMS AND A SET OF INFERENCE RULES

- PROPOSITIONS AND PREDICATES

- DEDUCTION: EXACT REASONING

- KURT GOEDEL (1931)

- A CONCEPT OF TRUTH CANNOT BE DEFINED WITHIN A FORMAL SYSTEM

- ALFRED TARSKI (1935)

- DEFINITION OF TRUTH: A STATEMENT IS TRUE IF IT CORRESPONDS TO REALITY (CORRESPONDENCE THEORY OF TRUTH)

- BUILD MODELS OF THE WORLD WHICH YIELD INTERPRETATIONS OF SENTENCES IN THAT WORLD

- TRUTH CAN ONLY BE RELATIVE TO SOMETHING

- META-THEORY

23

- ALAN TURING (1936)

- COMPUTATION: THE FORMAL MANIPULATION OF SYMBOLS THROUGH THE APPLICATION OF FORMAL RULES

- HILBERT'S PROGRAM REDUCED TO MANIPULATION OF SYMBOLS

- LOGIC: SYMBOL PROCESSING

- EACH PREDICATE IS DEFINED BY A FUNCTION, EACH FUNCTION IS DEFINED BY AN ALGORITHM

- NORBERT WIENER (1947)

- CYBERNETICS

- BRIDGE BETWEEN MACHINES AND NATURE, BETWEEN "ARTIFICIAL" SYSTEMS AND NATURAL SYSTEMS

- FEEDBACK, HOMEOSTASIS, MESSAGE, NOISE, INFORMATION

- PARADIGM SHIFT FROM THE WORLD OF CONTINUOUS LAWS TO THE WORLD OF ALGORITHMS, DIGITAL VS ANALOG WORLD

24

- CLAUDE SHANNON AND WARREN WEAVER (1949)

- INFORMATION THEORY

- ENTROPY: A MEASURE OF DISORDER, A MEASURE OF THE LACK OF INFORMATION

- LEON BRILLOUIN'S NEGENTROPY PRINCIPLE OF INFORMATION

- ANDREI KOLMOGOROV (1960)

- ALGORITHMIC INFORMATION THEORY

- COMPLEXITY: QUANTITY OF INFORMATION

- CAPACITY OF THE HUMAN BRAIN: 10 TO THE 15TH POWER

- MAXIMUM AMOUNT OF INFORMATION STORED IN A HUMAN BEING: 10 TO THE 45TH

- ENTROPY OF A HUMAN BEING: 10 TO THE 23RD

- LOTFI A. ZADEH (1965)

- FUZZY SET

- "Stated informally, the essence of this principle is that as the complexity of a system increases, our ability to make precise and yet significant statements about its behavior diminishes until a threshold is reached beyond which precision and significance (or relevance) become almost mutually exclusive characteristics."

25

MACHINE INTELLIGENCE History

- 1936 TURING MACHINE

- 1940 VON NEUMANN'S DISTINCTION BETWEEN DATA AND INSTRUCTIONS

- 1943 FIRST COMPUTER

- 1943 MCCULLOCH & PITTS NEURON

- 1947 VON NEUMANN'S SELF-REPRODUCING AUTOMATA

- 1948 WIENER'S CYBERNETICS

- 1950 TURING'S TEST

- 1956 DARTMOUTH CONFERENCE ON ARTIFICIAL INTELLIGENCE

- 1957 NEWELL & SIMON'S GENERAL PROBLEM SOLVER

- 1957 ROSENBLATT'S PERCEPTRON

- 1958 SELFRIDGE'S PANDEMONIUM

- 1957 CHOMSKY'S GRAMMAR

- 1959 SAMUEL'S CHECKERS

- 1960 PUTNAM'S COMPUTATIONAL FUNCTIONALISM

- 1960 WIDROW'S ADALINE

- 1965 FEIGENBAUM'S DENDRAL

26

MACHINE INTELLIGENCE History

- 1965 ZADEH'S FUZZY LOGIC

- 1966 WEIZENBAUM'S ELIZA

- 1967 HAYES-ROTH'S HEARSAY

- 1967 FILLMORE'S CASE FRAME GRAMMAR

- 1969 MINSKY & PAPERT'S PAPER ON NEURAL NETWORKS

- 1970 WOODS' ATN

- 1972 BUCHANAN'S MYCIN

- 1972 WINOGRAD'S SHRDLU

- 1974 MINSKY'S FRAME

- 1975 SCHANK'S SCRIPT

- 1975 HOLLAND'S GENETIC ALGORITHMS

- 1979 CLANCEY'S GUIDON

- 1980 SEARLE'S CHINESE ROOM ARTICLE

- 1980 MCDERMOTT'S XCON

- 1982 HOPFIELD'S NEURAL NET

- 1986 RUMELHART & MCCLELLAND'S PDP

- 1990 ZADEH'S SOFT COMPUTING

- 2000 ZADEH'S COMPUTING WITH WORDS AND PERCEPTIONS, PNL

27

What is Soft Computing?

The basic ideas underlying soft computing in its current incarnation have links to many earlier influences, among them Prof. Zadeh's 1965 paper on fuzzy sets; the 1973 paper on the analysis of complex systems and decision processes; and the 1979 report (1981 paper) on possibility theory and soft data analysis. The principal constituents of soft computing (SC) are fuzzy logic (FL), neural network theory (NN) and probabilistic reasoning (PR), with the latter subsuming belief networks, evolutionary computing including DNA computing, chaos theory and parts of learning theory.

28

SOFT COMPUTING

SC

29

SOFT COMPUTING

"Soft computing is a consortium of computing methodologies which collectively provide a foundation for the Conception, Design and Deployment of Intelligent Systems." (L.A. Zadeh)

"...in contrast to traditional hard computing, soft computing exploits the tolerance for imprecision, uncertainty, and partial truth to achieve tractability, robustness, low solution-cost, and better rapport with reality." (L.A. Zadeh)

The role model for Soft Computing is the Human Mind.

30

SOFT COMPUTING

- Neuro-Computing (NC)

- Fuzzy Logic (FL)

- Genetic Computing (GC)

- Probabilistic Reasoning (PR)

- Chaotic Systems (CS), Belief Networks (BN), Learning Theory (LT)

- Related Technologies

- Statistics (Stat.)

- Artificial Intelligence (AI)

- Case-Based Reasoning (CBR)

- Rule-Based Expert Systems (RBR)

- Machine Learning (Induction Trees)

- Bayesian Belief Networks (BBN)

31

SOFT COMPUTING

- Neural Networks

- create complicated models without knowing their structure

- gradually adapt existing models using training data

- Fuzzy Logic

- Fuzzy Rules are easy and intuitively understandable

- Genetic Algorithms

- find parameters through evolution (usually when a direct algorithm is unknown)

32

Neural Networks

- Ensemble of simple processing units

- Connection weights define functionality

- Derive weights from training data (usually gradient descent based algorithms)

33

Fuzzy Logic

- Allow partial membership to sets

- Express knowledge through linguistic terms and rules (Computing with Words)

- Derive sets of Fuzzy Rules from data (usually based on heuristics)

34

Evolutionary Algorithms

- Finding an optimal structure (parameters) for a model is often complicated (due to large search space, complex structure)

- Find structure (parameters) through evolution (generate population, evaluate, breed new population)
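The generate, evaluate, breed loop can be sketched as follows. This is a minimal illustration and not the method used in the presentation: the bit-string encoding, the OneMax fitness function (count of ones), truncation selection, one-point crossover, elitism and all parameter values are assumptions chosen to keep the example short.

```python
import random


def fitness(bits):
    """OneMax: count the ones. Stands in for any model-quality score."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, p_mut=0.02, seed=0):
    rng = random.Random(seed)
    # 1. Generate an initial random population.
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # 2. Evaluate: rank individuals by fitness.
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        # 3. Breed: keep the best individual unchanged (elitism) ...
        new_pop = [pop[0][:]]
        parents = pop[: pop_size // 2]  # truncation selection
        # ... and fill the rest with mutated children of the better half.
        while len(new_pop) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_bits)  # one-point crossover
            child = a[:cut] + b[cut:]
            child = [1 - g if rng.random() < p_mut else g for g in child]
            new_pop.append(child)
        pop = new_pop
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = evolve()
print(fitness(best), history[0])
```

Because the best individual is always copied into the next generation, the best fitness recorded in `history` never decreases from one generation to the next.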

35

Why Fuzzy Logic?

- Uncertainty in the data and laws of nature

- Imprecision due to measurement human error

- Incomplete and sparse information

- Subjective and Linguistic rules

- "So far as the laws of mathematics refer to reality, they are not certain; and so far as they are certain, they don't refer to reality." (Albert Einstein)

36

Words are less precise than numbers!

- When information is too imprecise

- Close to reality

- Complex problem

- "As complexity rises, precise statements lose meaning, and meaningful statements lose precision." (Lotfi A. Zadeh)

37

Why Neural Network?

- Structure Free Nonlinear Mapping

- Multivariable Systems

- Trains Easily Based on Historical Data

- Parallel Processing Fault Tolerance

- Much Like Human Brain

38

Why Evolutionary Computing?

- For Multi-Objective and Multi-Criteria Optimization Purposes

- Resolving Conflict

- Capability to learn, adapt, and be self-aware

- Darwin's law

39

Why Fuzzy Evolutionary Computing?

- To Extract Fuzzy Rules

- To Tune Fuzzy Membership

40

Neural Network Fuzzy Logic Models

- A Neural Network capturing presence of Fuzzy Rules

- Ideal for Knowledge Acquisition / Discovery

- Introduces fuzzy weights to NN internal structure

41

SOFT COMPUTING: Neural Network

NNet

42

Biological Neuron

43

Biological Neuron

44

(No Transcript)

45

Biological vs. Artificial Neuron

46

Analogy between biological and artificial neural

networks

47

Artificial Neuron

48

Schematic Diagram for Single Neuron

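[Figure: a single neuron with inputs x1, ..., xk, weights w1, ..., wk and a bias b attached to a constant input of 1]

$s = b + \sum_{i=1}^{k} w_i x_i$

$y = f(s) = f(b + w_1 x_1 + w_2 x_2 + \dots + w_k x_k)$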
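The single-neuron computation, a weighted sum of the inputs plus a bias passed through an activation function, can be sketched as below. The weights, bias and inputs are made-up values for illustration, and treating sgn(0) as +1 is an assumption (conventions differ).

```python
def sgn(s):
    """Sign activation; sgn(0) is taken as +1 here (an assumed convention)."""
    return 1 if s >= 0 else -1


def neuron(x, w, b, f=sgn):
    """y = f(s) with s = b + sum_i w_i * x_i."""
    s = b + sum(wi * xi for wi, xi in zip(w, x))
    return f(s)


w = [0.5, -1.0]   # hypothetical weights w1, w2
b = 0.25          # hypothetical bias

y_pos = neuron([1.0, 0.5], w, b)   # s = 0.25 + 0.5 - 0.5 = 0.25
y_neg = neuron([0.0, 1.0], w, b)   # s = 0.25 - 1.0 = -0.75
print(y_pos, y_neg)
```

Passing a different `f` (for example the identity, as in ADALINE) changes only the last step; the weighted sum stays the same.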

49

Activation Functions

[Figure: plot of $f(s) = \mathrm{sgn}(s)$ against $s$, stepping from $-1$ to $+1$]

50

Multilayer perceptron with two hidden layers

[Figure: input signals enter the input layer, pass through the first hidden layer and the second hidden layer, and leave the output layer as output signals]

Artificial Neural Network (Feedforward)

51

Multi Layer Perceptron ANN

52

Mapping Functions

- One hidden layer (two adaptive layers) networks can approximate any continuous functional mapping from one finite dimensional space to another

- One-to-one mapping

- Many-to-one mapping

- Many-to-many mapping

53

Artificial Neural Network vs. Human Brain

- Largest neural computer

- 20,000 neurons

- Worm's brain

- 1,000 neurons

- But the worm's brain outperforms neural computers

- It's the connections, not the neurons!

- Human brain

- 100,000,000,000 neurons

- 200,000,000,000,000 connections

54

Brain vs. Computer Processing

- Processing Speed: milliseconds vs. nanoseconds.

- Processing Order: massively parallel vs. serial.

- Abundance and Complexity: between $10^{11}$ and $10^{14}$ neurons operate in parallel in the brain at any given moment, each with between $10^{3}$ and $10^{4}$ abutting connections per neuron.

- Knowledge Storage: adaptable vs. new information destroys old information.

- Fault Tolerance: knowledge is retained through redundant, distributed encoding of information vs. the corruption of a conventional computer's memory, which is irretrievable and leads to failure as well.

Cesare Pianese

55

NEURAL NETWORK

PERCEPTRON

56

History of Neurocomputing

Cesare Pianese

57

- First Attempts

- Simple neurons which are binary devices with fixed thresholds; simple logic functions like "and", "or": McCulloch and Pitts (1943)

- Promising Emerging Technology

- Perceptron: three-layer network which can learn to connect or associate a given input to a random output: Rosenblatt (1958)

- ADALINE (ADAptive LInear Element): an analogue electronic device which uses the least-mean-squares (LMS) learning rule: Widrow & Hoff (1960)

- 1962: Rosenblatt proved the convergence of the perceptron training rule.

- Period of Frustration & Disrepute

- Minsky & Papert's book in 1969, in which they generalised the limitations of single-layer Perceptrons to multilayered systems.

- "...our intuitive judgment that the extension (to multilayer systems) is sterile"

- Innovation

- Grossberg's (Steve Grossberg and Gail Carpenter in 1988) ART (Adaptive Resonance Theory) networks based on biologically plausible models.

- Anderson and Kohonen developed associative techniques

- Klopf (A. Henry Klopf) in 1972 developed a basis for learning in artificial neurons based on a biological principle for neuronal learning called heterostasis.

- Werbos (Paul Werbos, 1974) developed and used the back-propagation learning method

- 1970-1985: Very little research on Neural Nets

- Fukushima's (Kunihiko Fukushima) cognitron (a stepwise-trained multilayered neural network for interpretation of handwritten characters).

- Re-Emergence

- 1986: Invention of Backpropagation (Rumelhart and McClelland, but also Parker and, earlier on, Werbos), which can learn from nonlinearly-separable data sets.

- Since 1985: A lot of research in Neural Nets!

58

Hecht-Nielsen's MLP/backprop

"Theory of backpropagation neural network", IEEE Proc., Int. Conf. NNet, Washington, DC, 1989

Robert Hecht-Nielsen, 18-07-47, San Francisco; HNC Software/UCSD; electrical engineering, computer science

Neurocomputing, Addison-Wesley, 1991

59

Different Non-Linearly Separable Problems

[Figure: for each structure, example decision regions for the Exclusive-OR problem, for classes with meshed regions, and the most general region shapes]

Structure: Types of Decision Regions

- Single-Layer: Half Plane Bounded By Hyperplane

- Two-Layer: Convex Open Or Closed Regions

- Three-Layer: Arbitrary (Complexity Limited by No. of Nodes)
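The Exclusive-OR case can be checked directly. In the sketch below, the hand-picked weights of the two-layer network and the coarse search grid for the single unit are illustrative assumptions; the point is only that one threshold unit (a half plane) cannot reproduce XOR, while two hidden units combined by an output unit can.

```python
from itertools import product


def step(s):
    """Hard threshold: 1 if s > 0, else 0."""
    return 1 if s > 0 else 0


def single_layer(x1, x2, w1, w2, b):
    """One threshold unit: its decision region is a half plane."""
    return step(w1 * x1 + w2 * x2 + b)


def two_layer_xor(x1, x2):
    """Two hidden threshold units plus one output unit (hand-set weights)."""
    h_or = step(x1 + x2 - 0.5)        # fires if at least one input is on
    h_and = step(x1 + x2 - 1.5)       # fires only if both inputs are on
    return step(h_or - h_and - 0.5)   # "OR but not AND"


XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Coarse grid search over single-unit parameters (hypothetical grid).
grid = [i / 2 for i in range(-8, 9)]
single_unit_solutions = [
    (w1, w2, b)
    for w1, w2, b in product(grid, repeat=3)
    if all(single_layer(x1, x2, w1, w2, b) == t for (x1, x2), t in XOR.items())
]

print(len(single_unit_solutions))
print([two_layer_xor(x1, x2) for (x1, x2) in XOR])
```

The grid search is only a demonstration; the impossibility for a single unit holds for all real weights, since the four XOR constraints on `w1`, `w2` and `b` contradict each other.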

60

Architecture of Neural Networks

- Layers

- Input, hidden and output layers

- Number of units per layer

- Connections Flow of Information

- Feedforward

- Recurrent

- Fully connected

- Laterally connected

- Modular Networks

61

Topology

Layers

Connection Weights

Architecture

Modular

Feedforward

Hetero- associative

Recurrent

Autoassociative

Cesare Pianese

62

Learning Paradigms

- Supervised learning

- network trained by showing a set of input and output patterns

- Unsupervised learning

- network is shown only the input patterns

- Reinforcement learning

- Information on quality of response is available

63

Learning/Training

Supervised Learning

- Batch learning: The network parameters (weights and biases) are adjusted once all the training examples have been presented to the network. The parameter change is based on the global error made during the classification of the training examples (inputs).

- Adaptive learning: The weights are modified after each example has been presented to the network. The network tries to satisfy all the examples one at a time.

Unsupervised Learning

- The network has no feedback about the output accuracy and learns through a self-arrangement of its structure: similar inputs activate similar neurons while different inputs activate other neurons.
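The difference between batch and adaptive (per-example) updates can be sketched on the smallest possible model. The one-input linear unit, the squared-error loss, the toy data set and the learning rate below are all assumptions made for illustration.

```python
def batch_epoch(w, b, data, lr):
    """Batch learning: accumulate the error over all examples, update once."""
    grad_w = grad_b = 0.0
    for x, t in data:
        err = (w * x + b) - t
        grad_w += err * x
        grad_b += err
    n = len(data)
    return w - lr * grad_w / n, b - lr * grad_b / n


def adaptive_epoch(w, b, data, lr):
    """Adaptive learning: update the parameters after every single example."""
    for x, t in data:
        err = (w * x + b) - t
        w -= lr * err * x
        b -= lr * err
    return w, b


# Toy data generated from t = 2x + 1 (hypothetical).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

first_batch = batch_epoch(0.0, 0.0, data, lr=0.05)   # one update per epoch

wb, bb = 0.0, 0.0
wa, ba = 0.0, 0.0
for _ in range(2000):
    wb, bb = batch_epoch(wb, bb, data, lr=0.05)
    wa, ba = adaptive_epoch(wa, ba, data, lr=0.05)

print(first_batch, (wb, bb), (wa, ba))
```

On this noise-free data both schemes approach the same parameters; they differ in how many updates they make per pass and in how noisy the trajectory is.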

64

Neural Network Classification

Cesare Pianese

65

Neural Network Models

- Neural network Models are Characterised by

- Type of Neurons(units, nodes, neurodes)

- Connectionist architecture

- Learning algorithm

- Recall algorithm

66

Multilayer Perceptron

Kohonen

Radial Basis Functions

Neural Network Models

Generalised Regression

Probabilistic

ART

Recurrent

Cesare Pianese

67

Neural Network Classification

Cesare Pianese

68

Unit delay operator

Recurrent network without hidden units

Recurrent network with hidden units

Artificial Neural Network (Feedforward)

69

Other Types of Neural Networks

SOM

RBFN

Committee Machines

ART1

70

Output layer

Hidden layer

Input layer

Artificial Neural Network (recurrent)

71

A Common Framework for Neural Networks and

Multivariate Statistical Method

Y (X1, X2, , Xp) ? ?k ?k (?k X1, X2, , Xp)

72

Input Transformation

Kernel-Based Method

Linear

Nonlinear

73

(No Transcript)

74

(No Transcript)

75

BACKPROPAGATION NEURAL NETWORK

BNNet

76

1974 Werbos Backprop

math, economics (by lack of brain theory)

"Optimization: A Foundation for Understanding Consciousness", in Optimality in Biological and Artificial Neural Networks, Levine and Elsberry eds, Erlbaum 1997

Paul J. Werbos, 04-09-47, Philadelphia; NSF, Arlington VA; 1974 Ph.D. dissertation at Harvard University

77

Typical Neural Network

- A neural network is a set of interconnected neurons (simple processing units)

- Each neuron receives signals from other neurons and sends an output to other neurons

- The signals are amplified by the strength of the connection

- The strength of the connection changes over time according to a feedback mechanism

- The net can be trained

78

Three-layer back-propagation neural network

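[Figure: input signals x1, x2, ..., xi, ..., xn enter the input layer, pass through weights wij to the hidden layer (neurons 1, 2, ..., j, ..., m) and through weights wjk to the output layer (neurons 1, 2, ..., k, ..., l), producing y1, y2, ..., yk, ..., yl; error signals travel in the opposite direction]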

79

Backpropagation - Gradient Descent

- Weight initialisation

- saturation of units

- random values

- Error surface characteristics

- local and global minima

- multi-dimensional plateaus

- narrow valleys or ravines

- Learning rate

- Momentum term

80

Fixed step size too small

Fixed step size too large

Steepest descent with line minimization

Fixed step size too large
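The effect of the step size can be reproduced on a small quadratic "ravine". The test function, starting point and step sizes below are assumptions chosen for illustration; on this function a fixed step is stable only below 2/25 = 0.08, and the exact line-minimization step for a quadratic is the standard closed form `(g.g) / (g.A.g)`.

```python
def f(p):
    """Elongated quadratic bowl: f(x, y) = 0.5 * (x^2 + 25 * y^2)."""
    x, y = p
    return 0.5 * (x * x + 25.0 * y * y)


def grad(p):
    x, y = p
    return (x, 25.0 * y)


def fixed_step(p, eta, steps):
    """Gradient descent with a constant step size eta."""
    for _ in range(steps):
        g = grad(p)
        p = (p[0] - eta * g[0], p[1] - eta * g[1])
    return p


def line_min(p, steps):
    """Steepest descent with exact line minimization along each gradient."""
    for _ in range(steps):
        g = grad(p)
        gg = g[0] ** 2 + g[1] ** 2
        if gg == 0.0:
            break  # already at the minimum
        g_a_g = g[0] ** 2 + 25.0 * g[1] ** 2
        alpha = gg / g_a_g  # optimal step for this quadratic
        p = (p[0] - alpha * g[0], p[1] - alpha * g[1])
    return p


start = (5.0, 1.0)
too_small = fixed_step(start, 0.01, 50)   # stable but slow
too_large = fixed_step(start, 0.09, 50)   # oscillates and diverges in y
exact = line_min(start, 50)

print(f(start), f(too_small), f(too_large), f(exact))
```

After the same number of steps, the small step has barely moved along the flat direction, the large step has blown up along the steep direction, and the line-minimized run is close to the minimum.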

81

[Figure: search paths from start to end for six minimization methods]

- DFP: Davidon-Fletcher-Powell (unconstrained quasi-Newton minimization)

- BFGS: Broyden-Fletcher-Goldfarb-Shanno (unconstrained quasi-Newton minimization)

- Simplex: Nelder-Mead (unconstrained simplex minimization)

- Steepest: Steepest Descent (unconstrained minimization)

- L-M: Levenberg-Marquardt (least squares minimization)

- G-N: Gauss-Newton (least squares minimization)

82

NEURAL NETWORK

NNet

83

NEURAL NETWORK: ADAptive LInear Neuron / ADAptive LInear Element

ADALINE / MADALINE

84

1960 WIDROW'S ADALINE

1960: ADALINE (ADAptive LInear Element), an analogue electronic device which uses the least-mean-squares (LMS) learning rule (Widrow & Hoff)

1962: ADALINE (ADAptive LInear Neuron), "Generalization and information storage in networks of ADALINE neurons"

- Bernard Widrow and Ted Hoff introduced the Least-Mean-Square algorithm (a.k.a. delta-rule or Widrow-Hoff rule) and used it to train the ADALINE (ADAptive Linear Neuron)

- The ADALINE was similar to the perceptron, except that it used a linear activation function instead of the threshold

- The LMS algorithm is still heavily used in adaptive signal processing

Bernard Widrow, 24-01-29, Norwich CT; electrical engineering, Stanford
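The LMS (delta, or Widrow-Hoff) rule adjusts each weight in proportion to the error of the linear output. The sketch below is a minimal version under stated assumptions: the logical-AND training set with targets of -1 and +1, the learning rate and the epoch count are illustrative choices, not taken from the slides.

```python
def linear_output(w, b, x):
    """ADALINE output before any thresholding: a plain weighted sum."""
    return b + sum(wi * xi for wi, xi in zip(w, x))


def train_adaline(samples, lr=0.05, epochs=300):
    """LMS rule: w <- w + lr * (t - y) * x, applied after every example."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, t in samples:
            err = t - linear_output(w, b, x)  # error of the LINEAR output
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


def classify(w, b, x):
    """Threshold applied only at recall time, not during training."""
    return 1 if linear_output(w, b, x) >= 0 else -1


# Logical AND with bipolar targets (hypothetical training set).
samples = [([0, 0], -1), ([0, 1], -1), ([1, 0], -1), ([1, 1], 1)]
w, b = train_adaline(samples)
print(w, b, [classify(w, b, x) for x, _ in samples])
```

Unlike the perceptron rule, the update here uses the error of the linear output, so the weights settle near the least-squares fit (about `w = [1, 1]`, `b = -1.5` for this data) rather than stopping as soon as all examples are classified correctly.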

85

Widrow and Hoff, 1960

- Bernard Widrow and Ted Hoff introduced the Least-Mean-Square algorithm (a.k.a. delta-rule or Widrow-Hoff rule) and used it to train the Adaline (ADAptive Linear Neuron)

- The Adaline was similar to the perceptron, except that it used a linear activation function instead of the threshold

- The LMS algorithm is still heavily used in adaptive signal processing

MADALINE: Many ADALINEs; Network of ADALINEs

86

Perceptron vs. ADALINE

Perceptron: LTU, Empirical Hebbian Assumption

ADALINE: LGU, Gradient Descent

[Figure: activation functions f(s) plotted against s: sgn(s), stepping between -1 and 1, and linear(s)]

LTU: sign function, +/- (Positive/Negative)

LGU: Continuous and Differentiable Activation function, including the Linear function

MADALINE: Many ADALINEs; Network of ADALINEs

87

Linearly non-Separable

Linearly Separable

88

NEURAL NETWORK: Support Vector Machine

SVM

89

From basic trigonometry, the distance between a point x and a plane (w, b) is

Cherkassky and Mulier, 1998

Noticing that the optimal hyperplane has infinite solutions by simply scaling the weight vector and bias, we choose the solution for which the discriminant function becomes one for the training examples closest to the boundary

This is known as the canonical hyperplane

Ricardo Gutierrez-Osuna

90

Therefore, the distance from the closest example to the boundary is

And the margin becomes

Ricardo Gutierrez-Osuna
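The formulas on the two slides above did not survive in this transcript. As a hedged reconstruction, the standard textbook expressions for the quantities named in the text are given below; the notation ($w$, $b$, $x_i$, $m$) is assumed and may differ from the original slides.

```latex
% Distance between a point x and the hyperplane (w, b)
d(x; w, b) = \frac{\lvert w^{T} x + b \rvert}{\lVert w \rVert}

% Canonical hyperplane: the discriminant is one for the closest examples
\min_{i} \, \lvert w^{T} x_i + b \rvert = 1

% Distance from the closest example to the boundary
d_{\min} = \frac{1}{\lVert w \rVert}

% Margin: twice that distance
m = \frac{2}{\lVert w \rVert}
```

Maximizing the margin is therefore equivalent to minimizing $\lVert w \rVert$ subject to every training example lying on the correct side at discriminant value at least one.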

91

Cherkassky and Mulier, 1998; Haykin, 1999; Schölkopf, 2002 @ http://kernel-machines.org/

92

Burges, 1998; Haykin, 1999

93

1982 Kohonen's SOM

Presents self-organizing maps using one- and two-dimensional lattice structures. Kohonen SOMs have received far more attention than van der Malsburg's work and have become the benchmark for innovations in self-organization.

Unsupervised Hebbian learning: Hebb -> support/strengthen activity; learns only iff input data is redundant; find/recognize patterns/structures

Teuvo Kohonen, born 11-07-34, Finland; TU Helsinki

SOFM: self-organized feature mapping, or SOM: self-organized mapping

Self-organizing maps. Springer (1997)

94

(No Transcript)

95

(No Transcript)

96

NEURAL NETWORK: Learning Vector Quantization

LVQ

97

Learning Vector Quantization (LVQ)

VQ can be considered a special case of SOFM. The treatment will overlap at several points. Many researchers have contributed to the area, notably Kohonen and coworkers.

Although the SOM can be used for classification as such, one has to remember that it does not utilize class information at all, and thus its results are inherently suboptimal. However, with small modifications, the network can take the class into account. The function SOM_SUPERVISED does this. Learning vector quantization (LVQ) is an algorithm that is very similar to the SOM in many aspects. However, it is specifically designed for classification.

98

Learning Vector Quantization (LVQ)

Single element from a competitive layer: coupling within the layer, such that the best wins, or the winner takes all. Achieved by positive feedback to itself and inhibition of others. The weight vectors of the winning nodes are called VQ vectors.

- Often the Euclidean distance, or some squared error measure, is used to find the minimum distance

Duifhuis
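The winner-take-all step and a supervised update can be sketched as follows. The slides do not state which LVQ variant is meant; the sketch assumes the basic LVQ1 rule (move the winning prototype toward the input if its class matches, away otherwise), and the prototypes, inputs and learning rate are made up for illustration.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def winner(prototypes, x):
    """Index of the prototype (VQ vector) closest to x: winner takes all."""
    return min(range(len(prototypes)), key=lambda i: sq_dist(prototypes[i][0], x))


def lvq1_step(prototypes, x, label, lr=0.1):
    """One LVQ1 update; modifies `prototypes` in place, returns the winner."""
    i = winner(prototypes, x)
    vec, cls = prototypes[i]
    sign = 1.0 if cls == label else -1.0  # attract if correct, repel if wrong
    prototypes[i] = ([v + sign * lr * (xi - v) for v, xi in zip(vec, x)], cls)
    return i


# Two hypothetical prototypes, one per class.
prototypes = [([0.0, 0.0], "A"), ([1.0, 1.0], "B")]

first = lvq1_step(prototypes, [0.2, 0.0], "A")    # correct class: attract
second = lvq1_step(prototypes, [0.9, 1.0], "A")   # wrong class: repel
print(first, second, prototypes)
```

Only the winning prototype moves at each step, which is what distinguishes this scheme from the neighborhood updates of the SOM.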

99

SOM, Unsupervised

Although the SOM can be used for classification as such, one has to remember that it does not utilize class information at all, and thus its results are inherently suboptimal.

Learning vector quantization (LVQ) is an algorithm that is very similar to the SOM in many aspects. However, it is specifically designed for classification.

LVQ, Supervised

100

NEURAL NETWORK: Adaptive Resonance Theory

ART

101

1988 Grossberg's ART

- (Steve Grossberg and Gail Carpenter in 1988) ART (Adaptive Resonance Theory) networks based on biologically plausible models.

- Carpenter (1997) Distributed learning, recognition, and prediction by ART and ARTMAP neural networks, Neural Networks 10, 1473-1494.

- Grossberg (1995) The attentive brain, American Scientist 88, 438-449

Gail Carpenter and Stephen Grossberg, Boston University; cognitive neural systems, mathematical psychology

102

[Figure: binary inputs ii feed the F1 layer (M nodes xi, template matching); bottom-up weights vij and top-down weights wji connect F1 with the F2 layer (N nodes yj, template choosing, with lateral connections), which gives the output categories]

- Single element from an ART1 network: lateral connections here limited to the output layer, where the best wins, or the winner takes all. Examples of a set of bottom-up connections and a set of top-down connections.

- In some versions of ART1 also lateral connections in F1

Duifhuis

103

The ART2 network architecture, dynamics

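[Figure: inputs xi enter layer F1, shown with an expanded node i containing the units ti, si, ui, qi, pi and ri; the bottom-up path (weights vij) leads to layer F2 (nodes yj, output) and the top-down path (weights wji) leads back to F1; distributed AGCs and the vigilance parameter are marked]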

Duifhuis

104

(1994) CBD Signal Function

SIMULATIONS: Average of 10 runs, 1 training epoch. Training points: 1,000 (DIAG) or 10,000 (CIS); testing points: 10,000

Circle-in-Square (CIS)

DIAGONAL

91.70 correct 15.2 coding nodes

94.41 correct 40.7 coding nodes

105

NEW Graded Signal Function

DIAGONAL

Circle-in-Square (CIS)

95.26 correct (h1) 17.8 coding nodes

96.73 correct (h0.5) 46.4 coding nodes

- Smoother boundaries

- Improved correct but slightly more nodes

106

Point ARTMAP

98.72 correct 275 coding nodes

97.8 correct 59.2 coding nodes

107

NEURAL NETWORK: Radial Basis Function

RBF

108

Radial Basis Function

- A hidden layer of radial kernels

- The hidden layer performs a non-linear transformation of input space

- The resulting hidden space is typically of higher dimensionality than the input space

- An output layer of linear neurons

- The output layer performs linear regression to predict the desired targets

- Dimension of hidden layer is much larger than that of input layer

- Cover's theorem on the separability of patterns: "A complex pattern-classification problem cast in a high-dimensional space non-linearly is more likely to be linearly separable than in a low-dimensional space"

- Support Vector Machines: RBFs are one of the kernel functions most commonly used in SVMs!

109

Radial Basis Function

RBFs have their origins in techniques for performing exact function interpolation (Bishop, 1995)

110

Ricardo Gutierrez-Osuna

111

RBFs Training Haykin, 1999

- Unsupervised selection of RBF centers: RBF centers are selected so as to match the distribution of training examples in the input feature space

- Supervised computation of output vectors: Hidden-to-output weight vectors are determined so as to minimize the sum squared error between the RBF outputs and the desired targets. Since the outputs are linear, the optimal weights can be computed using fast, linear matrix inversion

Once the RBF centers have been selected, hidden-to-output weights are computed so as to minimize the MSE error at the output

112

RBFs Training Haykin, 1999

Once the RBF centers have been selected, hidden-to-output weights are computed so as to minimize the MSE error at the output

Now, since the hidden activation patterns are fixed, the optimum weight vector W can be obtained directly from the conventional pseudo-inverse solution
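The equations for this step are missing from the transcript. A hedged reconstruction in standard notation follows; the symbols $\Phi$, $c_j$, $\sigma$ and $T$ are assumed names, and the Gaussian kernel is one common choice rather than something the slides confirm.

```latex
% Hidden activations for N training examples x_i and M fixed centers c_j
\Phi_{ij} = \phi\left(\lVert x_i - c_j \rVert\right),
\qquad \phi(r) = \exp\left(-\frac{r^{2}}{2\sigma^{2}}\right)

% Sum-squared error at the linear output layer (T holds the targets)
E(W) = \tfrac{1}{2} \, \lVert \Phi W - T \rVert^{2}

% Setting the gradient to zero gives the pseudo-inverse solution
W = \Phi^{+} T = \left(\Phi^{T} \Phi\right)^{-1} \Phi^{T} T
```

The second form of the last line assumes that $\Phi^{T}\Phi$ is invertible, which requires the hidden activation columns to be linearly independent.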

113

Nikravesh and Nikravesh et al. (1994-2003)

- Functional NNet (1993-1994)

- Faster and more Robust Training (1993-1994)

- Better Uncertainty Analysis (1994-2000)

- As A Basis for Computing with Words and Perceptions (CWP) (2000-2003)

114

NEURAL NETWORK

PNN

115

Probabilistic Neural Networks

GRNN and PNN are more computationally intense than RBF

Generalized regression (GRNN) and probabilistic (PNN) networks are variants of the radial basis function (RBF) network. Unlike the standard RBF, the weights of these networks can be calculated analytically. In this case, the number of cluster centers is by definition equal to the number of exemplars, and they are all set to the same variance. Use this type of RBF only when the number of exemplars is so small (<100) or so dispersed that clustering is ill-defined.
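A rough sketch of the GRNN idea, with one kernel per exemplar and a single shared width, is given below. It assumes Gaussian kernels and the usual kernel-weighted-average form of the GRNN output; the function name and the toy data are hypothetical.

```python
import math

def grnn_predict(x, exemplars, targets, sigma):
    """Kernel-weighted average of the stored targets (one kernel per exemplar)."""
    weights = []
    for e in exemplars:
        d2 = sum((xi - ei) ** 2 for xi, ei in zip(x, e))
        # Every kernel shares the same width sigma, as the slide describes.
        weights.append(math.exp(-d2 / (2.0 * sigma ** 2)))
    total = sum(weights)
    return sum(w * t for w, t in zip(weights, targets)) / total

# Toy one-dimensional data (an assumption made for this example).
exemplars = [(0.0,), (1.0,), (2.0,)]
targets = [0.0, 1.0, 4.0]
y_mid = grnn_predict((1.0,), exemplars, targets, sigma=0.3)
```

No iterative training is involved: the exemplars themselves are the centers, which is also why the cost of each prediction grows with the number of exemplars.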

116

RBF Networks

117

NEURAL NETWORK: Generalized Regression Neural Network

GRNN

118

K-Nearest Neighbors

KNN

119

K-Nearest Neighbors

The K Nearest Neighbor Rule (k-NNR) is a very intuitive method that classifies unlabeled examples based on their similarity with examples in the training set

For a given unlabeled example xu ∈ ℜ^D, find the k closest labeled examples in the training data set and assign xu to the class that appears most frequently within the k-subset

The k-NNR only requires

- An integer k

- A set of labeled examples (training data)

- A metric to measure closeness

Example: in the example below we have three classes, and the goal is to find a class label for the unknown example xu. In this case we use the Euclidean distance and a value of k = 5 neighbors. Of the 5 closest neighbors, 4 belong to ω1 and 1 belongs to ω3, so xu is assigned to ω1, the predominant class

Ricardo Gutierrez-Osuna
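The k-NNR can be sketched in a few lines of Python. The data points below are invented so that, as in the slide's example, 4 of the 5 nearest neighbors belong to one class and 1 to another; the function name and the labels `w1`, `w2`, `w3` are assumptions.

```python
import math
from collections import Counter

def knn_classify(x_u, examples, k):
    """Return (predicted label, vote fractions) for the unlabeled point x_u.

    examples is a list of (point, label) pairs; closeness is Euclidean distance.
    """
    nearest = sorted(examples, key=lambda ex: math.dist(x_u, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    label = votes.most_common(1)[0][0]
    # The vote fractions are the "probabilistic information" of the k-subset.
    fractions = {lab: n / len(nearest) for lab, n in votes.items()}
    return label, fractions

# Invented training data with three classes.
examples = [
    ((1.0, 1.0), "w1"), ((1.2, 0.8), "w1"), ((0.9, 1.3), "w1"), ((1.4, 1.2), "w1"),
    ((5.0, 5.0), "w2"), ((5.2, 4.8), "w2"),
    ((2.0, 2.0), "w3"), ((3.5, 3.5), "w3"), ((0.0, 4.0), "w3"),
]
label, fractions = knn_classify((1.3, 1.3), examples, k=5)
```

Here `label` is `"w1"`, with vote fractions of 0.8 for `w1` and 0.2 for `w3`.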

120

K-Nearest Neighbors

- Data: a 2-dimensional, 3-class problem, where the class-conditional densities are multi-modal and non-linearly separable, as illustrated in the figure

- Use k-NNR with k = 5

- Metric: Euclidean distance

The resulting decision boundaries and decision regions are shown below

Ricardo Gutierrez-Osuna

121

1-NNR versus k-NNR

The use of large values of k has two main advantages

- Yields smoother decision regions

- Provides probabilistic information: the ratio of examples for each class gives information about the ambiguity of the decision

However, too large values of k are detrimental

- It destroys the locality of the estimation, since farther examples are taken into account

- In addition, it increases the computational burden

Ricardo Gutierrez-Osuna

122

NEURAL NETWORK: Boltzmann Machine

BM

123

- The Boltzmann Machine is related to the Hopfield network. It is an extension in that it can have input units, hidden units and output units.

- supervised learning in general networks

- stochastic network (Hinton, Sejnowski, ..)

- symmetric weights, like Hopfield, but with hidden units

- special training to avoid local minima and reach global minima: simulated annealing

- different uses

- associative memory

- hetero-associative mapping

- solve optimization problems

- Partial or noisy input patterns as inputs => complete patterns as outputs. Similar to the previous figure (called heteroassociative). This is called autoassociative: no separate in / out, only visible units.

124

SOFT COMPUTING: Fuzzy Logic

FL

125

VARIABLES AND LINGUISTIC VARIABLES

- one of the most basic concepts in science is that of a variable

- variable: numerical (X = 5; X = (3, 2); ...)

- variable: linguistic (X is small; (X, Y) is much larger; ...)

- a linguistic variable is a variable whose values are words or sentences in a natural or synthetic language (Zadeh 1973)

- the concept of a linguistic variable plays a central role in fuzzy logic and underlies most of its applications

126

Fuzzy Sets

Fuzzy Logic: element x belongs to set A with a certain degree of membership, µ(x) ∈ [0,1]

Classical Logic: element x belongs to set A or it does not, µ(x) ∈ {0,1}

[Figure: membership µA(x) for A = young, on a scale from 0 to 1 against x in years, one plot for each case]

127

Membership Functions

[Figure: the predicate Old represented as a crisp set and as a fuzzy set]
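The contrast between a crisp and a fuzzy version of the predicate Old can be illustrated as follows. The threshold of 60 years and the linear ramp from 50 to 70 years are assumptions chosen for the example; the slides do not give the actual curves.

```python
def crisp_old(age, threshold=60.0):
    """Classical set: membership is either 0 or 1 (threshold is an assumed value)."""
    return 1.0 if age >= threshold else 0.0

def fuzzy_old(age, start=50.0, full=70.0):
    """Fuzzy set: membership rises linearly from 0 at `start` to 1 at `full` (assumed shape)."""
    if age <= start:
        return 0.0
    if age >= full:
        return 1.0
    return (age - start) / (full - start)

ages = [40, 55, 60, 65, 80]
crisp = [crisp_old(a) for a in ages]
fuzzy = [fuzzy_old(a) for a in ages]
```

The crisp version jumps from 0 to 1 at the threshold, while the fuzzy version assigns intermediate degrees (0.25, 0.5, 0.75) to the ages in between.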

128

EXAMPLES OF F-GRANULATION (LINGUISTIC VARIABLES)

- color: red, blue, green, yellow, ...

- age: young, middle-aged, old, very old

- size: small, big, very big, ...

- distance: near, far, very, not very far, ...

[Figure: membership functions µ, from 0 to 1 over Age, for young, middle-aged, old and very old]

129

LINGUISTIC VARIABLES AND F-GRANULATION (Zadeh, 1973)

example: Age

- primary terms: young, middle-aged, old

- modifiers: not, very, quite, rather, ...

- linguistic values: young, very young, not very young and not very old, ...

[Figure: membership functions µ, from 0 to 1 over Age, for young, middle-aged, old and very old]
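One common way to turn modifiers into operations on membership functions is sketched below. It assumes the widely used conventions that "very" squares the membership degree, "not" takes the complement, and "and" takes the minimum; the slides do not state which operators are intended, and the shapes of `mu_young` and `mu_old` are invented for the example.

```python
def mu_young(age):
    """Assumed membership function for the primary term 'young'."""
    if age <= 25:
        return 1.0
    if age >= 45:
        return 0.0
    return (45 - age) / 20

def mu_old(age):
    """Assumed membership function for the primary term 'old'."""
    if age <= 55:
        return 0.0
    if age >= 75:
        return 1.0
    return (age - 55) / 20

def very(mu):
    """Modifier 'very' as concentration: square the membership degree."""
    return lambda x: mu(x) ** 2

def not_(mu):
    """Modifier 'not' as complement."""
    return lambda x: 1.0 - mu(x)

def and_(mu_a, mu_b):
    """Connective 'and' as the minimum of the two degrees."""
    return lambda x: min(mu_a(x), mu_b(x))

# The linguistic value "not very young and not very old" built from primary terms.
not_very_young_and_not_very_old = and_(not_(very(mu_young)), not_(very(mu_old)))
degree_at_35 = not_very_young_and_not_very_old(35)
```

At age 35 the assumed `mu_young` gives 0.5, so "very young" is 0.25 and the composite value evaluates to 0.75.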

130

Fuzzy Logic

[Diagram: Crisp Input -> Fuzzifier Module -> Fuzzy Inference Engine (using the Linguistic Rule Knowledge Base) -> Defuzzifier Module -> Crisp Output]

Fuzzy Sets, Fuzzy Numbers, Fuzzification, Fuzzy Operators, Fuzzy Rules, Fuzzy Inference, Defuzzification

131

Observation

132

A Discrete Fuzzy Set

Age = {Young: 0.25, Middle-Aged: 0.75}

Membership of Young in the set Age is 0.25

Membership of Middle-Aged in the set Age is 0.75

133

Fuzzy Rule Base

If Age is Old then Roya is 70

If Age is Middle-Aged then Roya is 45

If Age is Young then Roya is 20

134

Inferencing

Decision = {20: 0, 45: 0.75, 70: 0.25}

[Figure: membership µ plotted over Age]

135

Defuzzification

Output = (20 × 0 + 45 × 0.75 + 70 × 0.25) / (0 + 0.75 + 0.25)

Output = 51.25 → Middle-Aged
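The defuzzification on this slide is a membership-weighted average of the rule consequents. A minimal sketch using the slide's numbers (consequents 20, 45, 70 with degrees 0, 0.75, 0.25) is shown below; the function and variable names are hypothetical.

```python
def weighted_average(decision):
    """decision maps each rule's crisp consequent to the degree to which that rule fires."""
    total = sum(decision.values())
    if total == 0:
        raise ValueError("no rule fired, so the output is undefined")
    return sum(value * degree for value, degree in decision.items()) / total

rule_consequents = {"Young": 20, "Middle-Aged": 45, "Old": 70}
memberships = {"Young": 0.0, "Middle-Aged": 0.75, "Old": 0.25}

# Pair every consequent with the membership degree of its rule's antecedent.
decision = {rule_consequents[term]: memberships[term] for term in rule_consequents}
output = weighted_average(decision)
```

The result is 51.25, which lies closest to the Middle-Aged consequent of 45.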

136

WHAT IS FUZZY LOGIC (FL) ?

fuzzy logic (FL) has four principal facets

- FL/L: logical (narrow sense FL)

- FL/S: set-theoretic

- FL/E: epistemic

- FL/R: relational

F: fuzziness/fuzzification; G: granularity/granulation; F.G: F and G

137

Schema of a Fuzzy Decision

[Figure: schema of a fuzzy decision. The measured temperature t is fuzzified against µcold, µwarm and µhot; inference applies the rule-base to the output sets µopen, µhalf and µclose; defuzzification gives the crisp output v for the valve setting.]

rule-base

- if temp is cold then valve is open (µcold = 0.7)

- if temp is warm then valve is half (µwarm = 0.2)

- if temp is hot then valve is close (µhot = 0.0)

138

SOFT COMPUTING: Fuzzy Logic

FL

139

Fuzzy Logic Genealogy

- Origins: multi-valued logic (MVL) for treatment of imprecision and vagueness

- 1930s: Post, Kleene, and Lukasiewicz attempted to represent undetermined, unknown, and other possible intermediate truth-values.

- 1937: Max Black suggested the use of a consistency profile to represent vague (ambiguous) concepts

- 1965: Zadeh proposed a complete theory of fuzzy sets (and its isomorphic fuzzy logic), to represent and manipulate ill-defined concepts

140

1965: ZADEH'S FUZZY LOGIC

- "Stated informally, the essence of this principle is that as the complexity of a system increases, our ability to make precise and yet significant statements about its behavior diminishes until a threshold is reached beyond which precision and significance (or relevance) become almost mutually exclusive characteristics."

- "...in contrast to traditional hard computing, soft computing exploits the tolerance for imprecision, uncertainty, and partial truth to achieve tractability, robustness, low solution-cost, and better rapport with reality"

- 1949: the concept of a time-varying transfer function

- 1950: with J. R. Ragazzini, generalization of Wiener's theory of prediction

- 1952: with J. R. Ragazzini, pioneered the development of the z-transform

- 1953: design of nonlinear filters; constructed a hierarchy of nonlinear systems based on the Volterra-Wiener representation

- 1963: with Charles Desoer, classic text on the state-space theory of linear systems

Lotfi A. Zadeh. Electrical Engineering, Columbia University. An alumnus of the University of Teheran, MIT, and Columbia University.

Fuzzy Set 1965; Fuzzy Logic 1973; Soft Decision 1981; BISC 1990; Human-Machine Perception 2000 -

141

WHAT IS FUZZY LOGIC?

- fuzzy logic has been and still is, though to a lesser degree, an object of controversy

- for the most part, the controversies are rooted in misperceptions, especially a misperception of the relation between fuzzy logic and probability theory

- a source of confusion is that the label "fuzzy logic" is used in two different senses

- (a) narrow sense: fuzzy logic is a logical system

- (b) wide sense: fuzzy logic is coextensive with fuzzy set theory

- today, the label "fuzzy logic" (FL) is used for the most part in its wide sense

142

EVOLUTION OF LOGIC

[Figure: logics in order of increasing generality: two-valued, multi-valued, fuzzy]

LAZ 10-26-00

143

EVOLUTION OF LOGIC

- two-valued (Aristotelian): nothing is a matter of degree

- multi-valued: truth is a matter of degree

- fuzzy: everything is a matter of degree

- principle of the excluded middle: every proposition is either true or false

144

STATISTICS

Count of papers containing the word "fuzzy" in the title, as cited in the INSPEC and MATH.SCI.NET databases (data for 2001 are not complete). Compiled by Camille Wanat, Head, Engineering Library, UC Berkeley, June 21, 2002.

| Period | INSPEC/fuzzy | Math.Sci.Net/fuzzy |
|---|---|---|
| 1970-1979 | 570 | 441 |
| 1980-1989 | 2,383 | 2,463 |
| 1990-1999 | 23,121 | 5,459 |
| 2000-present | 5,940 | 1,670 |
| 1970-present | 32,014 | 10,033 |

146

PRINCIPAL APPLICATIONS OF FUZZY LOGIC

FL

- control

- consumer products

- industrial systems

- automotive

- decision analysis

- medicine

- geology

- pattern recognition

- robotics

CFR: calculus of fuzzy rules

147

EMERGING APPLICATIONS OF FUZZY LOGIC

- computational theory of perceptions

- natural language processing

- financial engineering

- biomedicine

- legal reasoning

- forecasting

148

NEW Tools

149

Dividing the Input Space

Grid based

Individual membership functions

150

Mamdani Inference System

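[Figure: Mamdani inference system. For the input (x, y), rule 1 uses input MFs A1 (over X) and B1 (over Y) with output MF C1, giving Z1; rule 2 uses A2 and B2 with output MF C2, giving Z2; the output Z is the centroid of area of the combined output MFs.]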

151

First-Order Takagi Sugeno FIS

- Fuzzy Rule base

- If X is A1 and Y is B1 then Z = p1*x + q1*y + r1

- If X is A2 and Y is B2 then Z = p2*x + q2*y + r2

- Fuzzy reasoning
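A first-order Takagi Sugeno system with two rules of this form can be sketched as follows. The Gaussian membership functions, the product used for "and", the weighted-average output and all parameter values are assumptions made for illustration; the slide gives only the rule form.

```python
import math

def gauss(x, center, width):
    """Assumed Gaussian membership function."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))

# Each rule: (A_i parameters, B_i parameters, (p_i, q_i, r_i)). Values are made up.
RULES = [
    ((0.0, 1.0), (0.0, 1.0), (1.0, 1.0, 0.0)),  # if X is A1 and Y is B1 then Z = x + y
    ((2.0, 1.0), (2.0, 1.0), (0.0, 0.0, 5.0)),  # if X is A2 and Y is B2 then Z = 5
]

def sugeno(x, y, rules=RULES):
    """Weighted average of the linear rule outputs, weighted by firing strength."""
    numerator = 0.0
    denominator = 0.0
    for a_params, b_params, (p, q, r) in rules:
        w = gauss(x, *a_params) * gauss(y, *b_params)  # firing strength of the rule
        z = p * x + q * y + r                          # first-order consequent
        numerator += w * z
        denominator += w
    return numerator / denominator

z_mid = sugeno(1.0, 1.0)
```

At (1, 1) both rules fire equally, so the output is the plain average of the two consequents, (2 + 5) / 2 = 3.5. Near (0, 0) the first rule dominates and the output approaches x + y.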

152

SOFT COMPUTING: Neuro-Fuzzy Computing

NFC

153

Neuro-Fuzzy Modeling

Hybrid Model

154

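Adaptive Neuro-Fuzzy Inference System (ANFIS)

- Takagi Sugeno FIS

- Input partitioning

- LSE + gradient descent training

[Figure: ANFIS network. Inputs x and y feed membership nodes A1, A2, B1, B2 (nonlinear parameters); product nodes Π give firing strengths w1 ... w4; the rule outputs w1z1 ... w4z4 (linear parameters) are summed as Σwizi and divided by Σwi to give the output z.]

| Parameters | Forward pass | Backward pass |
|---|---|---|
| MF parameter (nonlinear) | fixed | steepest descent |
| Coefficient parameter (linear) | least-squares | fixed |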

155

NEFCON (NEuro Fuzzy CONtroller)

- Mamdani FIS

- Weights represent fuzzy sets.

- Intelligent neurons

- Shared weights to preserve semantical characteristics.

- Nodes R1, R2 represent the rules.

- Learning: two stages

- Learning fuzzy rules

- Learning fuzzy sets

[Figure: NEFCON network with input nodes, rule nodes R1, R2 and an output node]

156

NEFCON Learning

- Learning fuzzy sets

- Fuzzy error backpropagation (reinforcement), FEBP

- 1. Fuzzy goodness measure

- 2. Extended rule-based fuzzy error

- 3. Determine the contribution of each rule

- 4. Modify membership functions

- Learning fuzzy rules

- Incremental learning

- Decremental learning

NEFPROX (function approximation, supervised learning)

NEFCLASS (classification problems, winner-takes-all interpretation)

157

Fuzzy Adaptive learning Control Network (FALCON)

- Linguistic nodes for each output variable: one is for training data (desired output) and the other is for the actual output

- Hybrid-learning algorithm comprising unsupervised learning to locate the initial membership functions / rule base and gradient descent learning to optimally adjust the parameters of the MF to produce the desired outputs

158

Generalized Approximate Reasoning based Intelligent Control (GARIC)

- Implements a neuro-fuzzy controller by using two neural network modules, the ASN (Action Selection Network) and the AEN (Action State Evaluation Network).

- The AEN is an adaptive critic that evaluates the actions of the ASN.

- GARIC uses a mixture of gradient descent and reinforcement learning to fine-tune the node parameters.

- Not easily interpretable

159

Self Constructing Neural Fuzzy Inference Network (SONFIN)

- Takagi Sugeno inference system

- Input space partitioned by a clustering algorithm related to the required accuracy

- Projection-based correlation measure using the Gram-Schmidt orthogonalization algorithm for rule consequent parts

- Learning

- Consequent parameters: least mean squares algorithm

- Pre-condition parameters: backpropagation

160

Evolving Fuzzy Neural Network (EFuNN)

- Five-layered architecture

- Mamdani-type FIS

- Nodes are created during learning. New neurons are added if MF of input variable < threshold

- Rule base layer: learning of temporal relationships (supervised / unsupervised, hybrid learning)

- Performance depends on the selection of network parameters (error threshold, sensitivity threshold, etc.)

161

Fuzzy Inference Environment Software with Tuning (FINEST)

- Parameterization of the inference procedure

- Tuning of fuzzy predicates and of combination and implication functions

- Using backpropagation algorithm

162

Fuzzy Net - FUN

- Network initialized with a rule base and MFs

- Each layer has different activation functions

- Triangular MFs

- Learning

- Rules: pure stochastic search

- MF: gradient descent or reinforcement stochastic search

- Neuro-Fuzzy system ?

163

Evolutionary Design of Neuro-Fuzzy Systems

164

Performance of Some Neuro-Fuzzy systems

168

Compactification Algorithm Interpretation

A Simple Algorithm for Qualitative Analysis: Rule Extraction and Building Decision Tree

Dr. Nikravesh and Prof. Zadeh

169

Compactification Algorithm Interpretation

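| A1 | A2 | ... | An | F1 |
|---|---|---|---|---|
| a11 | a12 | ... | a1n | a1 |
| a21 | a22 | ... | a2n | a2 |
| ... | ... | ... | ... | ... |
| am1 | am2 | ... | amn | am |

Test Attribute Set: a1, a2, ..., an, ?b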

170

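Table 1 (intermediate results); * marks an attribute that does not appear in the row

| Stage | A1 | A2 | A3 | F1 |
|---|---|---|---|---|
| Group 1 (initial) | a11 | a12 | a13 | a1 |
| | a11 | a22 | a13 | a1 |
| | a21 | a22 | a13 | a1 |
| | a31 | a22 | a13 | a1 |
| | a31 | a12 | a23 | a1 |
| | a11 | a22 | a23 | a1 |
| | a21 | a22 | a23 | a1 |
| | a31 | a22 | a23 | a1 |
| Pass (1) | * | a22 | a13 | a1 |
| | * | a22 | a23 | a1 |
| Pass (2) | a31 | * | a23 | a1 |
| Pass (3) | a11 | a22 | * | a1 |
| | a21 | a22 | * | a1 |
| | a31 | a22 | * | a1 |
| | * | a22 | * | a1 |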

171

MAXIMALLY COMPACT REPRESENTATION

172

THE CONCEPT OF PSEUDO-X

[Figure: a pseudo-number n shown as a non-precisiable granule, and a pseudo-function f shown as a non-precisiable plot of Y against X]

- if f is a function from reals to reals, the corresponding pseudo-function is a function from reals to pseudo-numbers

173

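PERCEPTION-BASED VS. MEASUREMENT-BASED INFORMATION

[Figure: two plots of f, Y against X, contrasting measurement-based and perception-based information, with granules labelled medium and large; one plot carries the label "Y2 X X2" as transcribed]

f:

- if X is small then Y is small

- if X is medium then Y is large

- if X is large then Y is small

(X,Y) is (small × small + medium × large + large × small)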

LAZ 11-6-00

174

PERCEPTION OF A FUNCTION

[Figure: a function f plotted as Y against X, and its perception as a fuzzy graph with the granule medium × large marked]

f (fuzzy graph):

- if X is small then Y is small

- if X is medium then Y is large

- if X is large then Y is small

LAZ 7-22-02

175

INTERPOLATION

Y is B1 if X is A1

Y is B2 if X is A2

..

Y is Bn if X is An

Y is ?B if X is A, where A ≠ A1, ..., An

Conjunctive approach (Zadeh 1973)

Disjunctive approach (Zadeh 1971, Zadeh 1973, Mamdani 1974)

176

DEFINITION OF p: ABOUT 20-25

?

1

c-definition

v

0

20

25

?

1

f-de