5.1 Length and Dot Product in $\mathbb{R}^n$ - PowerPoint PPT Presentation

Title:

5.1%20Length%20and%20Dot%20Product%20in%20Rn

Description:

Chapter 5 Inner Product Spaces 5.1 Length and Dot Product in Rn Notes: The length of a vector is also called its norm. Notes: is called a unit vector. – PowerPoint PPT presentation

Number of Views:75

Avg rating:3.0/5.0

Title: 5.1%20Length%20and%20Dot%20Product%20in%20Rn

1

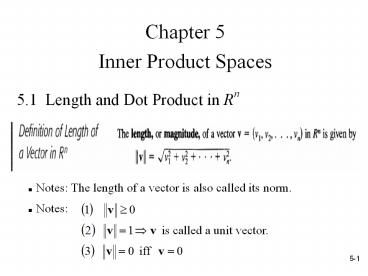

5.1 Length and Dot Product in $\mathbb{R}^n$

Chapter 5 Inner Product Spaces

- Notes: The length of a vector $\mathbf{v}$, written $\|\mathbf{v}\|$, is also called its norm.

- Notes: If $\|\mathbf{v}\| = 1$, then $\mathbf{v}$ is called a unit vector.
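
A minimal sketch in Python of the norm and the unit-vector test, assuming vectors are plain tuples of real numbers and the Euclidean length. The names `norm` and `is_unit_vector` are hypothetical helpers, and the tolerance `tol` is an assumption needed because floating-point arithmetic rarely yields exactly 1.

```python
import math

# Illustrative sketch: helper names are hypothetical, not from the slides.

def norm(v):
    """Euclidean length (norm) ||v|| = sqrt(v1^2 + ... + vn^2)."""
    return math.sqrt(sum(x * x for x in v))

def is_unit_vector(v, tol=1e-12):
    """True when ||v|| = 1, up to an assumed floating-point tolerance."""
    return abs(norm(v) - 1.0) <= tol

print(norm((3, 4)))                # 5.0
print(is_unit_vector((0.6, 0.8)))  # True
```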

- Notes

- The process of finding the unit vector $\mathbf{v}/\|\mathbf{v}\|$ in the direction of a nonzero vector $\mathbf{v}$ is called normalizing the vector $\mathbf{v}$.
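
Normalizing can be sketched as dividing each component by the length. This is a minimal illustration assuming tuple vectors; `normalize` is a hypothetical name, and it refuses the zero vector because that vector has no direction to normalize.

```python
import math

# Illustrative sketch: `normalize` is a hypothetical helper name.

def normalize(v):
    """Return the unit vector v / ||v|| in the direction of a nonzero vector v."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0:
        # The zero vector has no direction, so it cannot be normalized.
        raise ValueError("cannot normalize the zero vector")
    return tuple(x / length for x in v)

u = normalize((0, -2, 2))
print(u)  # approximately (0.0, -0.7071, 0.7071)
```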

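- Standard unit vectors in $\mathbb{R}^n$: $\{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n\} = \{(1, 0, \ldots, 0), (0, 1, \ldots, 0), \ldots, (0, 0, \ldots, 1)\}$

- Ex

- the standard unit vectors in $\mathbb{R}^2$: $\{\mathbf{i}, \mathbf{j}\} = \{(1, 0), (0, 1)\}$

- the standard unit vectors in $\mathbb{R}^3$: $\{\mathbf{i}, \mathbf{j}, \mathbf{k}\} = \{(1, 0, 0), (0, 1, 0), (0, 0, 1)\}$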
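
The standard unit vectors can be generated directly, and any vector can be rebuilt from them as $\mathbf{v} = v_1\mathbf{e}_1 + \cdots + v_n\mathbf{e}_n$. A small sketch with hypothetical helper names; note that Python indexes from 0, so index `i` here corresponds to $\mathbf{e}_{i+1}$.

```python
# Illustrative sketch: helper names are hypothetical; indices are 0-based.

def standard_unit_vector(n, i):
    """e_(i+1) in R^n: 1.0 in position i, 0.0 elsewhere."""
    return tuple(1.0 if k == i else 0.0 for k in range(n))

def standard_basis(n):
    """The set {e_1, ..., e_n} of standard unit vectors in R^n."""
    return [standard_unit_vector(n, i) for i in range(n)]

# In R^3 this gives i, j, k.
basis = standard_basis(3)
print(basis)  # [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

# Any v in R^3 equals v_1 e_1 + v_2 e_2 + v_3 e_3.
v = (2.0, -1.0, 5.0)
rebuilt = tuple(sum(v[i] * e[k] for i, e in enumerate(basis)) for k in range(3))
print(rebuilt == v)  # True
```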

- Euclidean n-space

- $\mathbb{R}^n$ was defined to be the set of all ordered n-tuples of real numbers. When $\mathbb{R}^n$ is combined with the standard operations of vector addition, scalar multiplication, vector length, and the dot product, the resulting vector space is called Euclidean n-space.

- Dot product and matrix multiplication: $\mathbf{u} \cdot \mathbf{v} = \mathbf{u}^T\mathbf{v}$

(A vector in $\mathbb{R}^n$ is represented as an $n \times 1$ column matrix.)
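
To see that $\mathbf{u} \cdot \mathbf{v} = \mathbf{u}^T\mathbf{v}$, a sketch can compute the dot product directly and also as the product of a $1 \times n$ row matrix with an $n \times 1$ column matrix. Matrices are assumed to be lists of rows, and `dot` and `matmul` are hypothetical helper names.

```python
# Illustrative sketch: matrices are lists of rows; helper names are hypothetical.

def dot(u, v):
    """Dot product u . v = u1*v1 + ... + un*vn."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def matmul(A, B):
    """Matrix product AB for A (m x p) and B (p x q), both as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

u = [1, 2, 3]
v = [4, -5, 6]
u_col = [[x] for x in u]                  # u as a 3 x 1 column matrix
v_col = [[x] for x in v]                  # v as a 3 x 1 column matrix
u_T = [list(row) for row in zip(*u_col)]  # transpose of u: a 1 x 3 row matrix

print(dot(u, v))           # 12
print(matmul(u_T, v_col))  # [[12]]
```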

- Note: The angle between the zero vector and another vector is not defined.

- Note: The vector $\mathbf{0}$ is said to be orthogonal to every vector.
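
Both notes can be seen in a short sketch, assuming the usual definition of the angle, $\cos\theta = \dfrac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{u}\|\,\|\mathbf{v}\|}$ with $0 \le \theta \le \pi$, and orthogonality as $\mathbf{u} \cdot \mathbf{v} = 0$. The helper names and the tolerance are hypothetical choices for floating-point work.

```python
import math

# Illustrative sketch: helper names and tolerance are assumptions.

def dot(u, v):
    """Euclidean dot product u . v."""
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    """||v|| = sqrt(v . v)."""
    return math.sqrt(dot(v, v))

def angle(u, v):
    """Angle theta in [0, pi] with cos(theta) = (u . v) / (||u|| ||v||)."""
    if norm(u) == 0 or norm(v) == 0:
        # The angle involving the zero vector is not defined.
        raise ValueError("angle is not defined for the zero vector")
    c = dot(u, v) / (norm(u) * norm(v))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, c)))

def is_orthogonal(u, v, tol=1e-12):
    """u and v are orthogonal when u . v = 0 (up to the tolerance)."""
    return abs(dot(u, v)) <= tol

print(angle((1, 0), (0, 1)))          # pi / 2, about 1.5708
print(is_orthogonal((0, 0), (3, 7)))  # True: 0 is orthogonal to every vector
```

Orthogonality is tested through the dot product rather than through `angle`, which is why the zero vector can be orthogonal to everything even though its angle is undefined.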

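- Note

- Equality occurs in the triangle inequality $\|\mathbf{u} + \mathbf{v}\| \le \|\mathbf{u}\| + \|\mathbf{v}\|$ if and only if the vectors $\mathbf{u}$ and $\mathbf{v}$ have the same direction (that is, one of them is a nonnegative scalar multiple of the other).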
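
A quick numerical check of the equality case: the sketch measures the "gap" $\|\mathbf{u}\| + \|\mathbf{v}\| - \|\mathbf{u} + \mathbf{v}\|$, which the triangle inequality says is never negative. The helper names are hypothetical; values are floating point, so "zero" means zero up to rounding.

```python
import math

# Illustrative sketch: helper names are hypothetical.

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def add(u, v):
    return tuple(a + b for a, b in zip(u, v))

def triangle_gap(u, v):
    """||u|| + ||v|| - ||u + v||, which the triangle inequality says is >= 0."""
    return norm(u) + norm(v) - norm(add(u, v))

print(triangle_gap((1, 2), (2, 4)))   # same direction: 0 up to rounding
print(triangle_gap((1, 0), (0, 1)))   # 2 - sqrt(2), about 0.586
print(triangle_gap((1, 0), (-1, 0)))  # opposite directions: 2.0
```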

5.2 Inner Product Spaces

- Note

- Note

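- Properties of norm

- (1) $\|\mathbf{u}\| \ge 0$

- (2) $\|\mathbf{u}\| = 0$ if and only if $\mathbf{u} = \mathbf{0}$

- (3) $\|c\mathbf{u}\| = |c|\,\|\mathbf{u}\|$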

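- Properties of distance

- (1) $d(\mathbf{u}, \mathbf{v}) \ge 0$

- (2) $d(\mathbf{u}, \mathbf{v}) = 0$ if and only if $\mathbf{u} = \mathbf{v}$

- (3) $d(\mathbf{u}, \mathbf{v}) = d(\mathbf{v}, \mathbf{u})$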
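
The norm and distance properties listed above can be spot-checked numerically. This sketch assumes the Euclidean inner product on $\mathbb{R}^3$ as the example inner product, with $\|\mathbf{u}\| = \sqrt{\langle \mathbf{u}, \mathbf{u} \rangle}$ and $d(\mathbf{u}, \mathbf{v}) = \|\mathbf{u} - \mathbf{v}\|$; the sample vectors and scalar are arbitrary, and the helper names are hypothetical.

```python
import math

# Illustrative sketch: the Euclidean inner product is an assumed example.

def inner(u, v):
    """Euclidean inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """||u|| = sqrt(<u, u>)."""
    return math.sqrt(inner(u, u))

def distance(u, v):
    """d(u, v) = ||u - v||."""
    return norm(tuple(a - b for a, b in zip(u, v)))

u, v, c = (1.0, -2.0, 2.0), (4.0, 2.0, 2.0), -3.0
assert norm(u) >= 0 and norm((0.0, 0.0, 0.0)) == 0                   # norm (1), (2)
assert math.isclose(norm(tuple(c * x for x in u)), abs(c) * norm(u))  # norm (3)
assert distance(u, v) >= 0 and distance(u, u) == 0                   # distance (1), (2)
assert distance(u, v) == distance(v, u)                              # distance (3)
print(distance(u, v))  # 5.0
```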

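- Note

- If $\mathbf{v}$ is a unit vector, then $\langle \mathbf{v}, \mathbf{v} \rangle = \|\mathbf{v}\|^2 = 1$. The formula for the orthogonal projection of $\mathbf{u}$ onto $\mathbf{v}$ then takes the following simpler form: $\operatorname{proj}_{\mathbf{v}}\mathbf{u} = \langle \mathbf{u}, \mathbf{v} \rangle \mathbf{v}$.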
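
A sketch comparing the two forms, assuming the Euclidean inner product and the general projection formula $\operatorname{proj}_{\mathbf{v}}\mathbf{u} = \dfrac{\langle \mathbf{u}, \mathbf{v} \rangle}{\langle \mathbf{v}, \mathbf{v} \rangle}\mathbf{v}$ for nonzero $\mathbf{v}$. For a unit vector the two agree up to rounding, and the residual $\mathbf{u} - \operatorname{proj}_{\mathbf{v}}\mathbf{u}$ is orthogonal to $\mathbf{v}$. The helper names are hypothetical.

```python
# Illustrative sketch: Euclidean inner product and helper names are assumptions.

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj(u, v):
    """General orthogonal projection of u onto a nonzero v: (<u, v> / <v, v>) v."""
    scale = inner(u, v) / inner(v, v)
    return tuple(scale * x for x in v)

def proj_unit(u, v):
    """Simpler form valid when ||v|| = 1, so that <v, v> = 1: <u, v> v."""
    s = inner(u, v)
    return tuple(s * x for x in v)

u = (6.0, 2.0, 4.0)
v = (0.6, 0.8, 0.0)  # a unit vector (up to floating-point rounding)
p_general = proj(u, v)
p_unit = proj_unit(u, v)
print(p_unit)  # approximately (3.12, 4.16, 0.0)

residual = tuple(a - b for a, b in zip(u, p_general))
print(inner(residual, v))  # approximately 0: the residual is orthogonal to v
```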

5.3 Orthonormal Bases: Gram-Schmidt Process

- Note

- If an orthogonal (or orthonormal) set S is also a basis, then it is called an orthogonal basis (or an orthonormal basis).
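
A sketch of the Gram-Schmidt process that turns a linearly independent list into an orthonormal basis of the same span. It assumes the Euclidean inner product and uses the classical form (subtract the projection of each original vector onto every orthonormal vector found so far, then normalize); `gram_schmidt` and the tolerance are hypothetical choices.

```python
import math

# Illustrative sketch: classical Gram-Schmidt with an assumed Euclidean inner product.

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list spanning the same subspace as `vectors`."""
    basis = []
    for v in vectors:
        w = list(v)
        # Subtract the projection <v, e> e onto each orthonormal vector e so far.
        for e in basis:
            c = inner(v, e)
            w = [wi - c * ei for wi, ei in zip(w, e)]
        length = math.sqrt(inner(w, w))
        if length < tol:
            raise ValueError("input vectors are not linearly independent")
        basis.append(tuple(wi / length for wi in w))  # normalize
    return basis

B = gram_schmidt([(1, 1, 0), (1, 2, 0), (0, 1, 2)])
print(B)  # approximately (0.707, 0.707, 0), (-0.707, 0.707, 0), (0, 0, 1)
```

In floating point the "modified" variant (projecting the running `w` instead of `v`) is usually more stable for long lists; for a short illustration the classical form is easier to match against hand calculations.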

5.4 Mathematical Models and Least Squares Analysis

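- Orthogonal complement of W

Let W be a subspace of an inner product space V. (a) A vector $\mathbf{u}$ in V is said to be orthogonal to W if $\mathbf{u}$ is orthogonal to every vector in W. (b) The set of all vectors in V that are orthogonal to W is called the orthogonal complement of W, written $W^{\perp} = \{\mathbf{v} \in V : \langle \mathbf{v}, \mathbf{w} \rangle = 0 \text{ for all } \mathbf{w} \in W\}$.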
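
Membership in $W^{\perp}$ can be tested against a spanning set of W instead of every vector of W: by linearity of the inner product, a vector orthogonal to each spanning vector is orthogonal to all their linear combinations. A sketch assuming the Euclidean inner product on $\mathbb{R}^3$; the function name and tolerance are hypothetical.

```python
# Illustrative sketch: Euclidean inner product and names are assumptions.

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def in_orthogonal_complement(u, spanning_set, tol=1e-12):
    """True if u is orthogonal to every vector of W = span(spanning_set).

    By linearity of the inner product, testing u against a spanning set of W
    is enough to conclude that u is orthogonal to every vector in W.
    """
    return all(abs(inner(u, w)) <= tol for w in spanning_set)

W_span = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # W = the xy-plane in R^3
print(in_orthogonal_complement((0.0, 0.0, 5.0), W_span))  # True: on the z-axis
print(in_orthogonal_complement((1.0, 0.0, 5.0), W_span))  # False
```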

- Notes

- Notes

- Ex

- Notes

- (1) Among all the scalar multiples of a vector $\mathbf{u}$, the orthogonal projection of $\mathbf{v}$ onto $\mathbf{u}$ is the one that is closest to $\mathbf{v}$.

- (2) Among all the vectors in the subspace W, the vector $\operatorname{proj}_W \mathbf{v}$ is the closest vector to $\mathbf{v}$.
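
Note (1) can be illustrated by brute force: compute the projection scalar $c^* = \langle \mathbf{v}, \mathbf{u} \rangle / \langle \mathbf{u}, \mathbf{u} \rangle$, then compare $d(\mathbf{v}, c^*\mathbf{u})$ with the distance to many other multiples $c\,\mathbf{u}$. The vectors, the grid of scalars, and the helper names are arbitrary assumptions for the demonstration; a grid search only illustrates the claim, it does not prove it.

```python
import math

# Illustrative sketch: Euclidean inner product, sample vectors, and grid are assumptions.

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def dist(u, v):
    """d(u, v) = ||u - v||."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def scaled(c, u):
    return tuple(c * x for x in u)

v, u = (2.0, 3.0), (4.0, 1.0)

# Scalar of the orthogonal projection: proj_u v = (<v, u> / <u, u>) u.
c_star = inner(v, u) / inner(u, u)
d_star = dist(v, scaled(c_star, u))

# Compare with other multiples c * u for c in [-3, 3] in steps of 0.01.
grid = [k / 100 for k in range(-300, 301)]
closest_on_grid = min(dist(v, scaled(c, u)) for c in grid)
print(c_star, d_star, closest_on_grid)  # d_star is never larger than closest_on_grid
```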

- The four fundamental subspaces of the matrix A

- $N(A)$: nullspace of $A$

- $N(A^T)$: nullspace of $A^T$

- $R(A)$: column space of $A$

- $R(A^T)$: column space of $A^T$

- Least squares problem: $A\mathbf{x} = \mathbf{b}$ (a system of linear equations)

- (1) When the system is consistent, we can use Gaussian elimination with back-substitution to solve for $\mathbf{x}$.

- (2) When the system is inconsistent, how do we find the best possible solution of the system? That is, we want the value of $\mathbf{x}$ for which the difference between $A\mathbf{x}$ and $\mathbf{b}$ is as small as possible.

- Least squares solution

- Given a system $A\mathbf{x} = \mathbf{b}$ of m linear equations in n unknowns, the least squares problem is to find a vector $\mathbf{x}$ in $\mathbb{R}^n$ that minimizes $\|A\mathbf{x} - \mathbf{b}\|$ with respect to the Euclidean inner product on $\mathbb{R}^m$. Such a vector is called a least squares solution of $A\mathbf{x} = \mathbf{b}$.

- Note

- The problem of finding the least squares solution of $A\mathbf{x} = \mathbf{b}$ is equivalent to the problem of finding an exact solution of the associated normal system $A^TA\mathbf{x} = A^T\mathbf{b}$.
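
Forming the normal system only needs a transpose and two matrix products. The sketch below uses a hypothetical inconsistent system (fitting a line $y = c_0 + c_1 t$ through the made-up points $(1, 0)$, $(2, 1)$, $(3, 3)$, which are not collinear) and builds $A^TA$ and $A^T\mathbf{b}$. Matrices are lists of rows and the helper names are hypothetical.

```python
# Illustrative sketch: hypothetical data and helper names; matrices are lists of rows.

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def normal_system(A, b):
    """Coefficient matrix A^T A and right-hand side A^T b of the normal system."""
    At = transpose(A)
    b_col = [[bi] for bi in b]
    return matmul(At, A), [row[0] for row in matmul(At, b_col)]

# Inconsistent system A x = b from fitting y = c0 + c1 * t to (1, 0), (2, 1), (3, 3).
A = [[1, 1],
     [1, 2],
     [1, 3]]
b = [0, 1, 3]
AtA, Atb = normal_system(A, b)
print(AtA)  # [[3, 6], [6, 14]]
print(Atb)  # [4, 11]
```

Although $A\mathbf{x} = \mathbf{b}$ has no solution here, the $2 \times 2$ normal system does, as the theorem that follows states.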

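- Thm

- For any linear system $A\mathbf{x} = \mathbf{b}$, the associated normal system $A^TA\mathbf{x} = A^T\mathbf{b}$ is consistent, and all solutions of the normal system are least squares solutions of $A\mathbf{x} = \mathbf{b}$. Moreover, if W is the column space of A and $\mathbf{x}$ is any least squares solution of $A\mathbf{x} = \mathbf{b}$, then the orthogonal projection of $\mathbf{b}$ on W is $\operatorname{proj}_W \mathbf{b} = A\mathbf{x}$.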

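- Thm

- If A is an $m \times n$ matrix with linearly independent column vectors, then for every $m \times 1$ matrix $\mathbf{b}$, the linear system $A\mathbf{x} = \mathbf{b}$ has a unique least squares solution. This solution is given by $\mathbf{x} = (A^TA)^{-1}A^T\mathbf{b}$. Moreover, if W is the column space of A, then the orthogonal projection of $\mathbf{b}$ on W is $\operatorname{proj}_W \mathbf{b} = A\mathbf{x} = A(A^TA)^{-1}A^T\mathbf{b}$.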
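
A sketch of the whole procedure: solve the normal system by Gaussian elimination with back-substitution (rather than forming $(A^TA)^{-1}$ explicitly, a common practical substitute), then compute $\operatorname{proj}_W \mathbf{b} = A\mathbf{x}$ and check that the residual $\mathbf{b} - A\mathbf{x}$ is orthogonal to every column of A. It reuses the same hypothetical line-fitting data as above and uses Python's `fractions.Fraction` so the arithmetic is exact. The helper names are hypothetical, and `solve` assumes its matrix is invertible, which holds for $A^TA$ when A has linearly independent columns.

```python
from fractions import Fraction

# Illustrative sketch: helper names and data are hypothetical.

def transpose(M):
    return [list(col) for col in zip(*M)]

def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

def solve(M, y):
    """Solve the square system M x = y by Gaussian elimination and back-substitution.

    Assumes M is invertible; Fractions keep every step exact.
    """
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(yi)] for row, yi in zip(M, y)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            aug[r] = [a - factor * p for a, p in zip(aug[r], aug[col])]
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x

def least_squares(A, b):
    """Unique least squares solution of A x = b via A^T A x = A^T b."""
    At = transpose(A)  # rows of At are the columns of A
    AtA = [[sum(p * q for p, q in zip(ci, cj)) for cj in At] for ci in At]
    return solve(AtA, matvec(At, b))

A = [[1, 1], [1, 2], [1, 3]]
b = [0, 1, 3]
x = least_squares(A, b)
proj_b = matvec(A, x)  # proj_W b = A x, with W the column space of A
residual = [bi - pi for bi, pi in zip(b, proj_b)]
print(x)                               # [Fraction(-5, 3), Fraction(3, 2)]
print(proj_b)                          # [Fraction(-1, 6), Fraction(4, 3), Fraction(17, 6)]
print(matvec(transpose(A), residual))  # [Fraction(0, 1), Fraction(0, 1)]
```

For these points the fitted line is $y = -\tfrac{5}{3} + \tfrac{3}{2}t$, and the zero vector in the last line confirms that $\mathbf{b} - \operatorname{proj}_W \mathbf{b}$ lies in $W^{\perp}$.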

5.5 Applications of Inner Product Spaces

- Note: $C[a, b]$ is the inner product space of all continuous functions on $[a, b]$.
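
A sketch of working with $C[a, b]$ numerically, assuming the standard inner product $\langle f, g \rangle = \int_a^b f(x)\,g(x)\,dx$. The integral is approximated with composite Simpson's rule, a numerical technique swapped in here for illustration; `inner_C`, `norm_C`, and the default `n` are hypothetical choices.

```python
import math

# Illustrative sketch: assumes <f, g> = integral of f*g over [a, b];
# Simpson's rule and the helper names are choices made for this example.

def inner_C(f, g, a, b, n=1000):
    """Approximate <f, g> = integral_a^b f(x) g(x) dx with composite Simpson's rule.

    n is the (even) number of subintervals.
    """
    if n % 2:
        raise ValueError("n must be even for Simpson's rule")
    h = (b - a) / n
    total = f(a) * g(a) + f(b) * g(b)
    for k in range(1, n):
        x = a + k * h
        total += (4 if k % 2 else 2) * f(x) * g(x)  # Simpson weights 4, 2, 4, ...
    return total * h / 3

def norm_C(f, a, b):
    """||f|| = sqrt(<f, f>) in C[a, b]."""
    return math.sqrt(inner_C(f, f, a, b))

print(inner_C(lambda x: 1.0, lambda x: x, 0.0, 1.0))  # about 0.5
print(inner_C(math.sin, math.cos, -math.pi, math.pi))  # about 0: sin and cos are orthogonal
print(norm_C(lambda x: x, 0.0, 1.0))                   # about 1 / sqrt(3)
```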
