Partitioning Algorithms: Basic Concepts - PowerPoint PPT Presentation

1

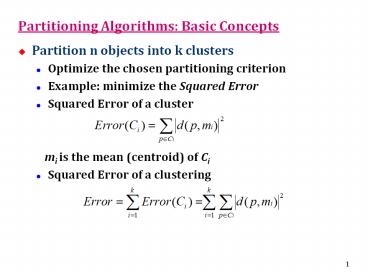

Partitioning Algorithms: Basic Concepts

- Partition n objects into k clusters

- Optimize the chosen partitioning criterion

- Example: minimize the squared error

- Squared error of a cluster: $Error(C_i) = \sum_{p \in C_i} d(p, m_i)^2$

- $m_i$ is the mean (centroid) of $C_i$

- Squared error of a clustering: $Error = \sum_{i=1}^{k} Error(C_i)$

2

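Example of Squared Error of a Cluster

- $C_i = \{P_1, P_2, P_3\}$, with $P_1 = (3, 7)$, $P_2 = (2, 3)$, $P_3 = (7, 5)$

- $m_i = (4, 5)$

- $d(P_1, m_i)^2 = (3-4)^2 + (7-5)^2 = 5$

- $d(P_2, m_i)^2 = 8$

- $d(P_3, m_i)^2 = 9$

- $Error(C_i) = 5 + 8 + 9 = 22$

[Figure: P1, P2, P3 and the centroid mi plotted on a 0-10 by 0-10 grid]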
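The arithmetic for cluster Ci can be checked with a short script. This is a minimal sketch, assuming Euclidean distance and plain Python tuples; the function name `squared_error` is illustrative and does not come from the slides.

```python
def squared_error(cluster):
    """Return (centroid, sum of squared Euclidean distances to the centroid)."""
    n = len(cluster)
    dims = len(cluster[0])
    # Centroid: coordinate-wise mean of the cluster members.
    centroid = tuple(sum(p[d] for p in cluster) / n for d in range(dims))
    # Squared error: sum of squared distances from each member to the centroid.
    error = sum(
        sum((p[d] - centroid[d]) ** 2 for d in range(dims)) for p in cluster
    )
    return centroid, error


# Points P1, P2, P3 of cluster Ci from the slide.
c_i = [(3, 7), (2, 3), (7, 5)]
centroid, error = squared_error(c_i)
print(centroid, error)  # (4.0, 5.0) 22.0
```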

3

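Example of Squared Error of a Cluster

- $C_j = \{P_4, P_5, P_6\}$, with $P_4 = (4, 6)$, $P_5 = (5, 5)$, $P_6 = (3, 4)$

- $m_j = (4, 5)$

- $d(P_4, m_j)^2 = (4-4)^2 + (6-5)^2 = 1$

- $d(P_5, m_j)^2 = 1$

- $d(P_6, m_j)^2 = (3-4)^2 + (4-5)^2 = 2$

- $Error(C_j) = 1 + 1 + 2 = 4$

[Figure: P4, P5, P6 and the centroid mj plotted on a 0-10 by 0-10 grid]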

4

Partitioning Algorithms: Basic Concepts

- Global optimum: examine all possible partitions

- $k^n$ possible partitions, too expensive!

- Heuristic methods: k-means and k-medoids

- k-means (MacQueen, 1967): each cluster is represented by the center of the cluster

- k-medoids (Kaufman & Rousseeuw, 1987): each cluster is represented by one of the objects (medoid) in the cluster

5

K-means

- Initialization: arbitrarily choose k objects as the initial cluster centers (centroids)

- Iteration, until no change:

- For each object $O_i$, calculate the distances between $O_i$ and the k centroids

- (Re)assign $O_i$ to the cluster whose centroid is the closest to $O_i$

- Update the cluster centroids based on the current assignment
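The steps above can be sketched in a few lines of Python. This is an illustrative sketch rather than a reference implementation: it assumes Euclidean distance, takes the initial centroids as an argument instead of choosing them itself, and keeps the old centroid if a cluster becomes empty. The sample points are hypothetical.

```python
import math


def kmeans(points, centroids, max_iter=100):
    """Batch k-means on tuples; `centroids` holds the initial cluster centers."""
    centroids = [tuple(c) for c in centroids]
    assignment = None
    for _ in range(max_iter):
        # (Re)assign each object to the cluster with the closest centroid.
        new_assignment = [
            min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points
        ]
        if new_assignment == assignment:  # no change: stop iterating
            break
        assignment = new_assignment
        # Update each centroid as the mean of its current members.
        for i in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == i]
            if members:  # assumption: an empty cluster keeps its old centroid
                centroids[i] = tuple(sum(x) / len(members) for x in zip(*members))
    return centroids, assignment


# Hypothetical 2-D data: two well-separated groups.
points = [(1.0, 1.0), (1.0, 2.0), (8.0, 8.0), (9.0, 8.0)]
centroids, assignment = kmeans(points, [points[0], points[2]])
print(centroids, assignment)
```

Passing the initial centroids explicitly makes the run reproducible; a random choice of k objects, as on the slide, could be substituted by the caller.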

6

k-Means Clustering Method

[Figure: one k-means iteration, showing the current clusters with their cluster means, the objects relocated, and the new clusters]

7

Example

- For simplicity, 1-dimensional objects and k = 2

- Objects: 1, 2, 5, 6, 7

- K-means:

- Randomly select 5 and 6 as the initial centroids

- => Two clusters {1, 2, 5} and {6, 7}; meanC1 = 8/3, meanC2 = 6.5

- => {1, 2} and {5, 6, 7}; meanC1 = 1.5, meanC2 = 6

- => No change

- Aggregate dissimilarity = $0.5^2 + 0.5^2 + 1^2 + 1^2 = 2.5$
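The trace of this example can be reproduced with a small script. The sketch below is specific to 1-dimensional data and assumes that no cluster ever becomes empty; the name `kmeans_1d_trace` is illustrative.

```python
def kmeans_1d_trace(objects, centroids):
    """Run 1-D k-means and record (clusters, means) after every iteration."""
    history = []
    while True:
        clusters = [[] for _ in centroids]
        for x in objects:
            # Assign x to the nearest centroid (ties go to the lower index).
            nearest = min(range(len(centroids)), key=lambda i: abs(x - centroids[i]))
            clusters[nearest].append(x)
        means = [sum(c) / len(c) for c in clusters]  # assumes no empty cluster
        history.append((clusters, means))
        if means == centroids:  # centroids unchanged: converged
            return history
        centroids = means


history = kmeans_1d_trace([1, 2, 5, 6, 7], [5, 6])
for clusters, means in history:
    print(clusters, means)

final_clusters, final_means = history[-1]
# Aggregate dissimilarity: sum of squared distances to the final means.
sse = sum((x - m) ** 2 for c, m in zip(final_clusters, final_means) for x in c)
print(sse)  # 2.5
```

The third recorded iteration repeats the second one, which corresponds to the "no change" step in the example.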

8

Variations of k-Means Method

- Aspects of variants of k-means

- Selection of initial k centroids

- E.g., choose k farthest points

- Dissimilarity calculations

- E.g., use Manhattan distance

- Strategies to calculate cluster means

- E.g., update the means incrementally
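Two of these variations are easy to illustrate. The sketch below shows a Manhattan distance function and one possible way to update a 1-dimensional cluster mean incrementally when an object joins or leaves a cluster, without recomputing the mean from scratch. The function names are illustrative assumptions, not taken from the slides.

```python
def manhattan(a, b):
    """Manhattan (city-block) distance between two points."""
    return sum(abs(x - y) for x, y in zip(a, b))


def add_to_mean(mean, count, x):
    """Return (mean, count) of a cluster after the value x joins it."""
    count += 1
    return mean + (x - mean) / count, count


def remove_from_mean(mean, count, x):
    """Return (mean, count) after the value x leaves (needs count > 1)."""
    count -= 1
    return mean - (x - mean) / count, count


print(manhattan((3, 7), (4, 5)))    # 3
print(add_to_mean(1.5, 2, 6))       # (3.0, 3)
print(remove_from_mean(3.0, 3, 6))  # (1.5, 2)
```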

9

Strengths of k-Means Method

- Strength

- Relatively efficient for large datasets

- O(tkn), where n is the number of objects, k is the number of clusters, and t is the number of iterations; normally, k, t << n

- Often terminates at a local optimum

- The global optimum may be found using techniques such as deterministic annealing and genetic algorithms

10

Weakness of k-Means Method

- Weakness

- Applicable only when the mean is defined; then what about categorical data?

- Alternative: k-modes algorithm

- Unable to handle noisy data and outliers

- Alternative: k-medoids algorithm

- Need to specify k, the number of clusters, in advance

- Alternative: hierarchical algorithms

- Alternative: density-based algorithms

11

k-modes Algorithm

- Handling categorical data: k-modes (Huang, 1998)

- Replacing means of clusters with modes

- Given n records in a cluster, the mode is the record made up of the most frequent attribute values

- In the example cluster, mode = (<30, medium, yes, fair)

- Using new dissimilarity measures to deal with categorical objects
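A mode can be computed attribute by attribute. The sketch below is illustrative: the records are hypothetical (the example table from the slide is not part of this transcript), the attribute meanings are assumed, and simple matching is used as one commonly used dissimilarity for categorical records.

```python
from collections import Counter


def cluster_mode(records):
    """Mode of a cluster: the most frequent value of each attribute."""
    return tuple(Counter(column).most_common(1)[0][0] for column in zip(*records))


def simple_matching(a, b):
    """Dissimilarity: number of attributes on which two records differ."""
    return sum(x != y for x, y in zip(a, b))


# Hypothetical records (age, income, student, credit rating); not the slide's data.
records = [
    ("<30", "medium", "yes", "fair"),
    ("<30", "high", "yes", "fair"),
    ("30-40", "medium", "no", "fair"),
    ("<30", "medium", "yes", "excellent"),
]
mode = cluster_mode(records)
print(mode)                               # ('<30', 'medium', 'yes', 'fair')
print(simple_matching(records[1], mode))  # 1
```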

12

A Problem of K-means

- Sensitive to outliers

- Outliers: objects with extremely large (or small) values

- May substantially distort the distribution of the data

[Figure: a cluster with an outlier]
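A tiny numeric illustration of the effect, using hypothetical 1-dimensional values: one extreme value pulls the mean far away from the bulk of the data, while a medoid (the member with the smallest total distance to the others) stays inside it.

```python
values = [1, 2, 3, 4, 100]  # hypothetical cluster; 100 is the outlier

mean = sum(values) / len(values)
# Medoid: the member with the smallest total distance to all members.
medoid = min(values, key=lambda c: sum(abs(c - x) for x in values))

print(mean)    # 22.0
print(medoid)  # 3
```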

13

k-Medoids Clustering Method

- k-medoids: find k representative objects, called medoids

- PAM (Partitioning Around Medoids, 1987)

- CLARA (Kaufman & Rousseeuw, 1990)

- CLARANS (Ng & Han, 1994): randomized sampling

[Figure: cluster representatives under k-means versus k-medoids]

14

PAM (Partitioning Around Medoids) (1987)

- PAM (Kaufman and Rousseeuw, 1987)

- Arbitrarily choose k objects as the initial medoids

- Until no change, do:

- (Re)assign each object to the cluster with the nearest medoid

- Improve the quality of the k medoids (randomly select a non-medoid object $O_{random}$ and compute the total cost of swapping a medoid with $O_{random}$)

- Works for small data sets (e.g., 100 objects in 5 clusters)

- Not efficient for medium and large data sets
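The loop above can be sketched as follows. This is an illustrative sketch under several assumptions: the first k objects serve as the arbitrary initial medoids, the cost is the squared error to the nearest medoid, every (medoid, non-medoid) pair is tried in each round rather than one random candidate, and the objects are distinct. The data are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def total_cost(points, medoids):
    """Each object contributes its squared distance to the nearest medoid."""
    return sum(min(sq_dist(p, m) for m in medoids) for p in points)


def pam(points, k):
    medoids = list(points[:k])  # arbitrary initial medoids: the first k objects
    cost = total_cost(points, medoids)
    while True:
        best_swap, best_cost = None, cost
        # Try every (medoid, non-medoid) pair and remember the best swap.
        for i in range(k):
            for h in points:
                if h in medoids:
                    continue
                candidate = medoids[:i] + [h] + medoids[i + 1:]
                c = total_cost(points, candidate)
                if c < best_cost:
                    best_swap, best_cost = candidate, c
        if best_swap is None:  # no swap lowers the cost: stop
            break
        medoids, cost = best_swap, best_cost
    # Assign each object to the cluster of its nearest medoid.
    labels = [min(range(k), key=lambda i: sq_dist(p, medoids[i])) for p in points]
    return medoids, labels, cost


# Hypothetical data: two small groups.
points = [(1, 1), (2, 1), (1, 2), (8, 8), (9, 8), (8, 9)]
medoids, labels, cost = pam(points, 2)
print(medoids, labels, cost)  # [(1, 1), (8, 8)] [0, 0, 0, 1, 1, 1] 4
```

Every round recomputes the full cost for each candidate swap, which is simple to read but expensive; this is consistent with the remark that PAM is practical only for small data sets.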

15

Swapping Cost

- For each pair of a medoid m and a non-medoid object h, measure whether h is better than m as a medoid

- Use the squared-error criterion

- Compute $E_h - E_m$

- Negative: swapping brings benefit

- Choose the minimum swapping cost
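The swapping cost can be computed directly as the difference between the squared error after and before the swap. The sketch below assumes squared Euclidean distance and hypothetical data; `swap_cost` is an illustrative name.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def squared_error(points, medoids):
    """Sum of squared distances from each object to its nearest medoid."""
    return sum(min(sq_dist(p, m) for m in medoids) for p in points)


def swap_cost(points, medoids, m, h):
    """E_h - E_m: change in squared error if medoid m is replaced by h."""
    swapped = [h if x == m else x for x in medoids]
    return squared_error(points, swapped) - squared_error(points, medoids)


points = [(1, 1), (2, 1), (1, 2), (8, 8), (9, 8), (8, 9)]  # hypothetical data
medoids = [(1, 1), (2, 1)]
costs = {
    (m, h): swap_cost(points, medoids, m, h)
    for m in medoids
    for h in points
    if h not in medoids
}
best_pair = min(costs, key=costs.get)  # the swap with the minimum cost
print(best_pair, costs[best_pair])     # ((2, 1), (8, 8)) -280
```

A negative value means the swap lowers the squared error, so the pair with the most negative cost is the one to apply.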

16

Four Swapping Cases

- When a medoid m is to be swapped with a non-medoid object h, check each of the other non-medoid objects j

- j is in the cluster of m => reassign j

- Case 1: j is closer to some k than to h; after swapping m and h, j relocates to the cluster represented by k

- Case 2: j is closer to h than to k; after swapping m and h, j is in the cluster represented by h

- j is in the cluster of some k, not m => compare k with h

- Case 3: j is closer to some k than to h; after swapping m and h, j remains in the cluster represented by k

- Case 4: j is closer to h than to k; after swapping m and h, j is in the cluster represented by h

17

PAM Clustering: Total swapping cost $TC_{mh} = \sum_j C_{jmh}$

[Figure: four panels, Case 1 to Case 4, each showing the positions of j, m, h, and k]
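The four cases can be turned into a per-object contribution $C_{jmh}$. The sketch below is an assumption-laden illustration: the per-case values are derived from the case descriptions (new distance minus old distance), squared Euclidean distance is used, at least two medoids are required, and for simplicity the sum runs over every object (including m and h themselves) so that the total equals the exact change in squared error.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def contribution(j, m, h, medoids):
    """Return (case, C_jmh) for object j when medoid m is swapped with h."""
    others = [k for k in medoids if k != m]  # requires at least two medoids
    d_m, d_h = sq_dist(j, m), sq_dist(j, h)
    d_k = min(sq_dist(j, k) for k in others)  # nearest remaining medoid k
    if d_m <= d_k:               # j currently belongs to the cluster of m
        if d_k <= d_h:
            return 1, d_k - d_m  # Case 1: j relocates to k
        return 2, d_h - d_m      # Case 2: j moves to h
    if d_k <= d_h:
        return 3, 0              # Case 3: j stays with k
    return 4, d_h - d_k          # Case 4: j moves from k to h


def total_swap_cost(points, medoids, m, h):
    """TC_mh: sum of the contributions C_jmh."""
    return sum(contribution(j, m, h, medoids)[1] for j in points)


points = [(1, 1), (2, 1), (1, 2), (8, 8), (9, 8), (8, 9)]  # hypothetical data
medoids = [(1, 1), (2, 1)]
print(contribution((9, 8), (2, 1), (8, 8), medoids))     # (2, -97)
print(total_swap_cost(points, medoids, (2, 1), (8, 8)))  # -280
```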

18

Complexity of PAM

- Arbitrarily choose k objects as the initial medoids: O(1)

- Until no change, do: $O((n-k)^2 k)$ per iteration

- (Re)assign each object to the cluster with the nearest medoid: $O((n-k)k)$

- Improve the quality of the k medoids: $O((n-k)^2 k)$

- For each pair of medoid m and non-medoid object h: $(n-k)k$ times

- Calculate the swapping cost $TC_{mh} = \sum_j C_{jmh}$: $O(n-k)$

19

Strength and Weakness of PAM

- PAM is more robust than k-means in the presence of outliers because a medoid is less influenced by outliers or other extreme values than a mean

- PAM works efficiently for small data sets but does not scale well for large data sets

- $O(k(n-k)^2)$ for each iteration, where n is the number of data objects and k is the number of clusters

- Can we find the medoids faster?

20

CLARA (Clustering Large Applications) (1990)

- CLARA (Kaufman and Rousseeuw, 1990)

- Built into statistical analysis packages, such as S

- It draws multiple samples of the data set, applies PAM to each sample, and gives the best clustering as the output

- Handles larger data sets than PAM (e.g., 1,000 objects in 10 clusters)

- Efficiency and effectiveness depend on the sampling

21

CLARA - Algorithm

- Set mincost to MAXIMUM

- Repeat q times // draws q samples

- Create S by drawing s objects randomly from D

- Generate the set of medoids M from S by applying the PAM algorithm

- Compute cost(M, D)

- If cost(M, D) < mincost

- mincost = cost(M, D)

- bestset = M

- Endif

- Endrepeat

- Return bestset
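The pseudocode above maps to Python fairly directly. The sketch below is illustrative only: `pam` is a compact best-swap stand-in for the PAM algorithm, the cost is the squared error to the nearest medoid, the defaults for `q` and `s` are hypothetical, and the objects are assumed to be distinct.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cost(medoids, data):
    """Sum of squared distances from each object to its nearest medoid."""
    return sum(min(sq_dist(p, m) for m in medoids) for p in data)


def pam(sample, k):
    """Compact PAM stand-in: apply the best improving swap until none is left."""
    medoids = list(sample[:k])
    while True:
        candidates = [
            medoids[:i] + [h] + medoids[i + 1:]
            for i in range(k)
            for h in sample
            if h not in medoids
        ]
        best = min(candidates, key=lambda c: cost(c, sample), default=medoids)
        if cost(best, sample) >= cost(medoids, sample):
            return medoids
        medoids = best


def clara(data, k, q=5, s=40, seed=0):
    """Draw q samples of size s, run PAM on each, keep the best medoid set."""
    rng = random.Random(seed)
    mincost, bestset = float("inf"), None
    for _ in range(q):
        sample = rng.sample(data, min(s, len(data)))  # create S from D
        medoids = pam(sample, k)                      # medoids M found on S
        c = cost(medoids, data)                       # cost(M, D) on all of D
        if c < mincost:
            mincost, bestset = c, medoids
    return bestset, mincost


# Hypothetical data: two groups of five objects each on a line.
data = [(i, 0) for i in range(5)] + [(i, 0) for i in range(100, 105)]
bestset, mincost = clara(data, k=2, q=3, s=6, seed=1)
print(bestset, mincost)
```

The medoids are searched for on the sample only, but each candidate set is scored on the whole data set, which is what lets the best of the q runs be compared fairly.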

22

Complexity of CLARA

- Set mincost to MAXIMUM: O(1)

- Repeat q times: $O((s-k)^2 k + (n-k)k)$ per repetition

- Create S by drawing s objects randomly from D: O(1)

- Generate the set of medoids M from S by applying the PAM algorithm: $O((s-k)^2 k)$

- Compute cost(M, D): $O((n-k)k)$

- If cost(M, D) < mincost: O(1)

- mincost = cost(M, D)

- bestset = M

- Endif

- Endrepeat

- Return bestset

23

Strengths and Weaknesses of CLARA

- Strength

- Handles larger data sets than PAM (e.g., 1,000 objects in 10 clusters)

- Weakness

- Efficiency depends on the sample size

- A good clustering based on samples will not necessarily represent a good clustering of the whole data set if the sample is biased

24

CLARANS (Randomized CLARA) (1994)

- CLARANS (A Clustering Algorithm based on Randomized Search) (Ng and Han, 1994)

- CLARANS draws samples in the solution space dynamically

- A solution is a set of k medoids

- The solution space contains $\binom{n}{k}$ solutions in total

- The solution space can be represented by a graph where every node is a potential solution, i.e., a set of k medoids

25

Graph Abstraction

- Every node is a potential solution (a set of k medoids)

- Every node is associated with a squared error

- Two nodes are adjacent if they differ by one medoid

- Every node has k(n-k) adjacent nodes

[Figure: a node {O1, O2, ..., Ok} and its neighbors; n-k neighbors for one medoid, k(n-k) neighbors for one node]
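The neighbor count can be checked by enumerating the adjacent nodes of a small example. In this sketch the objects are represented by hypothetical integer ids, a node is a frozenset of k medoid ids, and `math.comb` gives the number of k-element subsets of n objects, i.e., the number of nodes in the graph.

```python
import math


def neighbors(node, objects):
    """All nodes that differ from `node` in exactly one medoid."""
    node = frozenset(node)
    non_medoids = [o for o in objects if o not in node]
    # Replace one medoid m by one non-medoid h.
    return [(node - {m}) | {h} for m in node for h in non_medoids]


n, k = 6, 2
objects = list(range(1, n + 1))  # hypothetical object ids 1..6
node = {1, 2}                    # current set of medoids
adjacent = neighbors(node, objects)

print(len(adjacent), k * (n - k))  # 8 8
print(math.comb(n, k))             # 15
```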

26

Graph Abstraction: CLARANS

- Start with a randomly selected node and check at most m neighbors randomly

- If a better adjacent node is found, move to that node and continue; otherwise, the current node is a local optimum, and the search restarts from another randomly selected node to look for another local optimum

- When h local optima have been found, return the best result as the overall result

27

CLARANS

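[Figure: CLARANS search; the current node is compared with neighbors no more than maxneighbor times, the search moves whenever a neighbor's cost is lower (<), and the best node is kept]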

28

CLARANS - Algorithm

- Set mincost to MAXIMUM

- For i = 1 to h do // find h local optima

- Randomly select a node as the current node C in the graph

- J = 1 // counter of neighbors

- Repeat

- Randomly select a neighbor N of C

- If Cost(N, D) < Cost(C, D)

- Assign N as the current node C

- J = 1

- Else J++

- Endif

- Until J > m

- Update mincost (and bestnode) with Cost(C, D) if applicable

- End For

- Return bestnode
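A Python rendering of the pseudocode above might look like the following sketch. The parameter names `numlocal` and `maxneighbor` stand for the slide's h and m, the default values are hypothetical, the cost is the squared error to the nearest medoid, and the data set is assumed to hold more than k distinct objects.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cost(node, data):
    """Sum of squared distances from each object to its nearest medoid."""
    return sum(min(sq_dist(p, m) for m in node) for p in data)


def clarans(data, k, numlocal=2, maxneighbor=20, seed=0):
    rng = random.Random(seed)
    mincost, bestnode = float("inf"), None
    for _ in range(numlocal):          # find `numlocal` local optima
        current = rng.sample(data, k)  # random starting node
        current_cost = cost(current, data)
        j = 1                          # counter of neighbors examined
        while j <= maxneighbor:
            # Random neighbor: replace one medoid by one non-medoid object.
            i = rng.randrange(k)
            h = rng.choice([p for p in data if p not in current])
            neighbor = current[:i] + [h] + current[i + 1:]
            neighbor_cost = cost(neighbor, data)
            if neighbor_cost < current_cost:
                current, current_cost = neighbor, neighbor_cost
                j = 1                  # restart the neighbor counter
            else:
                j += 1
        if current_cost < mincost:     # keep the best local optimum so far
            mincost, bestnode = current_cost, current
    return bestnode, mincost


# Hypothetical data: two groups of five objects each on a line.
data = [(i, 0) for i in range(5)] + [(i, 0) for i in range(100, 105)]
bestnode, mincost = clarans(data, k=2)
print(bestnode, mincost)
```

Unlike PAM, only a random subset of the neighbors is examined at each node, so a returned node is a local optimum only with respect to the neighbors that happened to be sampled.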

29

Graph Abstraction (k-means, k-modes, k-medoids)

- Each vertex is a set of k representative objects (means, modes, medoids)

- Each iteration produces a new set of k representative objects with lower overall dissimilarity

- Iterations correspond to a hill descent process in a landscape (graph) of vertices

30

Comparison with PAM

- Search for the minimum in the graph (landscape)

- At each step, all adjacent vertices are examined; the one with the deepest descent is chosen as the next set of k medoids

- Search continues until a minimum is reached

- For large n and k values (e.g., n = 1,000, k = 10), examining all k(n-k) adjacent vertices is time consuming; inefficient for large data sets

- CLARANS vs PAM

- For large and medium data sets, it is obvious that CLARANS is much more efficient than PAM

- For small data sets, CLARANS outperforms PAM significantly

32

Comparison with CLARA

- CLARANS vs CLARA

- CLARANS is always able to find clusterings of better quality than those found by CLARA; CLARANS may use much more time than CLARA

- When the time used is the same, CLARANS is still better than CLARA

34

Hierarchies of Co-expressed Genes and Coherent Patterns

The interpretation of co-expressed genes and coherent patterns mainly depends on the domain knowledge

35

A Subtle Situation

- To split or not to split? It's a question.

group A2