Course Outline - PowerPoint PPT Presentation

Title:

Course Outline

Description:

Title: The IC Wall Collaboration between Computer science + Physics Last modified by: bal Document presentation format: Custom Other titles: Times New Roman Arial ... – PowerPoint PPT presentation

Number of Views:40

Avg rating:3.0/5.0

Title: Course Outline

1

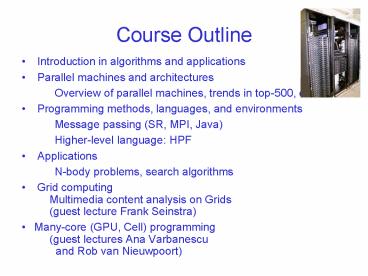

Course Outline

- Introduction in algorithms and applications

- Parallel machines and architectures

- Overview of parallel machines, trends in

top-500, clusters - Programming methods, languages, and environments

- Message passing (SR, MPI, Java)

- Higher-level language HPF

- Applications

- N-body problems, search algorithms

- Grid computing Multimedia content analysis

on Grids (guest lecture Frank Seinstra) - Many-core (GPU, Cell) programming (guest

lectures Ana Varbanescu and Rob van

Nieuwpoort)

2

Parallel Machines

- Parallel Computing Techniques and Applications

UsingNetworked Workstations and Parallel

Computers (2/e) - Section 1.3 (part of) 1.4

- Barry Wilkinson and Michael Allen

- Pearson, 2005

3

Overview

- Processor organizations

- Types of parallel machines

- Processor arrays

- Shared-memory multiprocessors

- Distributed-memory multicomputers

- Cluster computers

- Blue Gene

4

Processor Organization

- Network topology is a graph

- A node is a processor

- An edge is a communication path

- Evaluation criteria

- Diameter (maximum distance)

- Bisection width (minimum number of edges that

should be removed to splitthe graph into 2

-almost- equal halves) - Number of edges per node

5

Key issues in network design

- Bandwidth

- Number of bits transferred per second

- Latency

- Network latency time to make a message transfer

through the network - Communication latency total time to send the

message, including software overhead and

interface delays - Message latency (startup time) time to send a

zero-length message - Diameter influences latency

- Bisection width influences bisection bandwidth

(collective bandwidth over the removed edges)

6

Mesh

- q-dimensional lattice

- q2 -gt 2-D grid

- Number of nodes k² (k SQRT(p))

- Diameter 2(k - 1)

- Bisection width k

- Edges per node 4

7

Binary Tree

- Number of nodes 2k 1 (k 2LOG(P))

- Diameter 2 (k -1)

- Bisection width 1

- Edges per node 3

8

Hypertree

- Tree with multiple roots, gives better bisection

width - 4-ary tree

- Number of nodes 2k ( 2 k1 - 1)

- Diameter 2k

- Bisection width 2 k1

- Edges per node 6

9

Engineering solution fat tree

- Tree with more bandwidth at links near the root

- CM-5

- CM-5

10

Hypercube

- k-dimensional cube, each node has binary value,

nodes that differ in 1 bit are connected - Number of nodes 2k

- Diameter k

- Bisection width 2k-1

- Edges per node k

11

Hypercube

- Label nodes with binary value, connect nodes that

differ in 1 coordinate - Number of nodes 2k

- Diameter k

- Bisection width 2k-1

- Edges per node k

12

Comparison

Mesh Tree Hypercube

Diameter o

Bisection width o -

edges 4 3 unlimited

13

Types of parallel machines

- Processor arrays

- Shared-memory multiprocessors

- Distributed-memory multicomputers

14

Processor Arrays

- Instructions operate on scalars or vectors

- Processor array front-end synchronized

processing elements

15

Processor Arrays

- Front-end

- Sequential machine that executes program

- Vector operations are broadcast to PEs

- Processing element

- Performs operation on its part of the vector

- Communicates with other PEs through a network

16

Examples of Processor Arrays

- CM-200, Maspar MP-1, MP-2, ICL DAP (1970s)

- Earth Simulator (Japan, 2002, former 1 of

top-500) - Ideas are now applied in GPUs and CPU-extensions

like MMX

17

Shared-Memory Multiprocessors

- Bus easily gets saturated gt add caches to CPUs

- Central problem cache coherency

- Snooping cache monitor bus, invalidate copy on

write - Write-through or copy-back

- Bus-based multiprocessors do not scale

18

Other Multiprocessor Designs (1/2)

- Switch-based multiprocessors (e.g., crossbar)

- Expensive (requires many very fast components)

19

Other Multiprocessor Designs (2/2)

- Non-Uniform Memory Access (NUMA) multiprocessors

- Memory is distributed

- Some memory is faster toaccess than other memory

- Example

- Teras at Sara, Dutch NationalSupercomputer

(1024-node SGI) - Ideas now applied in multi-cores

20

Distributed-Memory Multicomputers

- Each processor only has a local memory

- Processors communicate by sending messages over a

network - Routing of messages

- Packet-switched message routing split message

into packets, buffered at intermediate nodes - Store-and-forward

- Circuit-switched message routing establish path

between source and destination

21

Packet-switched Message Routing

- Messages are forwarded one node at a time

- Forwarding is done in software

- Every processor on path from source to

destination is involved - Latency linear to distance x message length

- Old examples Parsytec GCel (T800 transputers),

Intel Ipsc

22

Circuit-switched Message Routing

- Each node has a routing module

- Circuit set up between source and destination

- Latency linear to distance message length

- Example Intel iPSC/2

23

Modern routing techniques

- Circuit switching needs to reserve all links in

the path (cf. old telephone system) - Packet switching high latency, buffering space

(cf. postal mail) - Cut-through routing packet switching, but

immediately forward (without buffering) packets

if outgoing link is available - Wormhole routing transmit head (few bits) of

message, rest follows like a worm

24

Performance

Packet switching

Network latency

Wormhole routingCircuit switching

Distance (number of hops)

25

Distributed Shared Memory

- Shared memory is easier to program, but doesnt

scale - Distributed memory is hard to program, but does

scale - Distributed Shared Memory (DSM) provide

shared-memory programming model on top of

distributed memory hardware - Shared Virtual Memory (SVM) use memory

management hardware (paging), copy pages over the

network - Object-based provide replicated shared objects

(Orca language) - Was hot research topic in 1990s, but performance

remained the bottleneck

26

Flynn's Taxonomy

Single Data Multiple Data

Single Instruction SISD SIMD

Multiple Instruction MISD MIMD

- Instruction stream sequence of instructions

- Data stream sequence of data manipulated by

instructions

27

Flynn's Taxonomy

Single Data Multiple Data

Single Instruction SISD SIMD

Multiple Instruction MISD MIMD

SISD Single Instruction Single

Data Traditional uniprocessors SIMD Single

Instruction Multiple Data Processor arrays MISD

Multiple Instruction Single Data Nonexistent? MIM

D Multiple Instruction Multiple Data

Multiprocessors and multicomputers