Question Answering - PowerPoint PPT Presentation

Title:

Question Answering

Description:

Question Answering Lecture 1 (two weeks ago): Introduction; History of QA; Architecture of a QA system; Evaluation. Lecture 2 (last week): Question Classification ... – PowerPoint PPT presentation

1

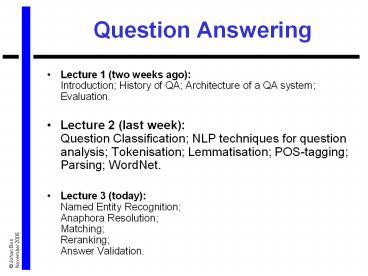

Question Answering

- Lecture 1 (two weeks ago): Introduction; History of QA; Architecture of a QA system; Evaluation.

- Lecture 2 (last week): Question Classification; NLP techniques for question analysis (Tokenisation, Lemmatisation, POS-tagging, Parsing, WordNet).

- Lecture 3 (today): Named Entity Recognition; Anaphora Resolution; Matching; Reranking; Answer Validation.

2

The Panda

3

A panda

- A panda walks into a cafe.

- He orders a sandwich, eats it, then draws a gun and fires two shots in the air.

4

A panda

- "Why?" asks the confused waiter, as the panda makes towards the exit.

- The panda produces a dictionary and tosses it over his shoulder.

- "I am a panda," he says. "Look it up."

5

The panda's dictionary

- Panda. Large black-and-white bear-like mammal, native to China. Eats, shoots and leaves.

6

Ambiguities

- Eats, shoots and leaves.

- Tags: VBZ VBZ CC VBZ

7

Ambiguities

- Eats shoots and leaves.

- Tags: VBZ NNS CC NNS
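The two taggings on these slides can be enumerated mechanically. The following is a minimal Python sketch, assuming a hand-written toy lexicon (`LEXICON` is made up for this illustration and is not taken from any real tagger) that lists the Penn Treebank tags each word may take:

```python
from itertools import product

# Toy lexicon (an assumption for illustration): possible tags per word.
LEXICON = {
    "eats": ["VBZ"],
    "shoots": ["VBZ", "NNS"],
    "and": ["CC"],
    "leaves": ["VBZ", "NNS"],
}

def tag_sequences(tokens):
    """Return every tag sequence the toy lexicon allows for the tokens."""
    options = [LEXICON[token.lower()] for token in tokens]
    return [tuple(tags) for tags in product(*options)]

readings = tag_sequences(["Eats", "shoots", "and", "leaves"])
for tags in readings:
    print(" ".join(tags))
```

The lexicon licenses four sequences, among them the verb reading (VBZ VBZ CC VBZ) and the noun reading (VBZ NNS CC NNS) shown on the slides. A real tagger has to pick one of them from context, and here the comma is the only cue.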

8

Question Answering

- Lecture 1 (two weeks ago): Introduction; History of QA; Architecture of a QA system; Evaluation.

- Lecture 2 (last week): Question Classification; NLP techniques for question analysis (Tokenisation, Lemmatisation, POS-tagging, Parsing, WordNet).

- Lecture 3 (today): Named Entity Recognition; Anaphora Resolution; Matching; Reranking; Answer Validation.

9

Architecture of a QA system

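[Diagram: the question enters Question Analysis, which produces a query, an answer-type and a question representation. IR runs the query against the corpus and returns documents/passages. Document Analysis turns these into a passage representation. Answer Extraction combines the answer-type, the question representation and the passage representation to produce answers.]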

10

Architecture of a QA system

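[Diagram: the question enters Question Analysis, which produces a query, an answer-type and a question representation. IR runs the query against the corpus and returns documents/passages. Document Analysis turns these into a passage representation. Answer Extraction combines the answer-type, the question representation and the passage representation to produce answers.]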

11

Recall the Answer-Type Taxonomy

- We divided questions according to their expected answer type

- Simple Answer-Type Typology: PERSON, NUMERAL, DATE, MEASURE, LOCATION, ORGANISATION, ENTITY

12

Named Entity Recognition

- In order to make use of the answer types, we need to be able to recognise named entities of the same types in the corpus

- Types: PERSON, NUMERAL, DATE, MEASURE, LOCATION, ORGANISATION, ENTITY

13

Example Text

- Italy's business world was rocked by the announcement last Thursday that Mr. Verdi would leave his job as vice-president of Music Masters of Milan, Inc to become operations director of Arthur Andersen.

14

Named Entity Recognition

- <ENAMEX TYPE="LOCATION">Italy</ENAMEX>'s business world was rocked by the announcement <TIMEX TYPE="DATE">last Thursday</TIMEX> that Mr. <ENAMEX TYPE="PERSON">Verdi</ENAMEX> would leave his job as vice-president of <ENAMEX TYPE="ORGANIZATION">Music Masters of Milan, Inc</ENAMEX> to become operations director of <ENAMEX TYPE="ORGANIZATION">Arthur Andersen</ENAMEX>.
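Once a passage carries this kind of markup, picking out the entities of a given answer type is straightforward. The sketch below is illustrative only: it assumes the tags are written with quoted attributes (`TYPE="..."`), and the helper names `extract_entities` and `entities_of_type` are invented for this example.

```python
import re

# The annotated example sentence, assuming quoted TYPE attributes.
TAGGED = (
    '<ENAMEX TYPE="LOCATION">Italy</ENAMEX>\'s business world was rocked by the '
    'announcement <TIMEX TYPE="DATE">last Thursday</TIMEX> that Mr. '
    '<ENAMEX TYPE="PERSON">Verdi</ENAMEX> would leave his job as vice-president of '
    '<ENAMEX TYPE="ORGANIZATION">Music Masters of Milan, Inc</ENAMEX> to become '
    'operations director of <ENAMEX TYPE="ORGANIZATION">Arthur Andersen</ENAMEX>.'
)

# Matches an opening tag, its TYPE value, the enclosed text, and the same closing tag.
ENTITY_RE = re.compile(r'<(ENAMEX|TIMEX) TYPE="([A-Z]+)">(.*?)</\1>')

def extract_entities(text):
    """Return (type, surface string) pairs in order of appearance."""
    return [(m.group(2), m.group(3)) for m in ENTITY_RE.finditer(text)]

def entities_of_type(text, wanted):
    """Return only the entities whose type equals the expected answer type."""
    return [surface for etype, surface in extract_entities(text) if etype == wanted]

print(extract_entities(TAGGED))
print(entities_of_type(TAGGED, "PERSON"))
```

For a "Who ...?" question with expected answer type PERSON, only `Verdi` survives the filter, which is how the answer-type taxonomy narrows the candidate set.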

15

NER difficulties

- Several types of entities are too numerous to include in dictionaries

- New names turn up every day

- Different forms of same entities in same text

- Brian Jones, Mr. Jones

- Capitalisation

16

NER approaches

- Rule-based approach

- Hand-crafted rules

- Help from databases of known named entities

- Statistical approach

- Features

- Machine learning
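A rule-based recogniser of the kind listed first can be sketched in a few lines. This is a toy illustration under stated assumptions, not a description of any particular system: the gazetteer entries, the title pattern and the function name `rule_based_ner` are all made up for the example sentence.

```python
import re

# Hypothetical database of known named entities (a "gazetteer").
GAZETTEER = {
    "Italy": "LOCATION",
    "Milan": "LOCATION",
    "Arthur Andersen": "ORGANISATION",
}

# Hand-crafted rule: a capitalised word after a title is a PERSON.
TITLE_RE = re.compile(r"\b(?:Mr|Mrs|Ms|Dr)\.\s+([A-Z][a-z]+)")

def rule_based_ner(text):
    """Return (surface string, type) pairs in order of appearance."""
    found = []
    for m in TITLE_RE.finditer(text):
        found.append((m.start(1), m.group(1), "PERSON"))
    for name, label in GAZETTEER.items():
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((m.start(), name, label))
    return [(name, label) for _, name, label in sorted(found)]

TEXT = ("Italy's business world was rocked by the announcement last Thursday "
        "that Mr. Verdi would leave his job as vice-president of Music Masters "
        "of Milan, Inc to become operations director of Arthur Andersen.")
print(rule_based_ner(TEXT))
```

On the example sentence this labels `Milan` as a LOCATION even though it is part of the organisation name "Music Masters of Milan, Inc", which illustrates why dictionaries and simple rules alone fall short and why statistical approaches are used as well.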

17

Anaphora

18

What is anaphora?

- Relation between a pronoun and another element in the same or earlier sentence

- Anaphoric pronouns

- he, she, it, they

- Anaphoric noun phrases

- the country,

- that idiot,

- his hat, her dress

19

Anaphora (pronouns)

- Question: What is the biggest sector in Andorra's economy?

- Corpus: Andorra is a tiny land-locked country in southwestern Europe, between France and Spain. Tourism, the largest sector of its tiny, well-to-do economy, accounts for roughly 80% of the GDP.

- Answer: ?

20

Anaphora (definite descriptions)

- Question: What is the biggest sector in Andorra's economy?

- Corpus: Andorra is a tiny land-locked country in southwestern Europe, between France and Spain. Tourism, the largest sector of the country's tiny, well-to-do economy, accounts for roughly 80% of the GDP.

- Answer: ?

21

Anaphora Resolution

- Anaphora Resolution is the task of finding the antecedents of anaphoric expressions

- Example system

- Mitkov, Evans & Orasan (2002)

- http://clg.wlv.ac.uk/MARS/

22

Anaphora (pronouns)

- Question: What is the biggest sector in Andorra's economy?

- Corpus: Andorra is a tiny land-locked country in southwestern Europe, between France and Spain. Tourism, the largest sector of Andorra's tiny, well-to-do economy, accounts for roughly 80% of the GDP.

- Answer: Tourism
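The effect of resolution on this passage can be imitated with a deliberately naive heuristic. The sketch below is not how MARS or any real resolver works; it simply assumes that the possessive pronoun "its" refers to the first capitalised word of the preceding sentence, and `resolve_its` is a name invented for this illustration.

```python
import re

def resolve_its(text):
    """Replace 'its' with the possessive of the previous sentence's first word."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    resolved, antecedent = [], None
    for sentence in sentences:
        if antecedent:
            sentence = re.sub(r"\bits\b", f"{antecedent}'s", sentence)
        resolved.append(sentence)
        words = sentence.split()
        # Naive assumption: a capitalised sentence-initial word is the subject.
        if words and words[0][:1].isupper():
            antecedent = words[0].strip(",.;:")
    return " ".join(resolved)

PASSAGE = ("Andorra is a tiny land-locked country in southwestern Europe, "
           "between France and Spain. Tourism, the largest sector of its tiny, "
           "well-to-do economy, accounts for roughly 80% of the GDP.")
print(resolve_its(PASSAGE))
```

After the substitution the second sentence mentions "Andorra's ... economy" explicitly, so it can be matched against the question directly. The heuristic breaks as soon as the antecedent is not sentence-initial, which is the gap that dedicated resolvers address.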

23

Architecture of a QA system

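[Diagram: the question enters Question Analysis, which produces a query, an answer-type and a question representation. IR runs the query against the corpus and returns documents/passages. Document Analysis turns these into a passage representation. Answer Extraction combines the answer-type, the question representation and the passage representation to produce answers.]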

24

Matching

- Given a question and an expression with a potential answer, calculate a matching score S = match(Q,A) that indicates how well Q matches A

- Example

- Q: When was Franz Kafka born?

- A1: Franz Kafka died in 1924.

- A2: Kafka was born in 1883.

25

Semantic Matching

- Q: answer(X), franz(Y), kafka(Y), born(E), patient(E,Y), temp(E,X)

- A1: franz(x1), kafka(x1), die(x3), agent(x3,x1), in(x3,x2), 1924(x2)

26

Semantic Matching

- Q: answer(X), franz(Y), kafka(Y), born(E), patient(E,Y), temp(E,X)

- A1: franz(x1), kafka(x1), die(x3), agent(x3,x1), in(x3,x2), 1924(x2)

- Substitution: X = x2

27

Semantic Matching

- Q: answer(x2), franz(Y), kafka(Y), born(E), patient(E,Y), temp(E,x2)

- A1: franz(x1), kafka(x1), die(x3), agent(x3,x1), in(x3,x2), 1924(x2)

- Substitution: Y = x1

28

Semantic Matching

- Q: answer(x2), franz(x1), kafka(x1), born(E), patient(E,x1), temp(E,x2)

- A1: franz(x1), kafka(x1), die(x3), agent(x3,x1), in(x3,x2), 1924(x2)

- Substitution: Y = x1

29

Semantic Matching

- Q: answer(x2), franz(x1), kafka(x1), born(E), patient(E,x1), temp(E,x2)

- A1: franz(x1), kafka(x1), die(x3), agent(x3,x1), in(x3,x2), 1924(x2)

- Match score: 3/6 = 0.50

30

Semantic Matching

- Q: answer(X), franz(Y), kafka(Y), born(E), patient(E,Y), temp(E,X)

- A2: kafka(x1), born(x3), patient(x3,x1), in(x3,x2), 1883(x2)

31

Semantic Matching

- Q: answer(X), franz(Y), kafka(Y), born(E), patient(E,Y), temp(E,X)

- A2: kafka(x1), born(x3), patient(x3,x1), in(x3,x2), 1883(x2)

- Substitution: X = x2

32

Semantic Matching

- Q: answer(x2), franz(Y), kafka(Y), born(E), patient(E,Y), temp(E,x2)

- A2: kafka(x1), born(x3), patient(x3,x1), in(x3,x2), 1883(x2)

- Substitution: Y = x1

33

Semantic Matching

- Q: answer(x2), franz(x1), kafka(x1), born(E), patient(E,x1), temp(E,x2)

- A2: kafka(x1), born(x3), patient(x3,x1), in(x3,x2), 1883(x2)

- Substitution: E = x3

34

Semantic Matching

- Q: answer(x2), franz(x1), kafka(x1), born(x3), patient(x3,x1), temp(x3,x2)

- A2: kafka(x1), born(x3), patient(x3,x1), in(x3,x2), 1883(x2)

- Substitution: E = x3

35

Semantic Matching

- Q: answer(x2), franz(x1), kafka(x1), born(x3), patient(x3,x1), temp(x3,x2)

- A2: kafka(x1), born(x3), patient(x3,x1), in(x3,x2), 1883(x2)

- Match score: 4/6 = 0.67
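The step-by-step substitutions on the preceding slides amount to unifying question predicates with passage predicates and counting how many succeed. The slides do not spell out exactly how the score is counted; the Python sketch below uses one reading that reproduces both 3/6 and 4/6, namely that `answer(X)` counts as matched once X is bound to the candidate answer entity. The greedy strategy without backtracking and the names `unify` and `match_score` are assumptions made for this illustration.

```python
def is_var(term):
    # Question variables are upper case (X, Y, E); passage entities are lower case (x1, x2).
    return term[0].isupper()

def unify(q_args, p_args, bindings):
    """Extend bindings so that q_args equals p_args, or return None on a clash."""
    if len(q_args) != len(p_args):
        return None
    new = dict(bindings)
    for q, p in zip(q_args, p_args):
        if is_var(q):
            if new.setdefault(q, p) != p:
                return None
        elif q != p:
            return None
    return new

def match_score(question, passage, answer_candidate):
    """Greedily match question predicates in order; return (score, bindings)."""
    bindings, matched = {}, 0
    for name, args in question:
        if name == "answer":
            candidates = [("answer", (answer_candidate,))]
        else:
            candidates = [p for p in passage if p[0] == name]
        for _, p_args in candidates:
            new = unify(args, p_args, bindings)
            if new is not None:
                bindings, matched = new, matched + 1
                break
    return matched / len(question), bindings

Q = [("answer", ("X",)), ("franz", ("Y",)), ("kafka", ("Y",)),
     ("born", ("E",)), ("patient", ("E", "Y")), ("temp", ("E", "X"))]
A1 = [("franz", ("x1",)), ("kafka", ("x1",)), ("die", ("x3",)),
      ("agent", ("x3", "x1")), ("in", ("x3", "x2")), ("1924", ("x2",))]
A2 = [("kafka", ("x1",)), ("born", ("x3",)), ("patient", ("x3", "x1")),
      ("in", ("x3", "x2")), ("1883", ("x2",))]

score_a1, bindings_a1 = match_score(Q, A1, "x2")
score_a2, bindings_a2 = match_score(Q, A2, "x2")
print(round(score_a1, 2), bindings_a1)
print(round(score_a2, 2), bindings_a2)
```

With these inputs A1 matches `answer`, `franz` and `kafka`, while A2 matches `answer`, `kafka`, `born` and `patient`, so A2 ranks higher. In both cases `temp(E,X)` stays unmatched because the passages express the date with `in`.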

36

Matching Techniques

- Weighted matching

- Higher weight for named entities

- WordNet

- Hyponyms

- Inference rules

- Example

- BORN(E) ∧ IN(E,Y) ∧ DATE(Y) → TEMP(E,Y)
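The example rule can be applied to a passage representation before matching. The sketch below is a minimal illustration, assuming that a predicate whose name is a four-digit year stands in for DATE(Y); the function name `apply_born_in_date_rule` and the tuple encoding of predicates are choices made for this example.

```python
import re

YEAR_RE = re.compile(r"\d{4}")

def apply_born_in_date_rule(passage):
    """Add temp(E,Y) for every born(E), in(E,Y) where Y is a date (here: a year)."""
    born_events = {args[0] for name, args in passage if name == "born"}
    dates = {args[0] for name, args in passage if YEAR_RE.fullmatch(name)}
    derived = [
        ("temp", args)
        for name, args in passage
        if name == "in" and args[0] in born_events and args[1] in dates
    ]
    return list(passage) + derived

A1 = [("franz", ("x1",)), ("kafka", ("x1",)), ("die", ("x3",)),
      ("agent", ("x3", "x1")), ("in", ("x3", "x2")), ("1924", ("x2",))]
A2 = [("kafka", ("x1",)), ("born", ("x3",)), ("patient", ("x3", "x1")),
      ("in", ("x3", "x2")), ("1883", ("x2",))]

print(apply_born_in_date_rule(A2)[-1])
print(len(apply_born_in_date_rule(A1)) == len(A1))
```

The rule fires for "Kafka was born in 1883" and adds `temp(x3,x2)`, so the question predicate `temp(E,X)` now has something to match. It does not fire for "Franz Kafka died in 1924" because that passage contains no `born` event.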

37

Reranking

38

Reranking

- Most QA systems first produce a list of possible answers

- This is usually followed by a process called reranking

- Reranking promotes correct answers to a higher rank

39

Factors in reranking

- Matching score

- The better the match with the question, the more likely the answer is correct

- Frequency

- If the same answer occurs many times, it is likely to be correct
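The two factors can be combined into a single ranking score. The following sketch is only one possible combination: the additive formula, the `freq_weight` parameter and the candidate scores are all invented for illustration and do not come from the lecture.

```python
from collections import defaultdict

def rerank(candidates, freq_weight=0.5):
    """Rank answers by best matching score plus a weighted relative frequency."""
    best = defaultdict(float)
    count = defaultdict(int)
    for answer, score in candidates:
        best[answer] = max(best[answer], score)
        count[answer] += 1
    total = len(candidates)
    combined = {a: best[a] + freq_weight * count[a] / total for a in best}
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)

# Made-up candidates: (answer string, matching score of the passage it came from).
CANDIDATES = [("1924", 0.60), ("1883", 0.55), ("1883", 0.50), ("1883", 0.50)]
ranking = rerank(CANDIDATES)
print(ranking)
```

In this toy input "1924" has the single best match, but "1883" is extracted three times out of four and ends up first once frequency is taken into account.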

40

Sanity Checking

- Answer should be informative

- Q: Who is Tom Cruise married to?

- A: Tom Cruise

- Q: Where was Florence Nightingale born?

- A: Florence
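One simple way to catch both uninformative answers is to reject any candidate that only repeats words from the question. This is a minimal sketch of that idea, not necessarily the check used in the lecturer's system; `is_informative` is a hypothetical helper name.

```python
import re

def tokens(text):
    """Lower-cased set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))

def is_informative(question, answer):
    """An answer is uninformative if all of its words already occur in the question."""
    return not (tokens(answer) <= tokens(question))

print(is_informative("Who is Tom Cruise married to?", "Tom Cruise"))
print(is_informative("Where was Florence Nightingale born?", "Florence"))
print(is_informative("Where was Florence Nightingale born?", "Italy"))
```

The first two calls reject the answers from the slide. The third candidate passes because it adds a word that is not in the question; whether it is correct is a separate matter for matching and validation.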

41

Answer Validation

- Given a ranked list of answers, some of these might not make sense at all

- Promote answers that make sense

- How?

- Use an even larger corpus!

- Sloppy approach

- Strict approach

42

The World Wide Web

43

Answer validation (sloppy)

- Given a question Q and a set of answers A1 ... An

- For each i, generate query Q Ai

- Count the number of hits for each i

- Choose the Ai with the most hits

- Use existing search engines

- Google, AltaVista

- Magnini et al. 2002 (CCP)

44

Corrected Conditional Probability

- Treat Q and A as a bag of words

- Q: content words of the question

- A: answer

- $$\mathrm{CCP}(Q_{sp}, A_{sp}) = \frac{\mathrm{hits}(A\ \mathrm{NEAR}\ Q)}{\mathrm{hits}(A) \times \mathrm{hits}(Q)}$$

- Accept answers above a certain CCP threshold
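The ratio and the threshold test are easy to express in code once hit counts are available. The sketch below follows the ratio as it appears on the slide and takes the counts as plain numbers, since querying a search engine is outside its scope; the counts, the threshold value and the function names `ccp` and `validate` are invented for illustration.

```python
def ccp(hits_near, hits_a, hits_q):
    """hits(A NEAR Q) / (hits(A) * hits(Q)), with 0.0 when a count is zero."""
    if hits_a == 0 or hits_q == 0:
        return 0.0
    return hits_near / (hits_a * hits_q)

def validate(hit_counts, threshold):
    """Keep the answers whose CCP reaches the threshold."""
    return [
        answer
        for answer, (near, hits_a, hits_q) in hit_counts.items()
        if ccp(near, hits_a, hits_q) >= threshold
    ]

# Made-up counts: answer -> (hits(A NEAR Q), hits(A), hits(Q)).
HIT_COUNTS = {
    "Oklahoma": (120, 1000, 500),
    "Britain": (2, 5000, 500),
}
accepted = validate(HIT_COUNTS, threshold=1e-5)
print(accepted)
```

Dividing by hits(A) keeps very common answer strings from winning on raw co-occurrence alone: in the toy counts "Britain" is far more frequent overall but rarely appears near the question words, so it falls below the threshold.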

45

Answer validation (strict)

- Given a question Q and a set of answers A1 ... An

- Create a declarative sentence with the focus of the question replaced by Ai

- Use the strict search option in Google

- High precision

- Low recall

- Any terms of the target not in the sentence are added to the query

46

Example

- TREC 99.3

- Target: Woody Guthrie.

- Question: Where was Guthrie born?

- Top-5 Answers

- 1) Britain

- 2) Okemah, Okla.

- 3) Newport

- 4) Oklahoma

- 5) New York

47

Example: generate queries

- TREC 99.3

- Target: Woody Guthrie.

- Question: Where was Guthrie born?

- Generated queries

- 1) Guthrie was born in Britain

- 2) Guthrie was born in Okemah, Okla.

- 3) Guthrie was born in Newport

- 4) Guthrie was born in Oklahoma

- 5) Guthrie was born in New York

48

Example: add target words

- TREC 99.3

- Target: Woody Guthrie.

- Question: Where was Guthrie born?

- Generated queries

- 1) Guthrie was born in Britain Woody

- 2) Guthrie was born in Okemah, Okla. Woody

- 3) Guthrie was born in Newport Woody

- 4) Guthrie was born in Oklahoma Woody

- 5) Guthrie was born in New York Woody

49

Example: morphological variants

- TREC 99.3

- Target: Woody Guthrie.

- Question: Where was Guthrie born?

- Generated queries

- Guthrie is OR was OR are OR were born in Britain Woody

- Guthrie is OR was OR are OR were born in Okemah, Okla. Woody

- Guthrie is OR was OR are OR were born in Newport Woody

- Guthrie is OR was OR are OR were born in Oklahoma Woody

- Guthrie is OR was OR are OR were born in New York Woody

50

Example: Google hits

- TREC 99.3

- Target: Woody Guthrie.

- Question: Where was Guthrie born?

- Generated queries

- Guthrie is OR was OR are OR were born in Britain Woody: 0 hits

- Guthrie is OR was OR are OR were born in Okemah, Okla. Woody: 10 hits

- Guthrie is OR was OR are OR were born in Newport Woody: 0 hits

- Guthrie is OR was OR are OR were born in Oklahoma Woody: 42 hits

- Guthrie is OR was OR are OR were born in New York Woody: 2 hits

51

Example: reranked answers

- TREC 99.3

- Target: Woody Guthrie.

- Question: Where was Guthrie born?

- Original answers: 1) Britain 2) Okemah, Okla. 3) Newport 4) Oklahoma 5) New York

- Reranked answers: 4) Oklahoma 2) Okemah, Okla. 5) New York 1) Britain 3) Newport
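The whole worked example (build the declarative query, add missing target words, add morphological variants, rerank by hits) can be replayed in a short Python sketch. The hit counts are the ones from the slides; the sentence template is hard-coded for this one question, no search engine is contacted, and the function names are made up for illustration.

```python
ANSWERS = ["Britain", "Okemah, Okla.", "Newport", "Oklahoma", "New York"]

# Hit counts as reported on the "Google hits" slide.
HITS = {"Britain": 0, "Okemah, Okla.": 10, "Newport": 0, "Oklahoma": 42, "New York": 2}

def missing_target_terms(target, sentence):
    """Target words that do not already occur in the declarative sentence."""
    present = set(sentence.lower().split())
    return [word for word in target.split() if word.lower() not in present]

def build_query(answer, target="Woody Guthrie"):
    """Declarative sentence with verb variants, plus any missing target words."""
    variants = " OR ".join(["is", "was", "are", "were"])
    extra = missing_target_terms(target, f"Guthrie was born in {answer}")
    return f"Guthrie {variants} born in {answer} {' '.join(extra)}".strip()

def rerank_by_hits(answers, hits):
    """Sort by descending hit count; ties keep their original order."""
    return sorted(answers, key=lambda answer: hits[answer], reverse=True)

queries = [build_query(answer) for answer in ANSWERS]
reranked = rerank_by_hits(ANSWERS, HITS)
print(queries[0])
print(reranked)
```

Because Python's sort is stable, the two answers with zero hits keep their original relative order, which reproduces the reranked list on the slide: Oklahoma, Okemah, Okla., New York, Britain, Newport.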

52

Summary

- Introduction to QA

- Typical Architecture, Evaluation

- Types of Questions and Answers

- Use of general NLP techniques

- Tokenisation, POS tagging, Parsing

- NER, Anaphora Resolution

- QA Techniques

- Matching

- Reranking

- Answer Validation

53

Where to go from here

- Producing answers in real-time

- Improve accuracy

- Answer explanation

- User modelling

- Speech interfaces

- Dialogue (interactive QA)

- Multi-lingual QA

54

Video (Robot)