Introduction: Robot Vision Philippe Martinet

1 / 41

Title:

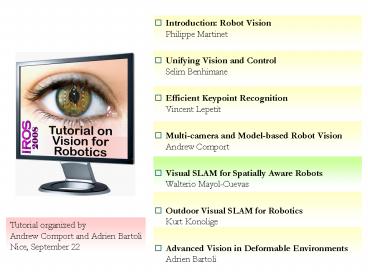

Introduction: Robot Vision Philippe Martinet

Description:

Multi-camera and Model-based Robot Vision. Andrew Comport. Visual SLAM for Spatially Aware Robots ... Andrew Comport and Adrien Bartoli. Nice, September 22 ... – PowerPoint PPT presentation

Number of Views:171

Avg rating:3.0/5.0

Title: Introduction: Robot Vision Philippe Martinet

1

- Introduction: Robot Vision (Philippe Martinet)

- Unifying Vision and Control (Selim Benhimane)

- Efficient Keypoint Recognition (Vincent Lepetit)

- Multi-camera and Model-based Robot Vision (Andrew Comport)

- Visual SLAM for Spatially Aware Robots (Walterio Mayol-Cuevas)

- Outdoor Visual SLAM for Robotics (Kurt Konolige)

- Advanced Vision in Deformable Environments (Adrien Bartoli)

Tutorial organized by Andrew Comport and Adrien Bartoli. Nice, September 22

2

Visual SLAM and Spatial Awareness

- SLAM: Simultaneous Localisation and Mapping.

- An overview of some methods currently used for SLAM using computer vision.

- Recent work on enabling more stable and/or robust mapping in real-time.

- Work aiming to provide better scene understanding in the context of SLAM: Spatial Awareness.

- Here we concentrate on small working areas where GPS, odometry and other traditional sensors are not operational or available.

3

Spatial Awareness

- SA: a key cognitive competence that permits efficient motion and task planning.

- Even from an early age we use spatial awareness: the toy has not vanished, it is behind the sofa.

- I can point to where the entrance to the building is, but can't tell how many doors there are from here to there.

SLAM offers a rigorous way to implement and manage SA.

4

Wearable personal assistants

Video at http://www.robots.ox.ac.uk/ActiveVision/Projects/Vslam/vslam.02/Videos/wearableslam2.mpg

Mayol, Davison and Murray 2003

5

SLAM

- Key historical reference:

- Smith, R.C. and Cheeseman, P. "On the Representation and Estimation of Spatial Uncertainty". The International Journal of Robotics Research 5 (4): 56-68. 1986.

- Proposed a stochastic framework to maintain the relationship (uncertainties) between features in the map.

- "Our knowledge of the spatial relationships among objects is inherently uncertain. A manmade object does not match its geometric model exactly because of manufacturing tolerances. Even if it did, a sensor could not measure the geometric features, and thus locate the object exactly, because of measurement errors. And even if it could, a robot using the sensor cannot manipulate the object exactly as intended, because of hand positioning errors." (Smith, Self and Cheeseman 1986)

6

SLAM

- A problem that has been identified for several years, central in mobile robot navigation and branching into other fields like wearable computing and augmented reality.

7

SLAM: Simultaneous Localisation And Mapping

- Aim to:

- Localise camera (6DOF: rotation and translation from reference view).

- Simultaneously estimate 3D map of features (e.g. 3D points).

Implemented using Extended Kalman Filter, particle filters, SIFT, edgelets, etc.

[Figure labels: camera, camera moved, 3D points (features), perspective projection, predict location, update location, update positions]
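The predict/update cycle named on this slide can be illustrated with a deliberately reduced sketch: a scalar, linear Kalman filter, not the Extended Kalman Filter over camera pose and 3D points that visual SLAM uses. The function names, noise values and measurements below are assumptions chosen for illustration only.

```python
def predict(x, p, u, q):
    """Prediction: shift the estimate by the motion u, inflate variance by process noise q."""
    return x + u, p + q


def update(x, p, z, r):
    """Update: blend the prediction with measurement z (variance r) via the Kalman gain."""
    k = p / (p + r)          # Kalman gain, between 0 and 1
    x_new = x + k * (z - x)  # correct by the weighted innovation
    p_new = (1.0 - k) * p    # variance shrinks after a measurement
    return x_new, p_new


def run_filter(measurements, x0=0.0, p0=1.0, u=1.0, q=0.01, r=0.25):
    """Alternate predict and update over a measurement sequence (all values hypothetical)."""
    x, p = x0, p0
    history = []
    for z in measurements:
        x, p = predict(x, p, u, q)
        x, p = update(x, p, z, r)
        history.append((x, p))
    return history


if __name__ == "__main__":
    for x, p in run_filter([1.1, 1.9, 3.2, 4.0]):
        print(f"estimate={x:.3f} variance={p:.4f}")
```

In the full problem the state is a vector (camera plus features), the variance becomes a covariance matrix, and the measurement model is the perspective projection, linearised at each step.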

8

State representation

as in Davison 2003

9

SLAM with first order uncertainty representation

as in Davison 2003

11

Challenges for visual SLAM

- On the computer vision side, improving data association:

- Ensuring a match is a true positive.

- Representations and parameterizations to enhance mapping while within real-time.

- Alternative frameworks for mapping:

- Can we extend area of operation?

- Better scene understanding.

12

For data association, earlier approach

- Small (e.g. 11x11) image patches around salient points to represent features.

- Normalized Cross Correlation (NCC) to detect features.

- Small patches and accurate search regions lead to fast camera pose estimation.

- Depth by projecting hypothesis at different depths.

See A. Davison, Real-Time Simultaneous Localisation and Mapping with a Single Camera, ICCV 2003.
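As a minimal sketch of patch matching with NCC inside a search region, assuming patches are plain lists of pixel rows and the search region is a list of candidate top-left corners that stay inside the image. The helper names `ncc` and `best_match` are hypothetical and not taken from the cited paper.

```python
import math


def ncc(patch_a, patch_b):
    """Normalized cross correlation of two equally sized patches (lists of rows)."""
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    if len(a) != len(b) or not a:
        raise ValueError("patches must be non-empty and the same size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0.0:
        return 0.0  # flat patch: correlation undefined, treated as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def best_match(template, image, search_region):
    """Score each candidate corner (row, col) and return the best (score, row, col)."""
    h, w = len(template), len(template[0])
    best = (-2.0, None, None)  # NCC lies in [-1, 1], so -2 is below any score
    for r, c in search_region:
        window = [row[c:c + w] for row in image[r:r + h]]
        score = ncc(template, window)
        if score > best[0]:
            best = (score, r, c)
    return best
```

Because the means are subtracted and the result is normalised, the score is unchanged by a gain and offset change in brightness, which is one reason NCC suits small patches. The cost grows with the size of the search region, hence the emphasis on accurate regions.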

13

However

- Simple patches are insufficient for large viewpoint or scale variations.

- Small patches help speed but are prone to mismatch.

- Search regions can't always be trusted (camera occlusion, motion blur).

Possible solutions: use better feature description, or other types of features, e.g. edge information.

14

SIFT (D. Lowe, IJCV 2004)

Find maxima in scale space to locate keypoint.

Around keypoint, build invariant local descriptor using gradient histograms.

15

Chekhlov, Pupilli, Mayol and Calway, ISVC06/CVPR07

- Uses SIFT-like descriptors (histogram of gradients) around Harris corners.

- Get scale from SLAM: predictive SIFT.

16

Chekhlov, Pupilli, Mayol and Calway, ISVC06/CVPR07

Video at http://www.cs.bris.ac.uk/Publications/attachment-delivery.jsp?id9

17

Eade and Drummond, BMVC2006

Video at http://mi.eng.cam.ac.uk/ee231/bmvcmovie.avi

- Edgelets:

- Locally straight section of gradient image.

- Parameterized as 3D point and direction.

- Avoid regions of conflict (e.g. close parallel edges).

- Deal with multiple matches through robust estimation.

18

RANSAC (Fischler and Bolles 1981)

RANdom SAmple Consensus

[Figure labels: RANSAC fit, least squares fit, gross outliers]

- Select random sample of points.

- Propose a model (hypothesis) based on sample.

- Assess fitness of hypothesis to rest of data.

- Repeat until max number of iterations or fitness threshold reached.

- Keep best hypothesis and potentially refine hypothesis with all inliers.
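The steps above can be sketched for the simplest case, fitting a 2D line in the presence of gross outliers. This is a minimal illustration, not the implementation used in any of the cited systems: the tolerance, iteration count and function names are assumptions, and the final refinement over all inliers is left out.

```python
import random


def fit_line(p, q):
    """Line through two points as (a, b, c) with a*x + b*y + c = 0 and a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = (a * a + b * b) ** 0.5
    if norm == 0.0:
        return None  # degenerate sample: the two points coincide
    return a / norm, b / norm, -(a * x1 + b * y1) / norm


def inliers_of(line, points, tol):
    """Points whose perpendicular distance to the line is at most tol."""
    a, b, c = line
    return [pt for pt in points if abs(a * pt[0] + b * pt[1] + c) <= tol]


def ransac_line(points, tol=0.1, max_iters=200, seed=0):
    """Return (best_line, best_inliers) after at most max_iters random hypotheses."""
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(max_iters):
        line = fit_line(*rng.sample(points, 2))  # sample, then propose a model
        if line is None:
            continue
        inliers = inliers_of(line, points, tol)  # assess against all the data
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = line, inliers
        if len(best_inliers) == len(points):     # nothing left to gain: stop early
            break
    return best_line, best_inliers
```

A least squares fit over `best_inliers` would be the natural refinement step; it is safe at that point because the gross outliers have already been excluded.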

19

OK but

- Having rich descriptors or even multiple kinds of features may still lead to wrong data associations (mismatches).

- If we pass to the SLAM system every measurement we think is good, it can be catastrophic.

- Better to be able to recover from failure than to think it won't fail!

20

Williams, Smith and Reid ICRA2007

Use 3 point algorithm -> up to 4 possible poses.

Verify using Matas T(d,d) test.

- Camera relocalization using small 2D patches and RANSAC to compute pose.

- Adds a supervisor between visual measurements and SLAM system.
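A rough sketch of the idea behind the T(d,d) pre-test, under the assumption that it acts as a cheap filter in front of full verification: a pose hypothesis is first checked on d randomly chosen data points and only goes on to the full inlier count if all d agree with it. The function names and the `is_consistent` callback are hypothetical.

```python
import random


def passes_tdd(is_consistent, data, d=1, rng=random):
    """Pre-test: pass only if all d randomly drawn data points agree with the hypothesis."""
    return all(is_consistent(x) for x in rng.sample(data, d))


def verify(is_consistent, data, d=1, rng=random):
    """Return the inlier count, or None when the pre-test rejects the hypothesis."""
    if not passes_tdd(is_consistent, data, d, rng):
        return None  # cheap rejection: the full check is skipped
    return sum(1 for x in data if is_consistent(x))
```

The trade-off is that a good hypothesis is occasionally rejected by an unlucky draw, in exchange for spending far less time on the many bad hypotheses (here, up to 4 candidate poses per 3-point sample).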

21

Williams, Smith and Reid ICRA2007

Video at http://www.robots.ox.ac.uk/ActiveVision/Projects/Vslam/vslam.04/Videos/relocalisation_icra_07.mpg

In brief, while within real-time limit do

Carry on

Also see recent work: Williams, Klein and Reid ICCV2007, using randomised trees rather than simple 2D patches.

22

Relocalisation based on appearance hashing

- Use a hash function to index similar descriptors (Brown et al 2005).

- Fast and memory efficient (only an index needs to be saved per descriptor).

Quantize result of Haar masks

Chekhlov et al 2008

Video at http://www.cs.bris.ac.uk/Publications/pub_master.jsp?id2000939
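One way to picture "quantize result of Haar masks" is the toy index below. It is an assumption-laden sketch rather than the scheme of the cited papers: it uses three Haar-like contrasts on a square patch of 0-255 intensities, five quantisation levels per response, and a dictionary as the hash table. All names are hypothetical.

```python
from collections import defaultdict


def haar_responses(patch):
    """Left-right, top-bottom and diagonal contrast of a square patch, per pixel."""
    n = len(patch)
    half = n // 2

    def block(rows, cols):
        return sum(patch[r][c] for r in rows for c in cols)

    lo, hi = range(half), range(half, n)
    tl, tr = block(lo, lo), block(lo, hi)
    bl, br = block(hi, lo), block(hi, hi)
    area = float(n * n)
    return ((tl + bl - tr - br) / area,
            (tl + tr - bl - br) / area,
            (tl + br - tr - bl) / area)


def hash_key(patch, levels=5, max_abs=127.5):
    """Quantise each response into `levels` bins and pack the bins into one integer."""
    key = 0
    for resp in haar_responses(patch):
        q = int((resp + max_abs) / (2 * max_abs) * levels)
        q = min(max(q, 0), levels - 1)  # clamp the extreme response into the last bin
        key = key * levels + q
    return key


class AppearanceIndex:
    """Hash table from quantised appearance to the ids of features that share it."""

    def __init__(self):
        self.table = defaultdict(list)

    def add(self, feature_id, patch):
        self.table[hash_key(patch)].append(feature_id)

    def candidates(self, patch):
        return list(self.table.get(hash_key(patch), []))
```

Only the integer key is stored per feature, which matches the memory argument on the slide. Lookups return candidates that still need verification, since different patches can share a key.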

23

Parallel Tracking and Mapping

- Klein and Murray, "Parallel Tracking and Mapping for Small AR Workspaces", Proc. International Symposium on Mixed and Augmented Reality, 2007.

- Decouple mapping from tracking, run them in separate threads on multi-core CPU.

- Mapping is based on key-frames, processed using batch Bundle Adjustment.

- Map is initialised from a stereo pair (using 5-Point Algorithm).

- New points are initialised with epipolar search.

- Large numbers (thousands) of points can be mapped in a small workspace.
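The decoupling of tracking from mapping can be sketched as two threads joined by a key-frame queue. This is only a structural illustration with hypothetical names: pose estimation, triangulation and bundle adjustment are replaced by placeholders, and in CPython the global interpreter lock means the sketch shows the hand-off pattern rather than real multi-core speed-up.

```python
import queue
import threading


def tracker(frames, keyframes, every=5):
    """Tracking thread: handles every frame and hands selected ones to the mapper."""
    for i, frame in enumerate(frames):
        # Pose estimation against the current map would happen here.
        if i % every == 0:
            keyframes.put(frame)
    keyframes.put(None)  # sentinel: no more key-frames


def mapper(keyframes, world_map, lock):
    """Mapping thread: consumes key-frames and extends the map."""
    while True:
        kf = keyframes.get()
        if kf is None:
            break
        with lock:
            world_map.append(kf)  # stand-in for new points plus bundle adjustment


def run(frames):
    """Start both threads, wait for them, and return the resulting map."""
    keyframes, world_map, lock = queue.Queue(), [], threading.Lock()
    threads = [
        threading.Thread(target=tracker, args=(frames, keyframes)),
        threading.Thread(target=mapper, args=(keyframes, world_map, lock)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return world_map
```

The key design point is that the tracker never waits for the mapper: it only enqueues key-frames, so slow batch optimisation does not stall per-frame tracking.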

24

Parallel Tracking and Mapping

CPU1

CPU2

Video at http://www.robots.ox.ac.uk/ActiveVision/Videos/index.html

- Klein and Murray, 2007

25

So far we have mentioned that

- Maps are sparse collections of low-level features:

- Points (Davison et al., Chekhlov et al.)

- Edgelets (Eade and Drummond)

- Lines (Smith et al., Gee and Mayol-Cuevas)

- Full correlation between features and camera:

- Maintain full covariance matrix.

- Loop closure: effects of measurements propagated to all features in map.

- Increase in state size limits number of features.
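A small arithmetic sketch of why the state size limits the number of features when a full covariance matrix is maintained. The camera state dimension of 13 and the 3 parameters per point are assumptions made for the example, not figures from these slides.

```python
def ekf_state_size(num_points, camera_dim=13, point_dim=3):
    """Length of the state vector: camera parameters plus all point parameters."""
    return camera_dim + point_dim * num_points


def covariance_entries(num_points, camera_dim=13, point_dim=3):
    """Number of entries in the full (dense) covariance matrix."""
    n = ekf_state_size(num_points, camera_dim, point_dim)
    return n * n


if __name__ == "__main__":
    for n_points in (10, 100, 1000):
        print(n_points, ekf_state_size(n_points), covariance_entries(n_points))
```

The covariance grows with the square of the state size, so multiplying the number of features by ten multiplies the storage, and the cost of each full update, by roughly a hundred.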

26

Commonly in Visual SLAM

- Emphasis on localization and less on the mapping output.

- SLAM should avoid making beautiful maps (there are other better methods for that!).

- Very few examples exist on improving the awareness element, e.g. Castle and Murray BMVC 07 on known object recognition within SLAM.

27

Better spatial awareness through higher level

structural inference

- Types of structure:

- Coplanar points -> planes

- Collinear edgelets -> lines

- Intersecting lines -> junctions

- Our contribution:

- Method for augmenting SLAM map with planar and line structures.

- Evaluation of method in simulated scene: discover trade-off between efficiency and accuracy.

28

Discovering structure within SLAM

Gee, Chekhlov, Calway and Mayol-Cuevas, 2008

29

Plane Representation

Plane parameters: (x,y,z) and basis vectors c(θ1,φ1), c(θ2,φ2)

[Figure labels: camera, normal, plane]

Gee et al 2007

30

Plane Initialisation

- Discover planes using RANSAC over thresholded subset of map.

- Initialise plane in state using best-fit plane parameters found from SVD of inliers.

- Augment state covariance, P, with new plane.

Append measurement covariance R0 to covariance matrix.

Multiplication with Jacobian populates cross-covariance terms.

State size increases by 7 after adding plane.

Gee et al 2007
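The covariance augmentation can be written out as a sketch, assuming the usual EKF-SLAM initialisation in which the new plane parameters are a function g(x, z) of the existing state x and of measurements z with covariance R_0. The symbols g, G_x = ∂g/∂x and G_z = ∂g/∂z are introduced here for illustration and do not appear on the slides.

```latex
P_{\text{aug}}
=
\begin{bmatrix} I & 0 \\ G_x & G_z \end{bmatrix}
\begin{bmatrix} P & 0 \\ 0 & R_0 \end{bmatrix}
\begin{bmatrix} I & 0 \\ G_x & G_z \end{bmatrix}^{\top}
=
\begin{bmatrix}
P & P\,G_x^{\top} \\
G_x\,P & G_x\,P\,G_x^{\top} + G_z\,R_0\,G_z^{\top}
\end{bmatrix}
```

The middle matrix is the "append R0" step, and the off-diagonal blocks G_x P and P G_x^T are the cross-covariance terms populated by the multiplication with the Jacobian. With a 7-parameter plane, G_x has 7 rows, so P grows by 7 rows and 7 columns.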

31

Adding Points to Plane

- Decide whether point lies on plane.

- Add point by projecting onto plane and transforming state and covariance.

- Decide whether to fix point on plane.

[Figure labels: σmax, d, σ]

Add point to plane: state size decreases by 1 after adding point to plane.

Fixing points in plane reduces state size by 2 for each fixed point.

Add other points to plane: state size is smaller than original state if >7 points are added to plane.

Gee et al 2007
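The state size bookkeeping on this slide can be checked with a few lines of arithmetic. The sketch assumes the reading that a fixed point has first been added to the plane (saving 1) and is then removed from the state (saving 2 more); the function name is hypothetical.

```python
PLANE_DIM = 7  # from the slides: the state grows by 7 when a plane is added


def state_size_change(points_on_plane, points_fixed=0):
    """Net change in state size after adding one plane.

    Each point moved onto the plane saves 1 state element; each of those
    points that is additionally fixed saves a further 2.
    """
    if points_fixed > points_on_plane:
        raise ValueError("only points already on the plane can be fixed")
    return PLANE_DIM - points_on_plane - 2 * points_fixed


if __name__ == "__main__":
    for n in (0, 7, 8, 20):
        print(n, state_size_change(n))
```

With 7 points on the plane the change is zero, so the break-even quoted on the slide (more than 7 points) follows directly; fixing points moves the break-even earlier.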

32

Plane Observation

- Cannot make direct observation of plane

- Transform points to 3D world space

- Project points into image and match with predicted observations.

- Covariance matrix embodies constraints between plane, camera and points.

Gee et al 2007
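The two observation steps (lift an in-plane point to 3D world space, then project it into the image) can be sketched as below. The angle convention for the basis vectors, the pinhole intrinsics and all function names are assumptions for illustration; the slides do not define them.

```python
import math


def unit_vector(theta, phi):
    """Unit vector from azimuth theta and elevation phi (assumed convention)."""
    return (math.cos(phi) * math.cos(theta),
            math.cos(phi) * math.sin(theta),
            math.sin(phi))


def plane_point_to_world(origin, angles1, angles2, u, v):
    """Lift in-plane coordinates (u, v) to a 3D world point: origin + u*c1 + v*c2."""
    c1, c2 = unit_vector(*angles1), unit_vector(*angles2)
    return tuple(o + u * a + v * b for o, a, b in zip(origin, c1, c2))


def project(point_w, cam_rotation, cam_position, focal=500.0, centre=(320.0, 240.0)):
    """Pinhole projection; the rows of cam_rotation map world axes to camera axes."""
    d = [p - c for p, c in zip(point_w, cam_position)]
    x, y, z = (sum(row[i] * d[i] for i in range(3)) for row in cam_rotation)
    if z <= 0:
        return None  # point is behind the camera
    return (focal * x / z + centre[0], focal * y / z + centre[1])
```

For example, with a plane at depth 5 facing the camera, `project(plane_point_to_world((0, 0, 5), (0, 0), (math.pi / 2, 0), 1, 2), identity, (0, 0, 0))` gives the predicted image position that the patch match is then compared against, where `identity` is the 3x3 identity rotation.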

33

Discovering planes in SLAM

Video at http://www.cs.bris.ac.uk/gee

Gee et al. 2007

34

Discovering planes in SLAM

Gee et al. 2007

Video at http://www.cs.bris.ac.uk/gee

35

Mean error and state reduction, planes

Average of 30 runs

Gee et al 2008

36

Discovering 3D lines

Video at http://www.cs.bris.ac.uk/gee

37

An example application

Video at http://www.cs.bris.ac.uk/Publications/pub_master.jsp?id2000745

Chekhlov et al. 2007

38

Other interesting recent work

- Active search and matching, or know what to measure:

- Davison ICCV 2005 and Chli and Davison ECCV 2008

- Submapping: managing better the scalability problem:

- Clemente et al RSS 2007

- Eade and Drummond BMVC 2008

- And the work presented in this tutorial:

- Randomised trees: Vincent Lepetit

- SFM: Andrew Comport

39

Software tools

- http://www.doc.ic.ac.uk/ajd/Scene/index.html

- <MonoSLAM code for Linux, works out of the box>

- http://www.robots.ox.ac.uk/gk/PTAM/

- <Parallel tracking and mapping>

- http://www.openslam.org/

- <for SLAM algorithms mainly from robotics community>

- http://www.robots.ox.ac.uk/SSS06/

- <SLAM literature and some software in Matlab>

40

Recommended intro reading

- Yaakov Bar-Shalom, X. Rong Li, Thiagalingam Kirubarajan, Estimation with Applications to Tracking and Navigation, Wiley-Interscience, 2001.

- Hugh Durrant-Whyte and Tim Bailey, Simultaneous Localisation and Mapping (SLAM): Part I The Essential Algorithms. Robotics and Automation Magazine, June, 2006.

- Tim Bailey and Hugh Durrant-Whyte, Simultaneous Localisation and Mapping (SLAM): Part II State of the Art. Robotics and Automation Magazine, September, 2006.

- Andrew Davison, Ian Reid, Nicholas Molton and Olivier Stasse, MonoSLAM: Real-Time Single Camera SLAM, IEEE Trans. PAMI 2007.

- Andrew Calway, Andrew Davison and Walterio Mayol-Cuevas, Slides of Tutorial on Visual SLAM, BMVC 2007, available at http://www.cs.bris.ac.uk/Research/Vision/Realtime/bmvctutorial/

41

Some Challenges

- Deal with larger maps.

- Obtain maps that are task-meaningful (manipulation, AR, metrology).

- Use different feature kinds in an informed way.

- Benefit from other approaches such as SFM but keep efficiency.

- Incorporate semantics and beyond-geometric scene understanding.

Fin