ECE5610/CSC6220 Introduction to Parallel and Distribution Computing

1

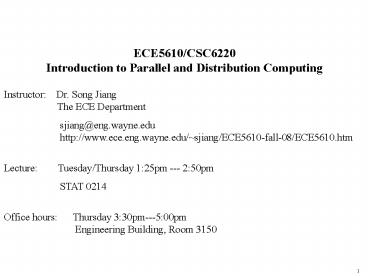

ECE5610/CSC6220 Introduction to Parallel and Distribution Computing

Instructor: Dr. Song Jiang

The ECE Department

sjiang@eng.wayne.edu

http://www.ece.eng.wayne.edu/sjiang/ECE5610-fall-08/ECE5610.htm

Lecture: Tuesday/Thursday, 1:25pm - 2:50pm, STAT 0214

Office hours: Thursday, 3:30pm - 5:00pm, Engineering Building, Room 3150

2

Outline

- Introduction

- What is parallel computing?

- Why you should care?

- Course administration

- Course coverage

- Workload and grading

- Inevitability of parallel computing

- Application demands

- Technology and architecture trends

- Economics

- Convergence of parallel architecture

- Shared address space, message passing, data parallel, data flow

- A generic parallel architecture

3

What is a Parallel Computer?

"A parallel computer is a collection of processing elements that can communicate and cooperate to solve large problems fast."

-- Almasi/Gottlieb

- communicate and cooperate

- Node and interconnect architecture

- Problem partitioning and orchestration

- large problems fast

- Programming model

- Match of model and architecture

- Focus of this course

- Parallel architecture

- Parallel programming models

- Interaction between models and architecture

4

What is a Parallel Computer? (cont'd)

- Some broad issues

- Resource Allocation

- how large a collection?

- how powerful are the elements?

- Data access, Communication and Synchronization

- how are data transmitted between processors?

- how do the elements cooperate and communicate?

- what are the abstractions and primitives for cooperation?

- Performance and Scalability

- how does it all translate into performance?

- how does it scale?

5

Why study Parallel Computing

- Inevitability of parallel computing

- Fueled by application demand for performance

- Scientific: weather forecasting, pharmaceutical design, genomics

- Commercial: OLTP, search engine, decision support, data mining

- Scalable web servers

- Enabled by technology and architecture trends

- limits to sequential CPU, memory, storage performance

- parallelism is an effective way of utilizing the growing number of transistors

- low incremental cost of supporting parallelism

- Convergence of parallel computer organizations

- driven by technology constraints and economies of scale

- laptops and supercomputers share the same building block

- growing consensus on fundamental principles and design tradeoffs

6

Why study Parallel Computing (cont'd)

- Parallel computing is ubiquitous

- Multithreading

- Simultaneous multithreading (SMT), a.k.a. hyper-threading

- e.g., Intel Pentium 4 Xeon

- Chip Multiprocessor (CMP), a.k.a. multi-core processor

- Intel Core Duo, Xbox 360 (triple cores, each with SMT), AMD Quad-core Opteron

- IBM Cell processor with as many as 9 cores, used in Sony PlayStation 3, Toshiba HD sets, and IBM Roadrunner HPC

- Symmetric Multiprocessor (SMP), a.k.a. shared memory multiprocessor

- e.g., Intel Pentium Pro Quad

- Cluster-based supercomputer

- IBM Blue Gene/L (65,536 modified PowerPC 440, each with two cores)

- IBM Roadrunner (6,562 dual-core AMD Opteron chips and 12,240 Cell chips)

7

Course Coverage

- Parallel architectures

- Q: which are the dominant architectures?

- A: small-scale shared memory (SMPs), large-scale distributed memory

- Programming model

- Q: how to program these architectures?

- A: Message passing and shared memory models

- Programming for performance

- Q: how are programming models mapped to the underlying architecture, and how can this mapping be exploited for performance?

8

Course Administration

- Course prerequisites

- Course textbooks

- Class attendance

- Required work and grading policy

- Late policy

- Extra credit

- Academic honesty

9

Outline

- Introduction

- What is parallel computing?

- Why you should care?

- Course administration

- Course coverage

- Workload and grading

- Inevitability of parallel computing

- Application demands

- Technology and architecture trends

- Economics

- Convergence of parallel architecture

- Shared address space, message passing, data parallel, data flow, systolic

- A generic parallel architecture

10

Inevitability of Parallel Computing

- Application demands

- Our insatiable need for computing cycles in challenge applications

- Technology Trends

- Number of transistors on chip growing rapidly

- Clock rates expected to go up only slowly

- Architecture Trends

- Instruction-level parallelism valuable but limited

- Coarser-level parallelism, as in MPs, the most viable approach

- Economics

- Low incremental cost of supporting parallelism

11

Application Demands: Scientific Computing

- Large parallel machines are a mainstay in many industries

- Petroleum

- Reservoir analysis

- Automotive

- Crash simulation, combustion efficiency

- Aeronautics

- Airflow analysis, structural mechanics, electromagnetism

- Computer-aided design

- Pharmaceuticals

- Molecular modeling

- Visualization

- Entertainment

- Architecture

- Financial modeling

- Yield and derivative analysis

2,300 CPU years (2.8 GHz Intel Xeon) at a rate of approximately one hour per frame.

12

Simulation: The Third Pillar of Science

- Traditional scientific and engineering paradigm

- Do theory or paper design.

- Perform experiments or build system.

- Limitations

- Too difficult -- build large wind tunnels.

- Too expensive -- build a throw-away passenger jet.

- Too slow -- wait for climate or galactic evolution.

- Too dangerous -- weapons, drug design, climate experimentation.

- Computational science paradigm

- Use high performance computer systems to simulate the phenomenon

- Based on known physical laws and efficient numerical methods.

13

Challenge Computation Examples

- Science

- Global climate modeling

- Astrophysical modeling

- Biology: genomics, protein folding, drug design

- Computational Chemistry

- Computational Material Sciences and Nanosciences

- Engineering

- Crash simulation

- Semiconductor design

- Earthquake and structural modeling

- Computational fluid dynamics (airplane design)

- Business

- Financial and economic modeling

- Defense

- Nuclear weapons -- test by simulation

- Cryptography

14

Units of Measure in HPC

- High Performance Computing (HPC) units are

- Flop/s: floating point operations per second

- Bytes: size of data

- Typical sizes are millions, billions, trillions, ...

- Mega: Mflop/s = 10^6 flop/sec, Mbyte = 10^6 byte (also 2^20 = 1048576)

- Giga: Gflop/s = 10^9 flop/sec, Gbyte = 10^9 byte (also 2^30 = 1073741824)

- Tera: Tflop/s = 10^12 flop/sec, Tbyte = 10^12 byte (also 2^40 = 1099511627776)

- Peta: Pflop/s = 10^15 flop/sec, Pbyte = 10^15 byte (also 2^50 = 1125899906842624)

15

Global Climate Modeling Problem

- Problem is to compute

- f(latitude, longitude, elevation, time) -> temperature, pressure, humidity, wind velocity

- Approach

- Discretize the domain, e.g., a measurement point every 1km

- Devise an algorithm to predict weather at time t+1 given t

Source: http://www.epm.ornl.gov/chammp/chammp.html
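The "discretize, then step from t to t+1" approach can be illustrated with a toy update rule. The sketch below is an assumption-laden stand-in, not a weather model: it uses a 1-D grid, a simple explicit diffusion-style update, and a hypothetical mixing coefficient `alpha`, none of which come from the slides. What it does show is that every grid point at time t+1 depends only on nearby points at time t, so the points can be updated independently, which is where the parallelism comes from.

```c
#include <stddef.h>

/* One explicit time step on a 1-D grid of n points.
 * cur  : values at time t
 * next : values at time t+1 (output, must not alias cur)
 * alpha: hypothetical mixing coefficient (illustration only)
 * Boundary points are held fixed. Each interior point reads only its
 * two neighbours from `cur`, so all iterations are independent. */
void step(const double *cur, double *next, size_t n, double alpha)
{
    if (n == 0)
        return;
    next[0] = cur[0];
    next[n - 1] = cur[n - 1];
    for (size_t i = 1; i + 1 < n; i++)
        next[i] = cur[i] + alpha * (cur[i - 1] - 2.0 * cur[i] + cur[i + 1]);
}
```

A caller would allocate two arrays, call `step` once per time step, and swap the roles of `cur` and `next` between steps.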

16

Example: Numerical Climate Modeling at NASA

- Weather forecasting over US landmass: 3000 x 3000 x 11 miles

- Assuming 0.1 mile cubic element ---> 10^11 cells

- Assuming 2 day prediction @ 30 min ---> 100 steps in time scale

- Computation: Partial Differential Equation and Finite Element Approach

- Single element computation takes 100 Flops

- Total number of flops: 10^11 x 100 x 100 = 10^15 (i.e., one peta-flop)

- Current uniprocessor power: 10^9 flops/sec (Giga-flops)

- It takes 10^6 seconds or 280 hours. (Forecast nine days late!)

- 1000 processors at 10% efficiency -> around 3 hours

- IBM Roadrunner -> 1 second ?!

- State of the art models require integration of atmosphere, ocean, sea-ice, land models, and more. Models demanding more computation resources will be applied.
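The estimate on this slide can be worked through step by step. The numbers below are the slide's own; the rounding at each step is shown explicitly.

```latex
\begin{align*}
\text{cells} &= \frac{3000 \times 3000 \times 11}{0.1^{3}} = 9.9 \times 10^{10} \approx 10^{11} \\
\text{time steps} &= \frac{2 \times 24 \times 60\ \text{min}}{30\ \text{min}} = 96 \approx 100 \\
\text{total work} &\approx 10^{11} \times 100 \times 100 = 10^{15}\ \text{flops} \\
T_{1} &= \frac{10^{15}\ \text{flops}}{10^{9}\ \text{flop/s}} = 10^{6}\ \text{s} \approx 278\ \text{hours} \approx 11.6\ \text{days} \\
T_{1000} &= \frac{10^{15}\ \text{flops}}{1000 \times 0.1 \times 10^{9}\ \text{flop/s}} = 10^{4}\ \text{s} \approx 2.8\ \text{hours}
\end{align*}
```

A 2-day forecast that takes about 11.6 days to compute arrives roughly nine days after the period it predicts. The one-second figure for Roadrunner assumes the machine sustains about 10^15 flop/s on this workload, which is an optimistic upper bound.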

17

High Resolution Climate Modeling on NERSC-3 (P. Duffy, et al., LLNL)

18

Commercial Computing

- Parallelism benefits many applications

- Database and Web servers for online transaction processing

- Decision support

- Data mining and data warehousing

- Financial modeling

- Scale not as large, but more widely used

- Computational power determines scale of business that can be handled.

19

Outline

- Introduction

- What is parallel computing?

- Why you should care?

- Course administration

- Course coverage

- Workload and grading

- Inevitability of parallel computing

- Application demands

- Technology and architecture trends

- Economics

- Convergence of parallel architecture

- Shared address space, message passing, data parallel, data flow, systolic

- A generic parallel architecture

WA book Chapter 1, GGKK book Chapter 1

20

Tunnel Vision by Experts

- "I think there is a world market for maybe five computers."

- Thomas Watson, chairman of IBM, 1943.

- "There is no reason for any individual to have a computer in their home."

- Ken Olsen, president and founder of Digital Equipment Corporation, 1977.

- "640K of memory ought to be enough for anybody."

- Bill Gates, chairman of Microsoft, 1981.

Slide source: Warfield et al.

21

Technology Trends: Microprocessor Capacity

Moore's Law

Gordon Moore (co-founder of Intel) predicted in 1965 that the transistor density of semiconductor chips would double roughly every 18 months.

Microprocessors have become smaller, denser, and more powerful.

Slide source: Jack Dongarra

22

Technology Trends: Transistor Count

- 40% more functions can be performed by a CPU per year

23

Technology Trends: Clock Rate

- 30% per year ---> today's PC is yesterday's Supercomputer

24

Technology Trends

- Microprocessors exhibit astonishing progress!

- The natural building blocks for parallel computers are also state-of-the-art microprocessors.

25

Technology Trends: Similar Story for Memory and Disk

- Divergence between memory capacity and speed more pronounced

- Capacity increased by 1000X from 1980-95, speed only 2X

- Larger memories are slower, while processors get faster -> memory wall

- Need to transfer more data in parallel

- Need deeper cache hierarchies

- Parallelism helps hide memory latency

- Parallelism within memory systems too

- New designs fetch many bits within memory chip, follow with fast pipelined transfer across narrower interface

26

Technology Trends: Unbalanced system improvements

The disks in 2000 are more than 57 times SLOWER than their ancestors in 1980.

-> Redundant Array of Inexpensive Disks (RAID)

27

Architecture Trend: Role of Architecture

- Clock rate increases 30% per year, while the overall CPU performance increases 50% to 100% per year

- Where is the rest coming from?

- Parallelism likely to contribute more to performance improvements
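The size of "the rest" can be estimated with a short calculation. This treats the quoted yearly rates as multiplicative growth factors, which is an assumption; the slide does not say how the contributions compose.

```latex
\[
\frac{1.5}{1.3} \approx 1.15
\qquad\text{to}\qquad
\frac{2.0}{1.3} \approx 1.54
\]
```

Under that assumption, roughly 15% to 54% of yearly improvement has to come from sources other than clock rate, such as architectural parallelism.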

28

Architectural Trends

- Greatest trend in VLSI is an increase in the exploited parallelism

- Up to 1985: bit level parallelism: 4-bit -> 8-bit -> 16-bit

- slows after 32 bit

- adoption of 64-bit now under way

- Mid 80s to mid 90s: Instruction Level Parallelism (ILP)

- pipelining and simple instruction sets (RISC)

- on-chip caches and functional units => superscalar execution

- Greater sophistication: out of order execution, speculation

- Nowadays

- Hyper-threading

- Multi-core

29

Phase in VLSI Generation

[Figure: phases of VLSI generations, labeled Bit-Level Parallelism, Instruction-Level Parallelism, and Thread-Level Parallelism]

30

ILP Ideal Potential

- Limited parallelism inherent in one stream of instructions

- Pentium Pro: 3 instructions

- PowerPC 604: 4 instructions

- Need to look across threads for more parallelism

31

Architectural Trends: Parallel Computers

No. of processors in fully configured commercial

shared-memory systems

32

Why Parallel Computing: Economics

- Commodity means CHEAP

- Development cost ($5 - 100M) amortized over volumes of millions

- Building block offers significant cost-performance benefits

- Multiprocessors being pushed by software vendors (e.g. database) as well as hardware vendors

- Standardization by Intel makes small, bus-based SMPs commodity

- Multiprocessing on the desktop (laptop) is a reality

- Example: How do economics affect platforms for scientific computing?

- Large scale cluster systems replace vector supercomputers

- A supercomputer and a desktop share the same building block

33

Supercomputers

http://www.top500.org/lists/2008/06

TOP 10 Sites released in June 2008

34

Supercomputers

35

Supercomputers

Japanese Earth Simulator machine

- Parallel Computing Today

IBM BlueGene/L

36

Evolution of Architectural Models

- Historically (1970s - early 1990s), each parallel machine was unique, along with its programming model and language

- Architecture = prog. model + comm. abstraction + machine organization

- Throw away software, start over with each new kind of machine

- Dead Supercomputer Society: http://www.paralogos.com/DeadSuper/

- Nowadays we separate the programming model from the underlying parallel machine architecture.

- 3 or 4 dominant programming models

- Dominant: shared address space, message passing, data parallel

- Others: data flow, systolic arrays

37

Programming Model for Various Architectures

- Programming models specify communication and synchronization

- Multiprogramming: no communication/synchronization

- Shared address space: like bulletin board

- Message passing: like phone calls

- Data parallel: more regimented, global actions on data

- Communication abstraction: primitives for implementing the model

- Plays a role like that of the instruction set in a uniprocessor computer.

- Supported by HW, by OS or by user-level software

- Programming models are the abstraction presented to programmers

- Can write portably correct code that runs on many machines

- Writing fast code requires tuning for the architecture

- Not always worth it: sometimes programmer time is more precious

38

Aspects of a parallel programming model

- Control

- How is parallelism created?

- In what order should operations take place?

- How are different threads of control

synchronized? - Naming

- What data is private vs. shared?

- How is shared data accessed?

- Operations

- What operations are atomic?

- Cost

- How do we account for the cost of operations?

39

Programming Models: Shared Address Space

[Figure: virtual address spaces for a collection of processes P0, P1, P2, ..., Pn communicating via shared addresses. Each process has a private portion and a shared portion of its address space; loads and stores to the shared portion map to common physical addresses in the machine physical address space.]

- Programming model

- Process: virtual address space plus one or more threads of control

- Portions of address spaces of processes are shared

- Writes to shared address visible to all threads (in other processes as well)

- Natural extension of uniprocessor model

- conventional memory operations for communication

- special atomic operations for synchronization
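A minimal sketch of this model using POSIX threads, offered as one possible illustration, not as the course's reference code. Threads within one process share its address space, so an ordinary store to `counter` is the communication, and a mutex stands in here for the "special atomic operations" used for synchronization. The names `run_shared_counter`, `struct shared`, and `MAX_THREADS` are hypothetical.

```c
#include <pthread.h>
#include <stddef.h>

#define MAX_THREADS 16   /* arbitrary cap for this sketch */

/* Data that lives in the shared portion of the address space. */
struct shared {
    long counter;            /* written by every thread */
    long iters;              /* increments performed by each thread */
    pthread_mutex_t lock;    /* synchronization primitive */
};

static void *worker(void *arg)
{
    struct shared *s = arg;
    for (long i = 0; i < s->iters; i++) {
        pthread_mutex_lock(&s->lock);    /* synchronization */
        s->counter++;                    /* communication: a plain store */
        pthread_mutex_unlock(&s->lock);
    }
    return NULL;
}

/* Runs nthreads workers, each adding iters to a shared counter.
 * Returns the final counter value, or -1 on error. */
long run_shared_counter(int nthreads, long iters)
{
    if (nthreads < 1 || nthreads > MAX_THREADS)
        return -1;

    struct shared s = { .counter = 0, .iters = iters };
    pthread_t tid[MAX_THREADS];
    if (pthread_mutex_init(&s.lock, NULL) != 0)
        return -1;

    int started = 0;
    for (; started < nthreads; started++)
        if (pthread_create(&tid[started], NULL, worker, &s) != 0)
            break;
    for (int i = 0; i < started; i++)
        pthread_join(tid[i], NULL);

    pthread_mutex_destroy(&s.lock);
    return started == nthreads ? s.counter : -1;
}
```

Calling `run_shared_counter(4, 1000)` should return 4000. Without the lock, the increments from different threads could interleave and the result would be unpredictable. On many systems the program must be built with the compiler's `-pthread` option.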

40

SAS Machine Architecture

- Motivation: Programming convenience

- Location transparency

- Any processor can directly reference any memory location

- Communication occurs implicitly as result of loads and stores

- Extended from time-sharing on uni-processors

- Processes can run on different processors

- Improved throughput on multi-programmed workloads

- Communication hardware also natural extension of uniprocessor

- Addition of processors similar to memory modules, I/O controllers

41

SAS Machine Architecture (cont'd)

- One representative architecture: SMP

- Used to mean Symmetric MultiProcessor -> All CPUs had equal capabilities in every area, e.g. in terms of I/O as well as memory access

- Evolved to mean Shared Memory Processor -> non-message-passing machines (included crossbar as well as bus based systems)

- Now it tends to refer to bus-based shared memory machines (define exactly what you mean by SMP!) -> Small scale: < 32 processors typically

[Figure: processors P1 ... Pn connected through a network to memory]

42

Example: Intel Pentium Pro Quad

- All coherence and multiprocessing glue in processor module

- Highly integrated, targeted at high volume

- Low latency and high bandwidth

43

Example: SUN Enterprise

- 16 cards of either type: processors + memory, or I/O

- All memory accessed over bus, so symmetric

- Higher bandwidth, higher latency bus

44

Scaling Up: More SAS Machine Architectures

[Figure: two organizations, dance hall and distributed shared memory]

- Dance-hall

- Problem: interconnect cost (crossbar) or bandwidth (bus)

- Solution: scalable interconnection network -> bandwidth scalable

- latencies to memory uniform, but uniformly large (Uniform Memory Access (UMA))

- Caching is key: coherence problem

45

Scaling Up: More SAS Machine Architectures

- Distributed shared memory (DSM) or non-uniform memory access (NUMA)

- Non-uniform time for the access to data in local memory and remote memory

- Caching of non-local data is key

- Coherence cost

46

Example: Cray T3E

- Scale up to 1024 processors, 480MB/s links

- Memory controller generates comm. request for nonlocal references

- No hardware mechanism for coherence (SGI Origin etc. provide this)

47

Programming Models: Message Passing

- Programming model

- Directly access only private address space (local memory), communicate via explicit messages

- Send specifies data in a buffer to transmit to the receiving process

- Recv specifies sending process and buffer to receive data

- In the simplest form, the send/recv match achieves pair-wise synchronization

- Model is separated from basic hardware operations

- Library or OS support for copying, buffer management, protection

- Potential high overhead: large messages to amortize the cost
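The send/recv pairing can be sketched with two POSIX processes and a pipe. This is an illustration under stated assumptions, not a message-passing library such as MPI: `fork` gives the child its own private copy of the address space, and the pipe is the only channel between the two. The helper names `send_all`, `recv_all`, and `sum_in_child` are hypothetical, and the sketch does not retry interrupted system calls.

```c
#include <stddef.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* "Send": write exactly n bytes from buf. Returns 0 on success. */
static int send_all(int fd, const void *buf, size_t n)
{
    const char *p = buf;
    while (n > 0) {
        ssize_t k = write(fd, p, n);
        if (k <= 0)
            return -1;
        p += k;
        n -= (size_t)k;
    }
    return 0;
}

/* "Recv": read exactly n bytes into buf. Blocks until the sender has
 * sent them, which is the pair-wise synchronization. */
static int recv_all(int fd, void *buf, size_t n)
{
    char *p = buf;
    while (n > 0) {
        ssize_t k = read(fd, p, n);
        if (k <= 0)
            return -1;
        p += k;
        n -= (size_t)k;
    }
    return 0;
}

/* The child sums its private copy of data[] and sends the result to the
 * parent as one message. Returns the sum, or -1 on error (so a true sum
 * of -1 is indistinguishable from failure in this sketch). */
long sum_in_child(const int *data, size_t n)
{
    int fds[2];
    if (pipe(fds) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {                      /* child: the sender */
        close(fds[0]);
        long sum = 0;
        for (size_t i = 0; i < n; i++)
            sum += data[i];
        _exit(send_all(fds[1], &sum, sizeof sum) == 0 ? 0 : 1);
    }

    close(fds[1]);                       /* parent: the receiver */
    long result = -1;
    int rc = recv_all(fds[0], &result, sizeof result);
    close(fds[0]);
    waitpid(pid, NULL, 0);
    return rc == 0 ? result : -1;
}
```

The parent never reads the child's memory directly; it sees only the bytes that were explicitly sent. The fixed cost of creating the process and the pipe is the kind of overhead that larger messages help amortize.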

48

Message Passing Architectures

- Complete processing node (computer) as building block, including I/O

- Communication via explicit I/O operations

- Processor/Memory/IO form a processing node that cannot directly access another processor's memory.

- Each node has a network interface (NI) for communication and synchronization.

49

DSM vs Message Passing

- High-level block diagrams are similar

- Programming paradigms that theoretically can be supported on various parallel architectures

- Implication of DSM and MP on architectures

- Fine-grained hardware support for DSM

- Communication integrated at I/O level for MP, needn't be integrated into memory system

- MP can be implemented as middleware (library)

- MP has better scalability.

- MP machines are easier to build than scalable address space machines

50

Example: IBM SP-2

- Each node is an essentially complete RS6000 workstation

- Network interface integrated in I/O bus (bandwidth limited by I/O bus).

51

Example: Intel Paragon

52

Toward Architectural Convergence

- Convergence in hardware organizations

- Tighter NI integration for MP

- Hardware SAS passes messages at lower level

- Clusters of workstations/SMPs become the most popular parallel architecture for parallel systems

- Programming models distinct, but organizations converging

- Nodes connected by general network and communication assists

- Implementations also converging, at least in high-end machines

53

Programming Model: Data Parallel

- Operations performed in parallel on each element of data structure

- Logically single thread of control (sequential program)

- Conceptually, a processor associated with each data element

- Coordination is implicit: statements executed synchronously

- Example

float x[100];

for (i = 0; i < 100; i++) x[i] = x[i] + 1;

-> x = x + 1

54

Programming Model: Data Parallel

- Architectural model

- A control processor issues instructions

- Array of many simple, cheap processors (processing elements, PEs), each with little memory

- An interconnect network that broadcasts data to PEs, supports communication among PEs, and provides cheap synchronization.

- Motivation

- Give up flexibility (different instructions in different processors) to allow a much larger number of processors

- Targeted at a limited scope of applications.

- Applications

- Finite differences, linear algebra.

- Document searching, graphics, image processing, ...

55

A Case of DP: Vector Machine

[Figure: an example vector instruction (logically, performs one add per vector element in parallel)]

- Vector machine

- Multiple functional units

- All performing the same operation

- Instructions may be of very high parallelism (e.g., 64-way) but hardware executes only a subset in parallel at a time

- Historically important, but overtaken by MPPs in the 90s

- Re-emerging in recent years

- At a large scale in the Earth Simulator (NEC SX6) and Cray X1

- At a small scale in SIMD media extensions to microprocessors

- SSE (Streaming SIMD Extensions), SSE2 (Intel Pentium/IA64)

- Altivec (IBM/Motorola/Apple PowerPC)

- VIS (Sun Sparc)

- Enabling technique

- Compiler does some of the difficult work of finding parallelism
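What a vector add does logically can be written as a plain C loop. The sketch below is scalar code that only mimics the structure: the inner loop stands for the group of independent adds the hardware would perform at once, and `LANES` is a hypothetical hardware width chosen for illustration. A vectorizing compiler may turn a loop like this into real SIMD instructions, but nothing here depends on that.

```c
#include <stddef.h>

#define LANES 4   /* hypothetical number of adds the hardware does at once */

/* c[i] = a[i] + b[i] for i in [0, n). */
void vector_add(const float *a, const float *b, float *c, size_t n)
{
    size_t i = 0;

    /* Full groups: each pass of the outer loop models one hardware step
     * in which LANES independent adds execute together. */
    for (; i + LANES <= n; i += LANES)
        for (size_t lane = 0; lane < LANES; lane++)
            c[i + lane] = a[i + lane] + b[i + lane];

    /* Remainder: elements left over when n is not a multiple of LANES. */
    for (; i < n; i++)
        c[i] = a[i] + b[i];
}
```

Because no iteration reads a value written by another, the adds can be grouped in any width, which is why a long logical vector can be executed a subset at a time.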

56

Flynn's Taxonomy

- A classification of computer architectures based on the number of streams of instructions and data

- Single instruction/single data stream (SISD): a sequential computer.

- Multiple instruction/single data stream (MISD): unusual.

- Single instruction/multiple data streams (SIMD): e.g. a vector processor.

- Multiple instruction/multiple data streams (MIMD): multiple autonomous processors simultaneously executing different instructions on different data.

-> Program model converges with SPMD (single program multiple data)
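In SPMD, every processor runs the same program and uses its own rank to decide which part of the data to work on. A minimal sketch of that idea follows, assuming a simple block partition; the function names `block_range` and `spmd_partial_sum` are hypothetical, and how ranks are assigned and partial results combined is left to whatever runtime is in use.

```c
#include <stddef.h>

/* Half-open index range [lo, hi) owned by `rank` when n items are split
 * into nprocs contiguous blocks. The first n % nprocs ranks get one
 * extra item. Assumes nprocs >= 1 and rank < nprocs. */
void block_range(size_t rank, size_t nprocs, size_t n,
                 size_t *lo, size_t *hi)
{
    size_t base = n / nprocs;
    size_t extra = n % nprocs;
    *lo = rank * base + (rank < extra ? rank : extra);
    *hi = *lo + base + (rank < extra ? 1 : 0);
}

/* The single program: identical code on every processor, different data
 * selected by rank. Returns the sum of this rank's block of x. */
double spmd_partial_sum(const double *x, size_t n,
                        size_t rank, size_t nprocs)
{
    size_t lo, hi;
    block_range(rank, nprocs, n, &lo, &hi);

    double sum = 0.0;
    for (size_t i = lo; i < hi; i++)
        sum += x[i];
    return sum;
}
```

With 10 items and 3 ranks, the blocks are [0, 4), [4, 7), and [7, 10). Adding the three partial sums gives the same total as a sequential loop over all of `x`.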

57

Clusters have Arrived

58

What's a Cluster?

- Collection of independent computer systems working together as if a single system.

- Coupled through a scalable, high bandwidth, low latency interconnect.

59

Clusters of SMPs

- SMPs are the fastest commodity machine, so use them as a building block for a larger machine with a network

- Common names

- CLUMP = Cluster of SMPs

- What is the right programming model?

- Treat machine as flat, always use message passing, even within SMP (simple, but ignores an important part of memory hierarchy).

- Shared memory within one SMP, but message passing outside of an SMP.

60

WSU CLUMP: Cluster of SMPs

Symmetric Multiprocessor (SMP)

61

Convergence: Generic Parallel Architecture

- A generic modern multiprocessor

- Node: Processor(s), memory, plus communication assist

- Network interface and communication controller

- Scalable network

- Convergence allows lots of innovation, now within the same framework

- integration of assist within node, what operation, how efficiently

62

Lecture Summary

- Parallel computing

- A parallel computer is a collection of processing elements that can communicate and cooperate to solve large problems fast

- Parallel computing has become central and mainstream

- Application demands

- Technology and architecture trends

- Economics

- Convergence in parallel architecture

- initially: close coupling of programming model and architecture

- Shared address space, message passing, data parallel

- now: separation and identification of dominant models/architectures

- Programming models: shared address space, message passing, and data parallel

- Architectures: small-scale shared memory, large-scale distributed memory, large-scale SMP cluster.