Can a network learn syntax - PowerPoint PPT Presentation

1 / 13

Title:

Can a network learn syntax

Description:

Can a network learn syntax? A fundamental issue with R&M's ... the, piano, played, dropped, on, floor, toe, carol, his, her, cat, in, room, she, with, custard, ... – PowerPoint PPT presentation

Number of Views:43

Avg rating:3.0/5.0

Title: Can a network learn syntax

1

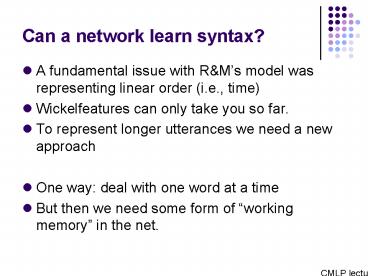

Can a network learn syntax?

- A fundamental issue with R&M's model was representing linear order (i.e., time)
  - Wickelfeatures can only take you so far.
- To represent longer utterances we need a new approach
  - One way: deal with one word at a time
- But then we need some form of working memory in the net.

CMLP lecture 6
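To make the Wickelfeature limitation concrete, here is a minimal sketch using Wickelphones, the context-sensitive triples from which R&M's Wickelfeatures were derived. It is simplified to letters rather than phonemes and features, and the function name `wickelphones` is hypothetical. Because a word becomes an unordered set of local triples, order is only encoded three symbols at a time, and some distinct strings collapse onto the same representation.

```python
def wickelphones(word: str) -> set[str]:
    """Return the set of letter triples of a word padded with '#' boundaries.

    Simplification (assumption): R&M used phonemes and then coarse-coded
    features, not raw letters.
    """
    padded = f"#{word}#"
    return {padded[i:i + 3] for i in range(len(padded) - 2)}


# Local order survives: 'cat' and 'act' get different triples.
print(sorted(wickelphones("cat")))  # ['#ca', 'at#', 'cat']
# Longer-range structure does not: repeating a segment adds no new triples.
print(wickelphones("aaa") == wickelphones("aaaa"))  # True
```

The same problem grows with length, which is why a different way of handling linear order is needed for whole utterances.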

Representing time

- Let's imagine that we presented words one at a time to a net
  - With a normal network, would it matter which order the words were given?
  - No. Each word is a brand-new experience.
- The network needs some way of relating each experience to what has gone before
  - Intuitively, each word needs to be presented along with what the network was thinking about when it heard the previous word.
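The claim about a normal (feedforward) network can be checked directly. This minimal sketch assumes NumPy, random untrained weights and a hypothetical five-word one-hot vocabulary. Each word is mapped to a response on its own, so reversing the sentence only reverses the list of responses: no word's response depends on what came before it.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["boys", "who", "chase", "dogs", "see"]  # hypothetical toy vocabulary
W = rng.normal(size=(len(vocab), 8))  # input-to-hidden weights (untrained)


def one_hot(word: str) -> np.ndarray:
    v = np.zeros(len(vocab))
    v[vocab.index(word)] = 1.0
    return v


def feedforward(word: str) -> np.ndarray:
    # The response depends only on the current word: the net keeps no state.
    return np.tanh(one_hot(word) @ W)


sentence = ["boys", "chase", "dogs"]
forward = [feedforward(w) for w in sentence]
backward = [feedforward(w) for w in reversed(sentence)]
# Each word gets exactly the same response whichever position it occupies.
print(all(np.allclose(f, b) for f, b in zip(forward, reversed(backward))))  # True
```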

The Simple Recurrent Net (SRN)

[Figure: SRN diagram, with layers labelled Output, Hidden, Input and Context, and copy-back connections]

- At each time step, the input is
  - a new experience
  - plus a copy of the hidden unit activations at the last time step
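The SRN step can be sketched in a few lines of NumPy. This is a minimal illustration under assumptions not stated on the slide: logistic hidden units, a softmax output layer, randomly initialised untrained weights, and context units initialised to 0.5 (the value later used to blank memory). The class name `SimpleRecurrentNet`, the helper `final_context` and the layer sizes are hypothetical.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()


class SimpleRecurrentNet:
    """Elman-style SRN: hidden = sigmoid(W_in @ input + W_ctx @ context)."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_in = rng.normal(scale=0.5, size=(n_hidden, n_in))
        self.W_ctx = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
        self.W_out = rng.normal(scale=0.5, size=(n_out, n_hidden))
        self.reset()

    def reset(self, value: float = 0.5) -> None:
        # Context units start at a neutral mid-range activation.
        self.context = np.full(self.W_ctx.shape[0], value)

    def step(self, x: np.ndarray) -> np.ndarray:
        # Hidden units see the new input plus the previous hidden state.
        hidden = sigmoid(self.W_in @ x + self.W_ctx @ self.context)
        self.context = hidden.copy()  # the copy-back connections
        return softmax(self.W_out @ hidden)  # distribution over next words


def final_context(net: SimpleRecurrentNet, sequence: list[int]) -> np.ndarray:
    """Feed one-hot coded words in order and return the last hidden state."""
    net.reset()
    eye = np.eye(net.W_in.shape[1])
    for i in sequence:
        net.step(eye[i])
    return net.context.copy()


net = SimpleRecurrentNet(n_in=3, n_hidden=4, n_out=3)
forward = final_context(net, [0, 1, 2])
backward = final_context(net, [2, 1, 0])
print(np.allclose(forward, backward))
```

For generic random weights the two final context vectors differ, because the last hidden state carries a trace of every earlier word. Elman trained such weights with backpropagation; training is left out of this sketch.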

What inputs and outputs should the SRN be given?

- Elman wanted a task that would force the network to learn syntactic relations
  - To be realistic, the task should need no external teacher
  - Use a next-word prediction task
- INPUTS: current word (plus context)
- OUTPUTS: predicted next word
- The error signal is implicit in the data.
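"The error signal is implicit in the data" means the training targets come for free: each word's target is simply the word that follows it. A minimal sketch, with a hypothetical function name `prediction_pairs`:

```python
def prediction_pairs(sentence: list[str]) -> list[tuple[str, str]]:
    """Pair each word (the input) with the word that follows it (the target)."""
    return list(zip(sentence[:-1], sentence[1:]))


pairs = prediction_pairs("boys who chase dogs see girls".split())
print(pairs[:2])  # [('boys', 'who'), ('who', 'chase')]
```

No teacher has to label anything; the network's error at each step is the mismatch between its prediction and the word that actually arrives next.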

Predicting the next word

- Imagine you knew only these words
  - the, piano, played, dropped, on, floor, toe, carol, his, her, cat, in, room, she, with, custard, ...
- What words do you predict will follow?
- You can do better than chance because you know syntax

? carol dropped her cat on the floor in the room with the piano ?
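A crude way to see that word sequences are far from random: even counting which words follow which in the example sentence shows strong regularities. This minimal sketch uses `collections.Counter`; the bigram count is only an illustrative stand-in, since knowing syntax supports much more than adjacent-word statistics.

```python
from collections import Counter, defaultdict

sentence = "carol dropped her cat on the floor in the room with the piano".split()

# followers[w] counts the words seen immediately after w.
followers = defaultdict(Counter)
for current, nxt in zip(sentence, sentence[1:]):
    followers[current][nxt] += 1

# After 'the', only nouns have been seen, never a verb or another 'the'.
print(sorted(followers["the"]))  # ['floor', 'piano', 'room']
```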

Long distance dependencies and hierarchy

- Elman's question: how much is innate?
- Many argue:
  - Long-distance dependencies and hierarchical embedding are unlearnable without an innate language faculty
- How well can an SRN learn them?
- Test with a toy grammar
- Examples
  - boys who chase dogs see girls
  - cats chase dogs
  - dogs see boys who cats who mary feeds chase
  - mary walks
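To experiment with material of this kind, here is a minimal sketch of a generator for a grammar in the same spirit: singular and plural nouns, verbs that agree in number with their subjects, and subject and object relative clauses introduced by "who" that can nest. The lexicon, branching probabilities and depth limit are all hypothetical; this is not Elman's actual grammar.

```python
import random

# Hypothetical toy lexicon; nouns and verbs are (singular, plural) pairs.
NOUNS = [("boy", "boys"), ("girl", "girls"), ("dog", "dogs"), ("cat", "cats")]
PROPER = ["mary", "john"]  # proper nouns are always singular
TRANSITIVE = [("chases", "chase"), ("sees", "see"), ("feeds", "feed")]
INTRANSITIVE = [("walks", "walk"), ("lives", "live")]


def verb(rng: random.Random, plural: bool, transitive: bool) -> str:
    forms = rng.choice(TRANSITIVE if transitive else INTRANSITIVE)
    return forms[int(plural)]  # pick the form that agrees in number


def noun_phrase(rng: random.Random, depth: int) -> tuple[list[str], bool]:
    """Return (words, is_plural); may attach a relative clause if depth > 0."""
    if rng.random() < 0.2:
        return [rng.choice(PROPER)], False
    plural = rng.random() < 0.5
    words = [rng.choice(NOUNS)[int(plural)]]
    if depth > 0 and rng.random() < 0.3:
        words += relative_clause(rng, plural, depth - 1)
    return words, plural


def relative_clause(rng: random.Random, head_plural: bool, depth: int) -> list[str]:
    if rng.random() < 0.5:
        # Subject relative ("boys who chase dogs"): the verb agrees with the head.
        obj, _ = noun_phrase(rng, depth)
        return ["who", verb(rng, head_plural, True)] + obj
    # Object relative ("cats who mary feeds"): the verb agrees with the new
    # subject, and the head noun fills the missing object slot.
    subj, subj_plural = noun_phrase(rng, depth)
    return ["who"] + subj + [verb(rng, subj_plural, True)]


def sentence(rng: random.Random, depth: int = 2) -> list[str]:
    subj, plural = noun_phrase(rng, depth)
    if rng.random() < 0.5:
        obj, _ = noun_phrase(rng, depth)
        return subj + [verb(rng, plural, True)] + obj
    return subj + [verb(rng, plural, False)]


rng = random.Random(1)
for _ in range(3):
    print(" ".join(sentence(rng)))
```

Nesting object relatives produces exactly the kind of centre-embedding in the third example above, where each verb must agree with a noun several words back.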

First experiments

- Each word is encoded as a single unit turned on in the input (a localist, one-hot code).
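"A single unit on" is a localist (one-hot) code: with N words there are N input units, and word i switches on unit i only. A minimal sketch with a hypothetical vocabulary. One consequence is that every pair of distinct words is equally dissimilar at the input, so any similarity between words has to be discovered by the network.

```python
import numpy as np

vocab = ["boy", "boys", "chase", "chases", "who"]  # hypothetical vocabulary
index = {word: i for i, word in enumerate(vocab)}


def encode(word: str) -> np.ndarray:
    """Localist code: one input unit per word, exactly one unit on."""
    x = np.zeros(len(vocab))
    x[index[word]] = 1.0
    return x


print(encode("chase"))  # [0. 0. 1. 0. 0.]
# 'boy' is no closer to 'boys' than to 'who' in input space.
d_boys = np.linalg.norm(encode("boy") - encode("boys"))
d_who = np.linalg.norm(encode("boy") - encode("who"))
print(d_boys == d_who)  # True
```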

Initial results

- How can we tell if the net has learned syntax?
  - Check whether it predicts the correct number agreement
- Gets some things right, but makes many mistakes

boys who girl chase see dog

- Seems not to have learned long-distance dependency.
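One way to score "correct number agreement" is to look at the output distribution at a point where a verb is due and ask what share of the verb probability goes to verbs of the right number. In this minimal sketch the vocabulary, the function name `agreement_score` and the probability values are all hypothetical, made up for illustration rather than taken from Elman's network. The context is "boys who chase dog ...": the next word is the main verb, which must be plural to agree with "boys" even though the nearest noun, "dog", is singular.

```python
import numpy as np

vocab = ["boy", "boys", "chases", "chase", "sees", "see", "who"]
SINGULAR_VERBS = {"chases", "sees"}
PLURAL_VERBS = {"chase", "see"}


def agreement_score(probs: np.ndarray, subject_plural: bool) -> float:
    """Share of verb probability mass assigned to verbs of the correct number."""
    right = PLURAL_VERBS if subject_plural else SINGULAR_VERBS
    wrong = SINGULAR_VERBS if subject_plural else PLURAL_VERBS
    p_right = sum(probs[vocab.index(w)] for w in right)
    p_wrong = sum(probs[vocab.index(w)] for w in wrong)
    return p_right / (p_right + p_wrong)


# Made-up output after "boys who chase dog": it wrongly favours singular verbs,
# as if agreeing with the nearby "dog" instead of the distant "boys".
probs = np.array([0.0, 0.0, 0.4, 0.1, 0.4, 0.1, 0.0])
print(round(agreement_score(probs, subject_plural=True), 2))  # 0.2
```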

Incremental input

- Elman tried teaching the network in stages
- Five stages
  - 10,000 simple sentences (x 5)
  - 7,500 simple, 2,500 complex (x 5)
  - 5,000 simple, 5,000 complex (x 5)
  - 2,500 simple, 7,500 complex (x 5)
  - 10,000 complex sentences (x 5)
- Surprisingly, this training regime led to success!
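The staged regime is easy to write down as data. This minimal sketch reads each "(x 5)" as five passes through that stage's corpus, which is an interpretation rather than something the slide spells out, and computes the share of complex sentences per stage and the total number of sentence presentations.

```python
# (simple, complex, passes) for each of the five stages; passes is an assumption.
STAGES = [
    (10_000, 0, 5),
    (7_500, 2_500, 5),
    (5_000, 5_000, 5),
    (2_500, 7_500, 5),
    (0, 10_000, 5),
]

for n, (simple, complex_, passes) in enumerate(STAGES, start=1):
    share = complex_ / (simple + complex_)
    print(f"stage {n}: {share:.0%} complex, {passes} passes")

total = sum((simple + complex_) * passes for simple, complex_, passes in STAGES)
print(total)  # 250000
```

Every stage holds 10,000 sentences; only the mix changes, rising from 0% to 100% complex in steps of 25%.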

Is this realistic?

- Elman reasons that this is in some ways like children's behaviour
  - Children seem to learn to produce simple sentences first
- Is this a reasonable suggestion?
  - Where is the incremental input coming from?
  - Similar problem to R&M
  - The developmental schedule appears to be a product of changing the input.

Another route to incremental learning

- Rather than the experimenter selecting simple, then complex sentences, could the network do the selecting?
- Children's data isn't changing; children are changing
- Elman gets the network to change throughout its life
- What is a reasonable way for the network to change?
  - One possibility: memory

Reducing the attention span of a network

- Destroy memory by setting context nodes to 0.5
- Five stages of learning (with both simple and complex sentences)
  - Memory blanked every 3-4 words (x 12)
  - Memory blanked every 4-5 words (x 5)
  - Memory blanked every 5-6 words (x 5)
  - Memory blanked every 6-7 words (x 5)
  - No memory limitations (x 5)
- The network learned the task.
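The memory-limitation schedule can be sketched as a rule for when to reset the context units. This minimal sketch assumes that "every 3-4 words" means a window length drawn at random from {3, 4} after each reset, and reads "(x N)" as N passes; the function name `reset_points` is hypothetical. In a full simulation the SRN's context units would be set to 0.5 at each returned word position.

```python
import random
from typing import List, Optional, Tuple


def reset_points(n_words: int, window: Optional[Tuple[int, int]],
                 rng: random.Random) -> List[int]:
    """Word positions at which the context units would be set back to 0.5.

    window=(lo, hi) blanks memory every lo-to-hi words; None means no limit.
    """
    if window is None:
        return []
    points: List[int] = []
    pos = 0
    while True:
        pos += rng.randint(*window)  # randint includes both end points
        if pos >= n_words:
            return points
        points.append(pos)


# (window, passes) for the five stages on the slide.
SCHEDULE = [((3, 4), 12), ((4, 5), 5), ((5, 6), 5), ((6, 7), 5), (None, 5)]

rng = random.Random(0)
for window, passes in SCHEDULE:
    print(window, passes, reset_points(20, window, rng))
```

The input corpus stays the same throughout; only the span over which the network can integrate information grows.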

Counter-intuitive conclusion: starting small

- A fully-functioning network cannot learn syntax.
- A network that is initially limited (but matures) learns well.
- This seems a strange result, suggesting that networks aren't good models of language learning after all
- On the other hand
  - Children mature during learning
  - Infancy in humans is prolonged relative to other species
  - Ultimate language ability seems to be related to how early learning starts
  - i.e., there is a critical period for language acquisition.