Course on Data Mining (581550-4)


1

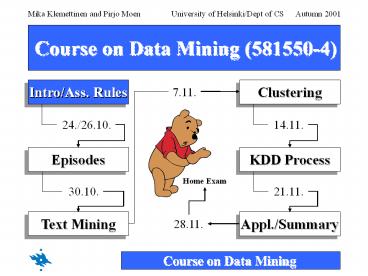

Course on Data Mining (581550-4)

Intro/Ass. Rules

Clustering

Episodes

KDD Process

Text Mining

Appl./Summary

2

Course on Data Mining (581550-4)

Today 26.10.2001

- Course organization
- Summary
  - What is data mining?
- Today's subject
  - Association rules
- Next week's program
  - Lecture: Episodes
  - Exercise: Associations
  - Seminar: Associations

3

Course Organization

Lectures, Exercises, Exam

- 12 lectures, 24.10.-30.11.2001
  - Wed 14-16, Fri 12-14 (A217)
  - Wed: normal lecture
  - Fri: seminar-like lecture (except for 26.10.)
- 5 exercise sessions, 1.11.-29.11.2001
  - Thu 12-14 (A318)
- Home exam
  - Given 28.11., to be returned by 21.12.2001
- Language
  - Lecturing language is Finnish
  - Slides and material are in English

4

Course Organization

Group Work

- Group for seminar (and exercise) work
  - 10 groups of 3 persons, 2 groups per lecture
  - Dates are agreed at the beginning of the course
  - Articles are given on the previous week's Wed
- Seminar presentations
  - Presentation as an HTML page (around 3-5 printed pages), due by the start of the seminar
  - Can be either an HTML page or a printable document in PostScript/PDF format
  - 30 minutes of presentation
  - 5-15 minutes of discussion
  - Active participation

5

Course Organization / Groups

- Group presentation time allocation

- Fri 2.11. Group 1, Group 2 (associations)

- Fri 9.11. Group 3, Group 4 (episodes)

- Fri 16.11. Group 5, Group 6 (text mining)

- Fri 23.11. Group 7, Group 8 (clustering)

- Fri 30.11. Group 9, Group 10 (KDD process)

6

Course Organization

Course Evaluation

- Passing the course: min 30 points
  - home exam: min 13 points (max 30 points)
  - exercises/experiments: min 8 points (max 20 points)
    - at least 3 returned and reported experiments
  - group presentation: min 4 points (max 10 points)
- Remember also the other requirements
  - Attending the lectures (5/7)
  - Attending the seminars (4/5)
  - Attending the exercises (4/5)

7

Course Organization

Course Material

- Lecture slides

- Original articles

- Seminar presentations

- Book: "Data Mining: Concepts and Techniques" by Jiawei Han and Micheline Kamber, Morgan Kaufmann Publishers, August 2000. 550 pages. ISBN 1-55860-489-8
- Remember to check the course website and folder for the material!

8

Summary: What is Data Mining?

- Ultimately
  - "Extraction of interesting (non-trivial, implicit, previously unknown, potentially useful) information or patterns from data in large databases"
- Often just
  - "Tell something interesting about this data", "Describe this data"
  - Exploratory, semi-automatic data analysis on large data sets

9

Summary: What is Data Mining?

- Data mining: semi-automatic discovery of interesting patterns from large data sets
- Knowledge discovery is a process
  - Preprocessing
  - Data mining
  - Postprocessing
- To be mined, used or utilized: different
  - Databases (relational, object-oriented, spatial, WWW, ...)
  - Knowledge (characterization, clustering, association, ...)
  - Techniques (machine learning, statistics, visualization, ...)
  - Applications (retail, telecom, Web mining, log analysis, ...)

10

Summary: Typical KDD Process

[Figure: typical KDD process. Labels: Operational Database, Input data, Data mining, Results, Utilization]

11

Association Rules

Basics

Examples

Generation

Multi-level Rules

Constraints

12

Market Basket Analysis

- Analysis of customer buying habits by finding

associations and correlations between the

different items that customers place in their

"shopping basket"

13

Market Basket Analysis

- Given
  - A database of customer transactions (e.g., shopping baskets), where each transaction is a set of items (e.g., products)
- Find
  - Groups of items which are frequently purchased together

14

Market Basket Analysis

- Extract information on purchasing behavior
  - "IF buys beer and sausage, THEN also buys mustard with high probability"
- Actionable information: can suggest...
  - New store layouts and product assortments
  - Which products to put on promotion
- The MBA approach is applicable whenever a customer purchases multiple things in proximity
  - Credit cards
  - Services of telecommunication companies
  - Banking services
  - Medical treatments

15

Market Basket Analysis

- Useful
  - "On Thursdays, grocery store consumers often purchase diapers and beer together."
- Trivial
  - "Customers who purchase maintenance agreements are very likely to purchase large appliances."
- Inexplicable/unexpected
  - "When a new hardware store opens, one of the most sold items is toilet rings."

16

Association Rules Basics

- Association rule mining
  - Finding frequent patterns, associations, correlations, or causal structures among sets of items or objects in transaction databases, relational databases, and other information repositories.
- Comprehensibility: simple to understand
- Utilizability: provide actionable information
- Efficiency: efficient discovery algorithms exist
- Applications
  - Market basket data analysis, cross-marketing, catalog design, loss-leader analysis, clustering, classification, etc.

17

Association Rules Basics

- Typical representation formats for association rules
  - diapers ⇒ beer [0.5%, 60%]
  - buys:diapers ⇒ buys:beer [0.5%, 60%]
  - "IF buys diapers, THEN buys beer in 60% of the cases. Diapers and beer are bought together in 0.5% of the rows in the database."
- Other representations (used in Han's book)
  - buys(x, "diapers") ⇒ buys(x, "beer") [0.5%, 60%]
  - major(x, "CS") ∧ takes(x, "DB") ⇒ grade(x, "A") [1%, 75%]

18

Association Rules Basics

- diapers ⇒ beer [0.5%, 60%]

"IF buys diapers, THEN buys beer in 60% of the cases, in 0.5% of the rows"

- Antecedent, left-hand side (LHS), body
- Consequent, right-hand side (RHS), head
- Support, frequency ("in how big a part of the data the things in the left- and right-hand sides occur together")
- Confidence, strength ("if the left-hand side occurs, how likely it is that the right-hand side occurs")

19

Association Rules Basics

- Support denotes the frequency of the rule within transactions.
  - $\mathrm{support}(A \Rightarrow B\ [s, c]) = p(A \cup B) = \mathrm{support}(\{A, B\})$
- Confidence denotes the percentage of transactions containing A which also contain B.
  - $\mathrm{confidence}(A \Rightarrow B\ [s, c]) = p(B \mid A) = p(A \cup B) / p(A) = \mathrm{support}(\{A, B\}) / \mathrm{support}(\{A\})$

20

Association Rules Basics

- Minimum support (min_sup)
  - High ⇒ few frequent itemsets
    - ⇒ few valid rules which occur very often
  - Low ⇒ many valid rules which occur rarely
- Minimum confidence (min_conf)
  - High ⇒ few rules, but all "almost logically true"
  - Low ⇒ many rules, many of them very "uncertain"
- Typical values: min_sup = 2-10%, min_conf = 70-90%

21

Association Rules Basics

- Transaction
  - Relational format `<Tid, item>`: `<1, item1>`, `<1, item2>`, `<2, item3>`
  - Compact format `<Tid, itemset>`: `<1, {item1, item2}>`, `<2, {item3}>`
- Item vs. itemset: single element vs. set of items
- Support of an itemset I: number of transactions containing I
- Minimum support (min_sup): threshold for support
- Frequent itemset: itemset with support ≥ min_sup
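The two transaction formats can be converted into each other mechanically. The following is a minimal sketch, assuming the relational format is available as a list of `(tid, item)` pairs; the function names `to_compact` and `support_count` are illustrative and not part of the lecture material.

```python
from collections import defaultdict


def to_compact(rows):
    """Group relational <Tid, item> rows into compact <Tid, itemset> form."""
    baskets = defaultdict(set)
    for tid, item in rows:
        baskets[tid].add(item)
    return dict(baskets)


def support_count(itemset, baskets):
    """Number of transactions that contain every item of `itemset`."""
    wanted = set(itemset)
    return sum(1 for items in baskets.values() if wanted <= items)


# The three relational rows from the slide.
relational = [(1, "item1"), (1, "item2"), (2, "item3")]
compact = to_compact(relational)
print(compact)
print(support_count({"item1", "item2"}, compact))
```

An itemset is then frequent when its support count, divided by the number of transactions, reaches the chosen minimum support.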

22

Association Rules Basics

- Given: (1) a database of transactions, (2) each transaction is a list of items (purchased by a customer in a visit)
- Find: all rules with minimum support and confidence
- If min. support is 50% and min. confidence is 50%, then
  - A ⇒ C [50%, 66.6%], C ⇒ A [50%, 100%]
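The transaction table for this slide did not survive extraction, so the sketch below uses an assumed four-transaction database, chosen only because it gives the same support and confidence values as the two rules above. The helper names `support` and `confidence` are illustrative.

```python
# Assumed example database (not taken from the slide): four transactions.
transactions = [
    {"A", "B", "C"},
    {"A", "C"},
    {"A", "D"},
    {"B", "E", "F"},
]


def support(itemset, db):
    """Fraction of transactions that contain all items of `itemset`."""
    return sum(1 for t in db if itemset <= t) / len(db)


def confidence(lhs, rhs, db):
    """support(lhs and rhs together) / support(lhs)."""
    return support(lhs | rhs, db) / support(lhs, db)


print(support({"A", "C"}, transactions))       # support of both rules
print(confidence({"A"}, {"C"}, transactions))  # confidence of A => C
print(confidence({"C"}, {"A"}, transactions))  # confidence of C => A
```

Both rules share the same support because support depends only on the union of the two sides; the confidences differ because A occurs in three transactions while C occurs in two.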

23

Association Rule Generation

- Association rule mining is a two-step process
  - STEP 1: Find the frequent itemsets: the sets of items that have minimum support.
    - So-called Apriori trick: a subset of a frequent itemset must also be a frequent itemset
      - i.e., if {AB} is a frequent itemset, both {A} and {B} should be frequent itemsets
    - Iteratively find frequent itemsets with size from 1 to k (k-itemset)
  - STEP 2: Use the frequent itemsets to generate association rules.

24

Frequent Sets with Apriori

- Join step: $C_k$ is generated by joining $L_{k-1}$ with itself
- Prune step: any (k-1)-itemset that is not frequent cannot be a subset of a frequent k-itemset
- Pseudo-code
  - $C_k$: candidate itemsets of size k; $L_k$: frequent itemsets of size k
  - $L_1$ = {frequent items}
  - for (k = 1; $L_k \neq \emptyset$; k++) do begin
    - $C_{k+1}$ = candidates generated from $L_k$
    - for each transaction t in database do
      - increment the count of all candidates in $C_{k+1}$ that are contained in t
    - $L_{k+1}$ = candidates in $C_{k+1}$ with min_support
  - end
  - return $\bigcup_k L_k$
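The pseudo-code above can be turned into a short runnable sketch. This is one straightforward reading of it, not an optimized implementation: `min_support` is taken as an absolute count, candidates are built from pairwise unions followed by the prune step, and the small database `db` is an assumed example.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: count} for all itemsets with count >= min_support."""
    # L1: count single items.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_support}
    frequent = dict(current)
    k = 1
    while current:
        # Join step: unions of two frequent k-itemsets that have size k + 1.
        # Prune step: keep a candidate only if all its k-subsets are frequent.
        candidates = set()
        for a, b in combinations(current, 2):
            union = a | b
            if len(union) == k + 1 and all(
                frozenset(s) in current for s in combinations(union, k)
            ):
                candidates.add(union)
        # One database scan to count the surviving candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        current = {s: c for s, c in counts.items() if c >= min_support}
        frequent.update(current)
        k += 1
    return frequent


# Assumed example database of four transactions.
db = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]
result = apriori(db, min_support=2)
for itemset, count in sorted(result.items(), key=lambda x: (len(x[0]), sorted(x[0]))):
    print(sorted(itemset), count)
```

The loop stops as soon as a level produces no frequent itemsets, which mirrors the `L_k != ∅` condition of the pseudo-code.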

25

Apriori Candidate Generation

- The Apriori principle
  - Any subset of a frequent itemset must be frequent
- $L_3$ = {abc, abd, acd, ace, bcd}
- Self-joining: $L_3 * L_3$
  - abcd from abc and abd
  - acde from acd and ace
- Pruning
  - acde is removed because ade is not in $L_3$
- $C_4$ = {abcd}
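The join and prune steps of this example can be checked with a small sketch. It assumes itemsets are stored as sorted tuples and joins two itemsets when they share all but their last item; `apriori_gen` is an illustrative name.

```python
from itertools import combinations


def apriori_gen(prev_frequent):
    """Generate size-k candidates from frequent (k-1)-itemsets (sorted tuples)."""
    prev = set(prev_frequent)
    # Join step: combine itemsets that agree on everything except the last item.
    joined = set()
    for a in prev:
        for b in prev:
            if a[:-1] == b[:-1] and a[-1] < b[-1]:
                joined.add(a + (b[-1],))
    # Prune step: every (k-1)-subset of a candidate must itself be frequent.
    return {
        c for c in joined
        if all(s in prev for s in combinations(c, len(c) - 1))
    }


L3 = {tuple(s) for s in ["abc", "abd", "acd", "ace", "bcd"]}
C4 = apriori_gen(L3)
print(C4)
```

Here the join yields abcd and acde, and the prune step discards acde because ade (and cde) is missing from L3.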

26

Apriori Example (1/6)

27

Apriori Example (2/6)

28

Apriori Example (3/6)

29

Apriori Example (4/6)

Search Space of Database D

12345

1234 1235 1245 1345 2345

123 124 125 134 135 145 234 235 245 345

12 13 14 15 23 24 25 34 35 45

1 2 3 4 5

30

Apriori Example (5/6)

Apriori Trick on Level 1

12345

1234 1235 1245 1345 2345

123 124 125 134 135 145 234 235 245 345

12 13 14 15 23 24 25 34 35 45

1 2 3 4 5

31

Apriori Example (6/6)

Apriori Trick on Level 2

12345

1234 1235 1245 1345 2345

123 124 125 134 135 145 234 235 245 345

12 13 14 15 23 24 25 34 35 45

1 2 3 4 5

32

Is Apriori Fast Enough?

- The core of the Apriori algorithm
  - Use frequent (k − 1)-itemsets to generate candidate frequent k-itemsets
  - Use database scan and pattern matching to collect counts for the candidate itemsets
- The bottleneck of Apriori: candidate generation
  - Huge candidate sets
    - $10^4$ frequent 1-itemsets will generate $10^7$ candidate 2-itemsets
    - To discover a frequent pattern of size 100, e.g., $\{a_1, a_2, \ldots, a_{100}\}$, one needs to generate $2^{100} \approx 10^{30}$ candidates.
  - Multiple scans of database
    - Needs (n + 1) scans, where n is the length of the longest pattern
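The orders of magnitude quoted above are easy to verify. The sketch below simply counts the pairs that can be formed from 10^4 items and the subsets of a 100-item pattern; the variable names are illustrative.

```python
import math

# Candidate 2-itemsets: every unordered pair of the 10^4 frequent items.
pairs = math.comb(10**4, 2)

# Subsets of a 100-item pattern; every non-empty one is itself frequent.
subsets = 2**100

print(f"{pairs:,}")      # on the order of 10^7
print(f"{subsets:.3e}")  # on the order of 10^30
```

The exact pair count is a little under 5 × 10^7, so the figure on the slide should be read as an order-of-magnitude estimate.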

33

Is Apriori Fast Enough?

- In practice
  - For the basic Apriori approach, the number of attributes in a row is usually much more critical than the number of transaction rows
- For example
  - 50 attributes each having 1-3 values, 100.000 rows (not very bad)
  - 50 attributes each having 10-100 values, 100.000 rows (quite bad)
  - 10.000 attributes each having 5-10 values, 100 rows (very bad...)
- Notice
  - One attribute might have several different values
  - Association rule algorithms typically treat every attribute-value pair as one attribute (2 attributes with 5 values each ⇒ "10 attributes")
- There are some ways to overcome the problem...
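The remark about attribute-value pairs can be illustrated with a short sketch. The attribute names and values below are made up for the example; the point is only that each distinct `attribute=value` pair becomes its own item.

```python
# Hypothetical attribute domains: 2 attributes with 5 values each.
domains = {
    "colour": ["red", "green", "blue", "black", "white"],
    "size": ["XS", "S", "M", "L", "XL"],
}


def row_to_items(row):
    """Encode one relational row as a set of attribute=value items."""
    return {f"{attr}={value}" for attr, value in row.items()}


# All items the mining algorithm may have to consider.
all_items = {f"{attr}={value}" for attr, values in domains.items() for value in values}

print(len(all_items))
print(sorted(row_to_items({"colour": "red", "size": "M"})))
```

With 2 attributes of 5 values each the algorithm works with 10 distinct items, which is why wide value domains inflate the search space so quickly.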

34

Improving Apriori Performance

- Hash-based itemset counting
  - A k-itemset whose corresponding hashing bucket count is below the threshold cannot be frequent
- Transaction reduction
  - A transaction that does not contain any frequent k-itemset is useless in subsequent scans
- Partitioning
  - Any itemset that is potentially frequent in DB must be frequent in at least one of the partitions of DB
- Sampling
  - Mining on a subset of the given data, with a lower support threshold and a method to determine the completeness

35

Association Rules from Itemsets

- Pseudo-code
  - for every frequent itemset l
    - generate all nonempty subsets s of l
    - for every nonempty subset s of l
      - output the rule "s ⇒ (l − s)" if support(l)/support(s) ≥ min_conf, where min_conf is the minimum confidence threshold
- E.g. frequent set l = {abc}, subsets s = {a, b, c, ab, ac, bc}
  - a ⇒ b, a ⇒ c, b ⇒ c
  - a ⇒ bc, b ⇒ ac, c ⇒ ab
  - ab ⇒ c, ac ⇒ b, bc ⇒ a
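A minimal sketch of the rule-generation step follows. It assumes the support counts of all frequent itemsets are already available in a dictionary, and it only uses proper subsets as left-hand sides so that the right-hand side is never empty. The support counts are invented for illustration.

```python
from itertools import combinations


def generate_rules(support, min_conf):
    """Return (lhs, rhs, confidence) for every rule meeting min_conf."""
    rules = []
    for itemset, sup in support.items():
        if len(itemset) < 2:
            continue
        # Every nonempty proper subset s of l gives a rule s => (l - s).
        for r in range(1, len(itemset)):
            for lhs in combinations(sorted(itemset), r):
                lhs = frozenset(lhs)
                conf = sup / support[lhs]
                if conf >= min_conf:
                    rules.append((lhs, itemset - lhs, conf))
    return rules


# Hypothetical support counts for the frequent set abc and its subsets.
support = {
    frozenset("a"): 4, frozenset("b"): 3, frozenset("c"): 3,
    frozenset("ab"): 3, frozenset("ac"): 2, frozenset("bc"): 2,
    frozenset("abc"): 2,
}

rules = generate_rules(support, min_conf=0.7)
for lhs, rhs, conf in rules:
    print("".join(sorted(lhs)), "=>", "".join(sorted(rhs)), round(conf, 2))
```

No database scan is needed here: every confidence is a ratio of two already known support counts, which is why this step is fast compared with finding the frequent itemsets.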

36

Association Rule Generation

- Rule 1 to remember
  - Generating frequent sets is slow (especially itemsets of size 2)
  - Generating association rules from frequent itemsets is fast
- Rule 2 to remember
  - For itemset generation, the support threshold is used
  - For association rules, the confidence threshold is used
- What happens in reality: how long does it take to create frequent sets and association rules?
  - Let's take small real-life examples
  - Experiments are made with a Citum 4/275 Alpha server with 512 MB of main memory, Red Hat Linux release 5.0 (kernel 2.0.30)

37

Performance Example (1/4)

Alarms

38

Performance Example (2/4)

- Telecom data containing alarms
  - 1234 EL1 PCM 940926082623 A1 ALARMTEXT..
  - Fields: alarm number (1234), alarming network element (EL1), alarm type (PCM), date and time (940926082623), alarm severity class (A1)
- Example data 1
  - 43 478 alarms (26.9.94 - 5.10.94, about 10 days)
  - 2 234 different types of alarms, 23 attributes, 5503 different values
- Example data 2
  - 73 679 alarms (1.2.95 - 22.3.95, about 7 weeks)
  - 287 different types of alarms, 19 attributes, 3411 different values

39

Performance Example (3/4)

Example rule: alarm_number:1234, alarm_type:PCM ⇒ alarm_severity:A1 [2%, 45%]

40

Performance Example (4/4)

- Example results for data 1
  - Frequency threshold: 0.1 (lowest possible with this data)
  - Candidate sets: 109 719, time 12.02 s
  - Frequent sets: 79 311, time 64 855.73 s
  - Rules: 3 750 000, time 860.60 s
- Example results for data 2
  - Frequency threshold: 0.1 (lowest possible with this data)
  - Candidate sets: 43 600, time 1.70 s
  - Frequent sets: 13 321, time 10 478.93 s
  - Rules: 509 075, time 143.35 s

41

Selecting the Interesting Rules?

- Usually the result set is very big; one must select the interesting rules based on
  - Objective measures
    - Two popular measurements: support and confidence
  - Subjective measures (Silberschatz & Tuzhilin, KDD'95)
    - A rule (pattern) is interesting if
      - it is unexpected (surprising to the user) and/or
      - actionable (the user can do something with it)
- These issues will be discussed with KDD processes

42

Boolean vs. Quantitative Rules

- Boolean vs. quantitative association rules (based on the types of values handled)
  - Boolean: the rule concerns associations between the presence or absence of items (e.g. "buys A" or "does not buy A")
    - buys:SQLServer, buys:DMBook ⇒ buys:DBMiner [0.2%, 60%]
    - buys(x, "SQLServer") ∧ buys(x, "DMBook") ⇒ buys(x, "DBMiner") [0.2%, 60%]
  - Quantitative: the rule concerns associations between quantitative items or attributes
    - age:30..39, income:42..48K ⇒ buys:PC [1%, 75%]
    - age(x, "30..39") ∧ income(x, "42..48K") ⇒ buys(x, "PC") [1%, 75%]

43

Quantitative Rules

- Quantitative attributes: e.g., age, income, height, weight
- Categorical attributes: e.g., color of car
- Problem: too many distinct values for quantitative attributes
- Solution: transform quantitative attributes into categorical ones via discretization ⇒ more about this in the seminar!
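Discretization can be as simple as mapping each numeric value to a named interval. The sketch below assumes fixed, hand-picked age intervals; the interval boundaries and the function name `discretize` are illustrative, and real discretization methods choose the intervals from the data.

```python
def discretize(value, bins):
    """Map a number to the label of the first interval that contains it."""
    for low, high in bins:
        if low <= value <= high:
            return f"{low}..{high}"
    return "other"


# Assumed intervals, written in the same style as the rule age:30..39.
age_bins = [(20, 29), (30, 39), (40, 49)]

ages = [23, 34, 39, 41, 70]
print([discretize(a, age_bins) for a in ages])
```

After this step an attribute such as age has only a handful of categorical values, so it can be treated like any other item.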

44

Single- vs. Multi-dimensional Rules

- Single-dimensional vs. multi-dimensional associations
  - Single-dimensional: items or attributes in the rule refer to only one dimension (e.g., to "buys")
    - Beer, Chips ⇒ Bread [0.4%, 52%]
    - buys(x, "Beer") ∧ buys(x, "Chips") ⇒ buys(x, "Bread") [0.4%, 52%]
  - Multi-dimensional: items or attributes in the rule refer to two or more dimensions (e.g., "buys", "time_of_transaction", "customer_category")
    - In the following example: nationality, age, income

45

Multi-dimensional Rules

RULES:

- nationality = French ⇒ income = high [50%, 100%]
- income = high ⇒ nationality = French [50%, 75%]
- age = 50 ⇒ nationality = Italian [33%, 100%]

46

Single- vs. Multi-level Rules

- Single-level vs. multi-level associations
  - Single-level: associations between items or attributes from the same level of abstraction (i.e., from the same level of hierarchy)
    - Beer, Chips ⇒ Bread [0.4%, 52%]
  - Multi-level: associations between items or attributes from different levels of abstraction (i.e., from different levels of hierarchy)
    - Beer:Karjala, Chips:Estrella:Barbeque ⇒ Bread [0.1%, 74%]
- More about multi-level association rules on the next slides

47

Multi-level Association Rules

- It is difficult to find interesting patterns at a too primitive level
  - high support: too few rules
  - low support: too many rules, most uninteresting
- Approach: reason at a suitable level of abstraction
- A common form of background knowledge is that an attribute may be generalized or specialized according to a hierarchy of concepts
- Multi-level association rules: rules which combine associations with a hierarchy of concepts

48

Multi-level Association Rules

- Items often form hierarchies
- Items at the lower level are expected to have lower support
- Rules regarding itemsets at appropriate levels could be quite useful
- Transaction database can be encoded based on dimensions and levels

49

Multi-level Association Rules

[Figure: item hierarchy with encoded items, e.g. 121 = milk - 2% - Fraser]

50

Multi-level Association Rules

- A top-down, progressive deepening approach
  - First find high-level strong rules
    - milk ⇒ bread [20%, 60%]
  - Then find their lower-level "weaker" rules
    - 2% milk ⇒ wheat bread [6%, 50%]
- Variations at mining multi-level association rules
  - Level-crossed association rules
    - milk ⇒ wheat bread
  - Association rules with multiple, alternative hierarchies
    - milk ⇒ Wonder bread

51

Multi-level Association Rules

- Generalizing/specializing values of attributes
  - ...from specialized to general: support of rules increases (new rules may become valid)
  - ...from general to specialized: support of rules decreases (rules may become invalid when their support falls under the threshold)
- Too low level ⇒ too many rules and too primitive
  - Pepsi light 0.5 l bottle ⇒ Taffel Barbeque Chips 200 g
- Too high level ⇒ uninteresting rules
  - Food ⇒ Clothes

52

Redundancy Filtering

- Some rules may be redundant due to "ancestor" relationships between items
- Example (milk has 4 subclasses)
  - milk ⇒ wheat bread [support = 8%, confidence = 70%]
  - 2% milk ⇒ wheat bread [support = 2%, confidence = 72%]
- We say the first rule is an ancestor of the second rule
- A rule is redundant if its support is close to the "expected" value, based on the rule's ancestor
  - Above, the second rule could be redundant
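The redundancy check in this example is a one-line calculation. The sketch below assumes that the four subclasses of milk are equally common, so each is expected to inherit a quarter of the ancestor rule's support; the tolerance of 25% and the function names are illustrative choices, not part of the lecture.

```python
def expected_support(ancestor_support, share):
    """Support a descendant rule would have if it only mirrored its ancestor."""
    return ancestor_support * share


def is_redundant(actual, expected, tolerance=0.25):
    """True if the actual support is within `tolerance` of the expected one."""
    return abs(actual - expected) <= tolerance * expected


# milk => wheat bread has support 8%; milk has 4 subclasses (assumed equal shares).
expected = expected_support(0.08, 1 / 4)

print(expected)                      # expected support of the 2% milk rule
print(is_redundant(0.02, expected))  # the observed 2% matches the expectation
```

Since the observed support of 2% equals the expected value and the confidence (72% vs. 70%) is also close, the descendant rule adds little information beyond its ancestor.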

53

Uniform vs. Reduced Support

- Uniform support: the same minimum support for all levels
  - One minimum support threshold. No need to examine itemsets containing any item whose ancestors do not have minimum support.
  - Lower-level items do not occur as frequently. If the support threshold is
    - too high ⇒ miss low-level associations
    - too low ⇒ generate too many high-level associations
- Reduced support: reduced minimum support at lower levels

54

Uniform Support

Multi-level mining with uniform support

- Level 1 (min_sup = 5%): Milk [support = 10%]
- Level 2 (min_sup = 5%): 2% Milk [support = 6%], Skim Milk [support = 4%]

55

Reduced Support

Multi-level mining with reduced support

- Level 1 (min_sup = 5%): Milk [support = 10%]
- Level 2 (min_sup = 3%): 2% Milk [support = 6%], Skim Milk [support = 4%]
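The difference between the two schemes shows up directly when the thresholds from the two slides are applied to the same supports. This is a minimal sketch; the data layout (item mapped to a level and a support) and the function name are assumptions made for the example.

```python
# Item -> (hierarchy level, support), using the numbers from the slides.
supports = {
    "milk": (1, 0.10),
    "2% milk": (2, 0.06),
    "skim milk": (2, 0.04),
}


def frequent_items(supports, min_sup_by_level):
    """Items whose support reaches the threshold of their own level."""
    return {
        item
        for item, (level, sup) in supports.items()
        if sup >= min_sup_by_level[level]
    }


uniform = frequent_items(supports, {1: 0.05, 2: 0.05})
reduced = frequent_items(supports, {1: 0.05, 2: 0.03})

print(sorted(uniform))
print(sorted(reduced))
```

With a uniform 5% threshold skim milk is lost at level 2, while the reduced 3% threshold keeps it.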

56

Progressive Deepening

- A top-down, progressive deepening approach
  - First mine high-level frequent items
    - milk (15%), bread (10%)
  - Then mine their lower-level "weaker" frequent itemsets
    - 2% milk (5%), wheat bread (4%)
- Different min_support thresholds across multi-levels lead to different algorithms
  - If adopting the same min_support across multi-levels
    - then do not examine t if any of t's ancestors is infrequent
  - If adopting reduced min_support at lower levels
    - then examine only those descendants whose ancestor's support is frequent/non-negligible

57

Constraint-Based Mining

- Interactive, exploratory mining of gigabytes of data?
  - Could it be real? By making good use of constraints!
- What kinds of constraints can be used in mining?
  - Knowledge type constraint: classification, association, etc.
  - Data constraint: SQL-like queries
    - Find product pairs sold together in Vancouver in Dec. '98
  - Dimension/level constraints
    - In relevance to region, price, brand, customer category
  - Interestingness constraints
    - Strong rules (min_support ≥ 3%, min_confidence ≥ 60%)
  - Rule constraints (see the next slides)

58

Rule Constraints

- Two kinds of rule constraints
  - Rule form constraints: meta-rule guided mining
    - Metarule: P(X, Y) ∧ Q(X, W) ⇒ takes(X, "database systems")
    - Matching rule: age(X, "30..39") ∧ income(X, "41K..60K") ⇒ takes(X, "database systems")
  - Rule content constraint: constraint-based query optimization (Ng, et al., SIGMOD'98)
    - sum(LHS) < 100 ∧ min(LHS) > 20 ∧ count(LHS) > 3 ∧ sum(RHS) > 1000

59

Rule Constraints

- 1-variable vs. 2-variable constraints (Lakshmanan, et al., SIGMOD'99)
  - 1-var: a constraint confining only one side (L/R) of the rule, e.g.,
    - sum(LHS) < 100 ∧ min(LHS) > 20 ∧ count(LHS) > 3 ∧ sum(RHS) > 1000
  - 2-var: a constraint confining both sides (L and R)
    - sum(LHS) < min(RHS) ∧ max(RHS) < 5 * sum(LHS)
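Both example constraints can be written as plain predicates over the two sides of a rule. The sketch assumes each side is given as a list of numeric item values (for instance prices); the function names and the sample values are illustrative. A real constraint-based miner would push such predicates into candidate generation instead of filtering finished rules.

```python
def one_var_ok(lhs, rhs):
    """Conjunction of 1-variable constraints: each term looks at one side only."""
    return sum(lhs) < 100 and min(lhs) > 20 and len(lhs) > 3 and sum(rhs) > 1000


def two_var_ok(lhs, rhs):
    """2-variable constraint: each term relates the two sides to each other."""
    return sum(lhs) < min(rhs) and max(rhs) < 5 * sum(lhs)


# Hypothetical item values for the two sides of a rule.
lhs = [21, 22, 23, 24]  # sum = 90, min = 21, count = 4

print(one_var_ok(lhs, [600, 500]))  # sum(RHS) = 1100
print(two_var_ok(lhs, [100, 400]))  # min(RHS) = 100, max(RHS) = 400 < 450
print(two_var_ok(lhs, [80, 400]))   # fails: sum(LHS) = 90 is not below 80
```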

60

Summary

- Association rule mining
  - Probably the most significant contribution from the database community in KDD
  - Rather simple concept, but the "thinking" gives a basis for extensions and other methods
  - A large number of papers have been published
- Many interesting issues have been explored
- Interesting research directions
  - Association analysis in other types of data: spatial data, multimedia data, time series data, etc.

61

References (1/5)

- R. Agarwal, C. Aggarwal, and V. V. V. Prasad. A tree projection algorithm for generation of frequent itemsets. Journal of Parallel and Distributed Computing (Special Issue on High Performance Data Mining), 2000.
- R. Agrawal, T. Imielinski, and A. Swami. Mining association rules between sets of items in large databases. SIGMOD'93, 207-216, Washington, D.C.
- R. Agrawal and R. Srikant. Fast algorithms for mining association rules. VLDB'94, 487-499, Santiago, Chile.
- R. Agrawal and R. Srikant. Mining sequential patterns. ICDE'95, 3-14, Taipei, Taiwan.
- R. J. Bayardo. Efficiently mining long patterns from databases. SIGMOD'98, 85-93, Seattle, Washington.
- S. Brin, R. Motwani, and C. Silverstein. Beyond market basket: Generalizing association rules to correlations. SIGMOD'97, 265-276, Tucson, Arizona.
- S. Brin, R. Motwani, J. D. Ullman, and S. Tsur. Dynamic itemset counting and implication rules for market basket analysis. SIGMOD'97, 255-264, Tucson, Arizona, May 1997.
- K. Beyer and R. Ramakrishnan. Bottom-up computation of sparse and iceberg cubes. SIGMOD'99, 359-370, Philadelphia, PA, June 1999.
- D. W. Cheung, J. Han, V. Ng, and C. Y. Wong. Maintenance of discovered association rules in large databases: An incremental updating technique. ICDE'96, 106-114, New Orleans, LA.
- M. Fang, N. Shivakumar, H. Garcia-Molina, R. Motwani, and J. D. Ullman. Computing iceberg queries efficiently. VLDB'98, 299-310, New York, NY, Aug. 1998.

62

References (2/5)

- G. Grahne, L. Lakshmanan, and X. Wang. Efficient mining of constrained correlated sets. ICDE'00, 512-521, San Diego, CA, Feb. 2000.
- Y. Fu and J. Han. Meta-rule-guided mining of association rules in relational databases. KDOOD'95, 39-46, Singapore, Dec. 1995.
- T. Fukuda, Y. Morimoto, S. Morishita, and T. Tokuyama. Data mining using two-dimensional optimized association rules: Scheme, algorithms, and visualization. SIGMOD'96, 13-23, Montreal, Canada.
- E.-H. Han, G. Karypis, and V. Kumar. Scalable parallel data mining for association rules. SIGMOD'97, 277-288, Tucson, Arizona.
- J. Han, G. Dong, and Y. Yin. Efficient mining of partial periodic patterns in time series database. ICDE'99, Sydney, Australia.
- J. Han and Y. Fu. Discovery of multiple-level association rules from large databases. VLDB'95, 420-431, Zurich, Switzerland.
- J. Han, J. Pei, and Y. Yin. Mining frequent patterns without candidate generation. SIGMOD'00, 1-12, Dallas, TX, May 2000.
- T. Imielinski and H. Mannila. A database perspective on knowledge discovery. Communications of the ACM, 39:58-64, 1996.
- M. Kamber, J. Han, and J. Y. Chiang. Metarule-guided mining of multi-dimensional association rules using data cubes. KDD'97, 207-210, Newport Beach, California.
- M. Klemettinen, H. Mannila, P. Ronkainen, H. Toivonen, and A. I. Verkamo. Finding interesting rules from large sets of discovered association rules. CIKM'94, 401-408, Gaithersburg, Maryland.

63

References (3/5)

- F. Korn, A. Labrinidis, Y. Kotidis, and C. Faloutsos. Ratio rules: A new paradigm for fast, quantifiable data mining. VLDB'98, 582-593, New York, NY.
- B. Lent, A. Swami, and J. Widom. Clustering association rules. ICDE'97, 220-231, Birmingham, England.
- H. Lu, J. Han, and L. Feng. Stock movement and n-dimensional inter-transaction association rules. SIGMOD Workshop on Research Issues on Data Mining and Knowledge Discovery (DMKD'98), 121-127, Seattle, Washington.
- H. Mannila, H. Toivonen, and A. I. Verkamo. Efficient algorithms for discovering association rules. KDD'94, 181-192, Seattle, WA, July 1994.
- H. Mannila, H. Toivonen, and A. I. Verkamo. Discovery of frequent episodes in event sequences. Data Mining and Knowledge Discovery, 1:259-289, 1997.
- R. Meo, G. Psaila, and S. Ceri. A new SQL-like operator for mining association rules. VLDB'96, 122-133, Bombay, India.
- R. J. Miller and Y. Yang. Association rules over interval data. SIGMOD'97, 452-461, Tucson, Arizona.
- R. Ng, L. V. S. Lakshmanan, J. Han, and A. Pang. Exploratory mining and pruning optimizations of constrained associations rules. SIGMOD'98, 13-24, Seattle, Washington.
- N. Pasquier, Y. Bastide, R. Taouil, and L. Lakhal. Discovering frequent closed itemsets for association rules. ICDT'99, 398-416, Jerusalem, Israel, Jan. 1999.

64

References (4/5)

- J. S. Park, M. S. Chen, and P. S. Yu. An effective hash-based algorithm for mining association rules. SIGMOD'95, 175-186, San Jose, CA, May 1995.
- J. Pei, J. Han, and R. Mao. CLOSET: An Efficient Algorithm for Mining Frequent Closed Itemsets. DMKD'00, 11-20, Dallas, TX, May 2000.
- J. Pei and J. Han. Can We Push More Constraints into Frequent Pattern Mining? KDD'00, Boston, MA, Aug. 2000.
- G. Piatetsky-Shapiro. Discovery, analysis, and presentation of strong rules. In G. Piatetsky-Shapiro and W. J. Frawley, editors, Knowledge Discovery in Databases, 229-238. AAAI/MIT Press, 1991.
- B. Ozden, S. Ramaswamy, and A. Silberschatz. Cyclic association rules. ICDE'98, 412-421, Orlando, FL.
- S. Ramaswamy, S. Mahajan, and A. Silberschatz. On the discovery of interesting patterns in association rules. VLDB'98, 368-379, New York, NY.
- S. Sarawagi, S. Thomas, and R. Agrawal. Integrating association rule mining with relational database systems: Alternatives and implications. SIGMOD'98, 343-354, Seattle, WA.
- A. Savasere, E. Omiecinski, and S. Navathe. An efficient algorithm for mining association rules in large databases. VLDB'95, 432-443, Zurich, Switzerland.
- A. Savasere, E. Omiecinski, and S. Navathe. Mining for strong negative associations in a large database of customer transactions. ICDE'98, 494-502, Orlando, FL, Feb. 1998.

65

References (5/5)

- C. Silverstein, S. Brin, R. Motwani, and J. Ullman. Scalable techniques for mining causal structures. VLDB'98, 594-605, New York, NY.
- R. Srikant and R. Agrawal. Mining generalized association rules. VLDB'95, 407-419, Zurich, Switzerland, Sept. 1995.
- R. Srikant and R. Agrawal. Mining quantitative association rules in large relational tables. SIGMOD'96, 1-12, Montreal, Canada.
- R. Srikant, Q. Vu, and R. Agrawal. Mining association rules with item constraints. KDD'97, 67-73, Newport Beach, California.
- H. Toivonen. Sampling large databases for association rules. VLDB'96, 134-145, Bombay, India, Sept. 1996.
- D. Tsur, J. D. Ullman, S. Abiteboul, C. Clifton, R. Motwani, and S. Nestorov. Query flocks: A generalization of association-rule mining. SIGMOD'98, 1-12, Seattle, Washington.
- K. Yoda, T. Fukuda, Y. Morimoto, S. Morishita, and T. Tokuyama. Computing optimized rectilinear regions for association rules. KDD'97, 96-103, Newport Beach, CA, Aug. 1997.
- M. J. Zaki, S. Parthasarathy, M. Ogihara, and W. Li. Parallel algorithm for discovery of association rules. Data Mining and Knowledge Discovery, 1:343-374, 1997.
- M. Zaki. Generating Non-Redundant Association Rules. KDD'00, Boston, MA, Aug. 2000.
- O. R. Zaiane, J. Han, and H. Zhu. Mining Recurrent Items in Multimedia with Progressive Resolution Refinement. ICDE'00, 461-470, San Diego, CA, Feb. 2000.

66

Course Organization

Next Week

- Lecture 31.10.: Episodes and recurrent patterns
  - Mika gives the lecture
- Exercise 1.11.: Associations
  - Pirjo takes care of you! :-)
- Seminar 2.11.: Associations
  - Pirjo gives the lecture
  - 2 group presentations

67

Seminar Presentations

- Seminar presentations
  - Articles are given on the previous week's Wed
  - Presentation as an HTML page (around 3-5 printed pages), due by the start of the seminar
  - Can be either an HTML page or a printable document in PostScript/PDF format
  - 30 minutes of presentation
  - 5-15 minutes of discussion
  - Active participation

68

Seminar Presentations

- Seminar presentations
  - Try to understand the "message" in the article
  - Try to present the basic ideas as clearly as possible, use examples
  - Do not present detailed mathematics or algorithms
  - Test: do you understand your own presentation?
  - In the presentation, use PowerPoint or conventional slides

69

Seminar Presentations/Groups 1-2

Quantitative Rules

R. Srikant, R. Agrawal: "Mining Quantitative Association Rules in Large Relational Tables", Proc. of the ACM SIGMOD 1996

MINERULE

Rosa Meo, Giuseppe Psaila, Stefano Ceri: "A New SQL-like Operator for Mining Association Rules", VLDB 1996, 122-133

70

Introduction to Data Mining (DM)

Thank you for your attention and have a nice

weekend! Thanks to Jiawei Han from Simon Fraser

University for his slides which greatly helped

in preparing this lecture! Also thanks to Fosca

Giannotti and Dino Pedreschi from Pisa for their

slides.