The binary symmetric channel (BSC) is an idealised model of a noisy channel.

1 / 13

Title:

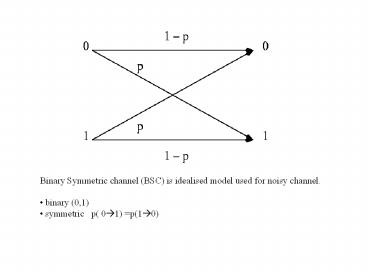

Binary Symmetric channel (BSC) is idealised model used for noisy channel.

Description:

symmetric p( 0 1) =p(1 0) Binary Symmetric Channel (BSC) ... Summary of basic formulae by Venn Diagram. H(x) H(y) Error entropy. Equivocation ... – PowerPoint PPT presentation

Number of Views:611

Avg rating:3.0/5.0

Title: Binary Symmetric channel (BSC) is idealised model used for noisy channel.

1

- The binary symmetric channel (BSC) is an idealised model of a noisy channel.

- binary: the symbols are 0 and 1

- symmetric: $p(0 \to 1) = p(1 \to 0)$

2

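[Figure: forward transition probabilities of the noisy binary symmetric channel. Inputs X0 and X1, with source probabilities P(X0) and P(X1), connect to outputs Y0 and Y1, with probabilities P(Y0) and P(Y1), through four branches labelled P(Y0|X0), P(Y1|X0), P(Y0|X1) and P(Y1|X1).]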

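Binary Symmetric Channel (BSC). Transmitted source symbols: $X_i$, $i = 0, 1$. Received symbols: $Y_j$, $j = 0, 1$. Given the source probabilities $P(X_i)$, the forward transition probabilities are

$P(Y_j \mid X_i) = P_e$ if $i \ne j$

$P(Y_j \mid X_i) = 1 - P_e$ if $i = j$

where $P_e$ is the given error rate.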
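The channel model above can be sketched as a short simulation. This is a minimal illustration, not part of the original slides: the function name `bsc_transmit` and its `rng` parameter are assumptions introduced here, and each symbol is assumed to be flipped independently of the others.

```python
import random

def bsc_transmit(bits, pe, rng=None):
    """Pass a sequence of 0/1 symbols through a binary symmetric channel.

    Each symbol is flipped independently with probability pe (the error rate),
    regardless of whether it is a 0 or a 1.
    """
    rng = rng or random.Random()
    return [b ^ 1 if rng.random() < pe else b for b in bits]

sent = [0, 1, 1, 0, 1, 0, 0, 1]
received = bsc_transmit(sent, pe=0.125, rng=random.Random(0))
errors = sum(s != r for s, r in zip(sent, received))
print(received, errors)
```

With `pe=0` the sequence is returned unchanged, and with `pe=1` every symbol is inverted.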

3

Calculate the average mutual information: the average amount of source information acquired per received symbol, as distinguished from that per source symbol, which is given by the entropy H(X).

Step 1: $P(Y_j, X_i) = P(X_i)\,P(Y_j \mid X_i)$, for $i = 0, 1$ and $j = 0, 1$.

Step 2: $P(Y_j) = \sum_i P(Y_j, X_i)$, for $j = 0, 1$.

(The probability of receiving a particular $Y_j$ is the sum of the joint probabilities over all $X_i$. For example, the probability of receiving 1 is the probability of sending 1 and receiving 1 plus the probability of sending 0 and receiving 1; that is, the probability of sending 1 and receiving it correctly plus the probability of sending 0 and receiving it wrongly.)
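Steps 1 and 2 can be sketched in a few lines of code. The function name `joint_and_received` and the list layout are assumptions made for this illustration; the numbers use equiprobable source symbols and an error rate of 1/8.

```python
def joint_and_received(p_x, pe):
    """Steps 1 and 2 for a BSC.

    p_x : [P(X0), P(X1)], the source probabilities
    pe  : the channel error rate
    Returns (joint, p_y) with joint[i][j] = P(Yj, Xi) and p_y[j] = P(Yj).
    """
    # Forward transition probabilities P(Yj | Xi): 1 - pe if i == j, else pe.
    p_y_given_x = [[1 - pe if i == j else pe for j in range(2)] for i in range(2)]
    # Step 1: P(Yj, Xi) = P(Xi) * P(Yj | Xi)
    joint = [[p_x[i] * p_y_given_x[i][j] for j in range(2)] for i in range(2)]
    # Step 2: P(Yj) = sum over i of P(Yj, Xi)
    p_y = [joint[0][j] + joint[1][j] for j in range(2)]
    return joint, p_y

joint, p_y = joint_and_received([0.5, 0.5], 0.125)
print(joint)  # [[0.4375, 0.0625], [0.0625, 0.4375]]
print(p_y)    # [0.5, 0.5]
```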

4

Step 3: $I(Y_j, X_i) = \log \dfrac{P(X_i \mid Y_j)}{P(X_i)} = \log \dfrac{P(Y_j, X_i)}{P(X_i)\,P(Y_j)}$, for $i = 0, 1$ and $j = 0, 1$.

(This quantifies the amount of information conveyed when $X_i$ is transmitted and $Y_j$ is received. Over a perfect noiseless channel it is the self-information of $X_i$, because each received symbol uniquely identifies a transmitted symbol, with $P(X_i \mid Y_j) = 1$. If the channel is very noisy, or communication breaks down, then $P(Y_j, X_i) = P(X_i)\,P(Y_j)$ and this quantity is zero: no information has been transferred.)

Step 4: $I(X, Y) = I(Y, X) = \sum_i \sum_j P(Y_j, X_i) \log \dfrac{P(X_i \mid Y_j)}{P(X_i)} = \sum_i \sum_j P(Y_j, X_i) \log \dfrac{P(Y_j, X_i)}{P(X_i)\,P(Y_j)}$
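The four steps can be combined into one small function. This is a sketch under stated assumptions: the name `average_mutual_information` is introduced here, logarithms are taken to base 2 so the result is in bits, and pairs with zero joint probability are skipped because they contribute nothing to the sum.

```python
from math import log2

def average_mutual_information(p_x, pe):
    """Steps 1 to 4 for a BSC, returning I(X, Y) in bits per symbol."""
    total = 0.0
    for i in range(2):
        for j in range(2):
            # Step 1: P(Yj, Xi) = P(Xi) * P(Yj | Xi)
            p_joint = p_x[i] * ((1 - pe) if i == j else pe)
            # Step 2: P(Yj) = sum over k of P(Xk) * P(Yj | Xk)
            p_yj = sum(p_x[k] * ((1 - pe) if k == j else pe) for k in range(2))
            if p_joint > 0:
                # Steps 3 and 4: weight log P(Yj,Xi) / (P(Xi) P(Yj)) by P(Yj,Xi)
                total += p_joint * log2(p_joint / (p_x[i] * p_yj))
    return total

print(average_mutual_information([0.5, 0.5], 0.0))              # 1.0
print(average_mutual_information([0.5, 0.5], 0.5))              # 0.0
print(round(average_mutual_information([0.5, 0.5], 0.125), 2))  # 0.46
```

The first two calls reproduce the limiting cases described in Step 3: a noiseless channel transfers the full 1 bit of an equiprobable binary source, and a channel with an error rate of 1/2 transfers nothing.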

5

- Equivocation

Equivocation represents the destructive effect of noise, or equivalently the additional information needed to make the reception correct.

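[Figure: a transmitter sends x over a noisy channel to a receiver, which receives y. A hypothetical observer sees both x and y and reports to the receiver over a separate noiseless channel.]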

The observer looks at the transmitted and received digits: if they are the same it reports a 1, if they are different a 0.

6

x M M S S M M M S M

y M S S S M M M S S

observer 1 0 1 1 1 1 1 1 0
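The observer's rule can be checked against the sequences above with a short sketch. The function name `observer` is an assumption made for this illustration.

```python
def observer(x, y):
    """Report 1 where the transmitted and received digits agree, 0 where they differ."""
    return [1 if a == b else 0 for a, b in zip(x, y)]

x = "MMSSMMMSM"
y = "MSSSMMMSS"
report = observer(x, y)
print(report)                         # [1, 0, 1, 1, 1, 1, 1, 1, 0]
print(report.count(0) / len(report))  # fraction of 0s in this short sample
```

Over a long sequence the fraction of 0s in the report approaches the channel error probability.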

The information sent by the observer is easily evaluated as $-[p(0)\log p(0) + p(1)\log p(1)]$ applied to the binary string. The probability of 0 is just the channel error probability.

Example: A binary system produces Marks and Spaces with equal probabilities, 1/8 of all pulses being received in error. Find the information sent by the observer.

The information sent by the observer is $-[\tfrac{7}{8}\log_2\tfrac{7}{8} + \tfrac{1}{8}\log_2\tfrac{1}{8}] = 0.54$ bits. Since the input information is 1 bit/symbol, the net information is $1 - 0.54 = 0.46$ bits, agreeing with previous results.
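The arithmetic in this example can be reproduced with a minimal sketch. The function name `observer_information` is an assumption introduced here; logarithms are to base 2.

```python
from math import log2

def observer_information(p_error):
    """Entropy in bits of the observer's binary string, where P(0) = p_error."""
    total = 0.0
    for p in (p_error, 1 - p_error):
        if p > 0:            # p * log2(p) tends to 0 as p tends to 0
            total -= p * log2(p)
    return total

h = observer_information(1 / 8)
print(round(h, 2))       # 0.54
print(round(1 - h, 2))   # 0.46
```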

7

The noise in the system has destroyed 0.54 bits of information; that is, the equivocation is 0.54 bits.

- General expression for equivocation

Consider a specific pair of transmitted and received digits x, y.

- Noisy channel: probability change $p(x) \to p(x \mid y)$

- Receiver: probability correction $p(x \mid y) \to 1$

The information provided by the observer is $-\log p(x \mid y)$.

Averaging over all pairs, each weighted by the probability $p(x, y)$ of that pair, gives the general expression for equivocation:

$H(x \mid y) = -\sum_x \sum_y p(x, y) \log p(x \mid y)$

8

The information transferred via the noisy channel (in the absence of the observer) is

$I(xy) = H(x) - H(x \mid y)$

where $I(xy)$ is the information transfer, $H(x \mid y)$ is the information loss due to noise (equivocation), and $H(x)$ is the information in the noiseless system (source entropy).
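As a sketch of why this agrees with the average mutual information of Step 4, the sum can be split into two parts, assuming only that $\sum_y p(x, y) = p(x)$:

```latex
\begin{aligned}
I(xy) &= \sum_x \sum_y p(x,y)\,\log\frac{p(x \mid y)}{p(x)} \\
      &= -\sum_x \sum_y p(x,y)\,\log p(x) \;+\; \sum_x \sum_y p(x,y)\,\log p(x \mid y) \\
      &= -\sum_x p(x)\,\log p(x) \;-\; \Bigl(-\sum_x \sum_y p(x,y)\,\log p(x \mid y)\Bigr) \\
      &= H(x) - H(x \mid y)
\end{aligned}
```

The first term is the source entropy and the bracketed term is the equivocation.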

9

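Example: A binary system produces Marks with probability 0.7 and Spaces with probability 0.3. 2/7 of the Marks are received in error and 1/3 of the Spaces. Find the information transfer using the expression for equivocation.

A representative sequence of ten symbols:

x M M M M M M M S S S

y M M M M M S S S S M

| x | y | $P(x)$ | $P(y)$ | $P(x \mid y)$ | $P(y \mid x)$ | $P(x, y)$ | $I(xy)$ term |
| --- | --- | --- | --- | --- | --- | --- | --- |
| M | M | 0.7 | 0.6 | 5/6 | 5/7 | 0.5 | 0.126 |
| M | S | 0.7 | 0.4 | 1/2 | 2/7 | 0.2 | -0.097 |
| S | S | 0.3 | 0.4 | 1/2 | 2/3 | 0.2 | 0.147 |
| S | M | 0.3 | 0.6 | 1/6 | 1/3 | 0.1 | -0.085 |

Summing the four terms gives an information transfer of about 0.091 bits per symbol.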
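The example can be worked through numerically as source entropy minus equivocation. This is a minimal sketch: the dictionary layout and variable names are assumptions made here, and logarithms are to base 2.

```python
from math import log2

p_x = {"M": 0.7, "S": 0.3}
# Forward transition probabilities P(y | x) from the example; keys are (x, y).
p_y_given_x = {("M", "M"): 5 / 7, ("M", "S"): 2 / 7,
               ("S", "S"): 2 / 3, ("S", "M"): 1 / 3}

# Joint probabilities P(x, y) = P(x) * P(y | x)
joint = {(x, y): p_x[x] * p for (x, y), p in p_y_given_x.items()}
# Received probabilities P(y) = sum over x of P(x, y)
p_y = {s: sum(p for (x, y), p in joint.items() if y == s) for s in "MS"}

source_entropy = -sum(p * log2(p) for p in p_x.values())
# Equivocation: average of -log2 P(x | y), with P(x | y) = P(x, y) / P(y)
equivocation = -sum(p * log2(p / p_y[y]) for (x, y), p in joint.items())
transfer = source_entropy - equivocation

print(round(source_entropy, 3), round(equivocation, 3), round(transfer, 3))
# 0.881 0.79 0.091
```

Most of the 0.881 bits of source information is lost to the 0.790 bits of equivocation, so the channel transfers only about 0.091 bits per symbol.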

10

- Summary of basic formulae by Venn Diagram

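[Venn diagram: two overlapping circles, H(x) and H(y). The overlap is I(xy); the part of H(x) outside the overlap is H(x|y); the part of H(y) outside the overlap is H(y|x).]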

11

Quantity Definition

Source information

Received information

Mutual information

Average mutual information

Source entropy

Destination entropy

Equivocation

Error entropy
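The definition column of this table did not survive. As a hedged reconstruction, the standard definitions consistent with Steps 3 and 4 and with the equivocation discussion would read as follows; the notation $x_i$, $y_j$ for individual symbols is an assumption.

```latex
\begin{array}{ll}
\text{Source information}         & I(x_i) = -\log p(x_i) \\
\text{Received information}       & I(y_j) = -\log p(y_j) \\
\text{Mutual information}         & I(x_i, y_j) = \log \dfrac{p(x_i \mid y_j)}{p(x_i)} \\
\text{Average mutual information} & I(xy) = \sum_x \sum_y p(x,y) \log \dfrac{p(x \mid y)}{p(x)} \\
\text{Source entropy}             & H(x) = -\sum_x p(x) \log p(x) \\
\text{Destination entropy}        & H(y) = -\sum_y p(y) \log p(y) \\
\text{Equivocation}               & H(x \mid y) = -\sum_x \sum_y p(x,y) \log p(x \mid y) \\
\text{Error entropy}              & H(y \mid x) = -\sum_x \sum_y p(x,y) \log p(y \mid x)
\end{array}
```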

12

- Channel capacity

$C = \max I(xy)$, that is, the maximum information transfer.

Binary Symmetric Channels

The noise in the system is random, so the probabilities of error in 0 and in 1 are the same. The channel is therefore characterised by a single value p, the binary error probability.

Channel capacity of this channel: $C = 1 + p \log_2 p + (1 - p) \log_2 (1 - p)$ bits per symbol.

13

Channel capacity of the BSC

Mutual information increases as the error rate decreases (for error rates below 1/2).
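This trend can be tabulated with a short sketch. It assumes the standard result for the BSC, $C = 1 + p \log_2 p + (1 - p) \log_2 (1 - p)$ bits per symbol, which is reached with equiprobable inputs; the function name `bsc_capacity` is introduced here for illustration.

```python
from math import log2

def bsc_capacity(p):
    """Capacity in bits per symbol of a BSC with binary error probability p.

    Uses the standard result C = 1 + p*log2(p) + (1-p)*log2(1-p).
    """
    c = 1.0
    for q in (p, 1 - p):
        if q > 0:            # q * log2(q) tends to 0 as q tends to 0
            c += q * log2(q)
    return c

for p in (0.0, 0.01, 0.125, 0.25, 0.5):
    print(p, round(bsc_capacity(p), 3))
```

The capacity falls from 1 bit per symbol at p = 0 to zero at p = 1/2, where the output is independent of the input.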

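[Figure: BSC transition diagram. The transmitted symbol x is 0 with probability p(0) and 1 with probability p(1) = 1 - p(0); the received symbol y is 0 or 1.]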