Artificial Intelligence 15-381 Web Spidering

1

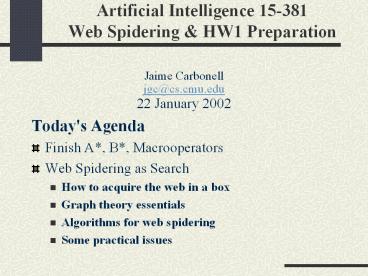

Artificial Intelligence 15-381: Web Spidering

HW1 Preparation

- Jaime Carbonell

- jgc_at_cs.cmu.edu

- 22 January 2002

- Today's Agenda

- Finish A*, B*, Macro-operators

- Web Spidering as Search

- How to acquire the web in a box

- Graph theory essentials

- Algorithms for web spidering

- Some practical issues

2

Search Engines on the Web

- Revising the Total IR Scheme

- 1. Acquire the collection, i.e. all the documents

- Off-line process

- 2. Create an inverted index (IR lecture, later)

- Off-line process

- 3. Match queries to documents (IR lecture)

- On-line process, the actual retrieval

- 4. Present the results to user

- On-line process: display, summarize, ...

3

Acquiring a Document Collection

- Document Collections and Sources

- Fixed, pre-existing document collection

- e.g., the classical philosophy works

- Pre-existing collection with periodic updates

- e.g., the MEDLINE biomedical collection

- Streaming data with temporal decay

- e.g., the Wall-Street financial news feed

- Distributed proprietary document collections

- Distributed, linked, publicly-accessible documents, e.g. the Web

4

Properties of Graphs I (1)

- Definitions

- Graph

- a set of nodes n and a set of edges (binary links) v between the nodes.

- Directed graph

- a graph where every edge has a pre-specified direction.

5

Properties of Graphs I (2)

- Connected graph

- a graph where for every pair of nodes there exists a sequence of edges starting at one node and ending at the other.

- The web graph

- the directed graph where n = all web pages and v = all HTML-defined links from one web page to another.

6

Properties of Graphs I (3)

- Tree

- a connected graph without any loops and with a unique path between any two nodes.

- Spanning tree of graph G

- a tree constructed by including all n in G, and a subset of v such that G remains connected, but all loops are eliminated.

7

Properties of Graphs I (4)

- Forest

- a set of trees (without inter-tree links)

- k-Spanning forest

- Given a graph G with k connected subgraphs, the set of k trees, each of which spans a different connected subgraph.

8

Graph G = <n, v>

9

Directed Graph Example

10

Tree

11

Web Graph

[Figure: web pages drawn as nodes, joined by <href> links]

HTML references are links; Web pages are nodes
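
To make "HTML references are links" concrete, here is a minimal sketch of pulling the outgoing edges of one node (page) out of its HTML. It uses Python's standard `html.parser` module; the class name `LinkExtractor`, the helper `extract_links`, and the sample markup are illustrative assumptions, not part of the lecture.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Return the outgoing links (edges) of one page (node), in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical page content, for illustration only.
sample = (
    '<p><a href="http://example.org/a">A</a> and '
    '<a href="/b">B</a> <a name="x">anchor without href</a></p>'
)
links = extract_links(sample)
print(links)
```

A real spider would also resolve relative links such as `/b` against the page's own URL before following them.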

12

More Properties of Graphs

- Theorem 1: For every connected graph G, there exists a spanning tree.

- Proof: Depth-first search starting at any node in G builds the spanning tree.
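
The proof of Theorem 1 can be sketched in code: the edges along which depth-first search first discovers each node form a spanning tree. This is an illustration under stated assumptions, not the lecture's own code. The graph is taken to be undirected and stored as an adjacency dictionary; `spanning_tree` and the sample graph are hypothetical names.

```python
def spanning_tree(graph, root):
    """Return the tree edges found by an iterative depth-first search from root."""
    visited = {root}
    tree_edges = []
    # Each stack entry pairs a node with an iterator over its neighbours,
    # so the search resumes where it left off after backtracking.
    stack = [(root, iter(graph[root]))]
    while stack:
        node, neighbours = stack[-1]
        for nxt in neighbours:
            if nxt not in visited:
                visited.add(nxt)
                tree_edges.append((node, nxt))
                stack.append((nxt, iter(graph[nxt])))
                break
        else:
            # No unvisited neighbour left: backtrack.
            stack.pop()
    return tree_edges


# Hypothetical undirected graph with one loop (a-b-c-a) and a pendant node d.
graph = {
    "a": ["b", "c"],
    "b": ["a", "c"],
    "c": ["a", "b", "d"],
    "d": ["c"],
}
edges = spanning_tree(graph, "a")
print(edges)  # [('a', 'b'), ('b', 'c'), ('c', 'd')]
```

The result has one edge fewer than the number of nodes, and the loop edge a-c is left out.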

13

Properties of Graphs

- Theorem 2: For every G with k disjoint connected subgraphs, there exists a k-spanning forest.

- Proof: Each connected subgraph has a spanning tree (Theorem 1), and the set of k spanning trees (being disjoint) defines a k-spanning forest.
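
As a sketch of Theorem 2, the function below restarts a stack-based traversal from every node not yet reached and collects one tree per connected subgraph. It assumes an undirected graph stored as an adjacency dictionary in which every node is listed; `spanning_forest` and the sample graph are hypothetical. Unlike the web setting, the full node set is known in advance here, which is what makes k recoverable.

```python
def spanning_forest(graph):
    """Return a list of edge lists, one spanning tree per connected subgraph."""
    visited = set()
    forest = []
    for start in graph:
        if start in visited:
            continue  # already covered by an earlier tree
        visited.add(start)
        tree = []
        stack = [start]
        while stack:
            node = stack.pop()
            for nxt in graph[node]:
                if nxt not in visited:
                    visited.add(nxt)
                    tree.append((node, nxt))
                    stack.append(nxt)
        forest.append(tree)
    return forest


# Hypothetical graph with k = 3 connected subgraphs: {a, b}, {c, d, e}, {f}.
graph = {
    "a": ["b"], "b": ["a"],
    "c": ["d", "e"], "d": ["c"], "e": ["c"],
    "f": [],
}
forest = spanning_forest(graph)
print(len(forest))  # 3
```

An isolated node such as `f` yields a tree with no edges.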

14

Properties of Web Graphs

- Additional Observations

- The web graph at any instant of time contains k connected subgraphs (but we do not know the value of k, nor do we know a priori the structure of the web graph).

- If we knew every connected web subgraph, we could build a k-web-spanning forest, but this is a very big "IF."

15

Graph-Search Algorithms I

- PROCEDURE SPIDER1(G)

  - Let ROOT = any URL from G

  - Initialize STACK <stack data structure>

  - Let STACK = push(ROOT, STACK)

  - Initialize COLLECTION <big file of URL-page pairs>

  - While STACK is not empty,

    - URLcurr = pop(STACK)

    - PAGE = look-up(URLcurr)

    - STORE(<URLcurr, PAGE>, COLLECTION)

    - For every URLi in PAGE,

      - push(URLi, STACK)

  - Return COLLECTION

- What is wrong with the above algorithm?

16

Depth-first Search

numbers = order in which nodes are visited

17

Graph-Search Algorithms II (1)

- SPIDER1 is Incorrect

- What about loops in the web graph?

- => Algorithm will not halt

- What about convergent DAG structures?

- => Pages will be replicated in collection

- => Inefficiently large index

- => Duplicates to annoy user
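
Both failure modes can be reproduced on toy graphs. The sketch below follows SPIDER1 but adds a fetch cap (`max_fetches`, an assumption introduced only so the demonstration terminates). The "web" is a hypothetical dictionary mapping each URL to the list of URLs on that page.

```python
def spider1(web, root, max_fetches):
    """SPIDER1 with no visited check, plus a cap on fetches for the demo."""
    stack = [root]
    collection = []  # list of (URL, page) pairs, duplicates included
    while stack and len(collection) < max_fetches:
        url = stack.pop()
        page = web[url]  # stands in for look-up(URL); page = list of links
        collection.append((url, page))
        for link in page:
            stack.append(link)
    return collection


# Loop: A links to B and B links back to A.
loop_web = {"A": ["B"], "B": ["A"]}
# Convergent DAG: both B and C link to D.
dag_web = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}

looped = [url for url, _ in spider1(loop_web, "A", max_fetches=6)]
dag = [url for url, _ in spider1(dag_web, "A", max_fetches=100)]
print(looped)  # ['A', 'B', 'A', 'B', 'A', 'B']
print(dag)     # ['A', 'C', 'D', 'B', 'D']
```

On the loop, only the cap stops the spider. On the DAG, the spider halts by itself but stores page D twice.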

18

Graph-Search Algorithms II (2)

- SPIDER1 is Incomplete

- Web graph has k connected subgraphs.

- SPIDER1 only reaches pages in the connected web subgraph where ROOT page lives.

19

A Correct Spidering Algorithm

- PROCEDURE SPIDER2(G)

  - Let ROOT = any URL from G

  - Initialize STACK <stack data structure>

  - Let STACK = push(ROOT, STACK)

  - Initialize COLLECTION <big file of URL-page pairs>

  - While STACK is not empty,

    - Do URLcurr = pop(STACK)

    - Until URLcurr is not in COLLECTION

    - PAGE = look-up(URLcurr)

    - STORE(<URLcurr, PAGE>, COLLECTION)

    - For every URLi in PAGE,

      - push(URLi, STACK)

  - Return COLLECTION

20

A More Efficient Correct Algorithm

- PROCEDURE SPIDER3(G)

  - Let ROOT = any URL from G

  - Initialize STACK <stack data structure>

  - Let STACK = push(ROOT, STACK)

  - Initialize COLLECTION <big file of URL-page pairs>

  - Initialize VISITED <big hash-table>

  - While STACK is not empty,

    - Do URLcurr = pop(STACK)

    - Until URLcurr is not in VISITED

    - insert-hash(URLcurr, VISITED)

    - PAGE = look-up(URLcurr)

    - STORE(<URLcurr, PAGE>, COLLECTION)

    - For every URLi in PAGE,

      - push(URLi, STACK)

  - Return COLLECTION
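
A minimal Python sketch of SPIDER3, under these assumptions: a `set` plays the role of the VISITED hash table, a dictionary stands in for the COLLECTION file, and `fetch_links` is a hypothetical callback that returns the links found on a page (here backed by a toy in-memory web rather than real HTTP). The do-until loop is written as "pop, and skip if already visited", which also covers the case where the stack runs empty while duplicates are being discarded.

```python
def spider3(root, fetch_links):
    """Depth-first spider with a visited set; fetches each URL at most once."""
    stack = [root]
    collection = {}   # URL -> links found on that page
    visited = set()   # VISITED hash table
    while stack:
        url = stack.pop()
        if url in visited:
            continue  # already fetched: handles loops and convergent links
        visited.add(url)
        links = fetch_links(url)
        collection[url] = links
        stack.extend(links)
    return collection


# Hypothetical toy web containing a loop (A -> B -> A) and a convergence on D.
toy_web = {"A": ["B", "C"], "B": ["A", "D"], "C": ["D"], "D": ["A"]}
pages = spider3("A", lambda url: toy_web.get(url, []))
print(list(pages))  # ['A', 'C', 'D', 'B']
```

Set membership takes roughly constant time on average, whereas searching a large on-disk COLLECTION for every popped URL, as in SPIDER2, does not.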

21

Graph-Search Algorithms V: A More Complete Correct Algorithm

- PROCEDURE SPIDER4(G, SEEDS)

  - Initialize COLLECTION <big file of URL-page pairs>

  - Initialize VISITED <big hash-table>

  - For every ROOT in SEEDS

    - Initialize STACK <stack data structure>

    - Let STACK = push(ROOT, STACK)

    - While STACK is not empty,

      - Do URLcurr = pop(STACK)

      - Until URLcurr is not in VISITED

      - insert-hash(URLcurr, VISITED)

      - PAGE = look-up(URLcurr)

      - STORE(<URLcurr, PAGE>, COLLECTION)

      - For every URLi in PAGE,

        - push(URLi, STACK)

  - Return COLLECTION
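
The effect of multiple seeds can be sketched on a toy web with two disconnected parts. As before, this is an illustration rather than the lecture's implementation: `fetch_links` is a hypothetical callback, the web is an in-memory dictionary, and the do-until loop is written as "pop, and skip if already visited".

```python
def spider4(seeds, fetch_links):
    """Multi-seed spider: one depth-first crawl per seed, sharing VISITED."""
    collection = {}   # URL -> links found on that page
    visited = set()   # shared across seeds, so no page is fetched twice
    for root in seeds:
        stack = [root]
        while stack:
            url = stack.pop()
            if url in visited:
                continue
            visited.add(url)
            links = fetch_links(url)
            collection[url] = links
            stack.extend(links)
    return collection


# Hypothetical web with two disconnected subgraphs: {A, B} and {X, Y}.
toy_web = {"A": ["B"], "B": ["A"], "X": ["Y"], "Y": []}


def fetch(url):
    return toy_web.get(url, [])


one_seed = spider4(["A"], fetch)
two_seeds = spider4(["A", "X"], fetch)
print(sorted(one_seed))   # ['A', 'B']
print(sorted(two_seeds))  # ['A', 'B', 'X', 'Y']
```

With the single seed A the pages X and Y are never reached. Adding a seed inside the second subgraph recovers them.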

22

Completeness Observations

- Completeness is not guaranteed

- In a web graph G with k connected subgraphs, we do not know k

- Impossible to guarantee each connected subgraph is sampled

- Better: more seeds, more diverse seeds

23

Completeness Observations

- Search Engine Practice

- Wish to maximize subset of web indexed.

- Maintain (secret) set of diverse seeds

- (grow this set opportunistically, e.g. when X complains his/her page is not indexed).

- Register new web sites on demand

- New registrations are seed candidates.

24

To Spider or not to Spider? (1)

- User Perceptions

- Most annoying: Engine finds nothing

- (too small an index, but not an issue since 1997 or so).

- Somewhat annoying: Obsolete links

- => Refresh Collection by deleting dead links

- (OK if index is slightly smaller)

- => Done every 1-2 weeks in best engines

- Mildly annoying: Failure to find new site

- => Re-spider entire web

- => Done every 2-4 weeks in best engines

25

To Spider or not to Spider? (2)

- Cost of Spidering

- Semi-parallel algorithmic decomposition

- Spider can (and does) run on hundreds of servers simultaneously

- Very high network connectivity (e.g. T3 line)

- Servers can migrate from spidering to query processing depending on time-of-day load

- Running a full web spider takes days even with hundreds of dedicated servers

26

Current Status of Web Spiders

- Historical Notes

- WebCrawler: first documented spider

- Lycos: first large-scale spider

- Top honors for most web pages spidered: first Lycos, then Alta Vista, then Google...

27

Current Status of Web Spiders

- Enhanced Spidering

- In-link counts to pages can be established during spidering.

- Hint: In SPIDER4, store <URL, COUNT> pair in VISITED hash table.

- In-link counts are the basis for Google's page-rank method
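
One possible reading of the hint is sketched below: a dictionary from URL to in-link count is updated every time a link is seen on a freshly fetched page. The function name, the `fetch_links` callback, and the toy web are assumptions for illustration. The sketch counts raw link occurrences only, so a page that links to the same target twice adds two to that target's count.

```python
def spider_with_inlinks(seeds, fetch_links):
    """Multi-seed spider that also tallies in-link counts per URL."""
    collection = {}  # URL -> links found on that page
    inlinks = {}     # URL -> number of links seen pointing at it
    for root in seeds:
        inlinks.setdefault(root, 0)  # seeds may have no in-links at all
        stack = [root]
        while stack:
            url = stack.pop()
            if url in collection:
                continue  # already fetched, so its out-links are already counted
            links = fetch_links(url)
            collection[url] = links
            for link in links:
                inlinks[link] = inlinks.get(link, 0) + 1
                if link not in collection:
                    stack.append(link)
    return collection, inlinks


# Hypothetical toy web: C is linked from both A and B.
toy_web = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
pages, counts = spider_with_inlinks(["A"], lambda url: toy_web.get(url, []))
print(counts)  # {'A': 1, 'B': 1, 'C': 2}
```

Because each page is fetched exactly once, each of its outgoing links contributes to the counts exactly once.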

28

Current Status of Web Spiders

- Unsolved Problems

- Most spidering re-traverses stable web graph

- => on-demand re-spidering when changes occur

- Completeness or near-completeness is still a major issue

- Cannot spider Java-triggered or local-DB-stored information