Class III Entropy, Information and Life - PowerPoint PPT Presentation

Description:

Lecture slides for MAE 217 (Professor Marc J. Madou) on statistical thermodynamics, Boltzmann entropy, Maxwell's demon, and information.

1

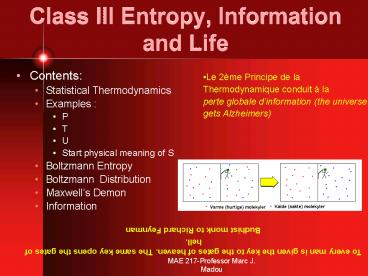

Class III Entropy, Information and Life

Contents

- Statistical Thermodynamics

- Examples: P, T, U

- Start of the physical meaning of S

- Boltzmann Entropy

- Boltzmann Distribution

- Maxwell's Demon

- Information

The second law of thermodynamics leads to a global loss of information (the universe gets Alzheimer's).

"To every man is given the key to the gates of heaven. The same key opens the gates of hell." (Buddhist monk to Richard Feynman)

2

Statistical Thermodynamics

- In classical thermodynamics, we deal with single extensive systems, whereas in statistical mechanics we recognize the role of the tiny constituents of the system. The temperature, for instance, of a system defines a macrostate, whereas the kinetic energy of each molecule in the system defines a microstate. The macrostate variable, temperature, is recognized as an expression of the average of the microstate variables, an average kinetic energy for the system. Hence, if the molecules of a gas move faster, they have more kinetic energy, and the temperature naturally goes up.

- The approach in statistical thermodynamics is thus to use statistical methods and to assume that the average values of the mechanical variables of the molecules in a thermodynamic system are the same as the measurable quantities in classical thermodynamics, at least in the limit that the number of particles is very large. Let's explore this with examples for P, T, U and S.

3

Example (1) Derivation of the pressure P of an ideal gas

- Assumptions

- The number of molecules in a gas is large, and the average separation between them is large compared to their dimensions.

- The molecules obey Newton's laws of motion, but as a whole they move randomly.

- The molecules interact only by short-range forces during elastic collisions.

- The molecules make only elastic collisions with the walls.

- All molecules are identical.

First focus our attention on one of these molecules, with mass $m$ and velocity $v_i$.

4

Example (1) Derivation of the pressure P of an ideal gas

- The molecule collides elastically with a wall, which reverses the velocity component perpendicular to that wall and so changes the momentum of the molecule.

- The magnitude of the average force exerted by the wall to produce this change in momentum can be found from the impulse equation.

- We're dealing with the average force, so the time interval is just the time between collisions, which is the time it takes for the molecule to make a round trip in the box and come back to hit the same wall. This time depends only on the distance travelled in the x-direction (2d) and the x-component of velocity.
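The equations for these two steps did not survive in the text. A standard reconstruction, offered as a sketch, assumes the wall is perpendicular to the x-axis and writes $v_{xi}$ for the x-component of the velocity of molecule $i$ and $\bar{F}_i$ for the average force on it (both symbols are assumed notation):

```latex
% Elastic collision: the x-component of the velocity reverses sign
\[ \Delta p_{xi} = (-m v_{xi}) - (m v_{xi}) = -2 m v_{xi} \]

% Impulse equation for the average force exerted by the wall on the molecule
\[ \bar{F}_i \, \Delta t = \Delta p_{xi} = -2 m v_{xi} \]
```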

5

Example (1) Derivation of the pressure P of an ideal gas

- The time interval between two collisions with the same wall is the round-trip distance 2d divided by the x-component of the velocity.

- The x-component of the average force exerted by the wall on the molecule follows by dividing the momentum change by this time interval (the subscript x is left out of the force notation).

- By Newton's third law (for every action, there is an equal and opposite reaction), the average x-component of the force exerted by the molecule on the wall is equal in magnitude and opposite in sign.
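Written out, these three statements take the following form. This is a hedged reconstruction under the usual kinetic-theory assumptions: $d$ is the box dimension along x (the 2d round trip comes from the text), while $v_{xi}$ and $\bar{F}_i$ are assumed notation for the x-velocity of molecule $i$ and the average force on it.

```latex
% Time between two collisions with the same wall (round trip of length 2d)
\[ \Delta t = \frac{2d}{v_{xi}} \]

% Average force exerted by the wall on the molecule
\[ \bar{F}_i = \frac{-2 m v_{xi}}{\Delta t} = -\frac{m v_{xi}^2}{d} \]

% Newton's third law: average force exerted by the molecule on the wall
\[ \bar{F}_{i,\text{on wall}} = +\frac{m v_{xi}^2}{d} \]
```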

6

Example (1) Derivation of the pressure P of an ideal gas

- The total force exerted by the gas on the wall is found by adding the average forces exerted by each individual molecule.

- For a very large number of molecules the average force is the same over any time interval. Thus, the constant force F on the wall due to molecular collisions is this sum.

- The mean-square x-velocity (the square of the rms value) is the average of the squared x-components over all N molecules.
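A sketch of the sums these bullets refer to, assuming $N$ identical molecules of mass $m$ in a box of dimension $d$ along x, with $v_{xi}$ the x-velocity of molecule $i$ (assumed notation):

```latex
% Total force on the wall: sum of the single-molecule average forces
\[ F = \sum_{i=1}^{N} \frac{m v_{xi}^2}{d} = \frac{m}{d} \sum_{i=1}^{N} v_{xi}^2 \]

% Mean-square x-velocity and the corresponding rms value
\[ \overline{v_x^2} = \frac{1}{N} \sum_{i=1}^{N} v_{xi}^2 ,
   \qquad v_{x,\mathrm{rms}} = \sqrt{\overline{v_x^2}} \]
```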

7

Example (1) Derivation of the pressure P of an ideal gas

- Thus, the total force on the wall can be written in terms of the mean-square x-velocity.

- Consider now how this average x-velocity compares to the average velocity. For any molecule, the speed can be found from the velocity components using the Pythagorean theorem in three dimensions, which gives the square of the speed of the molecule.

- For a randomly chosen molecule, the x, y, and z components of velocity may be similar, but they don't have to be. If we take an average over all the molecules in the box, though, then the average squared x, y, and z speeds should be equal, because there's no reason for one direction to be preferred over another since the motion of the molecules is completely random.
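In symbols, and only as a reconstruction under the isotropy argument just given ($v_{xi}$, $v_{yi}$, $v_{zi}$ are assumed notation for the velocity components of molecule $i$):

```latex
% Total force in terms of the mean-square x-velocity
\[ F = \frac{N m}{d} \, \overline{v_x^2} \]

% Pythagorean theorem in three dimensions for one molecule
\[ v_i^2 = v_{xi}^2 + v_{yi}^2 + v_{zi}^2 \]

% Random motion: no preferred direction, so the three averages are equal
\[ \overline{v^2} = \overline{v_x^2} + \overline{v_y^2} + \overline{v_z^2}
   = 3 \, \overline{v_x^2} \]
```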

8

Example (1) Derivation of the pressure P of an ideal gas

- The total force exerted on the wall is then expressed through the mean-square speed instead of its x-component.

- The total pressure on the wall is this force divided by the area of the wall.

The pressure of a gas is proportional to the number of molecules per unit volume and to the average translational kinetic energy of the molecules.
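The final two steps can be sketched as follows. The cubic box (wall area $A = d^2$, volume $V = d^3$) is an assumption made here for concreteness; the text only fixes the x-dimension $d$.

```latex
% Replace the mean-square x-velocity by one third of the mean-square speed
\[ F = \frac{N}{3} \, \frac{m \, \overline{v^2}}{d} \]

% Pressure = force / wall area, with A = d^2 and V = d^3
\[ P = \frac{F}{d^2} = \frac{1}{3} \, \frac{N}{V} \, m \, \overline{v^2}
     = \frac{2}{3} \, \frac{N}{V} \left( \frac{1}{2} m \, \overline{v^2} \right) \]
```

The last form makes the closing statement explicit: $N/V$ is the number of molecules per unit volume and $\frac{1}{2} m \overline{v^2}$ is the average translational kinetic energy.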

9

Example (1) Derivation of the pressure P of an ideal gas

- We just saw that to find the total force (F), we needed to include all the molecules, which travel at a wide range of speeds. The distribution of speeds follows a curve that looks a bit like a bell curve, with the peak of the distribution shifting to higher speeds as the temperature increases. The shape of the speed distribution is known as the Maxwell-Boltzmann distribution curve.
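The shift of the peak with temperature can be checked numerically. The sketch below uses the textbook form of the Maxwell-Boltzmann speed density, which the slide does not write out; the function names and the choice of nitrogen as the example gas are illustrative assumptions.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
N_A = 6.02214076e23       # Avogadro constant, 1/mol
M_N2 = 28.0134e-3 / N_A   # mass of one N2 molecule, kg (example gas, assumed)


def speed_pdf(v, mass, temperature):
    """Maxwell-Boltzmann probability density for the molecular speed v (m/s)."""
    a = mass / (2.0 * K_B * temperature)
    return 4.0 * math.pi * (a / math.pi) ** 1.5 * v * v * math.exp(-a * v * v)


def most_probable_speed(mass, temperature):
    """Speed at which the density peaks: sqrt(2 k_B T / m)."""
    return math.sqrt(2.0 * K_B * temperature / mass)


for T in (300.0, 600.0):
    v_p = most_probable_speed(M_N2, T)
    print(f"T = {T:5.0f} K: peak at {v_p:6.1f} m/s, "
          f"peak height {speed_pdf(v_p, M_N2, T):.3e} s/m")
```

At the higher temperature the peak sits at a higher speed while its height drops, because the area under the curve stays equal to one.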

10

Example (1) Derivation of the pressure P of an ideal gas

- Root-mean-square velocity: the square root of the average of the squares of the individual velocities of the gas particles (molar mass $M = m N_A$).

- At a given temperature, lighter molecules move faster, on average, than heavier molecules do.
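A short numerical illustration of the mass dependence, assuming the standard kinetic-theory expression $v_{rms} = \sqrt{3RT/M}$ (not written out on the slide) and two example gases chosen here for contrast:

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)

# Molar masses in kg/mol; the choice of gases is illustrative.
MOLAR_MASS = {"H2": 2.016e-3, "O2": 31.998e-3}


def v_rms(molar_mass, temperature):
    """Root-mean-square speed in m/s, assuming v_rms = sqrt(3 R T / M)."""
    return math.sqrt(3.0 * R * temperature / molar_mass)


for gas, molar_mass in MOLAR_MASS.items():
    print(f"{gas}: v_rms at 300 K = {v_rms(molar_mass, 300.0):.0f} m/s")
```

Because the speed scales as $1/\sqrt{M}$, hydrogen molecules move roughly four times faster than oxygen molecules at the same temperature.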

11

Example (2) Derivation of the temperature T of an ideal gas

- For the molecular interpretation of temperature (T) we compare the equation we just derived with the equation of state for an ideal gas, $PV = N k_B T$ (with $k_B$ Boltzmann's constant).

- It follows that the temperature is a direct measure of average molecular kinetic energy: $T = \frac{2}{3 k_B} \left( \frac{1}{2} m \overline{v^2} \right)$ (3.14)

- The average translational kinetic energy per molecule: $\frac{1}{2} m \overline{v^2} = \frac{3}{2} k_B T$ (3.15)

12

Example (2) Derivation of the temperature T of an ideal gas

- The absolute temperature of a gas is a measure of the average kinetic energy of its molecules.

- If two different gases are at the same temperature, their molecules have the same average translational kinetic energy.

- If the temperature of a gas is doubled, the average kinetic energy of its molecules is doubled.
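These three statements can be checked with a small Monte Carlo sketch. It assumes each velocity component is Gaussian with variance $k_B T / m$ (the Maxwell-Boltzmann assumption); the gases, sample size, and seeds are arbitrary illustrative choices.

```python
import math
import random

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg


def mean_kinetic_energy(mass, temperature, n_molecules=20000, seed=0):
    """Average translational kinetic energy (J) of sampled molecules."""
    rng = random.Random(seed)
    sigma = math.sqrt(K_B * temperature / mass)  # std. dev. of each component
    total = 0.0
    for _ in range(n_molecules):
        vx, vy, vz = (rng.gauss(0.0, sigma) for _ in range(3))
        total += 0.5 * mass * (vx * vx + vy * vy + vz * vz)
    return total / n_molecules


m_he = 4.0026 * AMU   # helium atom
m_ar = 39.948 * AMU   # argon atom

ke_he = mean_kinetic_energy(m_he, 300.0, seed=1)
ke_ar = mean_kinetic_energy(m_ar, 300.0, seed=2)
ke_he_hot = mean_kinetic_energy(m_he, 600.0, seed=3)

print("3/2 k_B T at 300 K:", 1.5 * K_B * 300.0)
print("He at 300 K:", ke_he)
print("Ar at 300 K:", ke_ar)
print("He at 600 K / He at 300 K:", ke_he_hot / ke_he)
```

Within sampling noise, both gases give the same mean energy at 300 K, and doubling the temperature doubles it.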

13

Example (3) Derivation of the internal energy U of an ideal gas

- Equipartition of energy (Eq. 3.16)

- Each degree of freedom contributes $\frac{1}{2} k_B T$ to the energy of a system.

- Possible degrees of freedom include translations, rotations and vibrations of molecules.

- The total translational kinetic energy of N molecules of gas: $E_{trans} = N \left( \frac{1}{2} m \overline{v^2} \right) = \frac{3}{2} N k_B T$ (3.17)

- The internal energy U of an ideal gas depends only on temperature.
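As a worked number, the sketch below evaluates the equipartition result for one mole of a monatomic gas. The function name and the `degrees_of_freedom` parameter are illustrative; the default of three translational degrees of freedom is an assumption that holds for a monatomic ideal gas.

```python
K_B = 1.380649e-23   # Boltzmann constant, J/K
N_A = 6.02214076e23  # Avogadro constant, 1/mol


def internal_energy(n_molecules, temperature, degrees_of_freedom=3):
    """Equipartition: each degree of freedom carries (1/2) k_B T per molecule."""
    return 0.5 * degrees_of_freedom * n_molecules * K_B * temperature


u_300 = internal_energy(N_A, 300.0)
u_600 = internal_energy(N_A, 600.0)
print(f"U(1 mol, 300 K) = {u_300:.1f} J")
print(f"U(1 mol, 600 K) = {u_600:.1f} J")
```

Volume and pressure do not appear as arguments: for an ideal gas, U is fixed by N and T alone.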

14

Example (4) Entropy

Example of a type of distribution of particles (electrons) over many quantum levels: the Fermi-Dirac distribution.

- Statistical mechanics can also provide a physical interpretation of the entropy, just as it does for the other extensive parameters U, V and N.

- Here we again introduce some postulates.

- Quantum mechanics tells us that a macroscopic system might have many discrete quantum states consistent with the specified values for U, V, and N. The system may be in any of these permissible states.

- A realistic view of a macroscopic system is one in which the system makes enormously rapid random transitions among its quantum states. A macroscopic measurement senses only an average of the properties of the myriads of quantum states. Let's apply this to a semiconductor.

15

Example (4) Entropy

- Because the transitions are induced by purely random processes, it is reasonable to suppose that a macroscopic system samples every permissible quantum state with equal probability, a permissible quantum state being one consistent with the external constraints. This assumption of equal probability of all permissible microstates is the most fundamental postulate of statistical mechanics.

- The number of microstates (W) among which the system undergoes transitions, and which thereby share uniform probability of occupation, increases to the maximum permitted by the imposed constraints. Does this not sound a lot like entropy?

- Entropy (S) is additive, though, and the number of microstates (W) is multiplicative (e.g., the number of microstates of two dice is 6 × 6 = 36). The answer is that entropy is proportional to the logarithm of the number of available microstates, or $S = k \ln W$.

Image: Jackson Pollock
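The dice example can be made concrete with a few lines of code. This is a minimal sketch: microstates are counted by brute-force enumeration, and entropy is expressed in units of k (that is, as ln W). The function name is illustrative.

```python
import math
from itertools import product


def count_microstates(n_dice, faces=6):
    """Count every distinguishable outcome of n_dice dice by enumeration."""
    return sum(1 for _ in product(range(1, faces + 1), repeat=n_dice))


w_one = count_microstates(1)
w_two = count_microstates(2)

s_one = math.log(w_one)  # entropy of one die, in units of k
s_two = math.log(w_two)  # entropy of two dice, in units of k

print("W for one die:", w_one, " W for two dice:", w_two)
print("S(two dice)/k:", s_two, " 2 * S(one die)/k:", 2 * s_one)
```

The microstate counts multiply while their logarithms add, which is the property entropy needs.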

16

Boltzmann's Entropy

- Employing statistical mechanics, in 1877 Boltzmann suggested this microscopic explanation for entropy. He stated that every spontaneous change in nature tends to occur in the direction of higher disorder, and entropy is the measure of that disorder. From the size of the disorder, entropy can be calculated as

- $S = k \ln W$ (3.19)

- where W is the number of microstates permissible and k is chosen to obtain agreement with the Kelvin scale of temperature (defined by $T^{-1} = \partial S / \partial U$). We will see that this agreement is reached by making this constant $R/N_A = k_B$ (Boltzmann's constant) $= 1.3807 \times 10^{-23}$ J/K. Equation 3.19 is as important as $E = mc^2$!

- Boltzmann's entropy has the same mathematical roots as the information concept: the computing of the probabilities of sorting objects into bins, a set of N into subsets of sizes $n_i$.

Ludwig Boltzmann (1844-1906). Boltzmann committed suicide by hanging.

17

Boltzmann's Entropy

- Thermodynamics: no external work or heating, so $dU = T\,dS - P\,dV = 0$, or $dS = (P/T)\,dV$; for one mole of an ideal gas, $P/T = R/V = N_A k_B / V$.

The illustration at the far left represents the allowed thermal energy states of an ideal gas. The larger the volume in which the gas is enclosed, the more closely spaced are these states, resulting in a huge increase in the number of microstates in which the available thermal energy can reside; this can be considered the origin of the thermodynamic "driving force" for the spontaneous expansion of a gas. Osmosis is entropy-driven.

18

Boltzmann's Entropy

- Statistics: if the system changes from state 1 to state 2, the molar entropy change follows from the ratio of the numbers of microstates, $\Delta S = k \ln (W_2 / W_1)$.

- So, we get the same result.

- The statistical entropy is the same as the thermodynamic entropy.

- The entropy is a measure of the disorder in the system, and is related to the Boltzmann constant.
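The agreement between the two routes can be verified numerically for an isothermal doubling of volume. The thermodynamic route integrates $dS = (R/V)\,dV$ for one mole; the statistical route assumes that the number of microstates scales as $W \propto V^{N_A}$ (each molecule's accessible positions grow in proportion to the volume), an assumption the slides do not state explicitly. Function names are illustrative.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
N_A = 6.02214076e23  # Avogadro constant, 1/mol
R = K_B * N_A        # molar gas constant, J/(mol K)


def delta_s_thermodynamic(v1, v2, steps=100000):
    """Integrate dS = (R / V) dV from v1 to v2 with the midpoint rule."""
    dv = (v2 - v1) / steps
    return sum(R / (v1 + (i + 0.5) * dv) * dv for i in range(steps))


def delta_s_statistical(v1, v2):
    """k_B ln(W2 / W1) with W proportional to V**N_A (assumed scaling)."""
    return K_B * N_A * math.log(v2 / v1)


print("thermodynamic:", delta_s_thermodynamic(1.0, 2.0), "J/K")
print("statistical:  ", delta_s_statistical(1.0, 2.0), "J/K")
```

Both routes give $R \ln 2$ per mole, about 5.76 J/K.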

19

Maxwell's Demon

- Maxwell's Demon is a simple beast: he sits at the door of your office in summertime and watches the molecules wandering back and forth. Those which move faster than some limit he only lets pass out of the room, and those moving slower than some limit he only lets pass into the room. He doesn't need to expend energy to do this; he simply closes a lightweight shutter whenever he spots a molecule coming that he wants to deflect. The molecule then just bounces off the shutter and returns the way it came. All he needs, it would appear, is patience, remarkably good eyesight and speedy reflexes, and the brains to figure out how to herd brainless molecules.

- The result of the Demon's work is cool, literally. Since the average speed at which the molecules in the air zoom around is what we perceive as the temperature of the air, the Demon will, by excluding high-speed molecules from the room and admitting only low-speed molecules, cause the average speed and hence the temperature to drop. He is an air conditioner that needs no power supply.

20

Maxwell's Demon

- This imaginary situation seemed to contradict the second law of thermodynamics. To explain the paradox, scientists point out that to realize such a possibility the demon would still need to use energy to observe the molecules (in the form of photons, for example). And the demon itself (plus the trap door mechanism) would gain entropy from the gas as it moved the trap door. Thus the total entropy of the system still increases.

- It's an excellent demonstration of entropy and how it is related to (a) the fraction of energy that's not available to do useful work, and (b) the amount of information we lack about the detailed state of the system. In Maxwell's thought experiment, the demon manages to decrease the entropy; in other words, it increases the amount of energy available by increasing its knowledge about the motion of all the molecules.

21

Maxwell's Demon

- There have been several brilliant refutations of Maxwell's Demon. Leo Szilard in 1929 suggested that the Demon had to process information in order to make his decisions, and suggested, in order to preserve the first and second laws (conservation of energy and the non-decrease of entropy), that the energy requirement for processing this information was always greater than the energy stored up by sorting the molecules.

- It was this observation that inspired Shannon to posit his formulation that all transmissions of information require a physical channel, and later to equate (along with his co-worker Warren Weaver, and in parallel to Norbert Wiener) the entropy of energy with a certain amount of information (negentropy).

Over time, the information contained in an isolated system can only be destroyed; in other words, entropy can only increase.

22

Information

- Information theory has found its main application in electrical engineering (EE), in the form of optimizing the information communicated per unit of time or energy.

- Information theory also gives insight into information storage in DNA and the conversion of instructions embedded in a genome into functional proteins (nature uses four molecules for coding).

23

Information

- According to information theory, the essential aspects of communicating are a message encoded by a set of symbols, a transmitter, a medium through which the information is transmitted, a receiver, and noise.

- Information concerns arrangements of symbols, which of themselves need not have any particular meaning. Meaning, said to be encoded by a specific combination of symbols, can only be conferred by an intelligent observer.

24

Information

- Proteins use twenty amino acids for coding their functions in biological cells (the picture is that of tryptophan).

- When cells divide, the DNA is replicated to transmit the information; repetition and redundancy are also present.

Say it again!

25

Information

- George Boole (1815-1864) used only two symbols for coding logical operations: the binary system.

- John von Neumann (1903-1957) developed the concept of programming with this binary system to code all information.

0 1

26

Information

- Measure of information: let $I(n)$ be the missing information needed to determine the location of an object when it is equally likely to be in any one of n similar boxes (states). In the figure, n = 7.

- $I(n)$ must satisfy the following:

- $I(1) = 0$. (If there is only one box then no information is needed to locate the object.)

- $I(n) > I(m)$ for n > m. (The more states/boxes there are to choose from, the more information is needed to determine the object's location.)

- $I(nm) = I(n) + I(m)$. (If each of the n boxes is divided into m equal compartments, then there will be n·m similar compartments in which the object can be located. In the figure, m = 3 and n = 4.)

- The information needed to find the object is now $I(nm)$.

27

Information

- Yet more about the properties of the information function I.

- The information needed can also be determined by first locating the box in which the object is located, $I(n)$, and then adding the information needed to locate the compartment, $I(m)$.

- $I(nm)$ must equal $I(n) + I(m)$. In other words, these two approaches must give the same information.

- One function which meets all these conditions is $I(n) = k \ln n$, since $\ln(AB) = \ln A + \ln B$. Here k is an arbitrary constant. More correctly we should write $I(n) = k \ln(1/n)$, with I negative for n > 1, meaning that it is information that has to be acquired in order to make the correct choice.

- For uniform subsets, $I = k \ln(m/n)$.

- One bit of information: 1 bit = $k \ln 2$, that is, the information needed to locate an object when there are only two equally probable states. We can take this equation for I one step further to the general case where the subsets are nonuniform in size. We then identify m/n with the proportion of each subset ($p_i$), and the mean information is given as $I = k \sum_i p_i \ln p_i$.
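The three requirements on I and the definition of the bit can be checked directly. This sketch uses the positive "missing information" form $I(n) = k \ln n$ with $k = 1/\ln 2$, so that results come out in bits; the sign-flipped form $k \ln(1/n)$ differs only by a factor of -1. The function name is illustrative.

```python
import math


def missing_information_bits(n_boxes):
    """Missing information, in bits, for n_boxes equally likely locations."""
    return math.log2(n_boxes)  # k ln(n) with k = 1 / ln(2)


n, m = 4, 3  # n boxes, each divided into m compartments

print("I(1)   =", missing_information_bits(1))
print("I(2)   =", missing_information_bits(2), "(one bit)")
print("I(7)   =", missing_information_bits(7))
print("I(n*m) =", missing_information_bits(n * m))
print("I(n) + I(m) =", missing_information_bits(n) + missing_information_bits(m))
```

Locating the object in one step among n·m compartments costs the same information as locating the box first and the compartment second.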

28

Information

- This is the equation that Claude Shannon set forth in a theorem in the 1940s, a classic in information theory. Information is a dimensionless entity. Its partner entity, which has a dimension, is entropy.

- Equation 3.19 can be generalized as $S = -k \sum_i p_i \ln p_i$.

- In the last expression k is the Boltzmann constant. Shannon's and Boltzmann's equations are formally similar. They convert to one another as $S = -(k \ln 2)\, I$. Thus, an entropy unit equals $-k \ln 2$ bit. An increase of entropy implies a decrease of information and vice versa. The sum of information change and entropy change in a given system is zero. Even the most cunning biological Maxwell demon must obey this rule.
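A minimal sketch of the conversion between bits and thermodynamic entropy follows. It computes the magnitude of the mean information in bits, $-\sum_i p_i \log_2 p_i$ (positive, the opposite sign from the convention above in which I is negative), and multiplies by $k_B \ln 2$ to obtain J/K. Function names and the example distributions are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def shannon_information_bits(probabilities):
    """Magnitude of the mean information, -sum(p log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def thermodynamic_entropy(probabilities):
    """Entropy in J/K: (k_B ln 2) times the information in bits."""
    return K_B * math.log(2) * shannon_information_bits(probabilities)


uniform = [1 / 8] * 8            # W = 8 equally likely microstates
skewed = [0.5, 0.25, 0.25]       # nonuniform subsets

print("uniform:", shannon_information_bits(uniform), "bits,",
      thermodynamic_entropy(uniform), "J/K")
print("skewed: ", shannon_information_bits(skewed), "bits,",
      thermodynamic_entropy(skewed), "J/K")
```

For equally likely microstates the result reduces to $k_B \ln W$, recovering Equation 3.19.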

29

Information

30

Information

- Claude E. Shannon (1916-2001): Claude Elwood Shannon is considered the founding father of the electronic communications age. He was an American mathematical engineer whose work on technical and engineering problems within the communications industry laid the groundwork for both the computer industry and telecommunications. After Shannon noticed the similarity between Boolean algebra and telephone switching circuits, he applied Boolean algebra to electrical systems at the Massachusetts Institute of Technology (MIT) in 1940. He joined the staff of Bell Telephone Laboratories in 1942. While working at Bell Laboratories, he formulated a theory explaining the communication of information and worked on the problem of most efficiently transmitting information. The mathematical theory of communication was the climax of Shannon's mathematical and engineering investigations. The concept of entropy was an important feature of Shannon's theory, which he demonstrated to be equivalent to a shortage in the information content (a degree of uncertainty) in a message.