Content - PowerPoint PPT Presentation


1

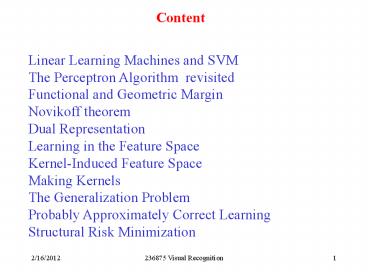

Content

- Linear Learning Machines and SVM
- The Perceptron Algorithm Revisited
- Functional and Geometric Margin
- Novikoff Theorem
- Dual Representation
- Learning in the Feature Space
- Kernel-Induced Feature Space
- Making Kernels
- The Generalization Problem
- Probably Approximately Correct Learning
- Structural Risk Minimization

2

Linear Learning Machines and SVM

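- Basic notation
  - Input space: $X \subseteq \mathbb{R}^n$
  - Output space: $Y = \{-1, +1\}$ for classification, $Y \subseteq \mathbb{R}$ for regression
  - Hypothesis: $h \in H$, $h: X \to Y$
  - Training set: $S = \{(x_1, y_1), \ldots, (x_l, y_l)\}$
  - Test error, also written $R(\alpha)$
  - Dot product: $\langle x, z \rangle$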

3

The Perceptron Algorithm Revisited

- Linear separation of the input space: $f(x) = \langle w, x \rangle + b$, $h(x) = \operatorname{sign}(f(x))$
- The algorithm requires that the input patterns are linearly separable, which means that there exists a linear discriminant function with zero training error. We assume that this is the case.

4

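The Perceptron Algorithm (Primal Form)

- initialize $w_0 \leftarrow 0$, $b_0 \leftarrow 0$, $k \leftarrow 0$, $R \leftarrow \max_{1 \le i \le l} \|x_i\|$
- repeat
  - error ← false
  - for i = 1..l
    - if $y_i \left(\langle w_k, x_i \rangle + b_k\right) \le 0$ then
      - $w_{k+1} \leftarrow w_k + \eta y_i x_i$
      - $b_{k+1} \leftarrow b_k + \eta y_i R^2$
      - $k \leftarrow k + 1$
      - error ← true
    - end if
  - end for
- until (error = false)
- return $k, (w_k, b_k)$, where $k$ is the number of mistakes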
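
The primal loop above can be sketched in Python/NumPy as follows. The bias update $b \leftarrow b + \eta y_i R^2$ is an assumption, taken from the common textbook variant, because the slide's update lines were lost. The `max_epochs` cap and the function name `perceptron_primal` are hypothetical additions for safety and readability.

```python
import numpy as np


def perceptron_primal(X, y, eta=1.0, max_epochs=1000):
    """Primal perceptron (sketch; assumes the R^2-scaled bias update).

    X: (l, n) array of patterns; y: (l,) array of labels in {-1, +1}.
    Returns (k, w, b), where k is the number of mistakes (updates).
    """
    l, n = X.shape
    w = np.zeros(n)
    b = 0.0
    k = 0
    R = np.max(np.linalg.norm(X, axis=1))  # radius of the data
    for _ in range(max_epochs):
        error = False
        for i in range(l):
            # Non-positive functional margin: x_i is misclassified (or on the boundary).
            if y[i] * (w @ X[i] + b) <= 0:
                w = w + eta * y[i] * X[i]
                b = b + eta * y[i] * R ** 2
                k += 1
                error = True
        if not error:  # a full pass without mistakes: S is separated
            return k, w, b
    raise RuntimeError("no separating hyperplane found within max_epochs")


X = np.array([[2.0, 2.0], [1.0, 3.0], [-1.0, -1.0], [-2.0, -1.0]])
y = np.array([1, 1, -1, -1])
k, w, b = perceptron_primal(X, y)
```

On this toy data set the loop stops after two updates, with $w = (3, 3)$ and $b = 0$.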

5

The Perceptron Algorithm: Comments

- The perceptron works by adding misclassified positive examples to, or subtracting misclassified negative examples from, an arbitrary initial weight vector, which (without loss of generality) we assumed to be the zero vector. So the final weight vector is a linear combination of training points, $w = \sum_{i=1}^{l} \alpha_i y_i x_i$,
- where, since the sign of the coefficient of $x_i$ is given by the label $y_i$, the $\alpha_i$ are non-negative values, proportional to the number of times misclassification of $x_i$ has caused the weight to be updated. $\alpha_i$ is called the embedding strength of the pattern $x_i$.

6

Functional and Geometric Margin

- The notion of margin of a data point w.r.t. a linear discriminant will turn out to be an important concept.
- The functional margin of a linear discriminant $(w, b)$ w.r.t. a labeled pattern $(x_i, y_i) \in \mathbb{R}^n \times \{-1, +1\}$ is defined as $\gamma_i = y_i \left(\langle w, x_i \rangle + b\right)$
- If the functional margin is negative, then the pattern is incorrectly classified; if it is positive, then the classifier predicts the correct label.
- The larger $|\gamma_i|$, the further away $x_i$ is from the discriminant.
- This is made more precise in the notion of the geometric margin.

7

Functional and Geometric Margin cont.

Figure: the geometric margin of two points; the margin of a training set.

8

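Functional and Geometric Margin cont.

- The geometric margin of $(w, b)$ w.r.t. $(x_i, y_i)$ is $\tilde{\gamma}_i = \frac{y_i \left(\langle w, x_i \rangle + b\right)}{\|w\|}$, which measures the (signed) Euclidean distance of a point from the decision boundary.
- Finally, $\gamma = \min_{1 \le i \le l} \gamma_i$ is called the (functional) margin of $(w, b)$ w.r.t. the data set $S = \{(x_i, y_i)\}$.
- The margin of a training set $S$ is the maximum geometric margin over all hyperplanes. A hyperplane realizing this maximum is a maximal margin hyperplane.

Figure: Maximal Margin Hyperplane.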
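
To make the two notions concrete, here is a small NumPy sketch that computes both margins for a fixed $(w, b)$. It also shows that rescaling $(w, b)$ changes the functional margins but not the geometric ones. The helper names and the toy data are illustrative assumptions.

```python
import numpy as np


def functional_margins(w, b, X, y):
    """gamma_i = y_i (<w, x_i> + b) for every pattern."""
    return y * (X @ w + b)


def geometric_margins(w, b, X, y):
    """Functional margins divided by ||w||: signed distances to the hyperplane."""
    return functional_margins(w, b, X, y) / np.linalg.norm(w)


X = np.array([[2.0, 2.0], [1.0, 3.0], [-1.0, -1.0], [-2.0, -1.0]])
y = np.array([1, 1, -1, -1])
w, b = np.array([3.0, 3.0]), 0.0

gamma = functional_margins(w, b, X, y)           # all positive: S is separated
margin_on_S = geometric_margins(w, b, X, y).min()  # smallest signed distance
```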

9

Novikoff theorem

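- Theorem
  - Suppose that there exists a vector $w^*$ with $\|w^*\| = 1$ and a bias term $b^*$ such that the margin on a (non-trivial) data set $S$ is at least $\gamma > 0$, i.e., $y_i \left(\langle w^*, x_i \rangle + b^*\right) \ge \gamma$ for $1 \le i \le l$;
  - then the number of update steps in the perceptron algorithm is at most $\left(\frac{2R}{\gamma}\right)^2$, where $R = \max_{1 \le i \le l} \|x_i\|$.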
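
As a worked instance, the bound can be evaluated for any known separating hyperplane. The sketch rescales $(w^*, b^*)$ so that $\|w^*\| = 1$, as the theorem requires, and then returns $(2R/\gamma)^2$. The function name `novikoff_bound` and the toy data are assumptions for illustration.

```python
import numpy as np


def novikoff_bound(X, y, w_star, b_star):
    """Upper bound (2R / gamma)^2 on the number of perceptron updates."""
    norm = np.linalg.norm(w_star)
    w_unit, b_unit = w_star / norm, b_star / norm  # same hyperplane, ||w*|| = 1
    gamma = np.min(y * (X @ w_unit + b_unit))      # margin of (w*, b*) on S
    if gamma <= 0:
        raise ValueError("(w*, b*) does not separate S with a positive margin")
    R = np.max(np.linalg.norm(X, axis=1))
    return (2.0 * R / gamma) ** 2


X = np.array([[2.0, 2.0], [1.0, 3.0], [-1.0, -1.0], [-2.0, -1.0]])
y = np.array([1, 1, -1, -1])
bound = novikoff_bound(X, y, np.array([1.0, 1.0]), 0.0)
```

Here $R = \sqrt{10}$ and $\gamma = \sqrt{2}$, so the bound is $(2\sqrt{10}/\sqrt{2})^2 = 20$ updates.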

10

Novikoff theorem cont.

- Comments
  - Novikoff's theorem says that no matter how small the margin, if a data set is linearly separable, then the perceptron will find a solution that separates the two classes in a finite number of steps.
  - More precisely, the number of update steps (and the runtime) depends on the margin and is inversely proportional to the squared margin.
  - The bound is invariant under rescaling of the patterns.
  - The learning rate does not matter.

11

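Dual Representation

- The decision function can be rewritten as follows: $h(x) = \operatorname{sign}\left(\langle w, x \rangle + b\right) = \operatorname{sign}\left(\sum_{j=1}^{l} \alpha_j y_j \langle x_j, x \rangle + b\right)$
- The update rule can also be rewritten as follows: if $y_i \left(\sum_{j=1}^{l} \alpha_j y_j \langle x_j, x_i \rangle + b\right) \le 0$, then $\alpha_i \leftarrow \alpha_i + \eta$
- The learning rate $\eta$ only influences the overall scaling of the hyperplanes; it does not affect an algorithm with zero starting vector, so we can put $\eta = 1$.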
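
The dual update can be sketched in NumPy with $\eta = 1$. The data are touched only through the Gram matrix of dot products. Two details are assumptions: the bias update $b \leftarrow b + y_i R^2$ is carried over from the primal variant, and the name `perceptron_dual` is hypothetical. The primal weights can be recovered afterwards as $w = \sum_i \alpha_i y_i x_i$.

```python
import numpy as np


def perceptron_dual(X, y, max_epochs=1000):
    """Dual perceptron (sketch, eta = 1); returns embedding strengths and bias."""
    l = X.shape[0]
    G = X @ X.T                # Gram matrix: G[i, j] = <x_i, x_j>
    alpha = np.zeros(l)        # embedding strengths
    b = 0.0
    R2 = np.max(np.diag(G))    # R^2 = max_i ||x_i||^2
    for _ in range(max_epochs):
        error = False
        for i in range(l):
            # sum_j alpha_j y_j <x_j, x_i> + b, computed from dot products only
            if y[i] * ((alpha * y) @ G[:, i] + b) <= 0:
                alpha[i] += 1.0
                b += y[i] * R2
                error = True
        if not error:
            return alpha, b
    raise RuntimeError("no separating hyperplane found within max_epochs")


X = np.array([[2.0, 2.0], [1.0, 3.0], [-1.0, -1.0], [-2.0, -1.0]])
y = np.array([1, 1, -1, -1])
alpha, b = perceptron_dual(X, y)
w = (alpha * y) @ X            # w = sum_i alpha_i y_i x_i
```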

12

Duality: First Property of SVMs

- DUALITY is the first feature of Support Vector Machines.
- SVMs are Linear Learning Machines represented in a dual fashion: $f(x) = \sum_{i=1}^{l} \alpha_i y_i \langle x_i, x \rangle + b$
- Data appear only inside dot products (in the decision function and in the training algorithm).
- The matrix $G = \left(\langle x_i, x_j \rangle\right)_{i,j=1}^{l}$ is called the Gram matrix.

13

Limitations of Linear Classifiers

- Linear Learning Machines (LLMs) cannot deal with
  - non-linearly separable data
  - noisy data
- This formulation only deals with vectorial data

14

Limitations of Linear Classifiers

- Neural networks solution: multiple layers of thresholded linear functions (multi-layer neural networks); learning algorithm: back-propagation.
- SVM solution: kernel representation.
- Approximation-theoretic issues are independent of the learning-theoretic ones. Learning algorithms are decoupled from the specifics of the application area, which is encoded into the design of the kernel.

15

Learning in the Feature Space

- Map data into a feature space where they are linearly separable, $x \mapsto \phi(x)$ (i.e., attributes → features)

16

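Learning in the Feature Space cont.

- Example
  - Consider the target function $f(m_1, m_2, r) = C \frac{m_1 m_2}{r^2}$, giving the gravitational force between two bodies.
  - Observable quantities are masses $m_1$, $m_2$ and distance $r$. A linear machine could not represent it, but a change of coordinates $(m_1, m_2, r) \mapsto (x, y, z) = (\ln m_1, \ln m_2, \ln r)$ gives the representation $g(x, y, z) = \ln f(m_1, m_2, r) = \ln C + x + y - 2z$, which is linear.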
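
A quick numerical check of this change of coordinates: after mapping $(m_1, m_2, r)$ to logarithms, an ordinary least-squares fit recovers the coefficients $1, 1, -2$ and the intercept $\ln C$ exactly, because the target is linear in the new features. The random masses and distances are synthetic values chosen only for the demonstration.

```python
import numpy as np

rng = np.random.default_rng(0)
C = 6.674e-11                       # gravitational constant, SI units
m1 = rng.uniform(1.0, 100.0, 50)    # synthetic masses
m2 = rng.uniform(1.0, 100.0, 50)
r = rng.uniform(1.0, 10.0, 50)      # synthetic distances
force = C * m1 * m2 / r ** 2

# Feature map (m1, m2, r) -> (ln m1, ln m2, ln r), plus a constant column for ln C.
Phi = np.column_stack([np.log(m1), np.log(m2), np.log(r), np.ones_like(r)])
coef, *_ = np.linalg.lstsq(Phi, np.log(force), rcond=None)
```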

17

Learning in the Feature Space cont.

- The task of choosing the most suitable representation is known as feature selection.
- The space $X$ is referred to as the input space, while $F = \{\phi(x) : x \in X\}$ is called the feature space.
- Frequently one seeks to find the smallest possible set of features that still conveys essential information (dimensionality reduction).

18

Problems with Feature Space

- Working in high-dimensional feature spaces solves the problem of expressing complex functions, BUT
  - there is a computational problem (working with very large vectors)
  - and a generalization theory problem (curse of dimensionality)

19

Implicit Mapping to Feature Space

- We will introduce kernels, which
  - solve the computational problem of working with many dimensions
  - can make it possible to use infinite dimensions, efficiently in time/space
  - have other advantages, both practical and conceptual

20

Kernel-Induced Feature Space

- In order to learn non-linear relations, we select non-linear features. Hence, the set of hypotheses we consider will be functions of type $f(x) = \sum_{i=1}^{N} w_i \phi_i(x) + b$, where $\phi: X \to F$ is a non-linear map from input space to feature space.
- In the dual representation, the data points only appear inside dot products: $f(x) = \sum_{i=1}^{l} \alpha_i y_i \langle \phi(x_i), \phi(x) \rangle + b$

21

Kernels

- A kernel is a function that returns the value of the dot product between the images of the two arguments: $K(x_1, x_2) = \langle \phi(x_1), \phi(x_2) \rangle$
- When using kernels, the dimensionality of space $F$ is not necessarily important. We may not even know the map $\phi$.
- Given a function $K$, it is possible to verify that it is a kernel.

22

Kernels cont.

- One can use LLMs in a feature space by simply rewriting them in dual representation and replacing dot products with kernels: $\langle x_1, x_2 \rangle \leftarrow K(x_1, x_2) = \langle \phi(x_1), \phi(x_2) \rangle$
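
To illustrate the substitution, here is a dual perceptron whose dot products are replaced by a kernel. It learns XOR, which no linear machine in the input space can represent. Two simplifications are assumptions of this sketch: it uses the polynomial kernel $(\langle x, z \rangle + 1)^2$, and it drops the bias $b$ on the grounds that this kernel's feature space already contains a constant coordinate. The names `kernel_perceptron` and `predict` are hypothetical.

```python
import numpy as np


def poly_kernel(x, z, d=2):
    return (np.dot(x, z) + 1.0) ** d


def kernel_perceptron(X, y, kernel, max_epochs=1000):
    """Dual perceptron with <x_i, x_j> replaced by K(x_i, x_j); no bias term."""
    l = len(X)
    K = np.array([[kernel(X[i], X[j]) for j in range(l)] for i in range(l)])
    alpha = np.zeros(l)
    for _ in range(max_epochs):
        error = False
        for i in range(l):
            if y[i] * ((alpha * y) @ K[:, i]) <= 0:
                alpha[i] += 1.0
                error = True
        if not error:
            return alpha
    raise RuntimeError("not separated in feature space within max_epochs")


def predict(alpha, X, y, kernel, x):
    return np.sign(sum(a * yi * kernel(xi, x) for a, yi, xi in zip(alpha, y, X)))


# XOR: not linearly separable in the input space.
X = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
y = np.array([1, -1, -1, 1])
alpha = kernel_perceptron(X, y, poly_kernel)
```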

23

The Kernel Matrix (Gram Matrix)

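$$K = \begin{pmatrix} K(x_1, x_1) & K(x_1, x_2) & \cdots & K(x_1, x_l) \\ K(x_2, x_1) & K(x_2, x_2) & \cdots & K(x_2, x_l) \\ \vdots & \vdots & \ddots & \vdots \\ K(x_l, x_1) & K(x_l, x_2) & \cdots & K(x_l, x_l) \end{pmatrix}$$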

24

The Kernel Matrix

- The central structure in kernel machines

- Information bottleneck: contains all necessary information for the learning algorithm
- Fuses information about the data AND the kernel

- Many interesting properties

25

Mercer's Theorem

- The kernel matrix is symmetric positive (semi-)definite.
- Any symmetric positive (semi-)definite matrix can be regarded as a kernel matrix, that is, as an inner product matrix in some space.
- More formally, Mercer's Theorem: every (semi-)positive definite, symmetric function is a kernel, i.e., there exists a mapping $\phi$ such that it is possible to write $K(x_1, x_2) = \langle \phi(x_1), \phi(x_2) \rangle$
- Definition of positive definiteness: $\int K(x, z) f(x) f(z) \, dx \, dz \ge 0$ for all $f \in L_2(X)$
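
On a finite sample, positive semi-definiteness can be checked numerically: the kernel matrix must be symmetric with no negative eigenvalues (up to round-off). The sketch contrasts a Gaussian RBF matrix with the negative squared distance $-\|x - z\|^2$, which is not a kernel. The tolerance `tol` and the random sample are assumptions chosen for illustration.

```python
import numpy as np


def is_psd(K, tol=1e-10):
    """True if K is symmetric and all its eigenvalues are >= -tol."""
    return np.allclose(K, K.T) and np.linalg.eigvalsh(K).min() >= -tol


rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)

K_rbf = np.exp(-sq_dists / 2.0)  # Gaussian RBF with sigma = 1: a valid kernel
K_neg = -sq_dists                # negative squared distance: NOT a kernel
```

`K_neg` has a zero diagonal (trace 0) but non-zero entries, so at least one of its eigenvalues must be negative.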

26

Mercer's Theorem cont.

- Eigenvalue expansion of Mercer kernels: $K(x_1, x_2) = \sum_{i} \lambda_i \phi_i(x_1) \phi_i(x_2)$
- That is, the eigenfunctions (scaled by $\sqrt{\lambda_i}$) act as features!
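
A finite-sample analogue of this expansion can be built from an eigendecomposition. Scaling the eigenvectors of the kernel matrix by $\sqrt{\lambda_i}$ gives explicit feature vectors whose dot products reproduce the matrix. This is an empirical illustration on a sample, not Mercer's continuous expansion itself. The degree-2 polynomial kernel and the random sample are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(10, 2))
K = (X @ X.T + 1.0) ** 2            # polynomial kernel matrix, d = 2

lam, V = np.linalg.eigh(K)          # K = V diag(lam) V^T
lam = np.clip(lam, 0.0, None)       # drop tiny negative round-off
Phi = V * np.sqrt(lam)              # row i: explicit features of x_i
```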

27

Examples of Kernels

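- Simple examples of kernels are
  - $K(x, z) = \left(\langle x, z \rangle + 1\right)^d$, which is a polynomial of degree $d$
  - $K(x, z) = \exp\left(-\|x - z\|^2 / 2\sigma^2\right)$, which is the Gaussian RBF
  - $K(x, z) = \tanh\left(\kappa \langle x, z \rangle - \delta\right)$, a two-layer sigmoidal neural network (a valid Mercer kernel only for some values of $\kappa$ and $\delta$)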
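
The three kernels above can be written as plain functions. The parameter names and defaults (`d`, `sigma`, `kappa`, `delta`) are illustrative assumptions, not values taken from the slides.

```python
import numpy as np


def polynomial_kernel(x, z, d=3):
    """(<x, z> + 1)^d"""
    return (np.dot(x, z) + 1.0) ** d


def gaussian_rbf_kernel(x, z, sigma=1.0):
    """exp(-||x - z||^2 / (2 sigma^2))"""
    diff = np.asarray(x) - np.asarray(z)
    return np.exp(-np.dot(diff, diff) / (2.0 * sigma ** 2))


def sigmoid_kernel(x, z, kappa=1.0, delta=0.0):
    """tanh(kappa <x, z> - delta); PSD only for some (kappa, delta)."""
    return np.tanh(kappa * np.dot(x, z) - delta)
```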

28

Example Polynomial Kernels
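
A worked instance for the polynomial kernel: in $\mathbb{R}^2$, the homogeneous degree-2 kernel $\langle x, z \rangle^2$ equals an ordinary dot product under the explicit map $\phi(x) = (x_1^2, x_2^2, \sqrt{2} x_1 x_2)$. The kernel never has to build $\phi(x)$. This sketch uses the homogeneous variant (without the $+1$) only for brevity.

```python
import numpy as np


def phi(x):
    """Explicit feature map for K(x, z) = <x, z>^2 in two dimensions."""
    x1, x2 = x
    return np.array([x1 ** 2, x2 ** 2, np.sqrt(2.0) * x1 * x2])


x = np.array([1.0, 2.0])
z = np.array([3.0, 4.0])
explicit = np.dot(phi(x), phi(z))  # dot product in the 3-D feature space
implicit = np.dot(x, z) ** 2       # kernel evaluated in the input space: 11^2
```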

29

Example

30

Making Kernels

- The set of kernels is closed under some operations. If $K_1$, $K_2$ are kernels, then
  - $K_1 + K_2$ is a kernel
  - $cK_1$ is a kernel, if $c > 0$
  - $aK_1 + bK_2$ is a kernel, for $a, b > 0$
  - etc.
- One can make complex kernels from simple ones: modularity!
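
These closure properties can be spot-checked on a sample. Each combined kernel matrix should have no eigenvalue below zero beyond round-off. The element-wise product $K_1 K_2$ is included as one of the "etc." cases; it is also a kernel, by the Schur product theorem. The choice of base kernels, constants, and tolerance are assumptions for the demonstration.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(15, 3))
sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)

K1 = X @ X.T                  # linear kernel
K2 = np.exp(-sq_dists / 2.0)  # Gaussian RBF kernel, sigma = 1

combinations = {
    "K1 + K2": K1 + K2,
    "3 * K1": 3.0 * K1,
    "0.5 * K1 + 2 * K2": 0.5 * K1 + 2.0 * K2,
    "K1 * K2 (element-wise)": K1 * K2,
}

for name, K in combinations.items():
    # The smallest eigenvalue should be >= 0 up to floating-point round-off.
    print(f"{name}: min eigenvalue = {np.linalg.eigvalsh(K).min():.2e}")
```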

31

Second Property of SVMs

- SVMs are Linear Learning Machines that
  - use a dual representation
  - operate in a kernel-induced feature space (that is, $f(x) = \sum_{i=1}^{l} \alpha_i y_i K(x_i, x) + b$ is a linear function in the feature space implicitly defined by $K$)

32

A bad kernel

- ... would be a kernel whose kernel matrix is mostly diagonal: all points orthogonal to each other, no clusters, no structure.
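
One easy way to produce such a bad kernel is a Gaussian RBF whose width is far smaller than the distances between points. Every off-diagonal entry then collapses to zero, so the kernel matrix is essentially the identity. The points and the two `sigma` values below are illustrative assumptions.

```python
import numpy as np

X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]])
sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)


def rbf_matrix(sigma):
    return np.exp(-sq_dists / (2.0 * sigma ** 2))


K_reasonable = rbf_matrix(1.0)  # neighbours keep sizeable similarities
K_bad = rbf_matrix(0.1)         # width << distances: matrix ~ identity
off_diag = ~np.eye(len(X), dtype=bool)
```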

33

No Free Kernel

- When mapping into a space with too many irrelevant features, the kernel matrix becomes diagonal.
- Some prior knowledge of the target is needed to choose a good kernel.

34

The Generalization Problem

- The curse of dimensionality: it is easy to overfit in high-dimensional spaces (regularities could be found in the training set that are accidental, that is, that would not be found again in a test set).
- The SVM problem is ill-posed (when looking for one hyperplane that separates the data, many such hyperplanes exist).
- We need a principled way to choose the best possible hyperplane.

35

The Generalization Problem cont.

- Capacity of the machine: its ability to learn any training set without error.
- "A machine with too much capacity is like a botanist with a photographic memory who, when presented with a new tree, concludes that it is not a tree because it has a different number of leaves from anything she has seen before; a machine with too little capacity is like the botanist's lazy brother, who declares that if it's green, it's a tree." (C. Burges)

36

Probably Approximately Correct Learning

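- Assumptions and definitions
  - Suppose we are given $l$ observations $(x_i, y_i)$, with $x_i \in \mathbb{R}^n$ and $y_i \in \{-1, +1\}$.
  - Train and test points are drawn randomly (i.i.d.) from some unknown probability distribution $D(x, y)$.
  - The machine learns the mapping $x_i \mapsto y_i$ and outputs a hypothesis $h(x, \alpha)$. A particular choice of $\alpha$ generates a trained machine.
  - The expectation of the test error, or expected risk, is $R(\alpha) = \int \frac{1}{2} \left| y - h(x, \alpha) \right| \, dD(x, y)$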

37

A Bound on the Generalization Performance

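- The empirical risk is $R_{\mathrm{emp}}(\alpha) = \frac{1}{2l} \sum_{i=1}^{l} \left| y_i - h(x_i, \alpha) \right|$
- Choose some $\eta$ such that $0 \le \eta \le 1$. With probability $1 - \eta$ the following bound holds (Vapnik, 1995):

$$R(\alpha) \le R_{\mathrm{emp}}(\alpha) + \sqrt{\frac{d\left(\log(2l/d) + 1\right) - \log(\eta/4)}{l}} \qquad (3)$$

- where $d$ is called the VC dimension and is a measure of the capacity of the machine.
- The r.h.s. of (3) is called the risk bound of $h(x, \alpha)$ in distribution $D$.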
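
The two quantities in bound (3) are easy to compute once $d$ is known. The sketch assumes natural logarithms and labels in $\{-1, +1\}$. The helper names are hypothetical.

```python
import math


def empirical_risk(y, y_pred):
    """R_emp = (1 / 2l) * sum |y_i - h(x_i)|: the fraction of training errors."""
    l = len(y)
    return sum(abs(a - b) for a, b in zip(y, y_pred)) / (2 * l)


def vc_confidence(d, l, eta=0.05):
    """Second term of bound (3) (natural logarithms assumed)."""
    return math.sqrt((d * (math.log(2 * l / d) + 1) - math.log(eta / 4)) / l)


def risk_bound(emp_risk, d, l, eta=0.05):
    """Right-hand side of (3): holds with probability 1 - eta."""
    return emp_risk + vc_confidence(d, l, eta)
```

For example, a machine with $d = 10$ and 10% training error on $l = 10000$ points has a risk bound of about $0.1 + 0.095 \approx 0.195$ at $\eta = 0.05$.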

38

A Bound on the Generalization Performance

- The second term on the right-hand side is called the VC confidence.
- Three key points about the actual risk bound:
  - It is independent of $D(x, y)$.
  - It is usually not possible to compute the left-hand side.
  - If we know $d$, we can compute the right-hand side.
- This gives a possibility to compare learning machines!

39

The VC Dimension

- Definition: the VC dimension of a set of functions $H = \{h(x, \alpha)\}$ is $d$ if and only if there exists a set of points $\{x_i\}_{i=1}^{d}$ such that these points can be labeled in all $2^d$ possible configurations, and for each labeling a member of set $H$ can be found which correctly assigns those labels, but no set $\{x_i\}_{i=1}^{q}$ with $q > d$ exists that satisfies this property.

40

The VC Dimension

- Put another way: the VC dimension is the size of the largest subset of $X$ shattered by $H$ (every dichotomy implemented). The VC dimension measures the capacity of a set $H$ of functions.
- If for any number $N$ it is possible to find $N$ points $x_1, \ldots, x_N$ that can be separated in all the $2^N$ possible ways, we say that the VC dimension of the set is infinite.

41

The VC Dimension Example

- Suppose that the data live in $\mathbb{R}^2$, and the set $\{h(x, \alpha)\}$ consists of oriented straight lines (linear discriminants). While it is possible to find three points that can be shattered by this set of functions, it is not possible to find four. Thus the VC dimension of the set of linear discriminants in $\mathbb{R}^2$ is three.

42

The VC Dimension cont.

- Theorem 1: Consider some set of $m$ points in $\mathbb{R}^n$. Choose any one of the points as origin. Then the $m$ points can be shattered by oriented hyperplanes if and only if the position vectors of the remaining points are linearly independent.
- Corollary: The VC dimension of the set of oriented hyperplanes in $\mathbb{R}^n$ is $n + 1$. We can always choose $n + 1$ points, and then choose one of them as origin, such that the position vectors of the remaining points are linearly independent. But we can never choose $n + 2$ such points, since no $n + 1$ vectors in $\mathbb{R}^n$ can be linearly independent.
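
Theorem 1 turns shattering by oriented hyperplanes into a rank test. Take the first point as origin and check whether the remaining position vectors have full rank $m - 1$. The function name `shatterable_by_hyperplanes` is a hypothetical helper for this sketch.

```python
import numpy as np


def shatterable_by_hyperplanes(points):
    """Theorem 1: m points are shattered by oriented hyperplanes iff, with one
    point as origin, the other m - 1 position vectors are linearly independent."""
    P = np.asarray(points, dtype=float)
    diffs = P[1:] - P[0]  # position vectors relative to the first point
    return np.linalg.matrix_rank(diffs) == len(P) - 1
```

Three non-collinear points in $\mathbb{R}^2$ pass the test. Three collinear points fail it, and so do any four points in $\mathbb{R}^2$, matching the corollary $d = n + 1 = 3$.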

43

The VC Dimension cont.

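- The VC dimension can be infinite even when the number of parameters of the set $\{h(x, \alpha)\}$ of hypothesis functions is low.
- Example: $h(x, \alpha) = \operatorname{sign}(\sin(\alpha x))$, $x, \alpha \in \mathbb{R}$
  - For any integer $l$ and any labels $y_1, \ldots, y_l$, $y_i \in \{-1, +1\}$,
  - we can find $l$ points $x_1, \ldots, x_l$ and a parameter $\alpha$ such that $h(x_i, \alpha) = y_i$ for all $i$, so those points are shattered by $h(x, \alpha)$.
  - Those points are $x_i = 10^{-i}$, $i = 1, \ldots, l$,
  - and the parameter $\alpha$ is $\alpha = \pi \left(1 + \sum_{i=1}^{l} \frac{(1 - y_i) 10^i}{2}\right)$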
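
The construction can be verified exhaustively for a small $l$. For every one of the $2^l$ labelings, the $\alpha$ given above makes $\operatorname{sign}(\sin(\alpha x_i))$ reproduce the labels. The choice $l = 5$ is an assumption that keeps the check fast and well within floating-point precision.

```python
import itertools

import numpy as np


def shattering_alpha(labels):
    """alpha = pi * (1 + sum_i (1 - y_i) * 10^i / 2), for labels y_i in {-1, +1}."""
    labels = np.asarray(labels)
    i = np.arange(1, len(labels) + 1)
    return np.pi * (1 + np.sum((1 - labels) * 10.0 ** i / 2))


def h(x, alpha):
    return np.sign(np.sin(alpha * x))


l = 5
x = 10.0 ** -np.arange(1, l + 1)  # x_i = 10^{-i}
all_ok = all(
    np.array_equal(h(x, shattering_alpha(lab)), lab)
    for lab in itertools.product([-1, 1], repeat=l)
)
```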

44

Minimizing the Bound by Minimizing d

45

Minimizing the Bound by Minimizing d

- VC confidence (second term in (3)) as a function of $d/l$, given a 95% confidence level ($\eta = 0.05$) and assuming a training sample of size $l = 10000$.
- One should choose the learning machine whose set of functions has minimal $d$.
- For $d/l > 0.37$ (with $\eta = 0.05$ and $l = 10000$), the VC confidence exceeds 1. Since the risk itself can never exceed 1, for higher $d/l$ the bound is not tight.
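
The 0.37 threshold can be reproduced by scanning $d$ from 1 to $l$ and locating the first $d/l$ at which the VC confidence of (3) exceeds 1. The sketch assumes natural logarithms.

```python
import numpy as np

l, eta = 10_000, 0.05
d = np.arange(1, l + 1)
vc_conf = np.sqrt((d * (np.log(2 * l / d) + 1) - np.log(eta / 4)) / l)

# VC confidence increases with d here, so the first crossing is the threshold.
first_above_one = d[vc_conf > 1][0] / l
```

The crossing lands between $d/l = 0.37$ and $d/l = 0.38$.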

46

Example

- Question: What are the VC dimension and the empirical risk of the nearest neighbor classifier?
- Any number of points, labeled arbitrarily, will be successfully learned; thus $d = \infty$ and the empirical risk is 0.
- So the bound provides no information in this example.
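
This claim is easy to observe: a 1-nearest-neighbor classifier evaluated on its own training set always finds each point itself at distance 0. It therefore reaches zero empirical risk even on purely random labels. The sample size, dimension, and the helper name `nn_predict` are illustrative assumptions.

```python
import numpy as np


def nn_predict(X_train, y_train, X):
    """1-nearest-neighbour prediction with squared Euclidean distance."""
    d2 = ((X[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=-1)
    return y_train[np.argmin(d2, axis=1)]


rng = np.random.default_rng(4)
X = rng.normal(size=(200, 5))
y = rng.choice([-1, 1], size=200)  # arbitrary (random) labels
emp_risk = np.mean(nn_predict(X, y, X) != y)
```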

47

Structural Risk Minimization

- Finding a learning machine with the minimum upper bound on the actual risk leads us to a method of choosing an optimal machine for a given task. This is the essential idea of structural risk minimization (SRM).
- Let $H_1 \subset H_2 \subset H_3 \subset \cdots$ be a sequence of nested subsets of hypotheses whose VC dimensions satisfy $d_1 < d_2 < d_3 < \cdots$. SRM then consists of finding the subset of functions which minimizes the upper bound on the actual risk. This can be done by training a series of machines, one for each subset, where for a given subset the goal of training is to minimize the empirical risk. One then takes the trained machine in the series whose sum of empirical risk and VC confidence is minimal.
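
The final selection step of SRM can be sketched as follows. Given the empirical risk reached in each nested subset and that subset's VC dimension, pick the machine that minimizes the right-hand side of (3). The numbers in the usage line are made up purely to exercise the function and do not come from any experiment. Natural logarithms and the name `srm_select` are assumptions.

```python
import math


def vc_confidence(d, l, eta=0.05):
    return math.sqrt((d * (math.log(2 * l / d) + 1) - math.log(eta / 4)) / l)


def srm_select(emp_risks, vc_dims, l, eta=0.05):
    """Index of the machine minimising R_emp + VC confidence, plus all bounds."""
    bounds = [r + vc_confidence(d, l, eta) for r, d in zip(emp_risks, vc_dims)]
    best = min(range(len(bounds)), key=bounds.__getitem__)
    return best, bounds


# Hypothetical empirical risks for H_1 subset H_2 subset H_3 subset H_4 (illustrative only).
best, bounds = srm_select([0.30, 0.10, 0.05, 0.00], [10, 50, 500, 5000], l=10_000)
```

With these inputs, the richest class fits the training data perfectly, yet its VC confidence is above 1. The second class gives the smallest bound.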