Class Outline - PowerPoint PPT Presentation

1

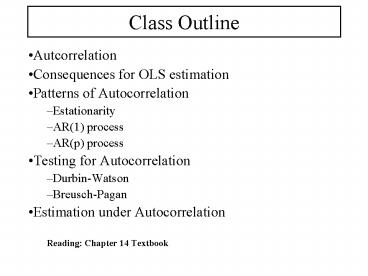

Class Outline

- Autocorrelation

- Consequences for OLS estimation

- Patterns of Autocorrelation

- Stationarity

- AR(1) process

- AR(p) process

- Testing for Autocorrelation

- Durbin-Watson

- Breusch-Pagan

- Estimation under Autocorrelation

- Reading: Chapter 14 of the textbook

2

Autocorrelation

- Consider the $K$-variable linear model $y_t = \beta_1 + \beta_2 x_{2t} + \dots + \beta_K x_{Kt} + u_t$, $t = 1, \dots, n$

- The assumptions of this model are

- $E(u_t) = 0$, $t = 1, \dots, n$

- $Var(u_t) = E[(u_t - E(u_t))^2] = E(u_t^2) = \sigma^2$, $t = 1, \dots, n$ (the variance of the error term is constant for all observations: homoscedasticity)

- $Cov(u_t, u_s) = 0$ $\forall\, t \neq s$ (no serial correlation)

- The explanatory variables are non-stochastic and no exact linear relationship exists between them

3

Autocorrelation

- One of the assumptions is no serial correlation

- When each observation corresponds to a different period, we say that we have time series data

- Time provides a natural way to sort observations

- In time series, the no-serial-correlation assumption is called the no-autocorrelation assumption

- It means that the error term of a particular period is not linearly related to the error term of any other period, past or future

4

Autocorrelation

- When we have autocorrelation, $Cov(u_t, u_s) \neq 0$ for some $t \neq s$

5

Autocorrelation

6

Why Does Autocorrelation Occur?

- Inertia

- Specification Bias

- Excluded variables

- Incorrect functional form

- Cobweb Phenomenon

- Lags

- Manipulation of Data

- Data Transformation

- Nonstationarity

7

Consequences for OLS estimation

- What happens to our model if we ignore autocorrelation?

- The least squares estimator is still unbiased, but it is no longer best (it no longer has minimum variance among linear unbiased estimators)

- The usual formulas for the standard errors are no longer correct

8

Consequences for OLS estimation

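- Least squares estimator, $\hat\beta_{OLS} = (X'X)^{-1}X'y$

- Then, $\hat\beta_{OLS} = \beta + (X'X)^{-1}X'u$

- $\hat\beta_{OLS}$ is still a linear estimator

- $\hat\beta_{OLS}$ is still unbiased (when we proved unbiasedness we did not use the assumptions of constant variance or no serial correlation)

- $\hat\beta_{OLS}$ is no longer the BLUE of $\beta$

- Now, $S^2(X'X)^{-1}$ is no longer an unbiased estimator of $V(\hat\beta_{OLS}) = (X'X)^{-1}(X'\Omega X)(X'X)^{-1}$, where $\Omega = E(uu')$ is the covariance matrix of the errors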
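
The sandwich form of the variance follows in a few lines from the expression for the estimator. The derivation below is a sketch that treats $X$ as non-stochastic and writes $\Omega = E(uu')$ for the covariance matrix of the errors (this symbol is a notational assumption of the sketch).

```latex
\begin{aligned}
\hat\beta_{OLS} - \beta &= (X'X)^{-1}X'u \\
E(\hat\beta_{OLS}) - \beta &= (X'X)^{-1}X'E(u) = 0 \\
V(\hat\beta_{OLS}) &= E\big[(\hat\beta_{OLS} - \beta)(\hat\beta_{OLS} - \beta)'\big] \\
 &= (X'X)^{-1}X'\,E(uu')\,X(X'X)^{-1} \\
 &= (X'X)^{-1}(X'\Omega X)(X'X)^{-1}
\end{aligned}
```

Only when $\Omega = \sigma^2 I$ does the last line collapse to $\sigma^2(X'X)^{-1}$, which is the quantity the usual standard error formula estimates.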

9

Patterns of Autocorrelation

- What is the structure of the errors?

- Consider a time series process with $T$ ordered observations

- An important characteristic of this process is stationarity. The process $u_t$ is stationary if and only if the following hold

- $E(u_t) = \mu < \infty$ $\forall\, t$

- $Cov(u_t, u_{t-j}) = \gamma_j < \infty$ $\forall\, t, j$

10

Patterns of Autocorrelation

- Property one requires the mean to exist and to be constant across time

- The second property requires the covariance to exist and to depend only on the distance between observations and not on the period in which they are measured

- (For example, stationarity requires the covariance between consumption in 1981 and 1983 to be the same as the covariance between 1996 and 1998.)

- The second requirement implies that the variances are constant ($V(u_t) = Cov(u_t, u_t)$, which is a constant)

11

Patterns of Autocorrelation

- First-order autoregressive process, AR(1)

- We say that $u_t$ follows a zero-mean AR(1) if

- $u_t = \rho u_{t-1} + \varepsilon_t$

- where $\varepsilon_t$ is a white noise process. It can be shown that the AR(1) process is stationary if and only if $|\rho| < 1$. We will assume that this stationarity condition holds
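
A small simulation can make the stationarity condition concrete. For a stationary zero-mean AR(1) with white noise variance $\sigma_\varepsilon^2$, the variance is $\sigma_\varepsilon^2/(1-\rho^2)$ and the autocorrelation at lag $j$ is $\rho^j$. The sketch below checks both on simulated data. The function names, the seed, the burn-in length and the choice of standard normal noise are assumptions made for illustration.

```python
import random

def simulate_ar1(rho, n, seed=0, burn_in=200):
    """Simulate u_t = rho * u_{t-1} + eps_t with standard normal white noise."""
    rng = random.Random(seed)
    u, series = 0.0, []
    for t in range(n + burn_in):
        u = rho * u + rng.gauss(0.0, 1.0)
        if t >= burn_in:  # drop start-up values so the series is close to stationary
            series.append(u)
    return series

def sample_autocovariance(u, j):
    """Sample autocovariance at lag j (divides by the full sample size)."""
    n = len(u)
    mean = sum(u) / n
    return sum((u[t] - mean) * (u[t - j] - mean) for t in range(j, n)) / n

rho = 0.7
u = simulate_ar1(rho, n=20000)
gamma0 = sample_autocovariance(u, 0)
for j in range(4):
    r_j = sample_autocovariance(u, j) / gamma0
    print(f"lag {j}: sample autocorrelation {r_j:.3f}, theory {rho ** j:.3f}")
print(f"sample variance {gamma0:.3f}, theory {1 / (1 - rho ** 2):.3f}")
```

The autocovariances depend only on the lag $j$, not on the period $t$, which is exactly what the two stationarity properties require.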

12

Patterns of Autocorrelation

- AR(p) process

- A natural generalization is the AR(p) process,

- $u_t = \rho_1 u_{t-1} + \rho_2 u_{t-2} + \dots + \rho_p u_{t-p} + \varepsilon_t$

13

Testing for Autocorrelation

- There are two main tests for Autocorrelation

- Durbin-Watson test

- Breusch-Pagan LM test

14

Durbin Watson Test

- Consider the simple linear model with AR(1) autocorrelation, $y_t = \beta_1 + \beta_2 x_t + u_t$ with $u_t = \rho u_{t-1} + \varepsilon_t$

- where $\varepsilon_t$ is a normally distributed white noise process

15

Durbin Watson Test

- The test is

- $H_0: \rho = 0$

- $H_1: \rho \neq 0$

- The test would be easy if we knew the error term $u_t$.

- We would simply regress $u_t$ on $u_{t-1}$ and test the null hypothesis. But in practice we do not know the values of the $u_t$.

16

Durbin Watson Test

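- Instead we will use the residuals $\hat u_t$ from the OLS model, estimated ignoring the problem of autocorrelation.

- However, things are not that easy, since the Durbin-Watson test is based on the following statistic, $DW = \dfrac{\sum_{t=2}^{T} (\hat u_t - \hat u_{t-1})^2}{\sum_{t=1}^{T} \hat u_t^2}$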

17

Durbin Watson Test

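- To get some intuition for the DW test, let's expand the square in the numerator, $DW = \dfrac{\sum_{t=2}^{T} \hat u_t^2}{\sum_{t=1}^{T} \hat u_t^2} + \dfrac{\sum_{t=2}^{T} \hat u_{t-1}^2}{\sum_{t=1}^{T} \hat u_t^2} - 2\,\dfrac{\sum_{t=2}^{T} \hat u_t \hat u_{t-1}}{\sum_{t=1}^{T} \hat u_t^2}$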

18

Durbin Watson Test

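- Assume that the number of observations is large, then

- The first term should be close to one

- The second term should be close to one also

- In the case of the third term, recall that regressing $u_t$ on $u_{t-1}$ would give $\hat\rho = \sum u_t u_{t-1} / \sum u_{t-1}^2$

- so the ratio in the third term is an estimate of $\rho$, having replaced $u_t$ by the OLS residual $\hat u_t$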

19

Durbin Watson Test

- Then, the following approximation for the Durbin-Watson statistic holds,

- $DW \approx 1 + 1 - 2\hat\rho = 2(1 - \hat\rho)$

- Then, under the null hypothesis $H_0: \rho = 0$, DW should be close to 2.

- Under the alternative hypothesis of positive autocorrelation, DW should be lower than 2, approaching zero as $\rho$ approaches 1.
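
The approximation can be checked numerically. The sketch below computes the DW statistic, the sum of squared first differences of the residuals divided by the sum of squared residuals, and compares it with $2(1-\hat\rho)$, where $\hat\rho$ is the ratio $\sum \hat u_t \hat u_{t-1} / \sum \hat u_t^2$. The function names are illustrative, and a simulated AR(1) series with $\rho = 0.5$ stands in for OLS residuals, which is an assumption made to keep the example short.

```python
import random

def durbin_watson(resid):
    """DW = sum_{t=2}^T (e_t - e_{t-1})^2 / sum_{t=1}^T e_t^2."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e * e for e in resid)
    return num / den

def rho_hat(resid):
    """Ratio from the third term: sum e_t e_{t-1} / sum e_t^2."""
    num = sum(resid[t] * resid[t - 1] for t in range(1, len(resid)))
    den = sum(e * e for e in resid)
    return num / den

# Hypothetical residuals: an AR(1) series with rho = 0.5 stands in for OLS residuals.
rng = random.Random(42)
e, resid = 0.0, []
for _ in range(5000):
    e = 0.5 * e + rng.gauss(0.0, 1.0)
    resid.append(e)

dw = durbin_watson(resid)
approx = 2 * (1 - rho_hat(resid))
print(f"DW = {dw:.4f}, 2(1 - rho_hat) = {approx:.4f}")
```

The two numbers differ only by the end terms $(\hat u_1^2 + \hat u_T^2)/\sum \hat u_t^2$, which vanish as the sample grows. In short samples the gap can be noticeable.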

20

Durbin Watson Test

- If we followed the procedure of other tests, we would find a critical value $d_c$, compute DW and decide whether to accept or reject the null hypothesis.

- However, because the distribution of DW depends on the values of $X$, and since $X$ varies case by case, it is impossible to tabulate the distribution of DW for every possible problem.

21

Durbin Watson Test

- Luckily, Durbin and Watson found that the critical value $d_c$ is bounded by two values, $d_L$ and $d_U$, and these values do not depend on the values of $X$ (only on the sample size and the number of regressors).

- $d_L < d_c < d_U$

- Then, once we have calculated DW, we apply the following rule

- $DW < d_L$: then $DW < d_c$. Reject $H_0$ and accept $H_1: \rho > 0$

- $DW > d_U$: then $DW > d_c$. Accept $H_0$

- $d_L < DW < d_U$: the test is inconclusive
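
The bounds rule is easy to express as a small function. This is a minimal sketch: the function name and the bounds in the example are placeholders, and real values of the lower and upper bounds have to be taken from a published Durbin-Watson table for the sample size and number of regressors at hand.

```python
def dw_decision(dw, d_lower, d_upper):
    """Bounds test of H0: rho = 0 against H1: rho > 0."""
    if not d_lower < d_upper:
        raise ValueError("d_lower must be smaller than d_upper")
    if dw < d_lower:
        return "reject H0"
    if dw > d_upper:
        return "do not reject H0"
    return "inconclusive"

# Placeholder bounds for illustration only; take real values from a DW table.
D_LOWER, D_UPPER = 1.50, 1.59
for dw in (0.95, 1.55, 2.03):
    print(f"DW = {dw:.2f}: {dw_decision(dw, D_LOWER, D_UPPER)}")
```

A value exactly equal to one of the bounds is treated as inconclusive here, which is a design choice of the sketch rather than part of the rule.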

22

Durbin Watson Test

23

Durbin Watson Test

- Comments about the DW test

- This is a finite-sample test, since the distribution of the DW statistic can be derived from the normality assumption on $\varepsilon$ for every sample size

- The model must include an intercept

- It is crucial that $X$ is non-stochastic

- This test is designed for the AR(1) process; it is not informative about more general processes like AR(p)

24

The Breusch-Pagan LM Test

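- Consider the following model, $y_t = \beta_1 + \beta_2 x_t + u_t$ with $u_t = \rho_1 u_{t-1} + \rho_2 u_{t-2} + \dots + \rho_p u_{t-p} + \varepsilon_t$

- $H_0: \rho_1 = \rho_2 = \dots = \rho_p = 0$

- $H_1: \rho_1 \neq 0$ or $\rho_2 \neq 0$ or ... or $\rho_p \neq 0$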

25

The Breusch-Pagan LM Test

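- Breusch and Pagan propose the following procedure

- 1. Estimate the model by OLS and save the residuals $\hat u_t$

- 2. Regress $\hat u_t$ on the explanatory variables and the lagged residuals $\hat u_{t-1}, \dots, \hat u_{t-p}$

- 3. Compute the test statistic $(T - p)R^2$, where $R^2$ is taken from the regression in step 2

- Under the null hypothesis this statistic has a $\chi^2$ distribution with $p$ degrees of freedom.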
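
The three steps can be sketched in plain Python for a model with a constant and one regressor. The auxiliary regression here uses the constant, the regressor and $p$ lagged residuals, which is the usual form of this LM test (many packages list it under the name Breusch-Godfrey). The helper names, the simulated data and the use of a hand-written linear solver are assumptions made to keep the example self-contained.

```python
import random

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def ols(rows, y):
    """Regress y on the columns of rows; return (coefficients, residuals, R^2)."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    resid = [yi - sum(b * v for b, v in zip(beta, r)) for r, yi in zip(rows, y)]
    mean_y = sum(y) / len(y)
    tss = sum((yi - mean_y) ** 2 for yi in y)
    rss = sum(e * e for e in resid)
    return beta, resid, 1.0 - rss / tss

def lm_autocorrelation_test(x, y, p):
    """Return (T - p) * R^2 from the auxiliary regression on p lagged residuals."""
    t = len(y)
    _, resid, _ = ols([[1.0, xi] for xi in x], y)            # step 1
    aux_rows = [[1.0, x[i]] + [resid[i - j] for j in range(1, p + 1)]
                for i in range(p, t)]
    _, _, r2 = ols(aux_rows, resid[p:])                      # step 2
    return (t - p) * r2                                      # step 3

def simulate(rho, n, seed):
    """Hypothetical data: y = 1 + 2 x + u, with u following an AR(1)."""
    rng = random.Random(seed)
    x, y, u = [], [], 0.0
    for _ in range(n):
        u = rho * u + rng.gauss(0.0, 1.0)
        xi = rng.gauss(0.0, 1.0)
        x.append(xi)
        y.append(1.0 + 2.0 * xi + u)
    return x, y

CHI2_95_1DF = 3.841  # 5% critical value of a chi-square with 1 degree of freedom
for rho in (0.0, 0.6):
    x, y = simulate(rho, n=500, seed=1)
    stat = lm_autocorrelation_test(x, y, p=1)
    print(f"rho = {rho}: LM = {stat:.2f}, reject H0 at 5%: {stat > CHI2_95_1DF}")
```

With autocorrelated errors the statistic is far above the critical value. With independent errors it behaves like a draw from a $\chi^2$ with one degree of freedom, so it will exceed the critical value in roughly 5% of samples.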

26

The Breusch-Pagan LM Test

- Characteristics of this test

- It is an asymptotic test (it requires a large number of observations)

- We do not need non-stochastic $X$

- It covers different AR(p) processes, including AR(1)

27

Estimation under Autocorrelation

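- Consider the simple model, $y_t = \beta_1 + \beta_2 x_t + u_t$ with $u_t = \rho u_{t-1} + \varepsilon_t$

- Since the model is valid for any period, the following statement holds, $y_{t-1} = \beta_1 + \beta_2 x_{t-1} + u_{t-1}$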

28

Estimation under Autocorrelation

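- Now, multiply both sides of the last equation by $\rho$, $\rho y_{t-1} = \rho\beta_1 + \rho\beta_2 x_{t-1} + \rho u_{t-1}$

- subtract one equation from the other and we will have that $y_t^* = \beta_1^* + \beta_2 x_t^* + \varepsilon_t$

- where $y_t^* = y_t - \rho y_{t-1}$, $x_t^* = x_t - \rho x_{t-1}$ and $\beta_1^* = \beta_1(1 - \rho)$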

29

Estimation under Autocorrelation

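- If $\rho$ is known, this procedure is straightforward

- If we do not know $\rho$, we can estimate it by some technique. One option uses the approximation $DW \approx 2(1 - \hat\rho)$, which gives $\hat\rho \approx 1 - DW/2$

- First, estimate $\rho$

- Second, transform the variables and obtain $y_t^* = y_t - \hat\rho y_{t-1}$ and $x_t^* = x_t - \hat\rho x_{t-1}$

- Third, run OLS on the transformed variables, knowing that the estimators are now BLUE

- The estimator of $\beta_1$ can be easily recovered, since the intercept of the transformed model is $\beta_1(1 - \rho)$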
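
The three steps can be sketched for the simple model with one regressor. The code estimates $\rho$ from the DW statistic of the OLS residuals, quasi-differences the data (dropping the first observation, as in a single pass of a Cochrane-Orcutt style procedure), and recovers the original intercept by dividing the transformed intercept by $1 - \hat\rho$. The function names, the true parameter values and the simulated data are assumptions chosen for illustration.

```python
import random

def simple_ols(x, y):
    """OLS of y on a constant and x; returns (intercept, slope, residuals)."""
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    return intercept, slope, resid

def durbin_watson(resid):
    """Sum of squared first differences over the sum of squared residuals."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    return num / sum(e * e for e in resid)

def feasible_gls_ar1(x, y):
    """Estimate rho, quasi-difference the data, and re-run OLS."""
    _, _, resid = simple_ols(x, y)
    rho = 1.0 - durbin_watson(resid) / 2.0                    # first step
    y_star = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
    x_star = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
    b1_star, b2, _ = simple_ols(x_star, y_star)               # second and third steps
    b1 = b1_star / (1.0 - rho)                                # recover the intercept
    return b1, b2, rho

# Hypothetical data: y = 2 + 3 x + u, with u_t = 0.7 u_{t-1} + eps_t.
rng = random.Random(7)
x, y, u = [], [], 0.0
for _ in range(5000):
    u = 0.7 * u + rng.gauss(0.0, 1.0)
    xi = rng.gauss(0.0, 1.0)
    x.append(xi)
    y.append(2.0 + 3.0 * xi + u)

b1, b2, rho = feasible_gls_ar1(x, y)
print(f"rho_hat = {rho:.3f}, beta1_hat = {b1:.3f}, beta2_hat = {b2:.3f}")
```

Because $\rho$ is estimated rather than known, the optimality of the transformed regression holds only approximately in finite samples. Repeating the steps until $\hat\rho$ stops changing is a common refinement.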